Originally appeared here:

Upcoming OnePlus Watch 3 might have a rotating crown

Like Clint Eastwood’s thriller Juror #2? Then watch these three movies now

Tom Holland says he doesn’t know anything about the Christopher Nolan movie he’s starring in

I tested Intel’s new XeSS 2 to see if it really holds up against DLSS 3

James Gunn says Superman trailer is most viewed in history of DC and Warner Bros.

We may be a bit technology-obsessed here, but the Engadget team does occasionally get around to low-tech activities, like reading. Well, some of us read on ereaders or our smartphones, but you get the point — books are great, and we read some exceptional ones this year that each deserve a shoutout. These are some of the best books we read in 2024.

This article originally appeared on Engadget at https://www.engadget.com/entertainment/our-favorite-books-we-read-in-2024-151514842.html?src=rss

Our favorite books we read in 2024

A federal judge in California has agreed with WhatsApp that the NSO Group, the Israeli cybersurveillance firm behind the Pegasus spyware, hacked into its systems by sending malware through its servers to thousands of its users’ phones. WhatsApp and its parent company, Meta, sued the NSO Group back in 2019, accusing it of spreading malware to 1,400 mobile devices across 20 countries for surveillance purposes. At the time, they revealed that some of the targeted phones belonged to journalists, human rights activists, prominent female leaders and political dissidents. The Washington Post reports that District Judge Phyllis Hamilton has granted WhatsApp’s motion for summary judgment against NSO and has ruled that it violated the US Computer Fraud and Abuse Act (CFAA).

The NSO Group disputed the allegations in the “strongest possible terms” when the lawsuit was filed. It denied that it had a hand in the attacks and told Engadget at the time that its sole purpose was to “provide technology to licensed government intelligence and law enforcement agencies to help them fight terrorism and serious crime.” The company argued that it should not be held liable because it merely sells its services to government agencies, which are the ones that choose the targets. In 2020, Meta escalated its lawsuit and accused the firm of using US-based servers to stage its Pegasus spyware attacks.

Judge Hamilton has ruled that the NSO Group violated the CFAA because the firm appears to fully acknowledge that the modified WhatsApp program its clients use to target users sends messages through legitimate WhatsApp servers. Those messages then allow the Pegasus spyware to be installed on users’ devices, and the targets don’t even have to do anything, such as pick up the phone to take a call or click a link, to be infected. The court has also found that the plaintiff’s motion for sanctions must be granted on account of the NSO Group “repeatedly [failing] to produce relevant discovery,” most significantly the Pegasus source code.

WhatsApp spokesperson Carl Woog told The Post that the company believes this is the first court decision agreeing that a major spyware vendor had broken US hacking laws. “We’re grateful for today’s decision,” Woog told the publication. “NSO can no longer avoid accountability for their unlawful attacks on WhatsApp, journalists, human rights activists and civil society. With this ruling, spyware companies should be on notice that their illegal actions will not be tolerated.” In her decision, Judge Hamilton wrote that her order resolves all issues regarding the NSO Group’s liability and that a trial will proceed only to determine how much the company should pay in damages.

This article originally appeared on Engadget at https://www.engadget.com/cybersecurity/judge-finds-spyware-maker-nso-group-liable-for-attacks-on-whatsapp-users-140054522.html?src=rss

Judge finds spyware-maker NSO Group liable for attacks on WhatsApp users

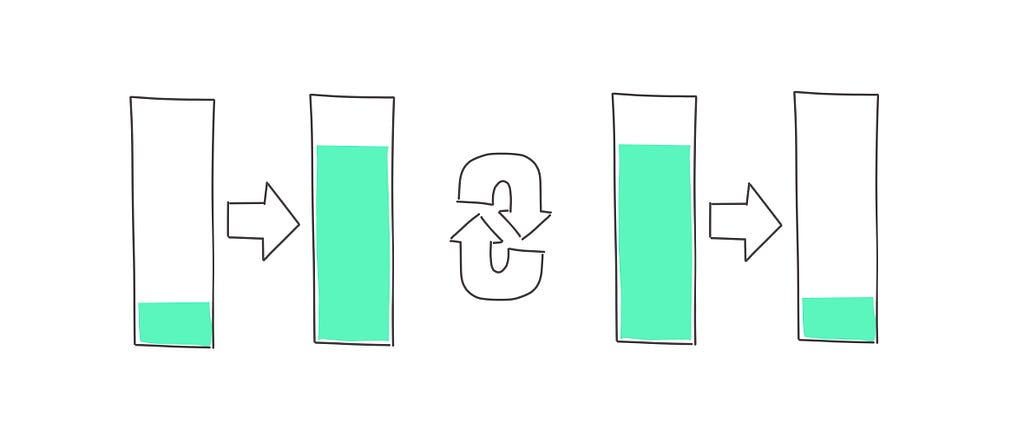

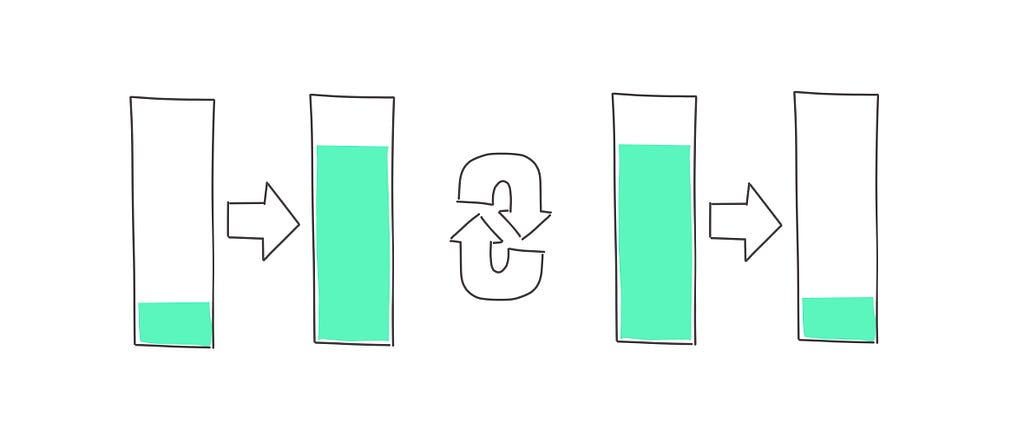

The Pareto principle says that 20% of the effort on a problem yields 80% of the value. For generative AI, the opposite seems to be true.

About the author: Zsombor Varnagy-Toth is a Senior UX Researcher at SAP with a background in machine learning and cognitive science who works with qualitative and quantitative data for product development.

I first realized this as I studied professionals writing marketing copy using LLMs. I observed that when these professionals start using LLMs, their enthusiasm quickly fades away, and most return to their old way of manually writing content.

This was an utterly surprising research finding because these professionals acknowledged that the AI-generated content was not bad. In fact, they found it unexpectedly good, say 80% good. But if that’s so, why do they still fall back on creating the content manually? Why not take the 80% good AI-generated content and just add that last 20% manually?

Here is the intuitive explanation:

If you have a mediocre poem, you can’t just turn it into a great poem by replacing a few words here and there.

Or say you have a house that is 80% well built. It’s more or less OK, but the walls are not straight and the foundations are weak. You can’t fix that with a bit of additional work. You have to tear it down and rebuild it from the ground up.

We investigated this phenomenon further and identified its root cause. For these marketing professionals, if a piece of copy is only 80% good, there is no individual piece of the text they could swap out to make it 100%. For that, the whole copy needs to be reworked, paragraph by paragraph, sentence by sentence. Thus, going from the AI’s 80% to 100% takes almost as much effort as going from 0% to 100% manually.

This has an interesting implication. For such tasks, the value of LLMs is “all or nothing”: the LLM either does an excellent job or it’s useless. There is nothing in between.

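We looked at a few different types of user tasks and found that this reverse Pareto principle affects a specific class of tasks: large tasks that are hard to decompose into independently fixable parts and that must meet a professional-grade (100%) quality bar.

If any one of these conditions is not met, the reverse Pareto effect doesn’t apply.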

Writing code, for example, is more composable than writing prose. Code has its individual parts: commands and functions that can be singled out and fixed independently. If AI takes the code to 80%, it really only takes about 20% extra effort to get to the 100% result.
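A toy effort model makes the contrast between composable and non-composable work concrete. It is a sketch under loudly stated assumptions, not something measured in the study: a draft is split into equally costly parts, some of which fall short; in a composable artifact such as code you rework only the defective parts, while in a non-composable one such as long marketing copy any defect forces a rewrite of the whole piece. The function `effort_to_finish` and the numbers in the example are hypothetical.

```python
def effort_to_finish(num_parts: int, defective_parts: int, composable: bool) -> float:
    """Return the fraction of from-scratch effort needed to reach a 100% result.

    Toy model (an assumption, not data from the study): every part costs the
    same effort to write. A composable artifact lets you rework only the
    defective parts; a non-composable one must be rewritten in full as soon
    as any part is defective.
    """
    if not 0 <= defective_parts <= num_parts:
        raise ValueError("defective_parts must be between 0 and num_parts")
    if defective_parts == 0:
        return 0.0  # already at 100%, nothing left to do
    if composable:
        return defective_parts / num_parts  # fix only the broken parts
    return 1.0  # any defect means reworking the whole piece


# A hypothetical AI draft that is "80% good": 2 of 10 parts fall short.
print(effort_to_finish(10, 2, composable=True))   # 0.2 (code-like task)
print(effort_to_finish(10, 2, composable=False))  # 1.0 (prose-like task)
```

The model deliberately ignores that a rewrite can reuse ideas from the draft, which is why the finding above is “almost as much effort” rather than exactly the same.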

As for the task size, LLMs have great utility in writing short copy, such as social posts. The LLM-generated short content is still “all or nothing” — it’s either good or worthless. However, because of the brevity of these pieces of copy, one can generate ten at a time and spot the best one in seconds. In other words, users don’t need to tackle the 80% to 100% problem — they just pick the variant that came out 100% in the first place.
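The “generate ten and pick the best” strategy is a form of best-of-n sampling. If we assume, purely for illustration, that each short variant independently comes out publishable with probability p, then the chance that at least one of n variants does is 1 - (1 - p)^n. The sketch below computes that and picks a winner from a list of drafts; the value of `p`, the drafts, and the `score` function (a stand-in for the human reviewer’s judgment) are hypothetical, not figures from the study.

```python
from typing import Callable, Sequence


def chance_of_a_keeper(p: float, n: int) -> float:
    """Probability that at least one of n independent variants is usable."""
    return 1 - (1 - p) ** n


def pick_best(variants: Sequence[str], score: Callable[[str], float]) -> str:
    """Return the highest-scoring variant (the 'spot the best one' step)."""
    return max(variants, key=score)


# Hypothetical numbers: if only 1 in 5 short posts is good enough on its own,
# ten variants make it very likely that at least one of them is.
print(round(chance_of_a_keeper(0.2, 1), 3))   # 0.2
print(round(chance_of_a_keeper(0.2, 10), 3))  # 0.893

# Hypothetical reviewer ratings for three generated drafts.
ratings = {"Draft A": 3, "Draft B": 5, "Draft C": 4}
print(pick_best(list(ratings), score=lambda v: ratings[v]))  # Draft B
```

The independence assumption is generous, since variants from the same prompt tend to resemble each other, but it shows why brevity matters: cheap-to-review pieces let the user sample their way past the 80% problem instead of editing through it.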

As for quality, there are use cases where professional-grade quality is not a requirement. For example, a content factory may be satisfied with articles that are 80% good.

If you are building an LLM-powered product that deals with large tasks that are hard to decompose, and the user is expected to deliver 100% quality, you must build something around the LLM that turns its 80% performance into 100%. It can be a sophisticated prompting approach on the backend, an additional fine-tuned layer, or a cognitive architecture of various tools and agents that work together to iron out the output. Whatever this wrapper does, that’s what delivers 80% of the customer value. That’s where the treasure is buried; the LLM only contributes 20%.
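One shape such a wrapper can take is a generate, critique, revise loop. The sketch below is hypothetical throughout: `generate`, `critique`, and `revise` are placeholder callables you would back with your own prompts, models, or tools, and no specific LLM API is assumed. It only shows the control flow: keep revising the draft until the critic reports no remaining issues or a round budget runs out.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class WrapperResult:
    draft: str
    rounds: int  # number of revision rounds actually performed
    open_issues: List[str] = field(default_factory=list)


def refine(
    brief: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], List[str]],
    revise: Callable[[str, List[str]], str],
    max_rounds: int = 3,
) -> WrapperResult:
    """Generate a first draft, then alternate critique and revision.

    Stops when the critic finds no issues or max_rounds revisions are used.
    """
    draft = generate(brief)
    issues = critique(brief, draft)
    rounds = 0
    while issues and rounds < max_rounds:
        draft = revise(draft, issues)
        issues = critique(brief, draft)
        rounds += 1
    return WrapperResult(draft, rounds, issues)


# Toy stand-ins for illustration only; a real wrapper would call models or tools here.
def toy_generate(brief: str) -> str:
    return f"Meet {brief}."


def toy_critique(brief: str, draft: str) -> List[str]:
    return [] if draft.endswith("Try it today.") else ["missing call to action"]


def toy_revise(draft: str, issues: List[str]) -> str:
    return draft + " Try it today." if "missing call to action" in issues else draft


result = refine("the new app", toy_generate, toy_critique, toy_revise)
print(result.draft)   # Meet the new app. Try it today.
print(result.rounds)  # 1
```

The round budget is the design choice worth noting: without `max_rounds`, a critic that never comes back empty would keep the loop running forever, so the sketch returns the latest draft together with the issues it could not resolve.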

This conclusion is in line with the assertion by Sequoia Capital’s Sonya Huang and Pat Grady that the next wave of value in the AI space will be created by “last-mile application providers”: the wrapper companies that figure out how to cover that last mile, which creates 80% of the value.

The 80/20 problem of generative AI — a UX research insight was originally published in Towards Data Science on Medium.

The 80/20 problem of generative AI — a UX research insight

This Capcom bundle is the best deal of the Steam Winter Sale

The greatest disaster movie ever made just turned 50. Here’s why it still entertains