A surprisingly effective means to identify outliers in numeric data

PCA (principle component analysis) is commonly used in data science, generally for dimensionality reduction (and often for visualization), but it is actually also very useful for outlier detection, which I’ll describe in this article.

This articles continues my series in outlier detection, which also includes articles on FPOF, Counts Outlier Detector, Distance Metric Learning, Shared Nearest Neighbors, and Doping. This also includes another excerpt from my book Outlier Detection in Python.

The idea behind PCA is that most datasets have much more variance in some columns than others, and also have correlations between the features. An implication of this is: to represent the data, it’s often not necessary to use as many features as we have; we can often approximate the data quite well using fewer features — sometimes far fewer. For example, with a table of numeric data with, say, 100 features, we may be able to represent the data reasonably well using perhaps 30 or 40 features, possibly less, and possibly much less.

To allow for this, PCA transforms the data into a different coordinate system, where the dimensions are known as components.

Given the issues we often face with outlier detection due to the curse of dimensionality, working with fewer features can be very beneficial. As described in Shared Nearest Neighbors and Distance Metric Learning for Outlier Detection, working with many features can make outlier detection unreliable; among the issues with high-dimensional data is that it leads to unreliable distance calculations between points (which many outlier detectors rely on). PCA can mitigate these effects.

As well, and surprisingly, using PCA can often create a situation where outliers are actually easier to detect. The PCA transformations often reshape the data so that any unusual points are are more easily identified.

An example is shown here.

import numpy as np

import pandas as pd

from sklearn.decomposition import PCA

# Create two arrays of 100 random values, with high correlation between them

x_data = np.random.random(100)

y_data = np.random.random(100) / 10.0

# Create a dataframe with this data plus two additional points

data = pd.DataFrame({'A': x_data, 'B': x_data + y_data})

data= pd.concat([data,

pd.DataFrame([[1.8, 1.8], [0.5, 0.1]], columns=['A', 'B'])])

# Use PCA to transform the data to another 2D space

pca = PCA(n_components=2)

pca.fit(data)

print(pca.explained_variance_ratio_)

# Create a dataframe with the PCA-transformed data

new_data = pd.DataFrame(pca.transform(data), columns=['0', '1'])

This first creates the original data, as shown in the left pane. It then transforms it using PCA. Once this is done, we have the data in the new space, shown in the right pane.

Here I created a simple synthetic dataset, with the data highly correlated. There are two outliers, one following the general pattern, but extreme (Point A) and one with typical values in each dimension, but not following the general pattern (Point B).

We then use scikit-learn’s PCA class to transform the data. The output of this is placed in another pandas dataframe, which can then be plotted (as shown), or examined for outliers.

Looking at the original data, the data tends to appear along a diagonal. Drawing a line from the bottom-left to the top-right (the blue line in the plot), we can create a new, single dimension that represents the data very well. In fact, executing PCA, this will be the first component, with the line orthogonal to this (the orange line, also shown in the left pane) as the second component, which represents the remaining variance.

With more realistic data, we will not have such strong linear relationships, but we do almost always have some associations between the features — it’s rare for the features to be completely independent. And given this, PCA can usually be an effective way to reduce the dimensionality of a dataset. That is, while it’s usually necessary to use all components to completely describe each item, using only a fraction of the components can often describe every record (or almost every record) sufficiently well.

The right pane shows the data in the new space created by the PCA transformation, with the first component (which captures most of the variance) on the x-axis and the second (which captures the remaining variance) on the y-axis. In the case of 2D data, a PCA transformation will simply rotate and stretch the data. The transformation is harder to visualize in higher dimensions, but works similarly.

Printing the explained variance (the code above included a print statement to display this) indicates component 0 contains 0.99 of the variance and component 1 contains 0.01, which matches the plot well.

Often the components would be examined one at a time (for example, as histograms), but in this example, we use a scatter plot, which saves space as we can view two components at a time. The outliers stand out as extreme values in the two components.

Looking a little closer at the details of how PCA works, it first finds the line through the data that best describes the data. This is the line where the squared distances to the line, for all points, is minimized. This is, then, the first component. The process then finds a line orthogonal to this that best captures the remaining variance. This dataset contains only two dimensions, and so there is only one choice for direction of the second component, at right angles with the first component.

Where there are more dimensions in the original data, this process will continue some number of extra steps: the process continues until all variance in the data is captured, which will create as many components as the original data had dimensions. Given this, PCA has three properties:

- All components are uncorrelated.

- The first component has the most variation, then the second, and so on.

- The total variance of the components equals the variance in the original features.

PCA also has some nice properties that lend themselves well to outlier detection. As we can see in the figure, the outliers become separated well within the components, which allows simple tests to identify them.

We can also see another interesting result of PCA transformation: points that are in keeping with the general pattern tend to fall along the early components, but can be extreme in these (such as Point A), while points that do not follow the general patterns of the data tend to not fall along the main components, and will be extreme values in the later components (such as Point B).

There are two common ways to identify outliers using PCA:

- We can transform the data using PCA and then use a set of tests (conveniently, these can generally be very simple tests), on each component to score each row. This is quite straightforward to code.

- We can look at the reconstruction error. In the figure, we can see that using only the first component describes the majority of the data quite well. The second component is necessary to fully describe all the data, but by simply projecting the data onto the first component, we can describe reasonably well where most data is located. The exception is point B; its position on the first component does not describe its full location well and there would be a large reconstruction error using only a single component for this point, though not for the other points. In general, the more components necessary to describe a point’s location well (or the higher the error given a fixed number of components), the stronger of an outlier a point is.

Another method is possible where we remove rows one at a time and identify which rows affect the final PCA calculations the most significantly. Although this can work well, it is often slow and not commonly used. I may cover this in future articles, but for this article will look at reconstruction error, and in the next article at running simple tests on the PCA components.

Assumptions behind PCA for outlier detection

PCA does assume there are correlations between the features. The data above is possible to transform such that the first component captures much more variance than the second because the data is correlated. PCA provides little value for outlier detection where the features have no associations, but, given most datasets have significant correlation, it is very often applicable. And given this, we can usually find a reasonably small number of components that capture the bulk of the variance in a dataset.

As with some other common techniques for outlier detection, including Elliptic Envelope methods, Gaussian mixture models, and Mahalanobis distance calculations, PCA works by creating a covariance matrix representing the general shape of the data, which is then used to transform the space. In fact, there is a strong correspondence between elliptic envelope methods, the Mahalanobis distance, and PCA.

The covariance matrix is a d x d matrix (where d is the number of features, or dimensions, in the data), that stores the covariance between each pair of features, with the variance of each feature stored on the main diagonal (that is, the covariance of each feature to itself). The covariance matrix, along with the data center, is a concise description of the data — that is, the variance of each feature and the covariances between the features are very often a very good description of the data.

A covariance matrix for a dataset with three features may look like:

Here the variance of the three features are shown on the main diagonal: 1.57, 2.33, and 6.98. We also have the covariance between each feature. For example, the covariance between the 1st & 2nd features is 1.50. The matrix is symmetrical across the main diagonal, as the covariance between the 1st and 2nd features is the same as between the 2nd & 1st features, and so on.

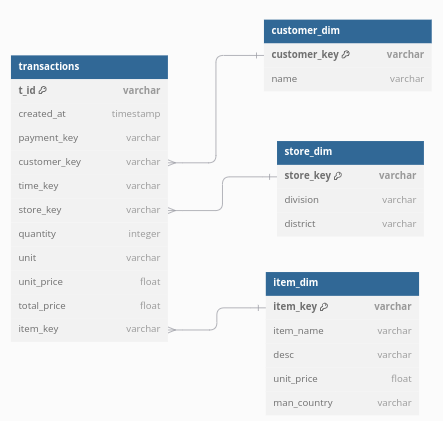

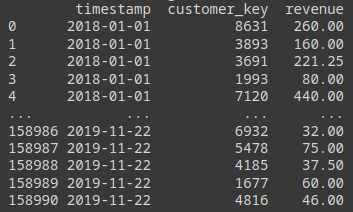

Scikit-learn (and other packages) provide tools that can calculate the covariance matrix for any given numeric dataset, but this is unnecessary to do directly using the techniques described in this and the next article. In this article, we look at tools provided by a popular package for outlier detection called PyOD (probably the most complete and well-used tool for outlier detection on tabular data available in Python today). These tools handle the PCA transformations, as well as the outlier detection, for us.

Limitations of PCA for outlier detection

One limitation of PCA is, it is sensitive to outliers. It’s based on minimizing squared distances of the points to the components, so it can be heavily affected by outliers (remote points can have very large squared distances). To address this, robust PCA is often used, where the extreme values in each dimension are removed before performing the transformation. The example below includes this.

Another limitation of PCA (as well as Mahalanobis distances and similar methods), is it can break down if the correlations are in only certain regions of the data, which is frequently true if the data is clustered. Where data is well-clustered, it may be necessary to cluster (or segment) the data first, and then perform PCA on each subset of the data.

PCA-based tests for outliers in PyOD

Now that we’ve gone over how PCA works and, at a high level, how it can be applied to outlier detection, we can look at the detectors provided by PyOD.

PyOD actually provides three classes based on PCA: PyODKernelPCA, PCA, and KPCA. We’ll look at each of these.

PyODKernelPCA

PyOD provides a class called PyODKernelPCA, which is simply a wrapper around scikit-learn’s KernelPCA class. Either may be more convenient in different circumstances. This is not an outlier detector in itself and provides only PCA transformation (and inverse transformation), similar to scikit-learn’s PCA class, which was used in the previous example.

The KernelPCA class, though, is different than the PCA class, in that KernelPCA allows for nonlinear transformations of the data and can better model some more complex relationships. Kernels work similarly in this context as with SVM models: they transform the space (in a very efficient manner) in a way that allows outliers to be separated more easily.

Scikit-learn provides several kernels. These are beyond the scope of this article, but can improve the PCA process where there are complex, nonlinear relationships between the features. If used, outlier detection works, otherwise, the same as with using the PCA class. That is, we can either directly run outlier detection tests on the transformed space, or measure the reconstruction error.

The former method, running tests on the transformed space is quite straightforward and effective. We look at this in more detail in the next article. The latter method, checking for reconstruction error, is a bit more difficult. It’s not unmanageable at all, but the two detectors provided by PyOD we look at next handle the heavy lifting for us.

The PCA detector

PyOD provides two PCA-based outlier detectors: the PCA class and KPCA. The latter, as with PyODKernelPCA, allows kernels to handle more complex data. PyOD recommends using the PCA class where the data contains linear relationships, and KPCA otherwise.

Both classes use the reconstruction error of the data, using the Euclidean distance of points to the hyperplane that’s created using the first k components. The idea, again, is that the first k components capture the main patterns of the data well, and any points not well modeled by these are outliers.

In the plot above, this would not capture Point A, but would capture Point B. If we set k to 1, we’d use only one component (the first component), and would measure the distance of every point from its actual location to its location on this component. Point B would have a large distance, and so can be flagged as an outlier.

As with PCA generally, it’s best to remove any obvious outliers before fitting the data. In the example below, we use another detector provided by PyOD called ECOD (Empirical Cumulative Distribution Functions) for this purpose. ECOD is a detector you may not be familiar with, but is a quite strong tool. In fact PyOD recommends, when looking at detectors for a project, to start with Isolation Forest and ECOD.

ECOD is beyond the scope of this article. It’s covered in Outlier Detection in Python, and PyOD also provides a link to the original journal paper. But, as a quick sketch: ECOD is based on empirical cumulative distributions, and is designed to find the extreme (very small and very large) values in columns of numeric values. It does not check for rare combinations of values, only extreme values. As such, it is not able to find all outliers, but it is quite fast, and quite capable of finding outliers of this type. In this case, we remove the top 1% of rows identified by ECOD before fitting a PCA detector.

In general when performing outlier detection (not just when using PCA), it’s useful to first clean the data, which in the context of outlier detection often refers to removing any strong outliers. This allows the outlier detector to be fit on more typical data, which allows it to better capture the strong patterns in the data (so that it is then better able to identify exceptions to these strong patterns). In this case, cleaning the data allows the PCA calculations to be performed on more typical data, so as to capture better the main distribution of the data.

Before executing, it’s necessary to install PyOD, which may be done with:

pip install pyod

The code here uses the speech dataset (Public license) from OpenML, which has 400 numeric features. Any numeric dataset, though, may be used (any categorical columns will need to be encoded). As well, generally, any numeric features will need to be scaled, to be on the same scale as each other (skipped for brevity here, as all features here use the same encoding).

import pandas as pd

from pyod.models.pca import PCA

from pyod.models.ecod import ECOD

from sklearn.datasets import fetch_openml

#A Collects the data

data = fetch_openml("speech", version=1, parser='auto')

df = pd.DataFrame(data.data, columns=data.feature_names)

scores_df = df.copy()

# Creates an ECOD detector to clean the data

clf = ECOD(contamination=0.01)

clf.fit(df)

scores_df['ECOD Scores'] = clf.predict(df)

# Creates a clean version of the data, removing the top

# outliers found by ECOD

clean_df = df[scores_df['ECOD Scores'] == 0]

# Fits a PCA detector to the clean data

clf = PCA(contamination=0.02)

clf.fit(clean_df)

# Predicts on the full data

pred = clf.predict(df)

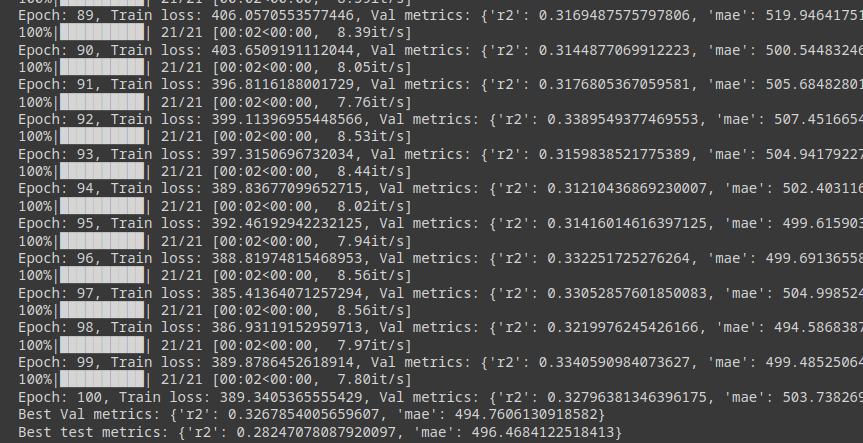

Running this, the pred variable will contain the outlier score for each record in the the data.

The KPCA detector

The KPCA detector works very much the same as the PCA detector, with the exception that a specified kernel is applied to the data. This can transform the data quite significantly. The two detectors can flag very different records, and, as both have low interpretability, it can be difficult to determine why. As is common with outlier detection, it may take some experimentation to determine which detector and parameters work best for your data. As both are strong detectors, it may also be useful to use both. Likely this can best be determined (along with the best parameters to use) using doping, as described in Doping: A Technique to Test Outlier Detectors.

To create a KPCA detector using a linear kernel, we use code such as:

det = KPCA(kernel='linear')

KPCA also supports polynomial, radial basis function, sigmoidal, and cosine kernels.

Conclusions

In this article we went over the ideas behind PCA and how it can aid outlier detection, particularly looking at standard outlier detection tests on PCA-transformed data and at reconstruction error. We also looked at two outlier detectors provided by PyOD for outlier detection based on PCA (both using reconstruction error), PCA and KPCA, and provided an example using the former.

PCA-based outlier detection can be very effective, but does suffer from low interpretability. The PCA and KPCA detectors produce outliers that are very difficult to understand.

In fact, even when using interpretable outlier detectors (such as Counts Outlier Detector, or tests based on z-score or interquartile range), on the PCA-transformed data (as we’ll look at in the next article), the outliers can be difficult to understand since the PCA transformation itself (and the components it generates) are nearly inscrutable. Unfortunately, this is a common theme in outlier detection. The other main tools used in outlier detection, including Isolation Forest, Local Outlier Factor (LOF), k Nearest Neighbors (KNN), and most others are also essentially black boxes (their algorithms are easily understandable — but the specific scores given to individual records can be difficult to understand).

In the 2d example above, when viewing the PCA-transformed space, it can be easy to see how Point A and Point B are outliers, but it is difficult to understand the two components that are the axes.

Where interpretability is necessary, it may be impossible to use PCA-based methods. Where this is not necessary, though, PCA-based methods can be extremely effective. And again, PCA has no lower interpretability than most outlier detectors; unfortunately, only a handful of outlier detectors provide a high level of interpretability.

In the next article, we will look further at performing tests on the PCA-transformed space. This includes simple univariate tests, as well as other standard outlier detectors, considering the time required (for PCA transformation, model fitting, and prediction), and the accuracy. Using PCA can very often improve outlier detection in terms of speed, memory usage, and accuracy.

All images are by the author

Using PCA for Outlier Detection was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Using PCA for Outlier Detection