A question-based extraction approach

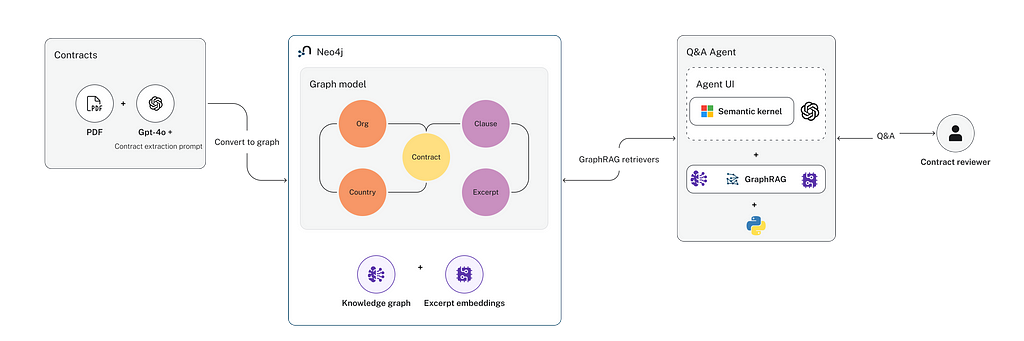

In this blog post, we introduce an approach that leverages a Graph Retrieval Augmented Generation (GraphRAG) method — to streamline the process of ingesting commercial contract data and building a Q&A Agent.

This approach diverges from traditional RAG (Retrieval-Augmented Generation) by emphasizing efficiency in data extraction, rather than breaking down and vectorizing entire documents indiscriminately, which is the predominant RAG approach.

In conventional RAG, every document is split into chunks and vectorized for retrieval, which can result in a large volume of unnecessary data being split, chunked and stored in vector indexes. Here, however, the focus is on extracting only the most relevant information from every contract for a specifc use case, Commercial Contract Review. The data is then structured into a knowledge graph, which organizes key entities and relationships, allowing for more precise graph data retrieval through Cypher queries and vector search.

By minimizing the amount of vectorized content and focusing on highly relevant knowledge extracted, this method enhances the accuracy and performance of the Q&A agent, making it suitable to handle complex and domain-specific questions.

The 4-stage approach includes: targeted information extraction (LLM + Prompt) to create a knowledge graph (LLM + Neo4J) and simple set of graph data retrieval functions (Cypher, Text to Cypher, Vector Search). Finally, a Q&A agent leveraging the data retrieval functions is built with (Microsoft Semantic Kernel)

The diagram below illustrates the approach

But first, for those of us not familiar with commercial law, let’s start with a brief intro to the contract review problem.

Contract Review and Large Language Models

Commercial contract review is a labor-intensive process involving paralegals and junior lawyers meticulously identifying critical information in a contract.

“Contract review is the process of thoroughly reading a contract to understand the rights and obligations of an individual or company signing it and assess the associated impact”.

Hendrycks, Burns et al, NeurIPS 2021, in CUAD an Expert-Annotated NLP Dataset for Legal Contract Review

The first stage of contract review involves reviewing hundreds of pages of contracts to find the relevant clauses or obligations. Contract reviewers must identify whether relevant clauses exist, what they say if they do exist, and keep track of where they are described.

For example, They must determine whether the contract is a 3-year contract or a 1-year contract. They must determine the end date of a contract. They must determine whether a clause is, say, an Anti-assignment or an Exclusivity clause…”

Hendrycks, Burns et al, NeurIPS 2021, in CUAD an Expert-Annotated NLP Dataset for Legal Contract Review

It’s a task that demands thoroughness but often suffers from inefficiencies but it is suitable for a Large Language Model!

Once the first stage is completed, senior law practitioners can start to examine contracts for weaknesses and risks. This is an area where a Q&A agent powered by an LLM and grounded by information stored in Knowledge Graph is a perfect Copilot for a legal expert.

A 4-Step Approach to Build a Commercial Contract Review Agent with LLMs, Function Calling & GraphRAG

The remainder of this blog will describe each of the steps in this process. Along the way, I will use code snippets to illustrate the main ideas.

The four steps are:

- Extracting Relevant Information from Contracts (LLM + Contract)

- Storing information extracted into a Knowledge Graph (Neo4j)

- Developing simple KG Data Retrieval Functions (Python)

- Building a Q&A Agent handling complex questions (Semantic Kernel, LLM, Neo4j)

The Dataset:

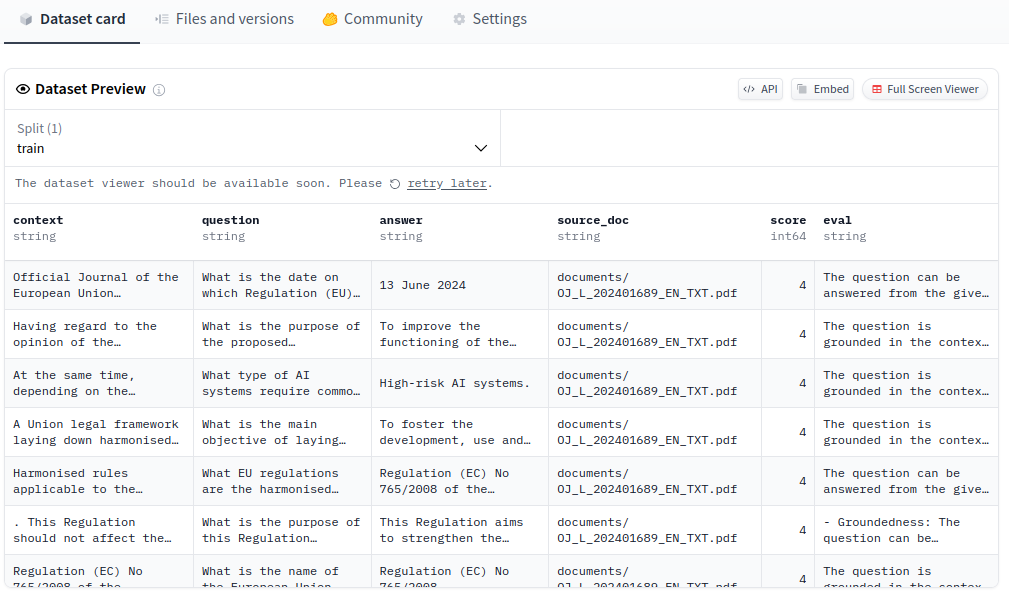

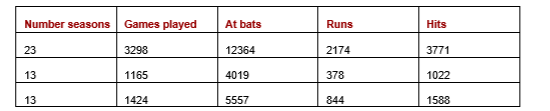

The CUAD (Contract Understanding Atticus Dataset) is a CC BY 4.0 licensed and publicly available dataset of over 13,000 expert-labeled clauses across 510 legal contracts, designed to help build AI models for contract review. It covers a wide range of important legal clauses, such as confidentiality, termination, and indemnity, which are critical for contract analysis.

We will use three contracts from this dataset to showcase how our approach to effectively extract and analyze key legal information, building a knowledge graph and leveraging it for precise, complex question answering.

The three contracts combined contain a total of 95 pages.

Step 1: Extracting Relevant Information from Contracts

It is relatively straightforward to prompt an LLM to extract precise information from contracts and generate a JSON output, representing the relevant information from the contract.

In commercial review, a prompt can be drafted to to locate each of the critical elements mentioned above — parties, dates, clauses — and summarize them neatly in a machine-readable (JSON) file.

Extraction Prompt (simplified)

Answer the following questions using information exclusively on this contract

[Contract.pdf]

1) What type of contract is this?

2) Who are the parties and their roles? Where are they incorporated? Name state and country (use ISO 3166 Country name)

3) What is the Agreement Date?

4) What is the Effective date?

For each of the following types of contract clauses, extract two pieces of information:

a) A Yes/No that indicates if you think the clause is found in this contract

b) A list of excerpts that indicates this clause type exists.

Contract Clause types: Competitive Restriction Exception, Non-Compete Clause, Exclusivity, No-Solicit Of Customers, No-Solicit Of Employees, Non-Disparagement, Termination For Convenience, Rofr/Rofo/Rofn, Change Of Control, Anti-Assignment, Uncapped Liability, Cap On Liability

Provide your final answer in a JSON document.

Please note that the above section shows a simplified version of the extraction prompt. A full version can be seen here. You will find that the the last part of the prompt specifies the desired format of the JSON document. This is useful in ensuring a consistent JSON schema output.

This task is relatively simple in Python. The main()function below is designed to process a set of PDF contract files by extracting relevant legal information (extraction_prompt), using OpenAI gpt-4o and saving the results in JSON format.

def main():

pdf_files = [filename for filename in os.listdir('./data/input/') if filename.endswith('.pdf')]

for pdf_filename in pdf_files:

print('Processing ' + pdf_filename + '...')

# Extract content from PDF using the assistant

complete_response = process_pdf('./data/input/' + pdf_filename)

# Log the complete response to debug

save_json_string_to_file(complete_response, './data/debug/complete_response_' + pdf_filename + '.json')

The “process_pdf” function uses “OpenAI gpt-4o” to perform knowledge extraction from the contract with an “extraction prompt”.

def process_pdf(pdf_filename):

# Create OpenAI message thread

thread = client.beta.threads.create()

# Upload PDF file to the thread

file = client.files.create(file=open(pdf_filename, "rb"), purpose="assistants")

# Create message with contract as attachment and extraction_prompt

client.beta.threads.messages.create(thread_id=thread.id,role="user",

attachments=[

Attachment(

file_id=file.id, tools=[AttachmentToolFileSearch(type="file_search")])

],

content=extraction_prompt,

)

# Run the message thread

run = client.beta.threads.runs.create_and_poll(

thread_id=thread.id, assistant_id=pdf_assistant.id, timeout=1000)

# Retrieve messages

messages_cursor = client.beta.threads.messages.list(thread_id=thread.id)

messages = [message for message in messages_cursor]

# Return last message in Thread

return messages[0].content[0].text.value

For each contract, the message returned by “process_pdf” looks like

{

"agreement": {

"agreement_name": "Marketing Affiliate Agreement",

"agreement_type": "Marketing Affiliate Agreement",

"effective_date": "May 8, 2014",

"expiration_date": "December 31, 2014",

"renewal_term": "1 year",

"Notice_period_to_Terminate_Renewal": "30 days",

"parties": [

{

"role": "Company",

"name": "Birch First Global Investments Inc.",

"incorporation_country": "United States Virgin Islands",

"incorporation_state": "N/A"

},

{

"role": "Marketing Affiliate",

"name": "Mount Knowledge Holdings Inc.",

"incorporation_country": "United States",

"incorporation_state": "Nevada"

}

],

"governing_law": {

"country": "United States",

"state": "Nevada",

"most_favored_country": "United States"

},

"clauses": [

{

"clause_type": "Competitive Restriction Exception",

"exists": false,

"excerpts": []

},

{

"clause_type": "Exclusivity",

"exists": true,

"excerpts": [

"Company hereby grants to MA the right to advertise, market and sell to corporate users, government agencies and educational facilities for their own internal purposes only, not for remarketing or redistribution."

]

},

{

"clause_type": "Non-Disparagement",

"exists": true,

"excerpts": [

"MA agrees to conduct business in a manner that reflects favorably at all times on the Technology sold and the good name, goodwill and reputation of Company."

]

},

{

"clause_type": "Termination For Convenience",

"exists": true,

"excerpts": [

"This Agreement may be terminated by either party at the expiration of its term or any renewal term upon thirty (30) days written notice to the other party."

]

},

{

"clause_type": "Anti-Assignment",

"exists": true,

"excerpts": [

"MA may not assign, sell, lease or otherwise transfer in whole or in part any of the rights granted pursuant to this Agreement without prior written approval of Company."

]

},

{

"clause_type": "Price Restrictions",

"exists": true,

"excerpts": [

"Company reserves the right to change its prices and/or fees, from time to time, in its sole and absolute discretion."

]

},

{

"clause_type": "Minimum Commitment",

"exists": true,

"excerpts": [

"MA commits to purchase a minimum of 100 Units in aggregate within the Territory within the first six months of term of this Agreement."

]

},

{

"clause_type": "IP Ownership Assignment",

"exists": true,

"excerpts": [

"Title to the Technology and all copyrights in Technology shall remain with Company and/or its Affiliates."

]

},

{

"clause_type": "License grant",

"exists": true,

"excerpts": [

"Company hereby grants to MA the right to advertise, market and sell the Technology listed in Schedule A of this Agreement."

]

},

{

"clause_type": "Non-Transferable License",

"exists": true,

"excerpts": [

"MA acknowledges that MA and its Clients receive no title to the Technology contained on the Technology."

]

},

{

"clause_type": "Cap On Liability",

"exists": true,

"excerpts": [

"In no event shall Company be liable to MA, its Clients, or any third party for any tort or contract damages or indirect, special, general, incidental or consequential damages."

]

},

{

"clause_type": "Warranty Duration",

"exists": true,

"excerpts": [

"Company's sole and exclusive liability for the warranty provided shall be to correct the Technology to operate in substantial accordance with its then current specifications."

]

}

]

}

}

Step 2: Creating a Knowledge Graph

With each contract now as a JSON file, the next step is to create a Knowledge Graph in Neo4J.

At this point is useful to spend some time designing the data model. You need to consider some key questions:

- What do nodes and relationships in this graph represent?

- What are the main properties for each node and relationship?,

- Should there be any properties indexed?

- Which properties need vector embeddings to enable semantic similarity search on them?

In our case, a suitable design (schema) includes the main entities: Agreements (contracts), their clauses, the organizations who are parties to the agreement and the relationships amongst them.

A visual representation of the schema is shown below.

Node properties:

Agreement {agreement_type: STRING, contract_id: INTEGER,

effective_date: STRING, expiration_date: STRING,

renewal_term: STRING, name: STRING}

ContractClause {name: STRING, type: STRING}

ClauseType {name: STRING}

Country {name: STRING}

Excerpt {text: STRING}

Organization {name: STRING}

Relationship properties:

IS_PARTY_TO {role: STRING}

GOVERNED_BY_LAW {state: STRING}

HAS_CLAUSE {type: STRING}

INCORPORATED_IN {state: STRING}

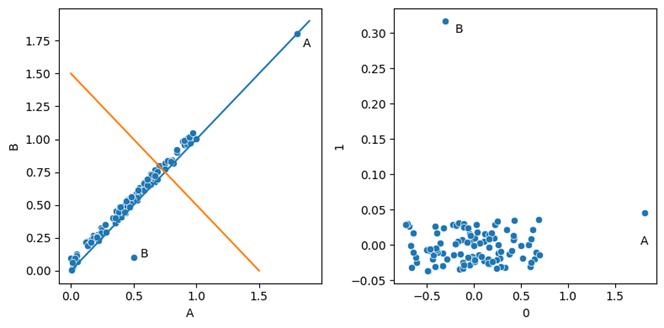

Only the “Excerpts” — the short text pieces identified by the LLM in Step 1 — require text embeddings. This approach dramatically reduces the number of vectors and the size of the vector index needed to represent each contract, making the process more efficient and scalable.

A simplified version of a python script loading each JSON into a Knowledge Graph with the above schema looks like

NEO4J_URI=os.getenv('NEO4J_URI', 'bolt://localhost:7687')

NEO4J_USER=os.getenv('NEO4J_USERNAME', 'neo4j')

NEO4J_PASSWORD=os.getenv('NEO4J_PASSWORD')

OPENAI_API_KEY = os.getenv('OPENAI_API_KEY')

JSON_CONTRACT_FOLDER = './data/output/'

driver = GraphDatabase.driver(NEO4J_URI, auth=(NEO4J_USER, NEO4J_PASSWORD))

contract_id = 1

json_contracts = [filename for filename in os.listdir(JSON_CONTRACT_FOLDER) if filename.endswith('.json')]

for json_contract in json_contracts:

with open(JSON_CONTRACT_FOLDER + json_contract,'r') as file:

json_string = file.read()

json_data = json.loads(json_string)

agreement = json_data['agreement']

agreement['contract_id'] = contract_id

driver.execute_query(CREATE_GRAPH_STATEMENT, data=json_data)

contract_id+=1

create_full_text_indices(driver)

driver.execute_query(CREATE_VECTOR_INDEX_STATEMENT)

print ("Generating Embeddings for Contract Excerpts...")

driver.execute_query(EMBEDDINGS_STATEMENT, token = OPENAI_API_KEY)

Here the “CREATE_GRAPH_STATEMENT” is the only “complex” piece. It is a CYPHER statement that maps the Contract (JSON) into the nodes and relationships in the Knowledge Graph.

The full Cypher statement is below

CREATE_GRAPH_STATEMENT = """

WITH $data AS data

WITH data.agreement as a

MERGE (agreement:Agreement {contract_id: a.contract_id})

ON CREATE SET

agreement.contract_id = a.contract_id,

agreement.name = a.agreement_name,

agreement.effective_date = a.effective_date,

agreement.expiration_date = a.expiration_date,

agreement.agreement_type = a.agreement_type,

agreement.renewal_term = a.renewal_term,

agreement.most_favored_country = a.governing_law.most_favored_country

//agreement.Notice_period_to_Terminate_Renewal = a.Notice_period_to_Terminate_Renewal

MERGE (gl_country:Country {name: a.governing_law.country})

MERGE (agreement)-[gbl:GOVERNED_BY_LAW]->(gl_country)

SET gbl.state = a.governing_law.state

FOREACH (party IN a.parties |

// todo proper global id for the party

MERGE (p:Organization {name: party.name})

MERGE (p)-[ipt:IS_PARTY_TO]->(agreement)

SET ipt.role = party.role

MERGE (country_of_incorporation:Country {name: party.incorporation_country})

MERGE (p)-[incorporated:INCORPORATED_IN]->(country_of_incorporation)

SET incorporated.state = party.incorporation_state

)

WITH a, agreement, [clause IN a.clauses WHERE clause.exists = true] AS valid_clauses

FOREACH (clause IN valid_clauses |

CREATE (cl:ContractClause {type: clause.clause_type})

MERGE (agreement)-[clt:HAS_CLAUSE]->(cl)

SET clt.type = clause.clause_type

// ON CREATE SET c.excerpts = clause.excerpts

FOREACH (excerpt IN clause.excerpts |

MERGE (cl)-[:HAS_EXCERPT]->(e:Excerpt {text: excerpt})

)

//link clauses to a Clause Type label

MERGE (clType:ClauseType{name: clause.clause_type})

MERGE (cl)-[:HAS_TYPE]->(clType)

)"""

Here’s a breakdown of what the statement does:

Data Binding

WITH $data AS data

WITH data.agreement as a

- $data is the input data being passed into the query in JSON format. It contains information about an agreement (contract).

- The second line assigns data.agreement to the alias a, so the contract details can be referenced in the subsequent query.

Upsert the Agreement Node

MERGE (agreement:Agreement {contract_id: a.contract_id})

ON CREATE SET

agreement.name = a.agreement_name,

agreement.effective_date = a.effective_date,

agreement.expiration_date = a.expiration_date,

agreement.agreement_type = a.agreement_type,

agreement.renewal_term = a.renewal_term,

agreement.most_favored_country = a.governing_law.most_favored_country

- MERGE attempts to find an existing Agreement node with the specified contract_id. If no such node exists, it creates one.

- The ON CREATE SET clause sets various properties on the newly created Agreement node, such as contract_id, agreement_name, effective_date, and other agreement-related fields from the JSON input.

Create Governing Law Relationship

MERGE (gl_country:Country {name: a.governing_law.country})

MERGE (agreement)-[gbl:GOVERNED_BY_LAW]->(gl_country)

SET gbl.state = a.governing_law.state

- This creates or merges a Country node for the governing law country associated with the agreement.

- Then, it creates or merges a relationship GOVERNED_BY_LAW between the Agreement and Country.

- It also sets the state property of the GOVERNED_BY_LAW relationship

Create Party and Incorporation Relationships

FOREACH (party IN a.parties |

MERGE (p:Organization {name: party.name})

MERGE (p)-[ipt:IS_PARTY_TO]->(agreement)

SET ipt.role = party.role

MERGE (country_of_incorporation:Country {name: party.incorporation_country})

MERGE (p)-[incorporated:INCORPORATED_IN]->(country_of_incorporation)

SET incorporated.state = party.incorporation_state

)

For each party in the contract (a.parties), it:

- Upserts (Merge) an Organization node for the party.

- Creates an IS_PARTY_TO relationship between the Organization and the Agreement, setting the role of the party (e.g., buyer, seller).

- Merges a Country node for the country in which the organization is incorporated.

- Creates an INCORPORATED_IN relationship between the organization and the incorporation country, and sets the state where the organization is incorporated

Create Contract Clauses and Excerpts

WITH a, agreement, [clause IN a.clauses WHERE clause.exists = true] AS valid_clauses

FOREACH (clause IN valid_clauses |

CREATE (cl:ContractClause {type: clause.clause_type})

MERGE (agreement)-[clt:HAS_CLAUSE]->(cl)

SET clt.type = clause.clause_type

FOREACH (excerpt IN clause.excerpts |

MERGE (cl)-[:HAS_EXCERPT]->(e:Excerpt {text: excerpt})

)

MERGE (clType:ClauseType{name: clause.clause_type})

MERGE (cl)-[:HAS_TYPE]->(clType)

)

- This part first filters the list of clauses (a.clauses) to include only those where clause.exists = true (i.e., clauses with excerpts identified by the LLM in Step 1)

- For each clause:

- It creates a ContractClause node with a name and type corresponding to the clause type.

- A HAS_CLAUSE relationship is established between the Agreement and the ContractClause.

- For each excerpt associated with the clause, it creates an Excerpt node and links it to the ContractClause using a HAS_EXCERPT relationship.

- Finally, a ClauseType node is created (or merged) for the type of the clause, and the ContractClause is linked to the ClauseType using a HAS_TYPE relationship.

Once the import script runs, a single contract can be visualized in Neo4J as a Knowledge Graph

The three contracts in the knowledge graph required only a small graph (under 100 nodes and less than 200 relationships). Most importantly, only 40–50 vector embeddings for the Excerpts are needed. This knowledge graph with a small number of vectors can now be used to power a reasonably powerful Q&A agent.

Step 3: Developing data retrieval functions for GraphRAG

With the contracts now structured in a Knowledge Graph, the next step involves creating a small set of graph data retrieval functions. These functions serve as the core building blocks, allowing us to develop a Q&A agent in step 4.

Let’s define a few basic data retrieval functions:

- Retrieve basic details about a contract (given a contract ID)

- Find contracts involving a specific organization (given a partial organization name)

- Find contracts that DO NOT contain a particular clause type

- Find contracts contain a specific type of clause

- Find contracts based on the semantic similarity with the text (Excerpt) in a clause (e.g., contracts mentioning the use of “prohibited items”)

- Run a natural language query against all contracts in the database. For example, an aggregation query that counts “how many contracts meet certain conditions”.

In step 4, we will build a Q&A using the Microsoft Semantic Kernel library. This library simplifies the agent building process. It allows developers to define the functions and tools that an Agent will have at its disposal to answer a question.

In order to simplify the integration between Neo4J and the Semantic Kernel library, let’s define a ContractPlugin that defines the “signature” of each our data retrieval functions. Note the @kernel_function decorator for each of the functions and also the type information and description provided for each function.

Semantic Kernel uses the concept of a “Plugin” class to encapsulate a group of functions available to an Agent. It will use the decorated functions, type information and documentation to inform the LLM function calling capabilities about functions available.

from typing import List, Optional, Annotated

from AgreementSchema import Agreement, ClauseType

from semantic_kernel.functions import kernel_function

from ContractService import ContractSearchService

class ContractPlugin:

def __init__(self, contract_search_service: ContractSearchService ):

self.contract_search_service = contract_search_service

@kernel_function

async def get_contract(self, contract_id: int) -> Annotated[Agreement, "A contract"]:

"""Gets details about a contract with the given id."""

return await self.contract_search_service.get_contract(contract_id)

@kernel_function

async def get_contracts(self, organization_name: str) -> Annotated[List[Agreement], "A list of contracts"]:

"""Gets basic details about all contracts where one of the parties has a name similar to the given organization name."""

return await self.contract_search_service.get_contracts(organization_name)

@kernel_function

async def get_contracts_without_clause(self, clause_type: ClauseType) -> Annotated[List[Agreement], "A list of contracts"]:

"""Gets basic details from contracts without a clause of the given type."""

return await self.contract_search_service.get_contracts_without_clause(clause_type=clause_type)

@kernel_function

async def get_contracts_with_clause_type(self, clause_type: ClauseType) -> Annotated[List[Agreement], "A list of contracts"]:

"""Gets basic details from contracts with a clause of the given type."""

return await self.contract_search_service.get_contracts_with_clause_type(clause_type=clause_type)

@kernel_function

async def get_contracts_similar_text(self, clause_text: str) -> Annotated[List[Agreement], "A list of contracts with similar text in one of their clauses"]:

"""Gets basic details from contracts having semantically similar text in one of their clauses to the to the 'clause_text' provided."""

return await self.contract_search_service.get_contracts_similar_text(clause_text=clause_text)

@kernel_function

async def answer_aggregation_question(self, user_question: str) -> Annotated[str, "An answer to user_question"]:

"""Answer obtained by turning user_question into a CYPHER query"""

return await self.contract_search_service.answer_aggregation_question(user_question=user_question)

I would recommend exploring the “ContractService” class that contains the implementations of each of the above functions. Each function exercises a a different data retrieval technique.

Let’s walk through the implementation of some of these functions as they showcase different GraphRAG data retrieval techniques / patterns

Get Contract (from contract ID) — A Cypher-based retrieval function

The get_contract(self, contract_id: int), is an asynchronous method designed to retrieve details about a specific contract (Agreement) from a Neo4J database using a Cypher query. The function returns an Agreement object populated with information about the agreement, clauses, parties, and their relationships.

Here’s the implementation of this function

async def get_contract(self, contract_id: int) -> Agreement:

GET_CONTRACT_BY_ID_QUERY = """

MATCH (a:Agreement {contract_id: $contract_id})-[:HAS_CLAUSE]->(clause:ContractClause)

WITH a, collect(clause) as clauses

MATCH (country:Country)-[i:INCORPORATED_IN]-(p:Organization)-[r:IS_PARTY_TO]-(a)

WITH a, clauses, collect(p) as parties, collect(country) as countries, collect(r) as roles, collect(i) as states

RETURN a as agreement, clauses, parties, countries, roles, states

"""

agreement_node = {}

records, _, _ = self._driver.execute_query(GET_CONTRACT_BY_ID_QUERY,{'contract_id':contract_id})

if (len(records)==1):

agreement_node = records[0].get('agreement')

party_list = records[0].get('parties')

role_list = records[0].get('roles')

country_list = records[0].get('countries')

state_list = records[0].get('states')

clause_list = records[0].get('clauses')

return await self._get_agreement(

agreement_node, format="long",

party_list=party_list, role_list=role_list,

country_list=country_list,state_list=state_list,

clause_list=clause_list

)

The most important component is the The Cypher query in GET_CONTRACT_BY_ID_QUERY This query is executed using contract_id supplied as input parameter. The output is the matching Agreement, its clauses and parties involved (each party has a role and country/state of incorporation)

The data is then passed to an utility function _get_agreementwhich simply maps the data to an “Agreement”. The agreement is a TypedDict defined as

class Agreement(TypedDict):

contract_id: int

agreement_name: str

agreement_type: str

effective_date: str

expiration_date: str

renewal_term: str

notice_period_to_terminate_Renewal: str

parties: List[Party]

clauses: List[ContractClause]

Get Contracts WITHOUT a Clause type — Another Cypher retrieval function

This function illustrate a powerful feature of a knowledge graph, which is to test for the absence of a relationship.

The get_contracts_without_clause() function retrieves all contracts (Agreements) from the Neo4J database that do not contain a specific type of clause. The function takes a ClauseType as input and returns a list of Agreement objects that match the condition.

This type of data retrieval information can’t be easily implemented with vector search. The full implementation follows

async def get_contracts_without_clause(self, clause_type: ClauseType) -> List[Agreement]:

GET_CONTRACT_WITHOUT_CLAUSE_TYPE_QUERY = """

MATCH (a:Agreement)

OPTIONAL MATCH (a)-[:HAS_CLAUSE]->(cc:ContractClause {type: $clause_type})

WITH a,cc

WHERE cc is NULL

WITH a

MATCH (country:Country)-[i:INCORPORATED_IN]-(p:Organization)-[r:IS_PARTY_TO]-(a)

RETURN a as agreement, collect(p) as parties, collect(r) as roles, collect(country) as countries, collect(i) as states

"""

#run the Cypher query

records, _ , _ = self._driver.execute_query(GET_CONTRACT_WITHOUT_CLAUSE_TYPE_QUERY,{'clause_type':clause_type.value})

all_agreements = []

for row in records:

agreement_node = row['agreement']

party_list = row['parties']

role_list = row['roles']

country_list = row['countries']

state_list = row['states']

agreement : Agreement = await self._get_agreement(

format="short",

agreement_node=agreement_node,

party_list=party_list,

role_list=role_list,

country_list=country_list,

state_list=state_list

)

all_agreements.append(agreement)

return all_agreements

Once again, the format is similar to the previous function. A Cypher query,GET_CONTRACTS_WITHOUT_CLAUSE_TYPE_QUERY , defines the nodes and relationship patterns to be matched. It performs an optional match to filters out contracts that do contain a clause type, and collects related data about the agreement, such as the involved parties and their details.

The function then constructs and returns a list of Agreement objects, which encapsulate all the relevant information for each matching agreement.

Get Contract with Semantically Similar Text — A Vector-Search + Graph data retrieval function

The get_contracts_similar_text() function is designed to find agreements (contracts) that contain clauses with text similar to a provided clause_text. It uses semantic vector search to identify related Excerpts and then traverses the graph to return information about the corresponding agreements and clauses, where those excerpts came from.

This function leverages a vector index defined on the “text” property of each Excerpt. It uses the recently released Neo4J GraphRAG package to simplify the Cypher code needed to run semantic search + Graph traversal code.

async def get_contracts_similar_text(self, clause_text: str) -> List[Agreement]:

#Cypher to traverse from the semantically similar excerpts back to the agreement

EXCERPT_TO_AGREEMENT_TRAVERSAL_QUERY="""

MATCH (a:Agreement)-[:HAS_CLAUSE]->(cc:ContractClause)-[:HAS_EXCERPT]-(node)

RETURN a.name as agreement_name, a.contract_id as contract_id, cc.type as clause_type, node.text as excerpt

"""

#Set up vector Cypher retriever

retriever = VectorCypherRetriever(

driver= self._driver,

index_name="excerpt_embedding",

embedder=self._openai_embedder,

retrieval_query=EXCERPT_TO_AGREEMENT_TRAVERSAL_QUERY,

result_formatter=my_vector_search_excerpt_record_formatter

)

# run vector search query on excerpts and get results containing the relevant agreement and clause

retriever_result = retriever.search(query_text=clause_text, top_k=3)

#set up List of Agreements (with partial data) to be returned

agreements = []

for item in retriever_result.items:

//extract information from returned items and append agreement to results

// full code not shown here but available on the Github repo

return agreements

Let’s go over the main components of this data retrieval function

- The Neo4j GraphRAG VectorCypherRetriever allows a developer to perform semantic similarity on a vector index. In our case, for each semantically similar Excerpt “node” found, an additional Cypher expression is used to fetch additional nodes in the graph related to the node.

- The parameters of the VectorCypherRetriever are straightforward. The index_name is the vector index on which to run semantic similarity. The embedder generates a vector embedding for a piece of text. The driver is just an instance of a Neo4j Python driver. The retrieval_query specify the additional nodes and relationships connected with ever “Excerpt” node identified by semantic similarity

- The EXCERPT_TO_AGREEMENT_TRAVERSAL_QUERY

specifies the additional nodes to be retrieved. In this case, for every Excerpt, we are retrieving its related Contract Clause and corresponding Agreement

EXCERPT_TO_AGREEMENT_TRAVERSAL_QUERY="""

MATCH (a:Agreement)-[:HAS_CLAUSE]->(cc:ContractClause)-[:HAS_EXCERPT]-(node)

RETURN a.name as agreement_name, a.contract_id as contract_id, cc.type as clause_type, node.text as excerpt

"""

Run a Natural Language Query — A Text 2Cypher data retrieval function

The answer_aggregation_question() function leverages Neo4j GraphRAG package “Text2CypherRetriever” to answer a question in natural language. The Text2CypherRetriever uses an LLM to turn the user question into a Cypher query and runs it against the Neo4j database.

The function leverages OpenAI gpt-4o to generate the required Cypher query. Let’s walk through the main components of this data retrieval function.

async def answer_aggregation_question(self, user_question) -> str:

answer = ""

NEO4J_SCHEMA = """

omitted for brevity (see below for the full value)

"""

# Initialize the retriever

retriever = Text2CypherRetriever(

driver=self._driver,

llm=self._llm,

neo4j_schema=NEO4J_SCHEMA

)

# Generate a Cypher query using the LLM, send it to the Neo4j database, and return the results

retriever_result = retriever.search(query_text=user_question)

for item in retriever_result.items:

content = str(item.content)

if content:

answer += content + 'nn'

return answer

This function leverages Neo4j GraphRAG package “Text2CypherRetriever”. It uses an LLM, in this case OpenAI LLM is used to turn a user question (natural language) into a Cypher query that is executed against the database. The result of this query is returned.

A key element to ensure that the LLM generates a query that uses the nodes, relationships and properties defined in the database is to provide the LLM with a text description of the schema.

In our case, we used the following representation of the data model is sufficient.

NEO4J_SCHEMA = """

Node properties:

Agreement {agreement_type: STRING, contract_id: INTEGER,effective_date: STRING,renewal_term: STRING, name: STRING}

ContractClause {name: STRING, type: STRING}

ClauseType {name: STRING}

Country {name: STRING}

Excerpt {text: STRING}

Organization {name: STRING}

Relationship properties:

IS_PARTY_TO {role: STRING}

GOVERNED_BY_LAW {state: STRING}

HAS_CLAUSE {type: STRING}

INCORPORATED_IN {state: STRING}

The relationships:

(:Agreement)-[:HAS_CLAUSE]->(:ContractClause)

(:ContractClause)-[:HAS_EXCERPT]->(:Excerpt)

(:ContractClause)-[:HAS_TYPE]->(:ClauseType)

(:Agreement)-[:GOVERNED_BY_LAW]->(:Country)

(:Organization)-[:IS_PARTY_TO]->(:Agreement)

(:Organization)-[:INCORPORATED_IN]->(:Country)

"""

Step 4: Building a Q&A Agent

Armed with our Knowledge Graph data retrieval functions, we are ready to build an agent grounded by GraphRAG 🙂

Let’s sets up a chatbot agent capable of answering user queries about contracts using a combination of OpenAI’s gpt-4o model, our data retrieval functions and a Neo4j-powered knowledge graph.

We will use Microsoft Semantic Kernel, a framework that allows developers to integrate LLM function calling with existing APIs and data retrieval functions

The framework uses a concept called Plugins to represent specific functionality that the kernel can perform. In our case, all of our data retrieval functions defined in the “ContractPlugin” can be used by the LLM to answer the question.

The framework uses the concept of Memory to keep all interactions between user and agent, as well as functions executed and data retrieved.

A extremely simple Terminal-based agent can be implemented with a few lines of code. The snippet below shows the main parts of the agent (imports and environment vars removed).

logging.basicConfig(level=logging.INFO)

# Initialize the kernel

kernel = Kernel()

# Add the Contract Search plugin to the kernel

contract_search_neo4j = ContractSearchService(NEO4J_URI,NEO4J_USER,NEO4J_PASSWORD)

kernel.add_plugin(ContractPlugin(contract_search_service=contract_search_neo4j),plugin_name="contract_search")

# Add the OpenAI chat completion service to the Kernel

kernel.add_service(OpenAIChatCompletion(ai_model_id="gpt-4o",api_key=OPENAI_KEY, service_id=service_id))

# Enable automatic function calling

settings: OpenAIChatPromptExecutionSettings = kernel.get_prompt_execution_settings_from_service_id(service_id=service_id)

settings.function_choice_behavior = FunctionChoiceBehavior.Auto(filters={"included_plugins": ["contract_search"]})

# Create a history of the conversation

history = ChatHistory()

async def basic_agent() :

userInput = None

while True:

# Collect user input

userInput = input("User > ")

# Terminate the loop if the user says "exit"

if userInput == "exit":

break

# Add user input to the history

history.add_user_message(userInput)

# 3. Get the response from the AI with automatic function calling

chat_completion : OpenAIChatCompletion = kernel.get_service(type=ChatCompletionClientBase)

result = (await chat_completion.get_chat_message_contents(

chat_history=history,

settings=settings,

kernel=kernel,

arguments=KernelArguments(),

))[0]

# Print the results

print("Assistant > " + str(result))

# Add the message from the agent to the chat history

history.add_message(result)

if __name__ == "__main__":

asyncio.run(basic_agent())

Let ask our agent some non-trivial questions

1. Find me contracts with Price Restrictions but no insurance clauses

Assistant > I found the following contract that contains a Price Restrictions clause but does not contain an Insurance clause:

- **Name:** Birch First Global Investments Inc. and Mount Knowledge Holdings Inc. MARKETING AFFILIATE AGREEMENT

- **Agreement Type:** MARKETING AFFILIATE AGREEMENT

- **Parties:**

- **MOUNT KNOWLEDGE HOLDINGS INC.** (Marketing Affiliate), incorporated in Nevada, USA

- **BIRCH FIRST GLOBAL INVESTMENTS INC.** (Company), incorporated in the Virgin Islands (VIR)

Would you like to know more details about this contract?

Here, The Semantic Agent planner has reviewed the available functions in the Kernel and determined that answering this question requires invoking two functions that, on their own, won’t provide the complete answer.

First, it executes the “Get Contracts with [Price Restriction] Clauses” function, followed by the “Get Contracts WITHOUT [Insurance] Clauses” function. The planner then combines the results from both functions to identify the intersection, ultimately arriving at the correct answer.

If you look at the debugging information, you can confirm the sequence of calls performed by the LLM, along with information on the number of tokens consumed by the call. Notice the calls with the 2 data retrieval functions.

2. Yes, please. Provide more details

Assistant > Here are the detailed information about the contract:

### Contract Details:

- **Name:** Birch First Global Investments Inc. and Mount Knowledge Holdings Inc. MARKETING AFFILIATE AGREEMENT

- **Agreement Type:** MARKETING AFFILIATE AGREEMENT

- **Expiration Date:** December 31, 2014

- **Renewal Term:** One (1) year

### Parties Involved:

1. **MOUNT KNOWLEDGE HOLDINGS INC.**

- **Role:** Marketing Affiliate

- **Incorporation Country:** USA

- **Incorporation State:** Nevada

2. **BIRCH FIRST GLOBAL INVESTMENTS INC.**

- **Role:** Company

- **Incorporation Country:** Virgin Islands (VIR)

### Clauses Included:

- No-Solicit Of Employees

- Third Party Beneficiary

- Price Restrictions

- Affiliate License-Licensee

- Affiliate License-Licensor

- Cap On Liability

- Audit Rights

- Warranty Duration

- Post-Termination Services

- Non-Transferable License

- License grant

- IP Ownership Assignment

- Minimum Commitment

- Anti-Assignment

- Non-Disparagement

### Clauses Not Included:

- Insurance

Is there anything specific you would like to know or any particular clause details you are interested in?

In this case, behind the scenes, the LLM used the “Find Contract by ID”. You may have noticed that the output shown to the user did not include the id of the contract. However, the Semantic Kernel stored all information returned from the functions from previous calls in the conversation memory!

This powerful combination of having the agent decide which function(s) to call, the use of memory and the automatic marshalling of the user queries into function calls with the right parameters explain the “Magic” behind an agent built with Semantic Kernel!

3. Does any contract mention the ability to make price changes from time to time

Assistant > Yes, there is a contract that mentions

the ability to make price changes from time to time.

Here is the relevant excerpt:

1. **Birch First Global Investments Inc. and Mount Knowledge Holdings Inc. MARKETING AFFILIATE AGREEMENT**

- **Contract ID:** 1

- **Clause Type:** Price Restrictions

- **Excerpt:**

```

Company reserves the right to change its process and/or fees, from time to time, in its sole and absolute discretion. In the event of a price and/or fee change for Technology, Company shall notify MA in writing ninety (90) days prior to the effective date of any such change. All other prices and/or fees may be adjusted without prior notice to MA.

```

Would you like more information about this contract or any other details?

In order to answer this question, Semantic Kernel is executing the get_contract_similar_text data retrieval function.

User > any contract mentions the ability to make price changes from time to time

INFO:semantic_kernel.connectors.ai.open_ai.services.open_ai_chat_completion_base:processing 1 tool calls in parallel.

INFO:semantic_kernel.kernel:Calling contract_search-get_contracts_similar_text

function with args: {"clause_text":"price changes from time to time"}

Assistant > Yes, there is a contract that mentions the ability to make price changes from time to time. Here is the relevant excerpt:

1. **Birch First Global Investments Inc. and Mount Knowledge Holdings Inc. MARKETING AFFILIATE AGREEMENT**

- **Contract ID:** 1

- **Clause Type:** Price Restrictions

- **Excerpt:**

```

Company reserves the right to change its process and/or fees, from time to time, in its sole and absolute discretion. In the event of a price and/or fee change for Technology, Company shall notify MA in writing ninety (90) days prior to the effective date of any such change. All other prices and/or fees may be adjusted without prior notice to MA.

```

As explained earlier, this function uses vector search and a Cypher query to retrieve not just semantically similar excerpts but also the related contract and clauses that includes them.

Finally, let’s ask how many contracts are there on the database

4. How many contracts are there in the database?

This questions requires the LLM to use the Text2Cypher data retriever

Assistant > There are a total of 3 contracts in the database.

The debug information confirms that Semantic Kernel ran the Text2Cypher data retrieval function with “user_question” = “How many contracts are there in the database’?

User > how many contracts are there on the database?

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

INFO:semantic_kernel.connectors.ai.open_ai.services.open_ai_chat_completion_base:processing 1 tool calls in parallel.

INFO:semantic_kernel.kernel:Calling contract_search-answer_aggregation_question function

with args: {"user_question":"How many contracts are there in the database?"}

INFO:semantic_kernel.functions.kernel_function:Function completed. Duration: 0.588805s

INFO:semantic_kernel.connectors.ai.open_ai.services.open_ai_handler:OpenAI usage: CompletionUsage(completion_tokens=13, prompt_tokens=3328, total_tokens=3341, completion_tokens_details={'reasoning_tokens': 0})

Assistant > There are a total of 3 contracts in the database.

Try it Yourself

The github repo contains a Streamlit app that provides a more elegant Agent UI. You are encouraged to interact with the agent and make changes to the ContractPlugin so your agent’s ability to handle more questions!

Conclusion

In this blog, we explored a Graph Retrieval Augmented Generation (GraphRAG) approach to transform labor-intensive tasks of commercial contract review into a more efficient, AI-driven process.

By focusing on targeted information extraction using LLMs and prompts, building a structured knowledge graph with Neo4j, implementing simple data retrieval functions, and ultimately developing a Q&A agent, we created an intelligent solution that handles complex questions effectively.

This approach minimizes inefficiencies found in traditional vector search based RAG, focusing instead on extracting only relevant information, reducing the need for unnecessary vector embeddings, and simplifying the overall process. We hope this journey from contract ingestion to an interactive Q&A agent inspires you to leverage GraphRAG in your own projects for improved efficiency and smarter AI-driven decision-making.

Start building your own commercial contract review agent today and experience the power of GraphRAG firsthand!

Resources

For those eager to take a deeper dive, please check out the resources linked below:

- GitHub repository with the code and detailed instructions

- Contract Understanding Atticus Dataset (CUAD) for Legal Contracts (Github)

- CUAD: An Expert-Annotated NLP Dataset for Legal Contract Review. Hendrycks, Burns, Chen, Ball. NeurIPS 2021

- Neo4j GraphRAG package launch blog post

- Microsoft Semantic Kernel library

Unless otherwise noted, all images are by the author

GraphRAG in Action: From Commercial Contracts to a Dynamic Q&A Agent was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.