Originally appeared here:

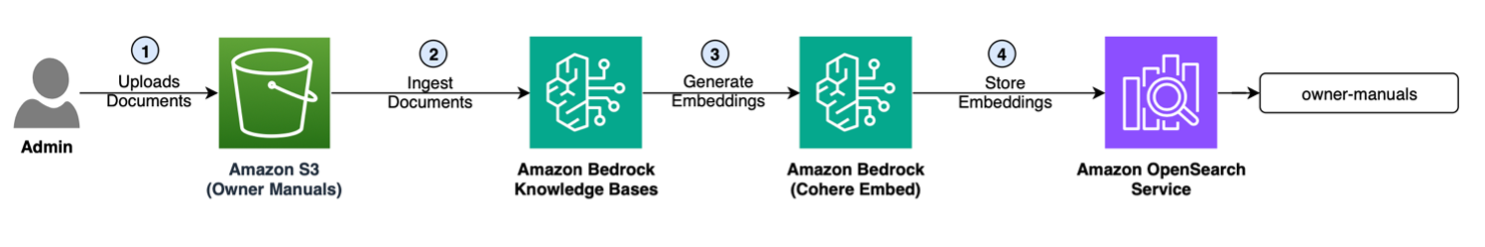

Enhance customer support with Amazon Bedrock Agents by integrating enterprise data APIs

Originally appeared here:

Enhance customer support with Amazon Bedrock Agents by integrating enterprise data APIs

Feeling inspired to write your first TDS post? We’re always open to contributions from new authors.

The roadmap to success in data science offers many different paths, but most of them include a strong focus on math and programming skills (case in point: this excellent guide for aspiring data professionals that Saankhya Mondal published earlier this week). Once you’ve got your bases covered in those areas, however, what’s next? What topics do data scientists need to build expertise in to differentiate themselves from the pack in a crowded job market?

Our weekly highlights zoom in on some of the areas you may want to explore in the coming weeks and months, and provide actionable advice from authors who are deeply embedded in a wide cross-section of industry and academic roles. From mastering the ins and outs of data infrastructure to expanding one’s storytelling skills, let’s take a close look at some of those peripheral—but still crucial—areas of potential growth.

Thank you for supporting the work of our authors! As we mentioned above, we love publishing articles from new authors, so if you’ve recently written an interesting project walkthrough, tutorial, or theoretical reflection on any of our core topics, don’t hesitate to share it with us.

Until the next Variable,

TDS Team

Beyond Math and Python: The Other Key Data Science Skills You Should Develop was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Beyond Math and Python: The Other Key Data Science Skills You Should Develop

Apache Spark has been one of the leading analytical engines in recent years due to its power in distributed data processing. PySpark, the Python API for Spark, is often used for personal and enterprise projects to address data challenges. For example, we can efficiently implement feature engineering for time-series data using PySpark, including ingestion, extraction, and visualization. However, despite its capacity to handle large datasets, performance bottlenecks can still arise under various scenarios such as extreme data distribution and complex data transformation workflow.

This article will examine different common performance issues in data processing with PySpark on Databricks, and walk through various strategies for fine-tuning to achieve faster execution.

Imagine you open an online retail shop that offers a variety of products and is primarily targeted at U.S. customers. You plan to analyze buying habits from current transactions to satisfy more needs of current customers and serve more new ones. This motivates you to put much effort into processing the transaction records as a preparation step.

We first simulate 1 million transaction records (surely expected to handle much larger datasets in real big data scenarios) in a CSV file. Each record includes a customer ID, product purchased, and transaction details such as payment methods and total amounts. One note worth mentioning is that a product agent with customer ID #100 has a significant customer base, and thus occupies a significant portion of purchases in your shop for drop-shipping.

Below are the codes demonstrating this scenario:

import csv

import datetime

import numpy as np

import random

# Remove existing ‘retail_transactions.csv’ file, if any

! rm -f /p/a/t/h retail_transactions.csv

# Set the no of transactions and othet configs

no_of_iterations = 1000000

data = []

csvFile = 'retail_transactions.csv'

# Open a file in write mode

with open(csvFile, 'w', newline='') as f:

fieldnames = ['orderID', 'customerID', 'productID', 'state', 'paymentMthd', 'totalAmt', 'invoiceTime']

writer = csv.DictWriter(f, fieldnames=fieldnames)

writer.writeheader()

for num in range(no_of_iterations):

# Create a transaction record with random values

new_txn = {

'orderID': num,

'customerID': random.choice([100, random.randint(1, 100000)]),

'productID': np.random.randint(10000, size=random.randint(1, 5)).tolist(),

'state': random.choice(['CA', 'TX', 'FL', 'NY', 'PA', 'OTHERS']),

'paymentMthd': random.choice(['Credit card', 'Debit card', 'Digital wallet', 'Cash on delivery', 'Cryptocurrency']),

'totalAmt': round(random.random() * 5000, 2),

'invoiceTime': datetime.datetime.now().isoformat()

}

data.append(new_txn)

writer.writerows(data)

After mocking the data, we load the CSV file into the PySpark DataFrame using Databrick’s Jupyter Notebook.

# Set file location and type

file_location = "/FileStore/tables/retail_transactions.csv"

file_type = "csv"

# Define CSV options

schema = "orderID INTEGER, customerID INTEGER, productID INTEGER, state STRING, paymentMthd STRING, totalAmt DOUBLE, invoiceTime TIMESTAMP"

first_row_is_header = "true"

delimiter = ","

# Read CSV files into DataFrame

df = spark.read.format(file_type)

.schema(schema)

.option("header", first_row_is_header)

.option("delimiter", delimiter)

.load(file_location)

We additionally create a reusable decorator utility to measure and compare the execution time of different approaches within each function.

import time

# Measure the excution time of a given function

def time_decorator(func):

def wrapper(*args, **kwargs):

begin_time = time.time()

output = func(*args, **kwargs)

end_time = time.time()

print(f"Execution time of function {func.__name__}: {round(end_time - begin_time, 2)} seconds.")

return output

return wrapper

Okay, all the preparation is completed. Let’s explore different potential challenges of execution performance in the following sections.

Spark uses Resilient Distributed Dataset (RDD) as its core building blocks, with data typically kept in memory by default. Whether executing computations (like joins and aggregations) or storing data across the cluster, all operations contribute to memory usage in a unified region.

If we design improperly, the available memory may become insufficient. This causes excess partitions to spill onto the disk, which results in performance degradation.

Caching and persisting intermediate results or frequently accessed datasets are common practices. While both cache and persist serve the same purposes, they may differ in their storage levels. The resources should be used optimally to ensure efficient read and write operations.

For example, if transformed data will be reused repeatedly for computations and algorithms across different subsequent stages, it is advisable to cache that data.

Code example: Assume we want to investigate different subsets of transaction records using a digital wallet as the payment method.

from pyspark.sql.functions import col

@time_decorator

def without_cache(data):

# 1st filtering

df2 = data.where(col("paymentMthd") == "Digital wallet")

count = df2.count()

# 2nd filtering

df3 = df2.where(col("totalAmt") > 2000)

count = df3.count()

return count

display(without_cache(df))

from pyspark.sql.functions import col

@time_decorator

def after_cache(data):

# 1st filtering with cache

df2 = data.where(col("paymentMthd") == "Digital wallet").cache()

count = df2.count()

# 2nd filtering

df3 = df2.where(col("totalAmt") > 2000)

count = df3.count()

return count

display(after_cache(df))

After caching, even if we want to filter the transformed dataset with different transaction amount thresholds or other data dimensions, the execution times will still be more manageable.

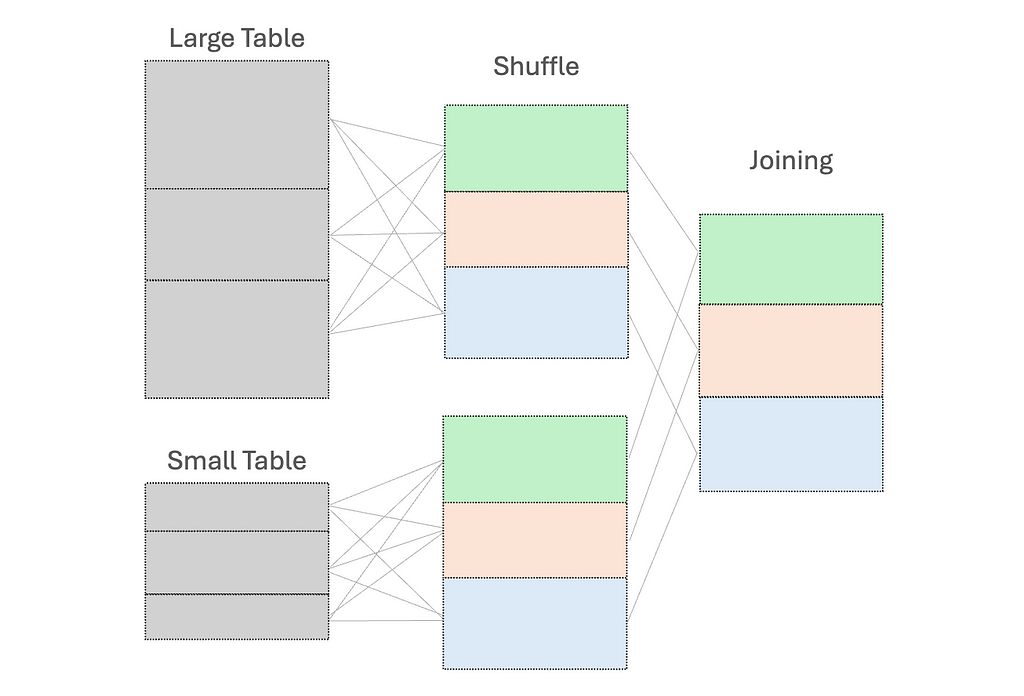

When we perform operations like joining DataFrames or grouping by data fields, shuffling occurs. This is necessary to redistribute all records across the cluster and to ensure those with the same key are on the same node. This in turn facilitates simultaneous processing and combining of the results.

However, this shuffle operation is costly — high execution times and additional network overhead due to data movement between nodes.

To reduce shuffling, there are several strategies:

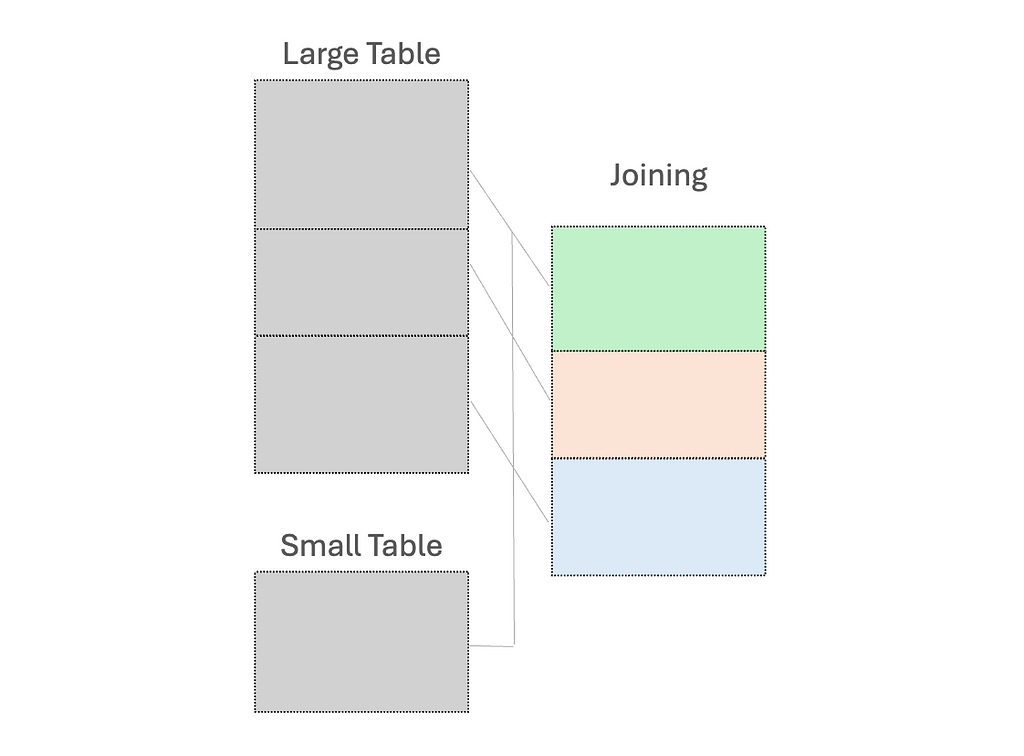

(1) Use broadcast variables for the small dataset, to send a read-only copy to every worker node for local processing

While “small” dataset is often defined by a maximum memory threshold of 8GB per executor, the ideal size for broadcasting should be determined through experimentation on specific case.

(2) Early filtering, to minimize the amount of data processed as early as possible; and

(3) Control the number of partitions to ensure optimal performance

Code examples: Assume we want to return the transaction records that match our list of states, along with their full names

from pyspark.sql.functions import col

@time_decorator

def no_broadcast_var(data):

# Create small dataframe

small_data = [("CA", "California"), ("TX", "Texas"), ("FL", "Florida")]

small_df = spark.createDataFrame(small_data, ["state", "stateLF"])

# Perform joining

result_no_broadcast = data.join(small_df, "state")

return result_no_broadcast.count()

display(no_broadcast_var(df))

from pyspark.sql.functions import col, broadcast

@time_decorator

def have_broadcast_var(data):

small_data = [("CA", "California"), ("TX", "Texas"), ("FL", "Florida")]

small_df = spark.createDataFrame(small_data, ["state", "stateFullName"])

# Create broadcast variable and perform joining

result_have_broadcast = data.join(broadcast(small_df), "state")

return result_have_broadcast.count()

display(have_broadcast_var(df))

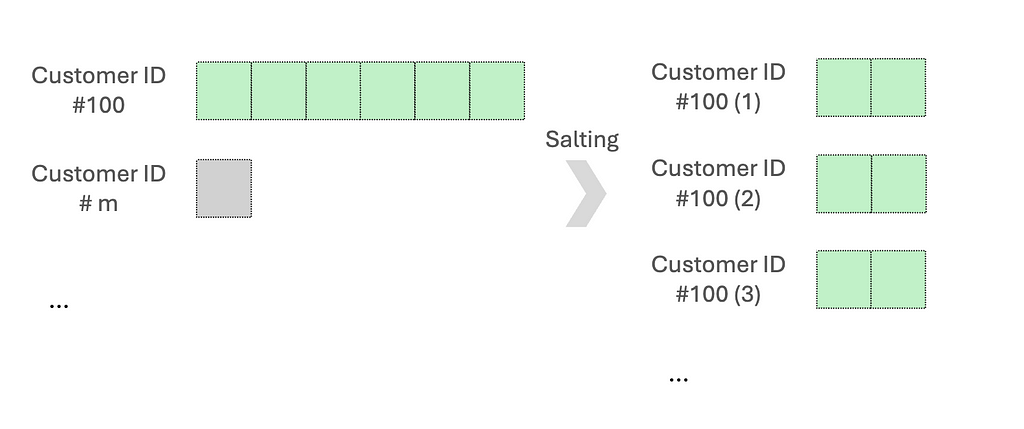

Data can sometimes be unevenly distributed, especially for data fields used as the key for processing. This leads to imbalanced partition sizes, in which some partitions are significantly larger or smaller than the average.

Since the execution performance is limited by the longest-running tasks, it is necessary to address the over-burdened nodes.

One common approach is salting. This works by adding randomized numbers to the skewed key so that there is a more uniform distribution across partitions. Let’s say when aggregating data based on the skewed key, we will aggregate using the salted key and then aggregate with the original key. Another method is re-partitioning, which increases the number of partitions to help distribute the data more evenly.

Code examples: We want to aggregate an asymmetric dataset, mainly skewed by customer ID #100.

from pyspark.sql.functions import col, desc

@time_decorator

def no_salting(data):

# Perform aggregation

agg_data = data.groupBy("customerID").agg({"totalAmt": "sum"}).sort(desc("sum(totalAmt)"))

return agg_data

display(no_salting(df))

from pyspark.sql.functions import col, lit, concat, rand, split, desc

@time_decorator

def have_salting(data):

# Salt the customerID by adding the suffix

salted_data = data.withColumn("salt", (rand() * 8).cast("int"))

.withColumn("saltedCustomerID", concat(col("customerID"), lit("_"), col("salt")))

# Perform aggregation

agg_data = salted_data.groupBy("saltedCustomerID").agg({"totalAmt": "sum"})

# Remove salt for further aggregation

final_result = agg_data.withColumn("customerID", split(col("saltedCustomerID"), "_")[0]).groupBy("customerID").agg({"sum(totalAmt)": "sum"}).sort(desc("sum(sum(totalAmt))"))

return final_result

display(have_salting(df))

A random prefix or suffix to the skewed keys will both work. Generally, 5 to 10 random values are a good starting point to balance between spreading out the data and maintaining high complexity.

People often prefer using user-defined functions (UDFs) since it is flexible in customizing the data processing logic. However, UDFs operate on a row-by-row basis. The code shall be serialized by the Python interpreter, sent to the executor JVM, and then deserialized. This incurs high serialization costs and prevents Spark from optimizing and processing the code efficiently.

The simple and direct approach is to avoid using UDFs when possible.

We should first consider using the built-in Spark functions, which can handle tasks such as aggregation, arrays/maps operations, date/time stamps, and JSON data processing. If the built-in functions do not satisfy your desired tasks indeed, we can consider using pandas UDFs. They are built on top of Apache Arrow for lower overhead costs and higher performance, compared to UDFs.

Code examples: The transaction price is discounted based on the originating state.

from pyspark.sql.functions import udf

from pyspark.sql.types import DoubleType

from pyspark.sql import functions as F

import numpy as np

# UDF to calculate discounted amount

def calculate_discount(state, amount):

if state == "CA":

return amount * 0.90 # 10% off

else:

return amount * 0.85 # 15% off

discount_udf = udf(calculate_discount, DoubleType())

@time_decorator

def have_udf(data):

# Use the UDF

discounted_data = data.withColumn("discountedTotalAmt", discount_udf("state", "totalAmt"))

# Show the results

return discounted_data.select("customerID", "totalAmt", "state", "discountedTotalAmt").show()

display(have_udf(df))

from pyspark.sql.functions import when

@time_decorator

def no_udf(data):

# Use when and otherwise to discount the amount based on conditions

discounted_data = data.withColumn(

"discountedTotalAmt",

when(data.state == "CA", data.totalAmt * 0.90) # 10% off

.otherwise(data.totalAmt * 0.85)) # 15% off

# Show the results

return discounted_data.select("customerID", "totalAmt", "state", "discountedTotalAmt").show()

display(no_udf(df))

In this example, we use the built-in PySpark functions “when and otherwise” to effectively check multiple conditions in sequence. There are unlimited examples based on our familiarity with those functions. For instance, pyspark.sql.functions.transforma function that aids in applying a transformation to each element in the input array has been introduced since PySpark version 3.1.0.

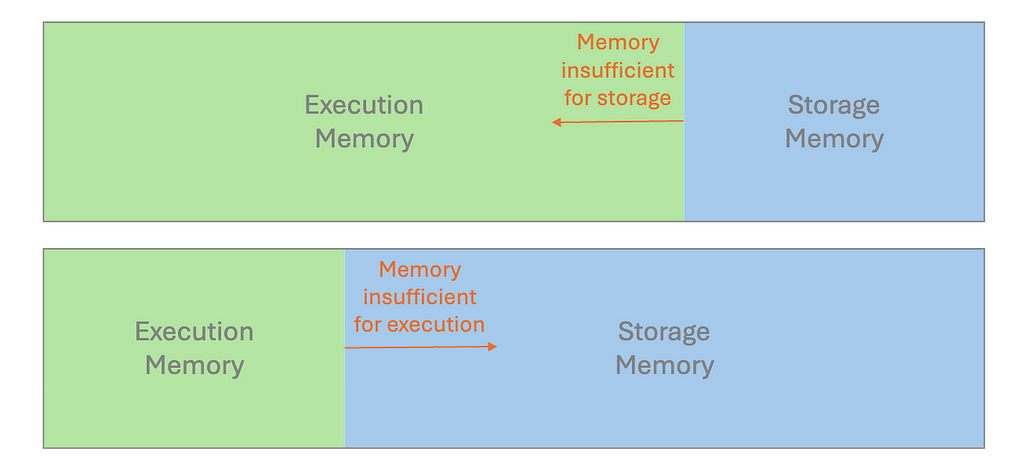

As discussed in the Storage section, a spill occurs by writing temporary data from memory to disk due to insufficient memory to hold all the required data. Many performance issues we have covered are related to spills. For example, operations that shuffle large amounts of data between partitions can easily lead to memory exhaustion and subsequent spill.

It is crucial to examine the performance metrics in Spark UI. If we discover the statistics for Spill(Memory) and Spill(Disk), the spill is probably the reason for long-running tasks. To remediate this, try to instantiate a cluster with more memory per worker, e.g. increase the executor process size, by tuning the configuration value spark.executor.memory; Alternatively, we can configure spark.memory.fraction to adjust how much memory is allocated for execution and storage.

We came across several common factors leading to performance degradation in PySpark, and the possible improvement methods:

Recently, Adaptive Query Execution (AQE) has been newly addressed for dynamic planning and re-planning of queries based on runtime stats. This supports different features of query re-optimization that occur during query execution, which creates a great optimization technique. However, understanding data characteristics during the initial design is still essential, as it informs better strategies for writing effective codes and queries while using AQE for fine-tuning.

If you enjoy this reading, I invite you to follow my Medium page and LinkedIn page. By doing so, you can stay updated with exciting content related to data science side projects, Machine Learning Operations (MLOps) demonstrations, and project management methodologies.

Optimizing the Data Processing Performance in PySpark was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Optimizing the Data Processing Performance in PySpark

Go Here to Read this Fast! Optimizing the Data Processing Performance in PySpark

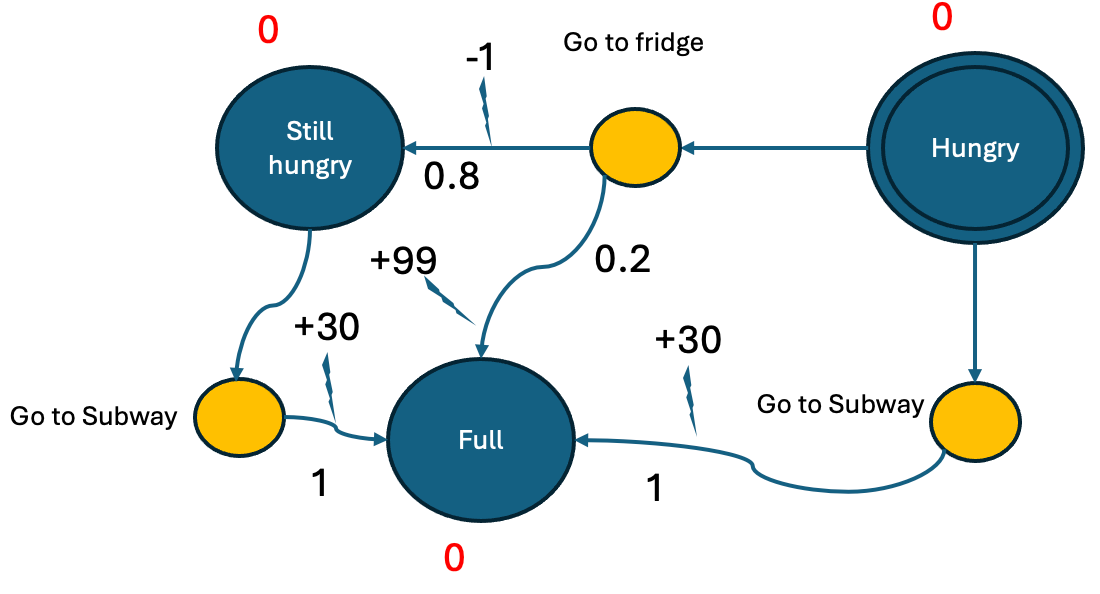

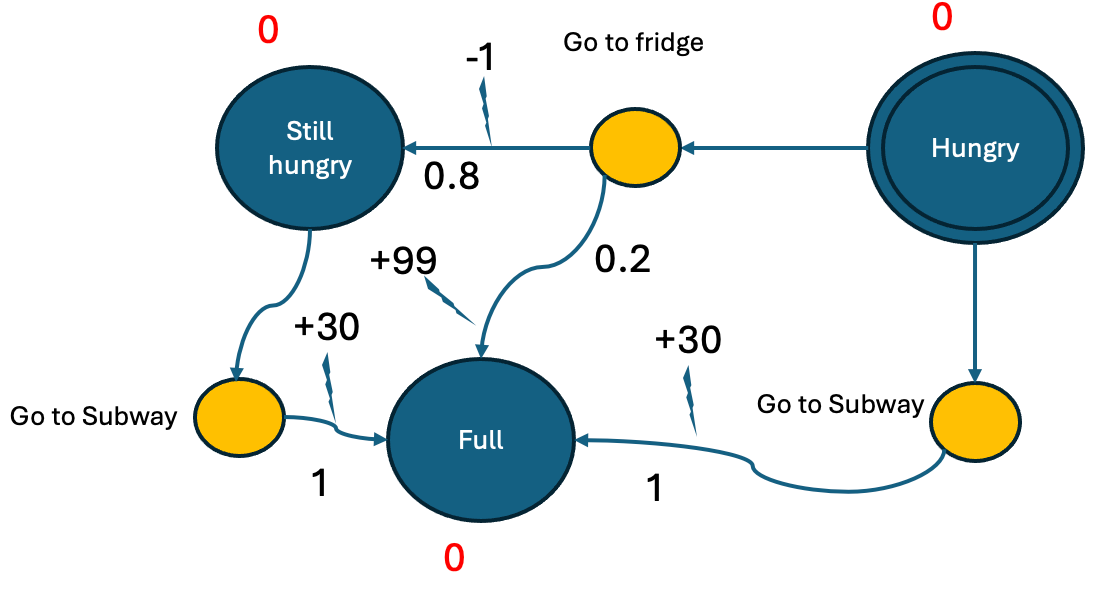

Using a real-world example to explain MDPs, the Bellman equation and value iteration

Originally appeared here:

Markov Decision Problems in Robotics

Go Here to Read this Fast! Markov Decision Problems in Robotics

Last week I was listening to an Acquired episode on Nvidia. The episode talks about transformers: the T in GPT and a candidate for the biggest invention of the 21st century.

Walking down Beacon Street, listening, I was thinking, I understand transformers, right? You mask out tokens during training, you have these attention heads that learn to connect concepts in text, you predict the probability of the next word. I’ve downloaded LLMs from Hugging Face and played with them. I used GPT-3 in the early days before the “chat” part was figured out. At Klaviyo we even built one of the first GPT-powered generative AI features in our subject line assistant. And way back I worked on a grammar checker powered by an older style language model. So maybe.

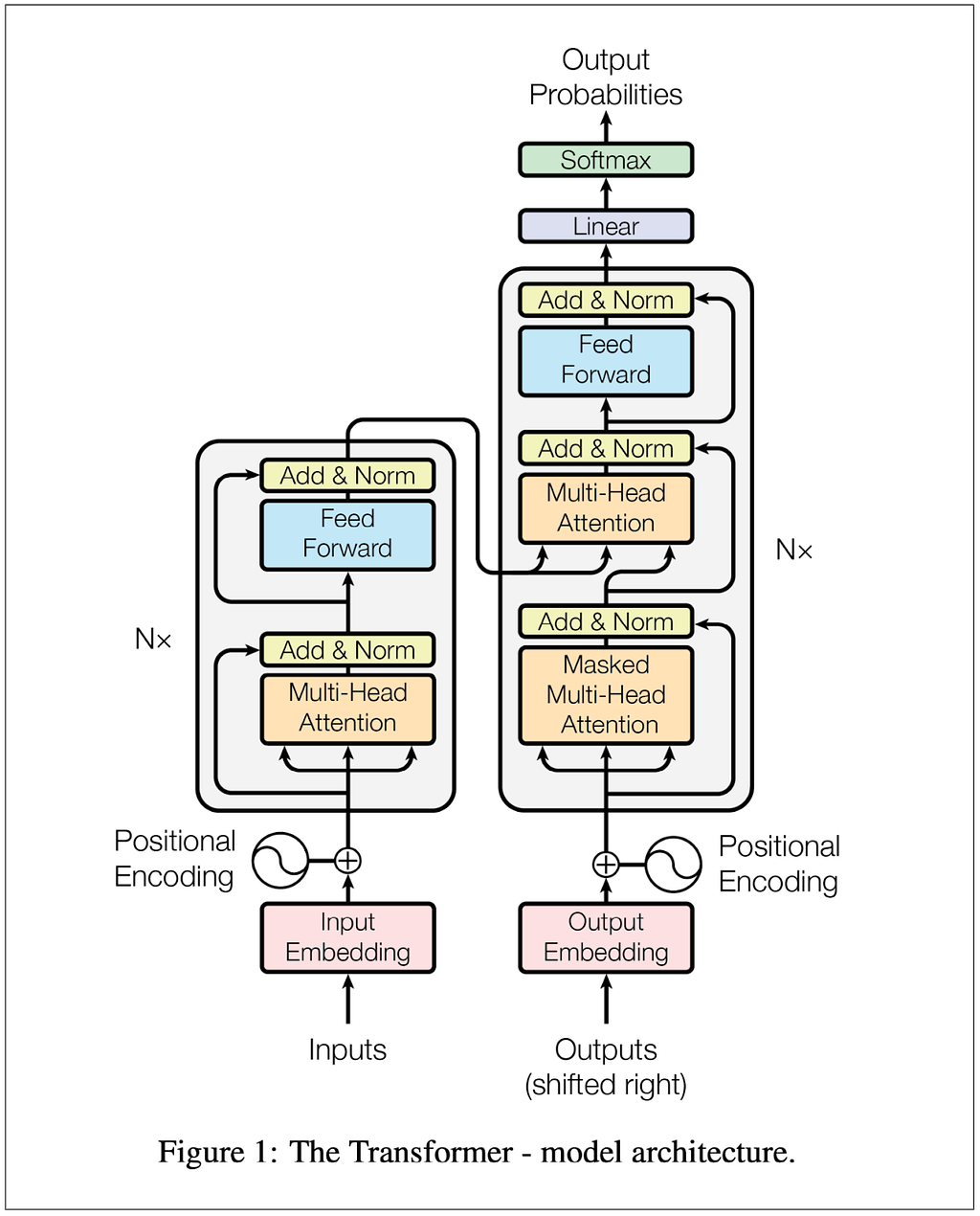

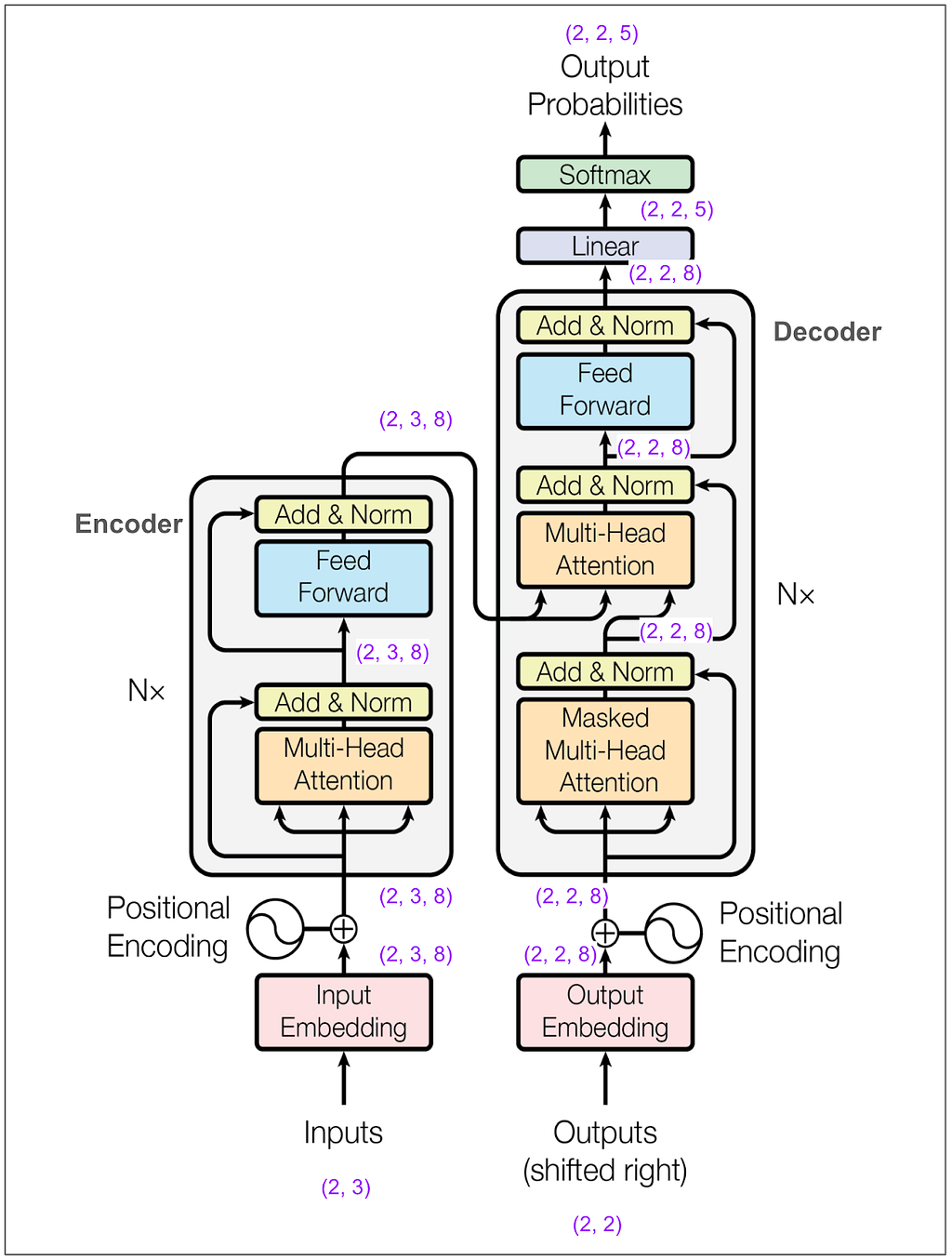

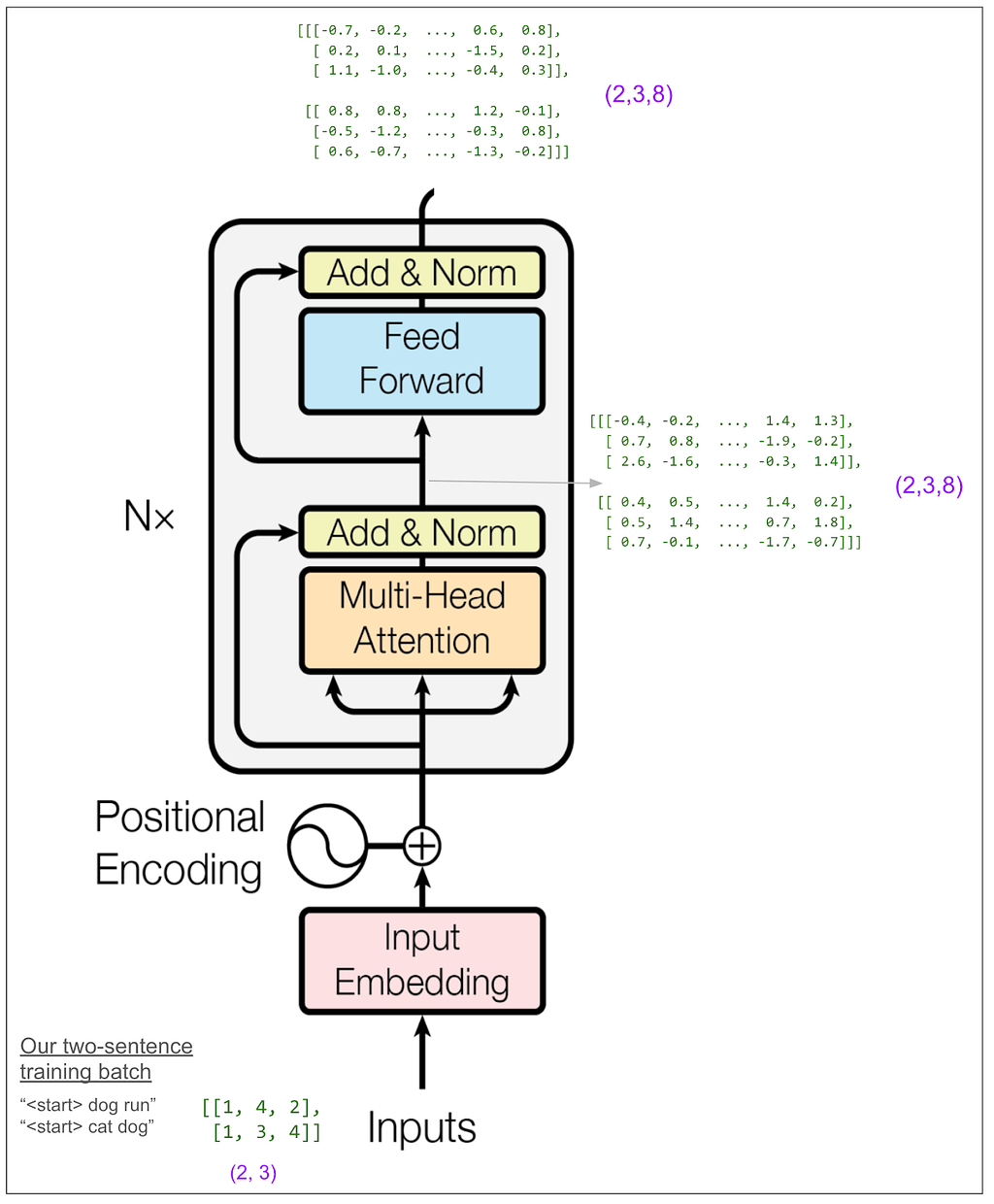

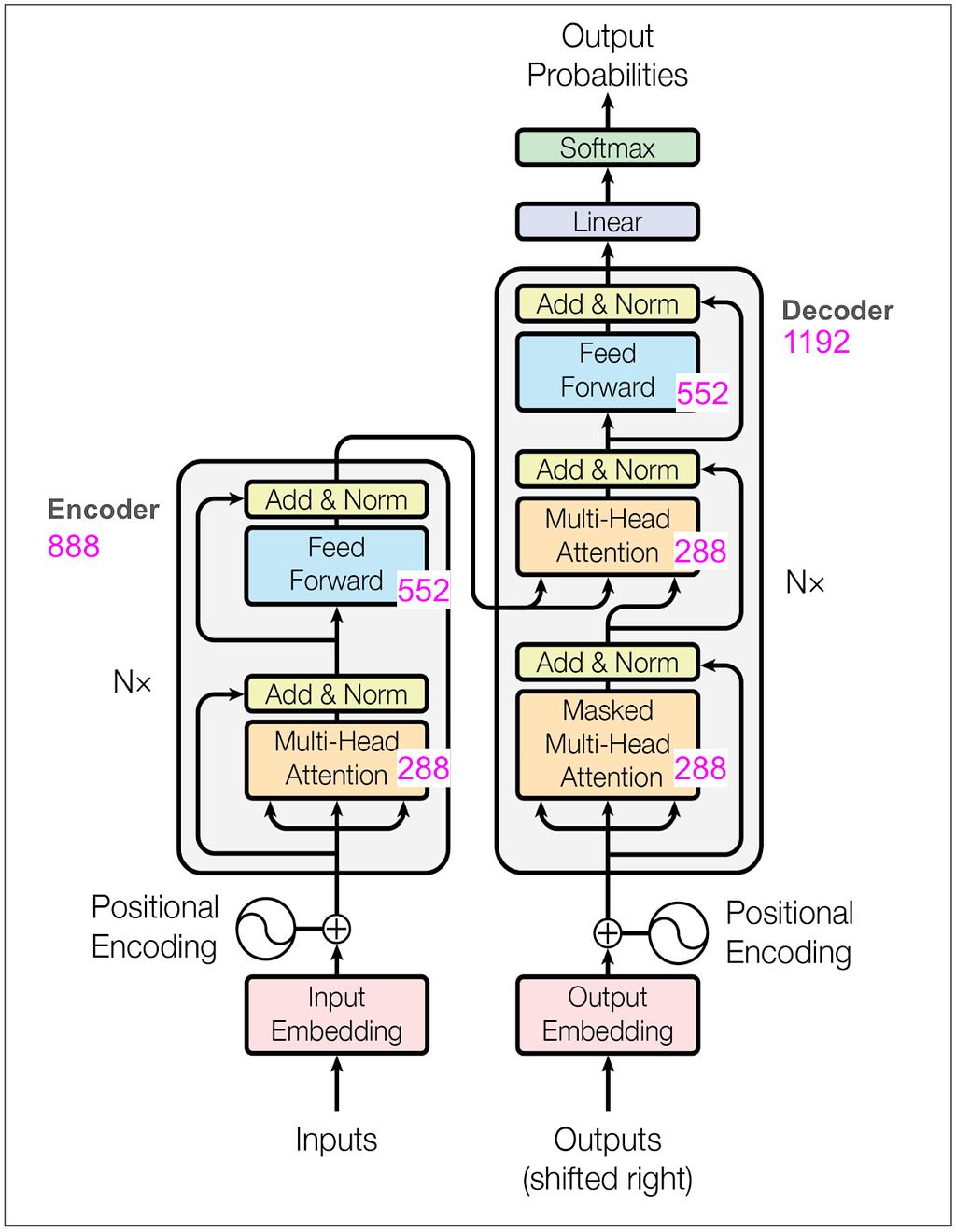

The transformer was invented by a team at Google working on automated translation, like from English to German. It was introduced to the world in 2017 in the now famous paper Attention Is All You Need. I pulled up the paper and looked at Figure 1:

Hmm…if I understood, it was only at the most hand-wavy level. The more I looked at the diagram and read the paper, the more I realized I didn’t get the details. Here are a few questions I wrote down:

I’m sure those questions are easy and sound naive to two categories of people. The first is people who were already working with similar models (e.g. RNN, encoder-decoder) to do similar things. They must have instantly understood what the Google team accomplished and how they did it when they read the paper. The second is the many, many more people who realized how important transformers were these last seven years and took the time to learn the details.

Well, I wanted to learn, and I figured the best way was to build the model from scratch. I got lost pretty quickly and instead decided to trace code someone else wrote. I found this terrific notebook that explains the paper and implements the model in PyTorch. I copied the code and trained the model. I kept everything (inputs, batches, vocabulary, dimensions) tiny so that I could trace what was happening at each step. I found that noting the dimensions and the tensors on the diagrams helped me keep things straight. By the time I finished I had pretty good answers to all the questions above, and I’ll get back to answering them after the diagrams.

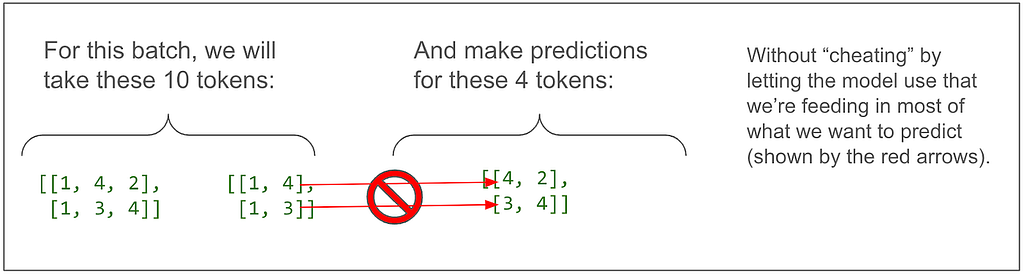

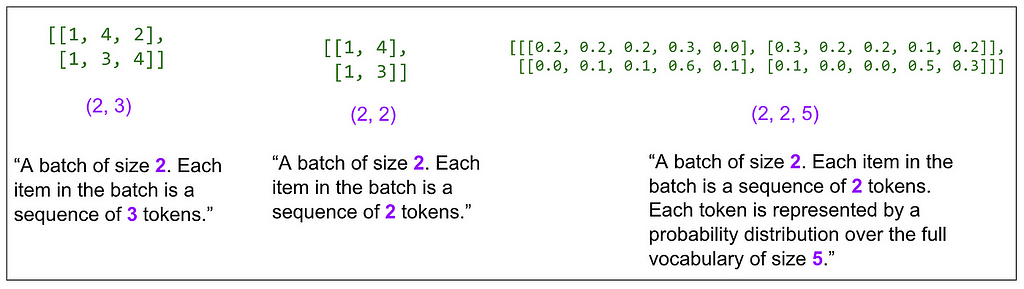

Here are cleaned up versions of my notes. Everything in this part is for training one single, tiny batch, which means all the tensors in the different diagrams go together.

To keep things easy to follow, and copying an idea from the notebook, we’re going to train the model to copy tokens. For example, once trained, “dog run” should translate to “dog run”.

In other words:

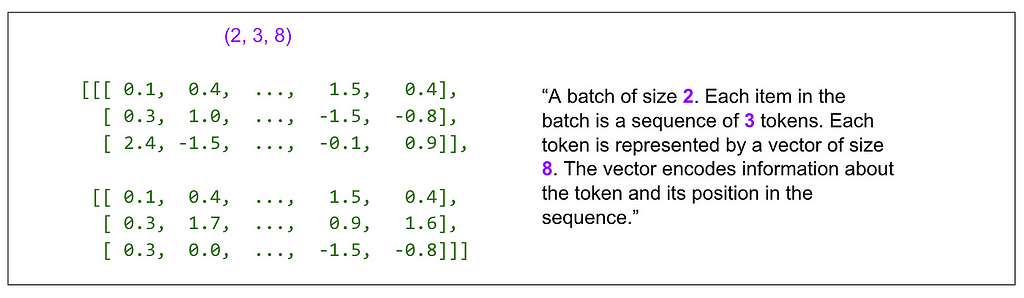

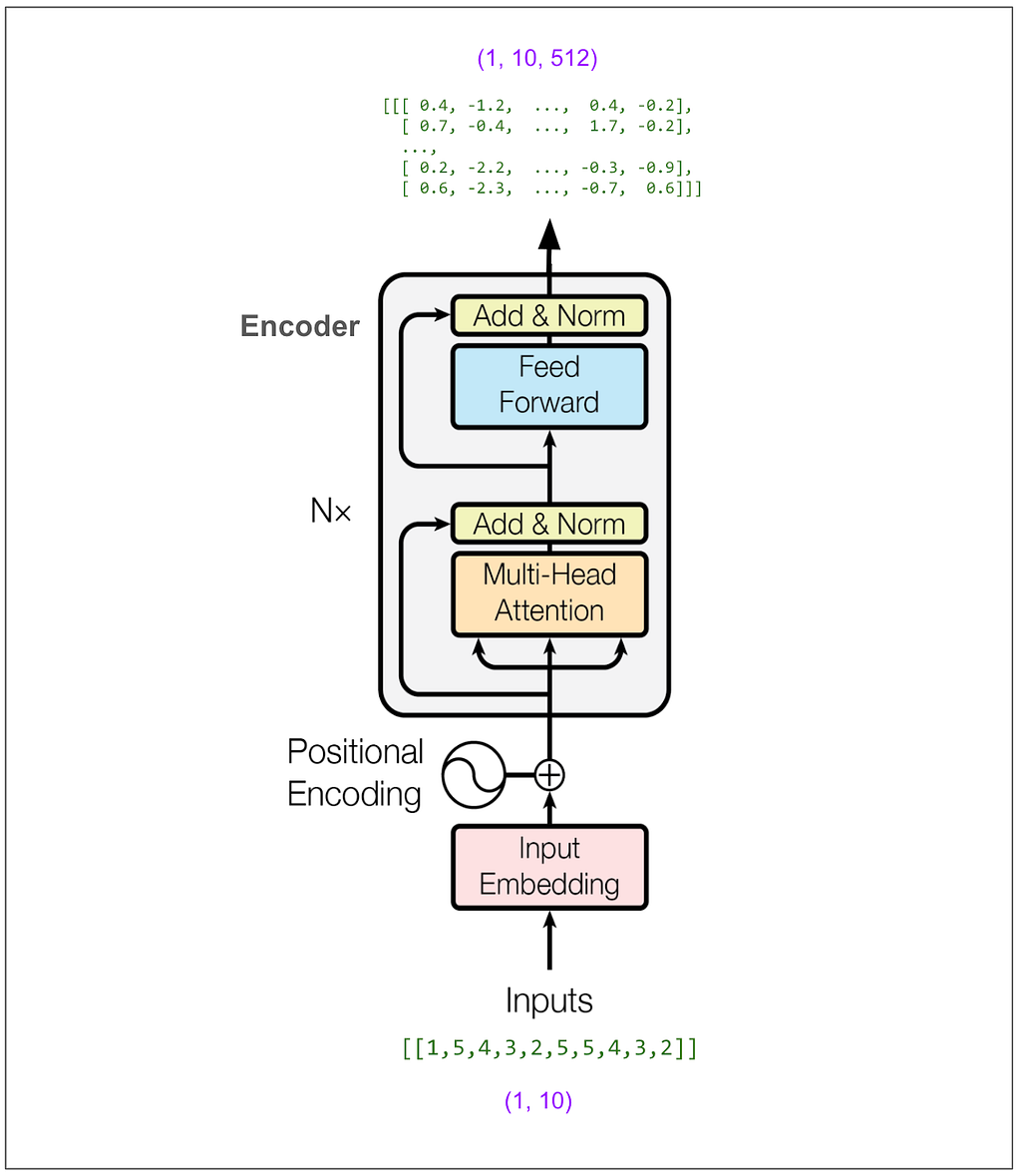

And here’s trying to put into words what the tensor dimensions (shown in purple) on the diagram so far mean:

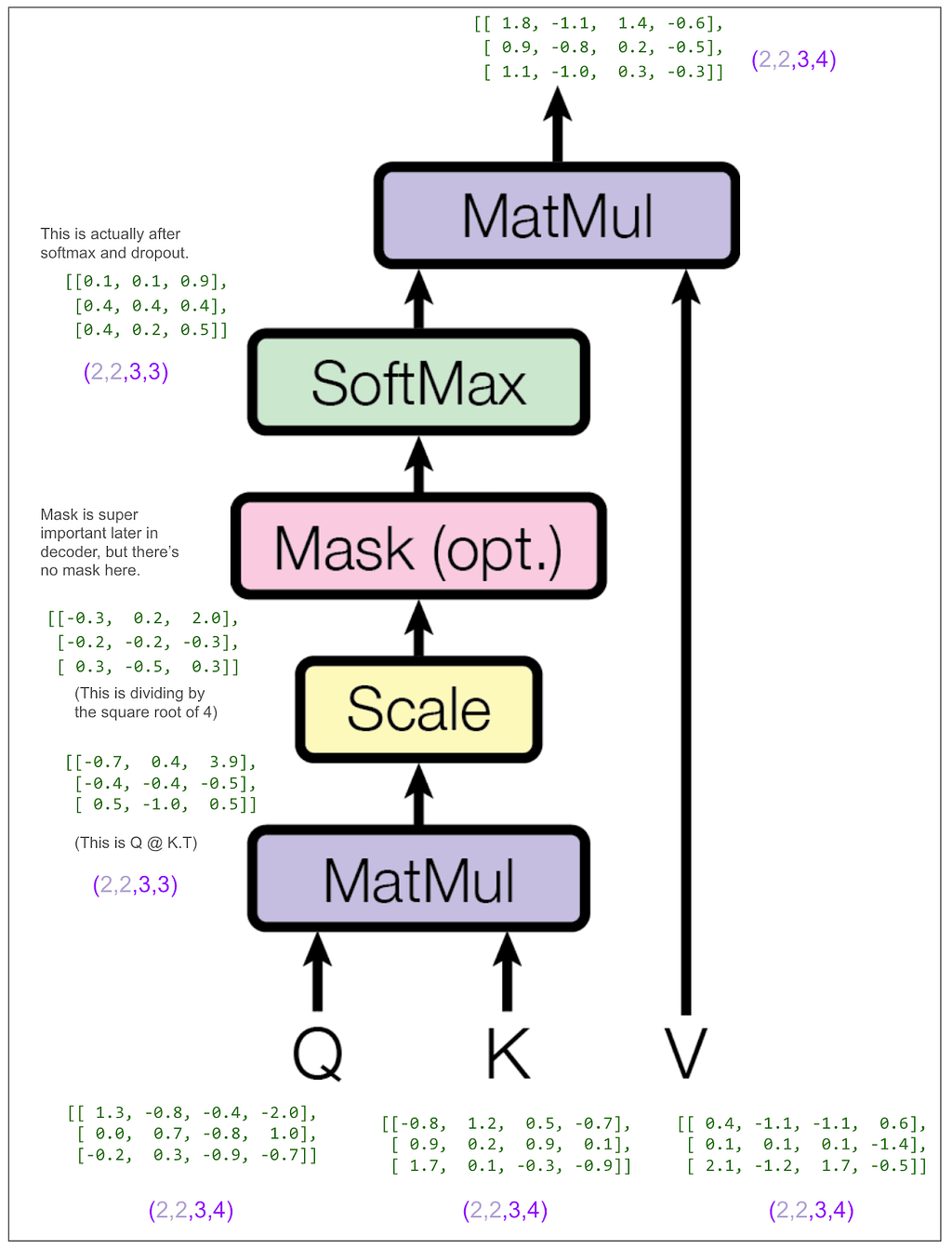

One of the hyperparameters is d-model and in the base model in the paper it’s 512. In this example I made it 8. This means our embedding vectors have length 8. Here’s the main diagram again with dimensions marked in a bunch of places:

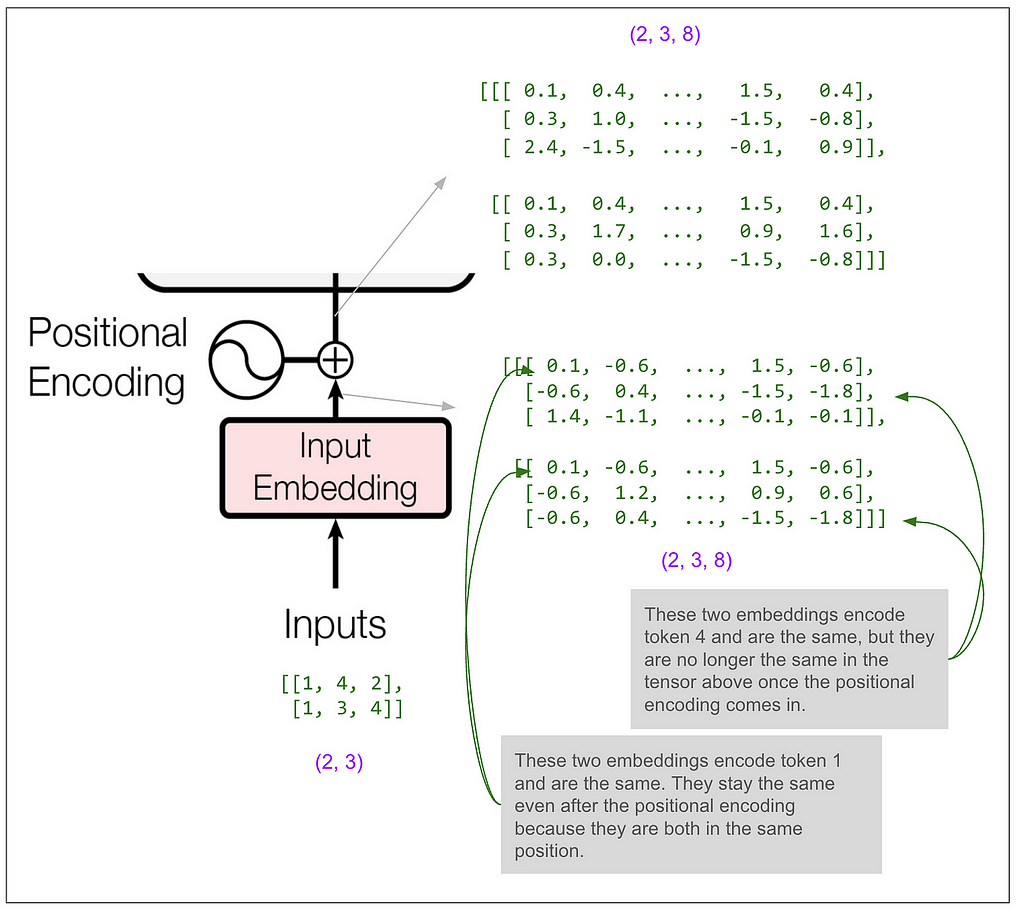

Let’s zoom in on the input to the encoder:

Most of the blocks shown in the diagram (add & norm, feed forward, the final linear transformation) act only on the last dimension (the 8). If that’s all that was happening then the model would only get to use the information in a single position in the sequence to predict a single position. Somewhere it must get to “mix things up” among positions and that magic happens in the multi-head attention blocks.

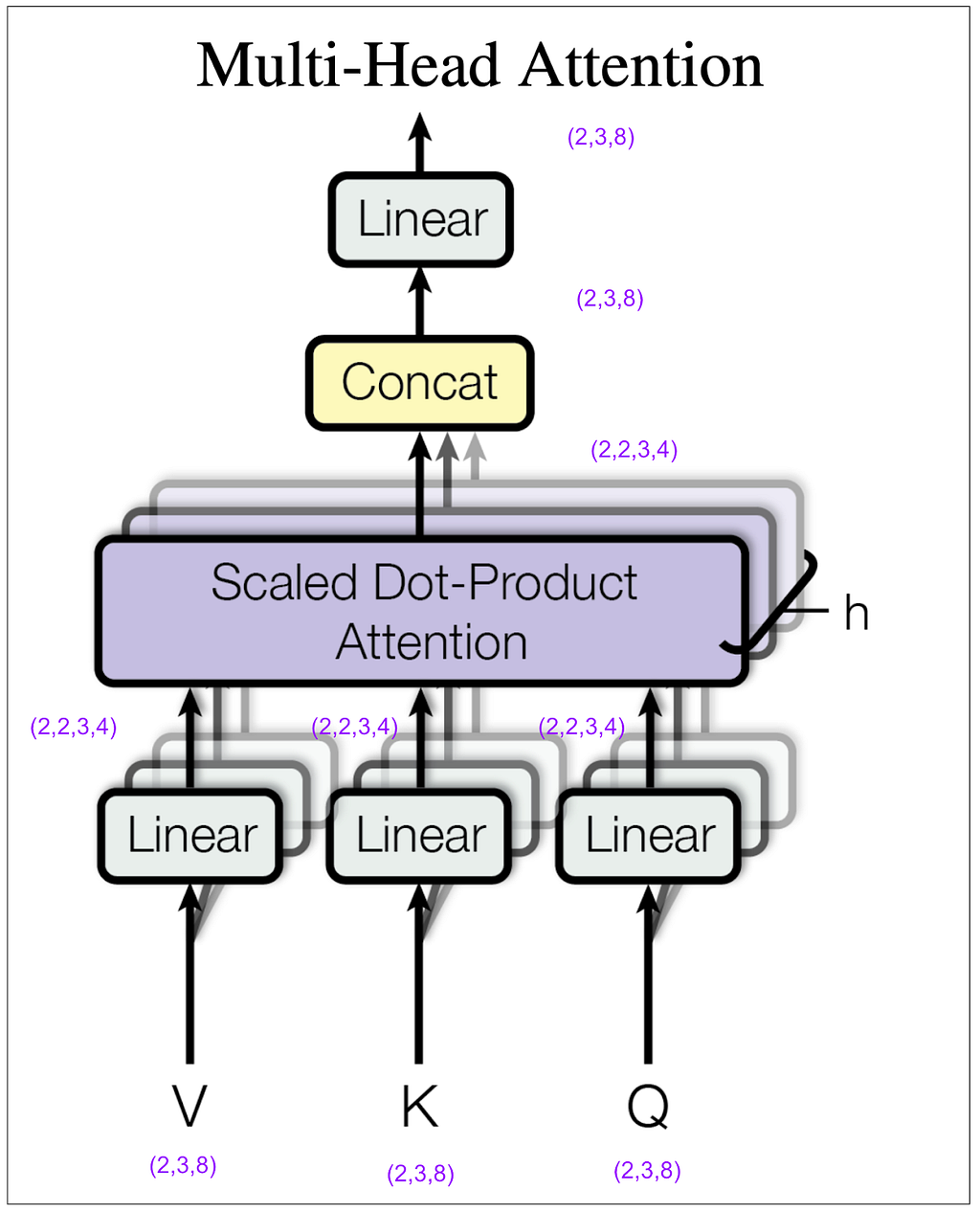

Let’s zoom in on the multi-head attention block within the encoder. For this next diagram, keep in mind that in my example I set the hyperparameter h (number of heads) to 2. (In the base model in the paper it’s 8.)

How did (2,3,8) become (2,2,3,4)? We did a linear transformation, then took the result and split it into number of heads (8 / 2 = 4) and rearranged the tensor dimensions so that our second dimension is the head. Let’s look at some actual tensors:

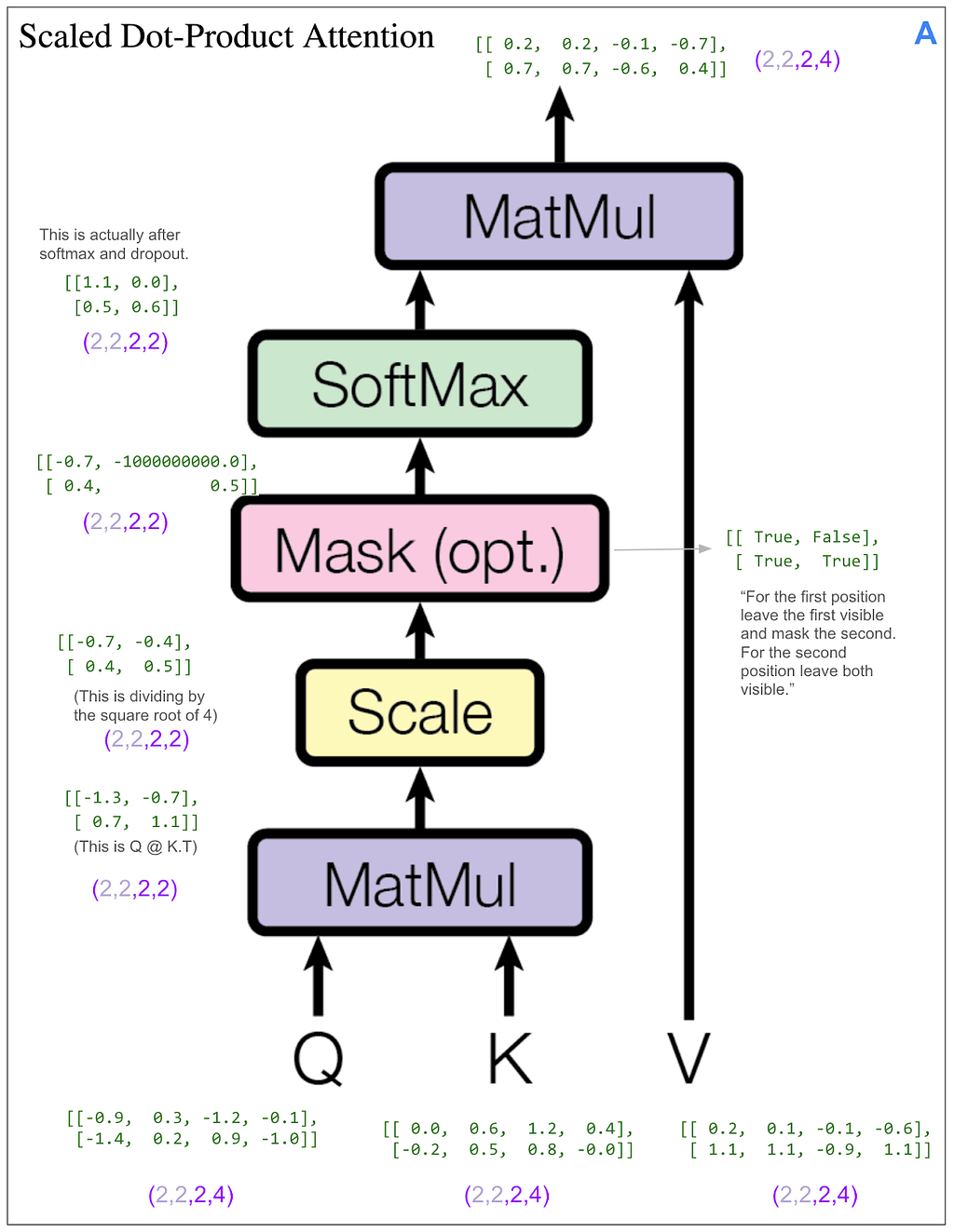

We still haven’t done anything that mixes information among positions. That’s going to happen next in the scaled dot-product attention block. The “4” dimension and the “3” dimension will finally touch.

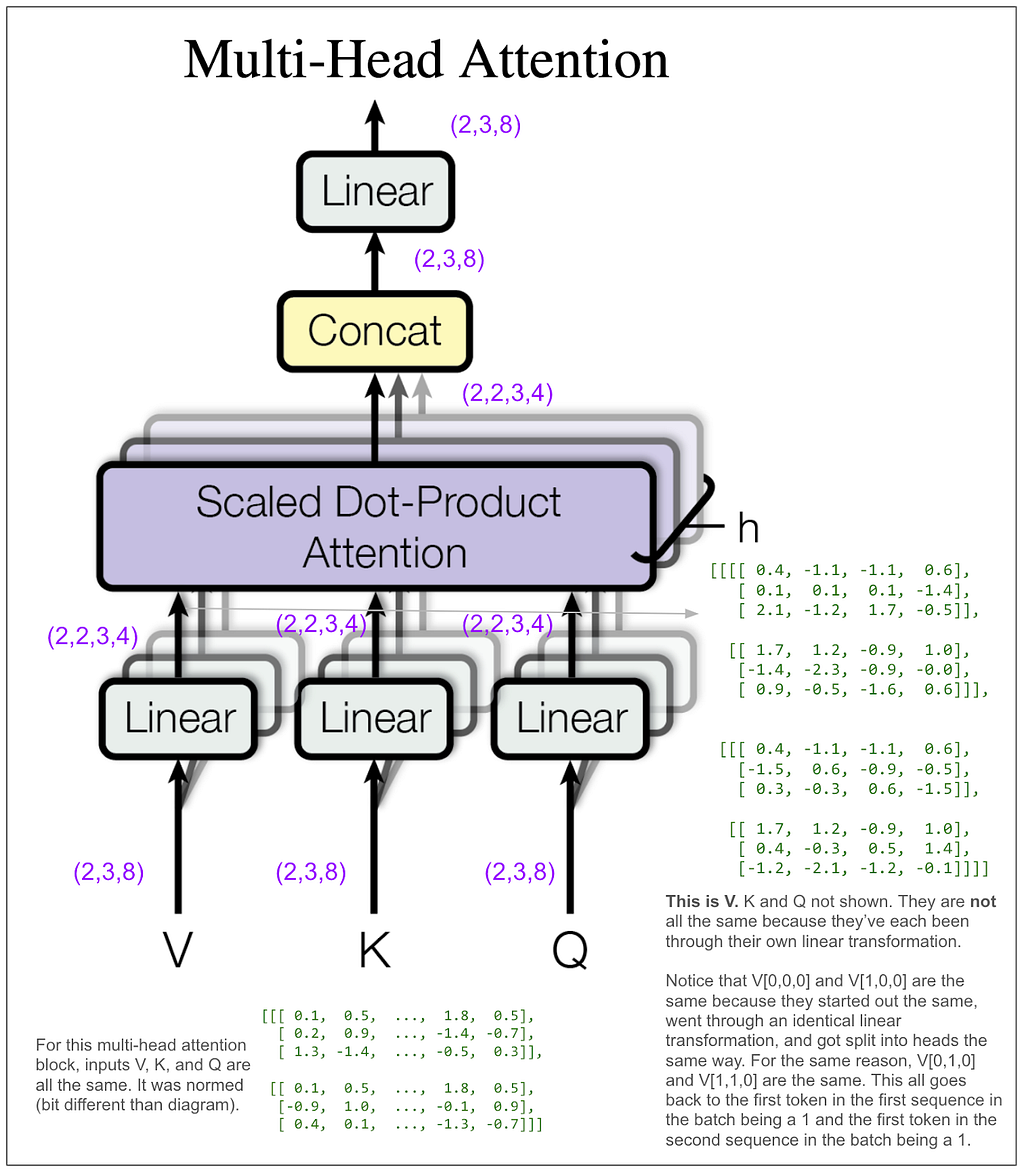

Let’s look at the tensors, but to make it easier to follow, we’ll look only at the first item in the batch and the first head. In other words, Q[0,0], K[0,0], etc. The same thing will be happening to the other three.

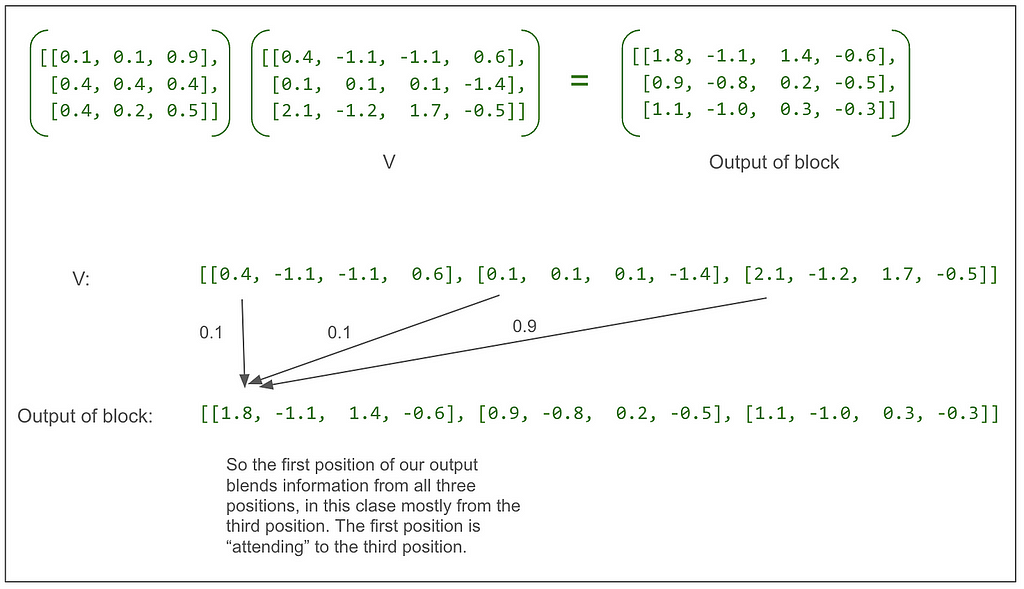

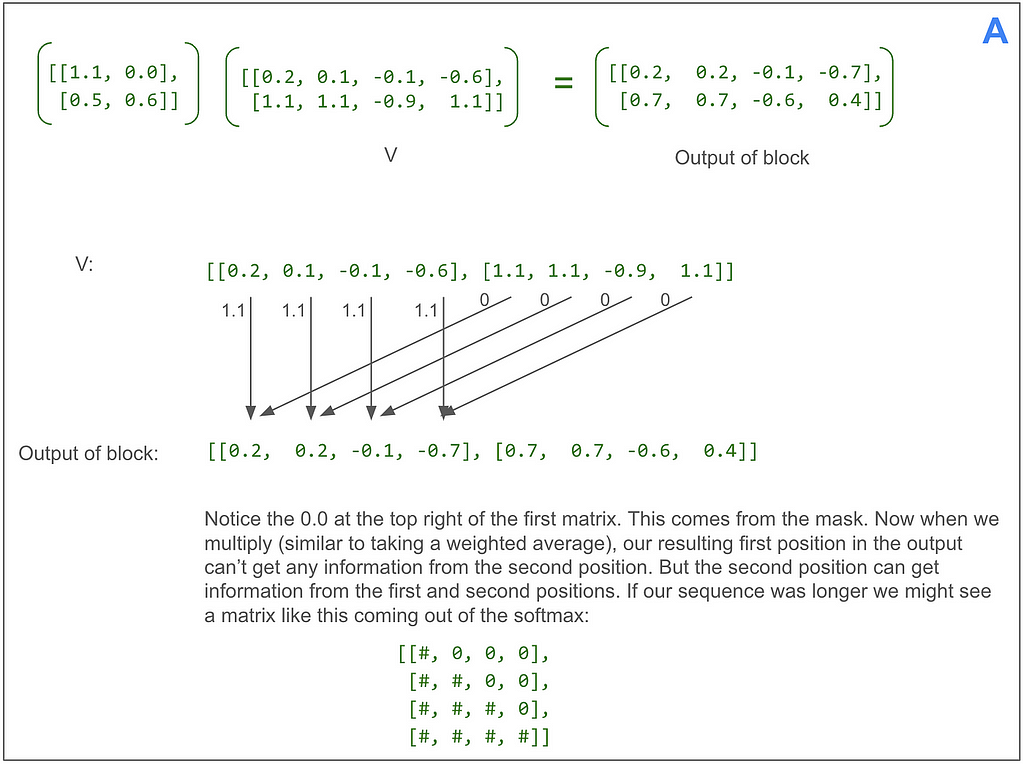

Let’s look at that final matrix multiplication between the output of the softmax and V:

Following from the very beginning, we can see that up until that multiplication, each of the three positions in V going all the way back to our original sentence “<start> dog run” has only been operated on independently. This multiplication blends in information from other positions for the first time.

Going back to the multi-head attention diagram, we can see that the concat puts the output of each head back together so each position is now represented by a vector of length 8. Notice that the 1.8 and the -1.1 in the tensor after concat but before linear match the 1.8 and -1.1 from the first two elements in the vector for the first position of the first head in the first item in the batch from the output of the scaled dot-product attention shown above. (The next two numbers match too but they’re hidden by the ellipses.)

Now let’s zoom back out to the whole encoder:

At first I thought I would want to trace the feed forward block in detail. It’s called a “position-wise feed-forward network” in the paper and I thought that meant it might bring information from one position to positions to the right of it. However, it’s not that. “Position-wise” means that it operates independently on each position. It does a linear transform on each position from 8 elements to 32, does ReLU (max of 0 and number), then does another linear transform to get back to 8. (That’s in our small example. In the base model in the paper it goes from 512 to 2048 and then back to 512. There are a lot of parameters here and probably this is where a lot of the learning happens!) The output of the feed forward is back to (2,3,8).

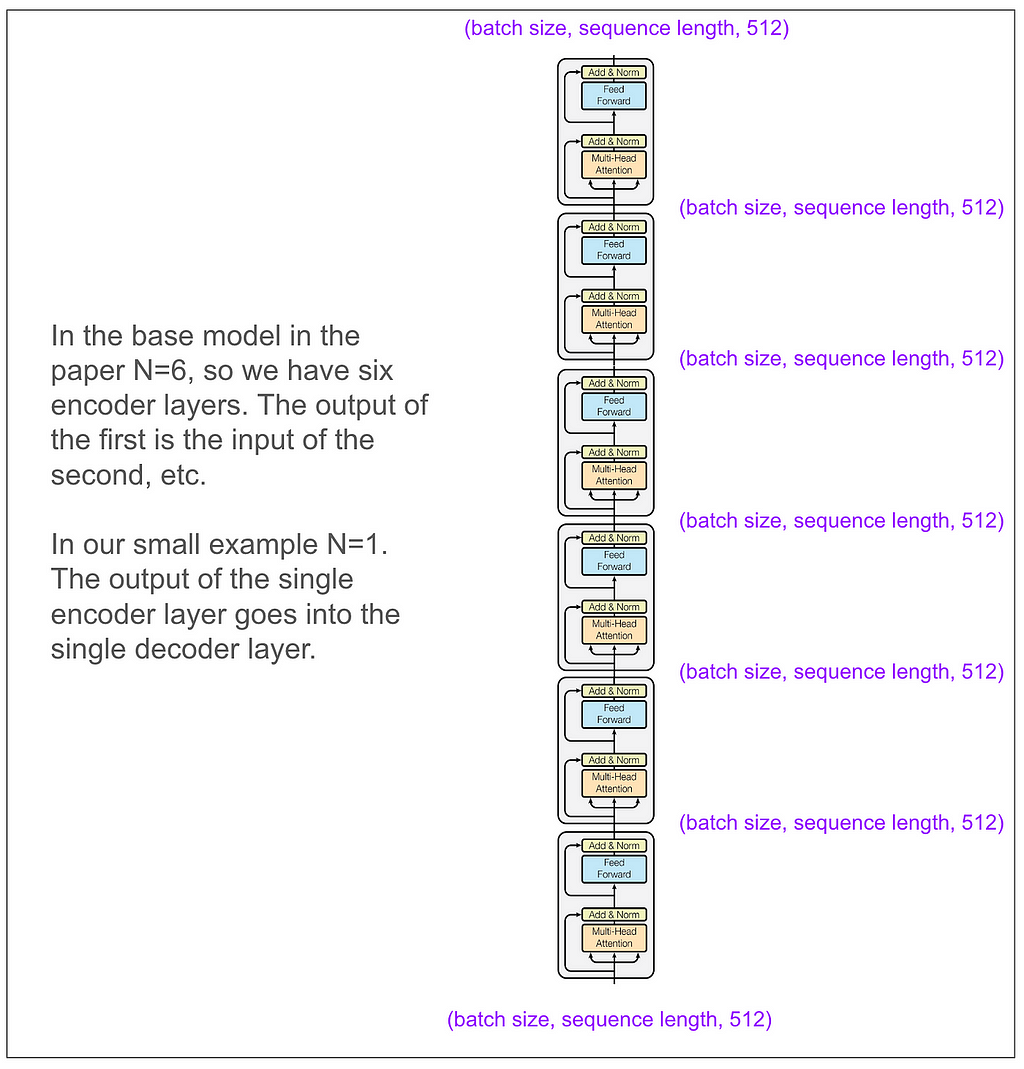

Getting away from our toy model for a second, here’s how the encoder looks in the base model in the paper. It’s very nice that the input and output dimensions match!

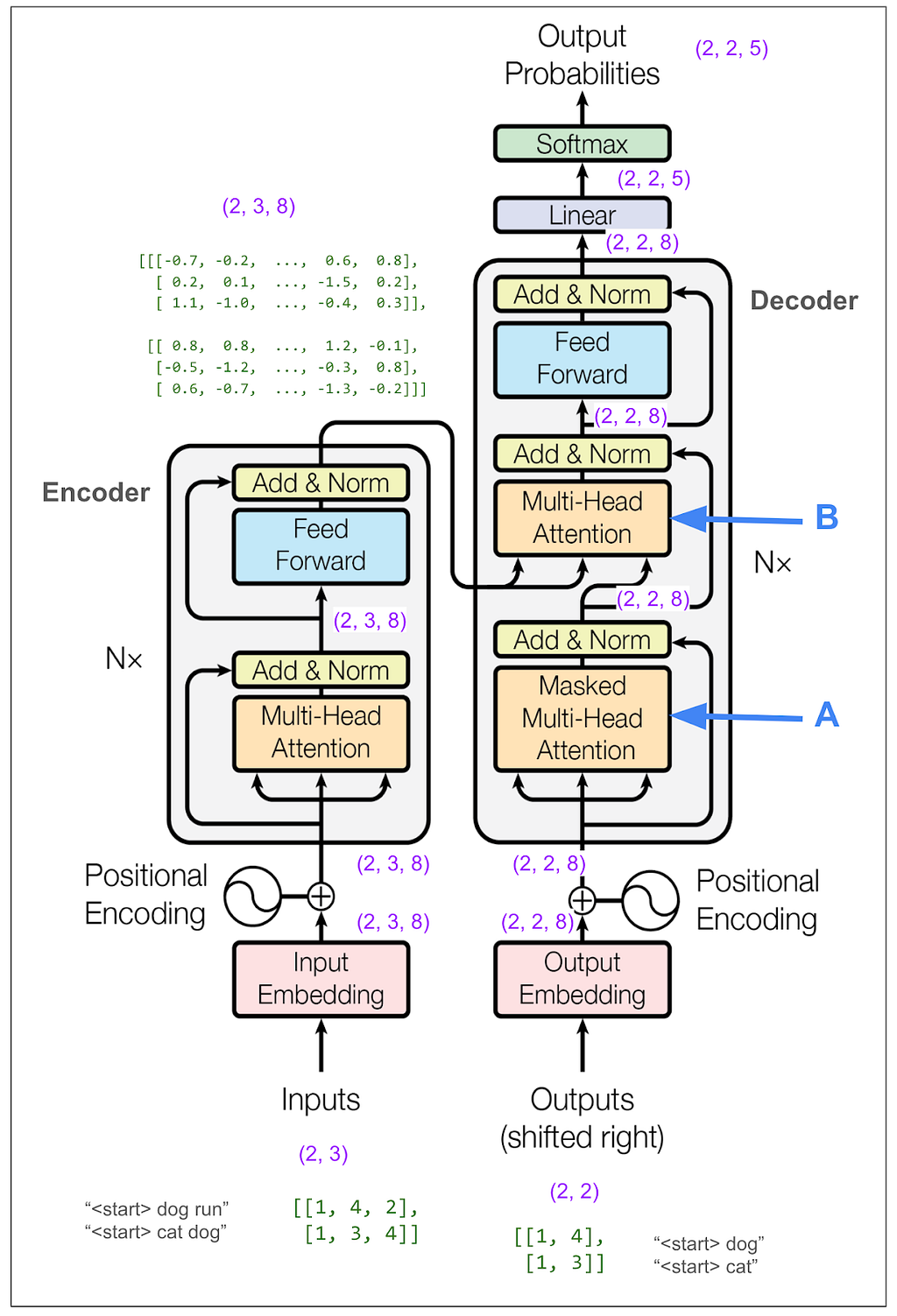

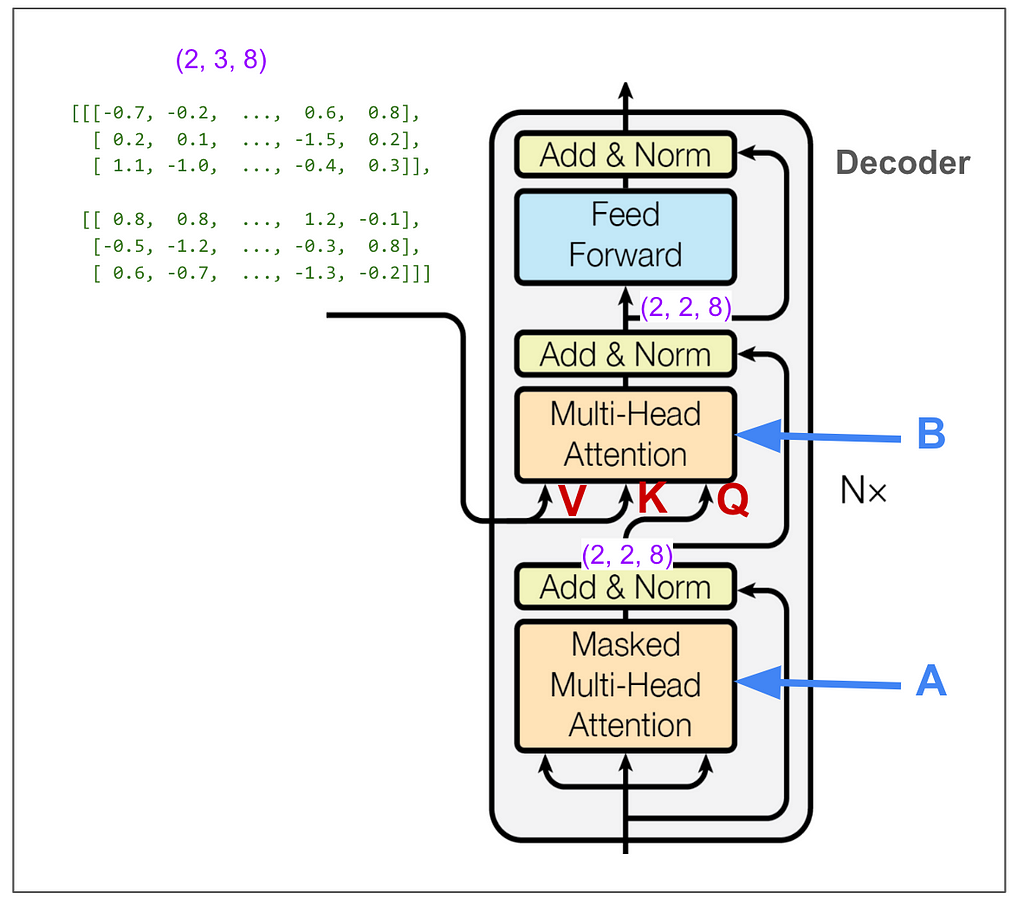

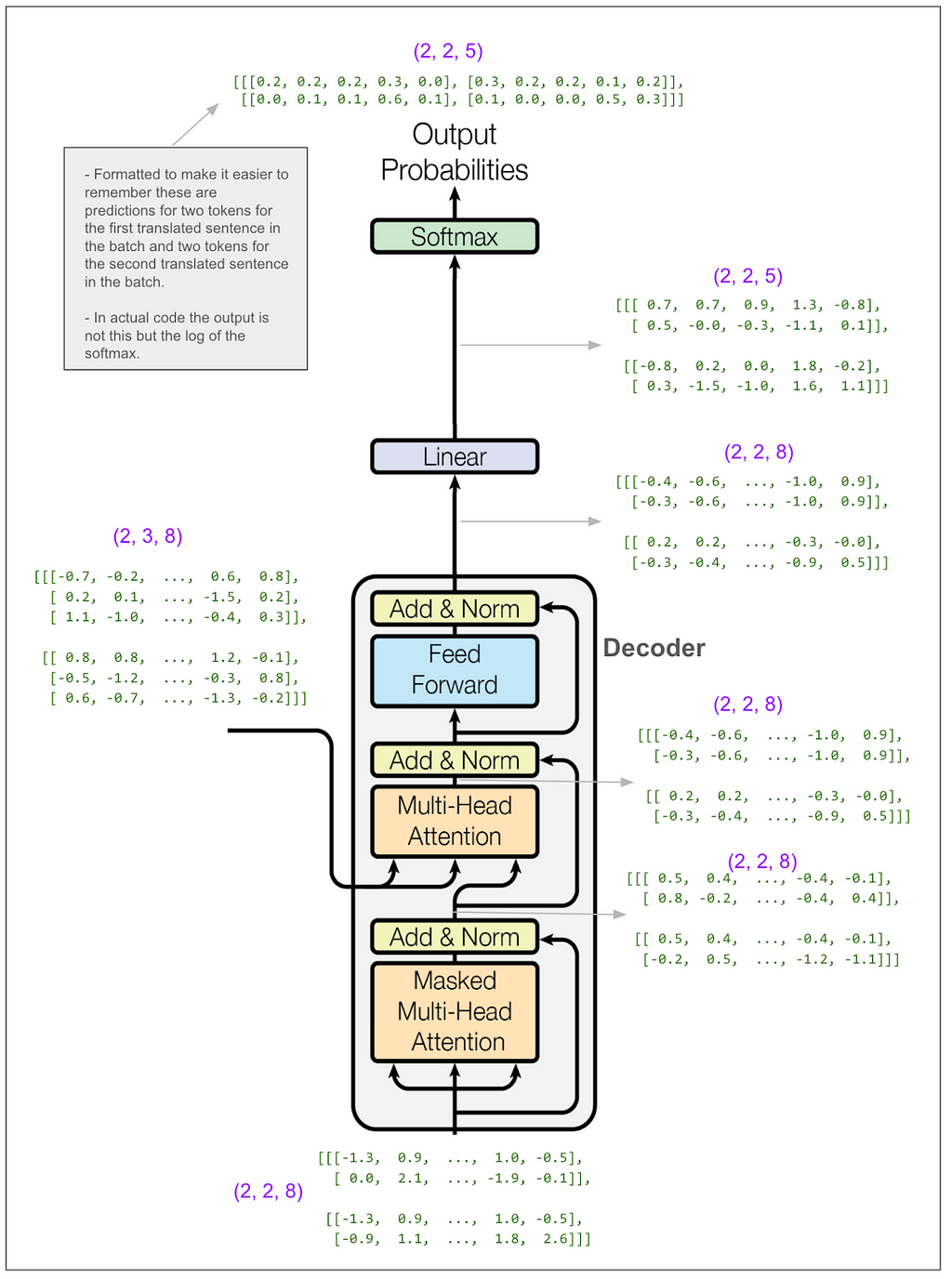

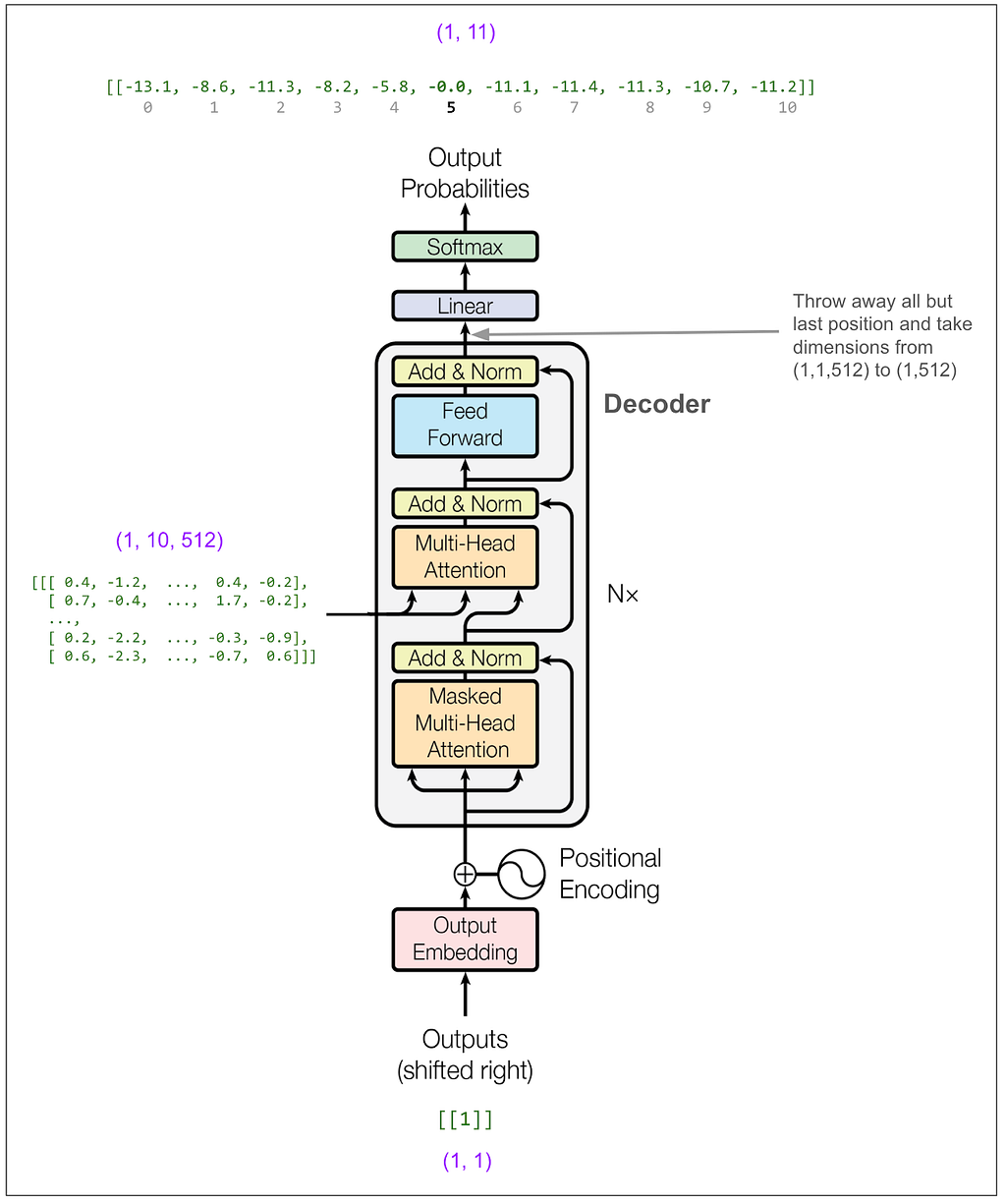

Now let’s zoom out all the way so we can look at the decoder.

We don’t need to trace most of the decoder side because it’s very similar to what we just looked at on the encoder side. However, the parts I labeled A and B are different. A is different because we do masked multi-head attention. This must be where the magic happens to not “cheat” while training. B we’ll come back to later. But first let’s hide the internal details and keep in mind the big picture of what we want to come out of the decoder.

And just to really drive home this point, suppose our English sentence is “she pet the dog” and our translated Pig Latin sentence is “eshay etpay ethay ogday”. If the model has “eshay etpay ethay” and is trying to come up with the next word, “ogday” and “atcay” are both high probability choices. Given the context of the full English sentence of “she pet the dog,” it really should be able to choose “ogday.” However, if the model could see the “ogday” during training, it wouldn’t need to learn how to predict using the context, it would just learn to copy.

Let’s see how the masking does this. We can skip ahead a bit because the first part of A works exactly the same as before where it applies linear transforms and splits things up into heads. The only difference is the dimensions coming into the scaled dot-product attention part are (2,2,2,4) instead of (2,2,3,4) because our original input sequence is of length two. Here’s the scaled dot-product attention part. As we did on the encoder side, we’re looking at only the first item in the batch and the first head.

This time we have a mask. Let’s look at the final matrix multiplication between the output of the softmax and V:

Now we’re ready to look at B, the second multi-head attention in the decoder. Unlike the other two multi-head attention blocks, we’re not feeding in three identical tensors, so we need to think about what’s V, what’s K and what’s Q. I labeled the inputs in red. We can see that V and K come from the output of the encoder and have dimension (2,3,8). Q has dimension (2,2,8).

As before, we skip ahead to the scaled dot-product attention part. It makes sense, but is also confusing, that V and K have dimensions (2,2,3,4) — two items in the batch, two heads, three positions, vectors of length four, and Q has dimension (2,2,2,4).

Even though we’re “reading from” the encoder output where the “sequence” length is three, somehow all the matrix math works out and we end up with our desired dimension (2,2,2,4). Let’s look at the final matrix multiplication:

The outputs of each multi-head attention block get added together. Let’s skip ahead to see the output from the decoder and turning that into predictions:

The linear transform takes us from (2,2,8) to (2,2,5). Think about that as reversing the embedding, except that instead of going from a vector of length 8 to the integer identifier for a single token, we go to a probability distribution over our vocabulary of 5 tokens. The numbers in our tiny example make that seem a little funny. In the paper, it’s more like going from a vector of size 512 to a vocabulary of 37,000 when they did English to German.

In a moment we’ll calculate the loss. First, though, even at a glance, you can get a feel for how the model is doing.

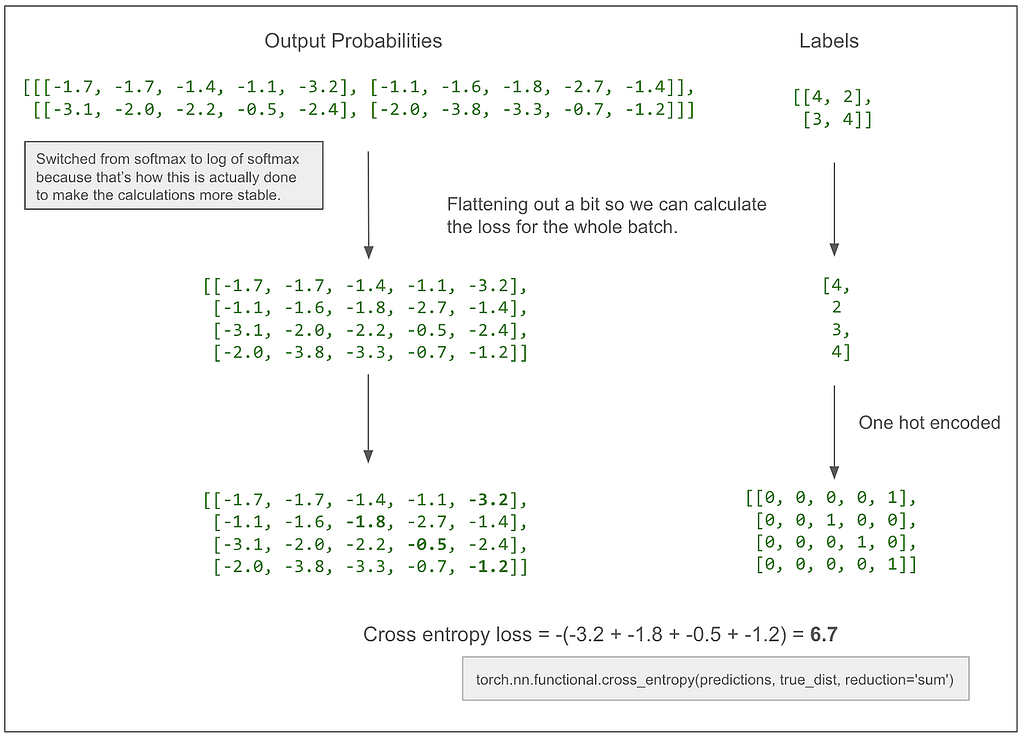

It got one token right. No surprise because this is our first training batch and it’s all just random. One nice thing about this diagram is it makes clear that this is a multi-class classification problem. The classes are the vocabulary (5 classes in this case) and, this is what I was confused about before, we make (and score) one prediction per token in the translated sentence, NOT one prediction per sentence. Let’s do the actual loss calculation.

If, for example, the -3.2 became a -2.2, our loss would decrease to 5.7, moving in the desired direction, because we want the model to learn that the correct prediction for that first token is 4.

The diagram above leaves out label smoothing. In the actual paper, the loss calculation smooths labels and uses KL Divergence loss. I think that works out to be the same or simialr to cross entropy when there is no smoothing. Here’s the same diagram as above but with label smoothing.

Let’s also take a quick look at the number of parameters being learned in the encoder and decoder:

As a sanity check, the feed forward block in our toy model has a linear transformation from 8 to 32 and back to 8 (as explained above) so that’s 8 * 32 (weights) + 32 (bias) + 32 * 8 (weights) + 8 (bias) = 52. Keep in mind that in the base model in the paper, where d-model is 512 and d-ff is 2048 and there are 6 encoders and 6 decoders there will be many more parameters.

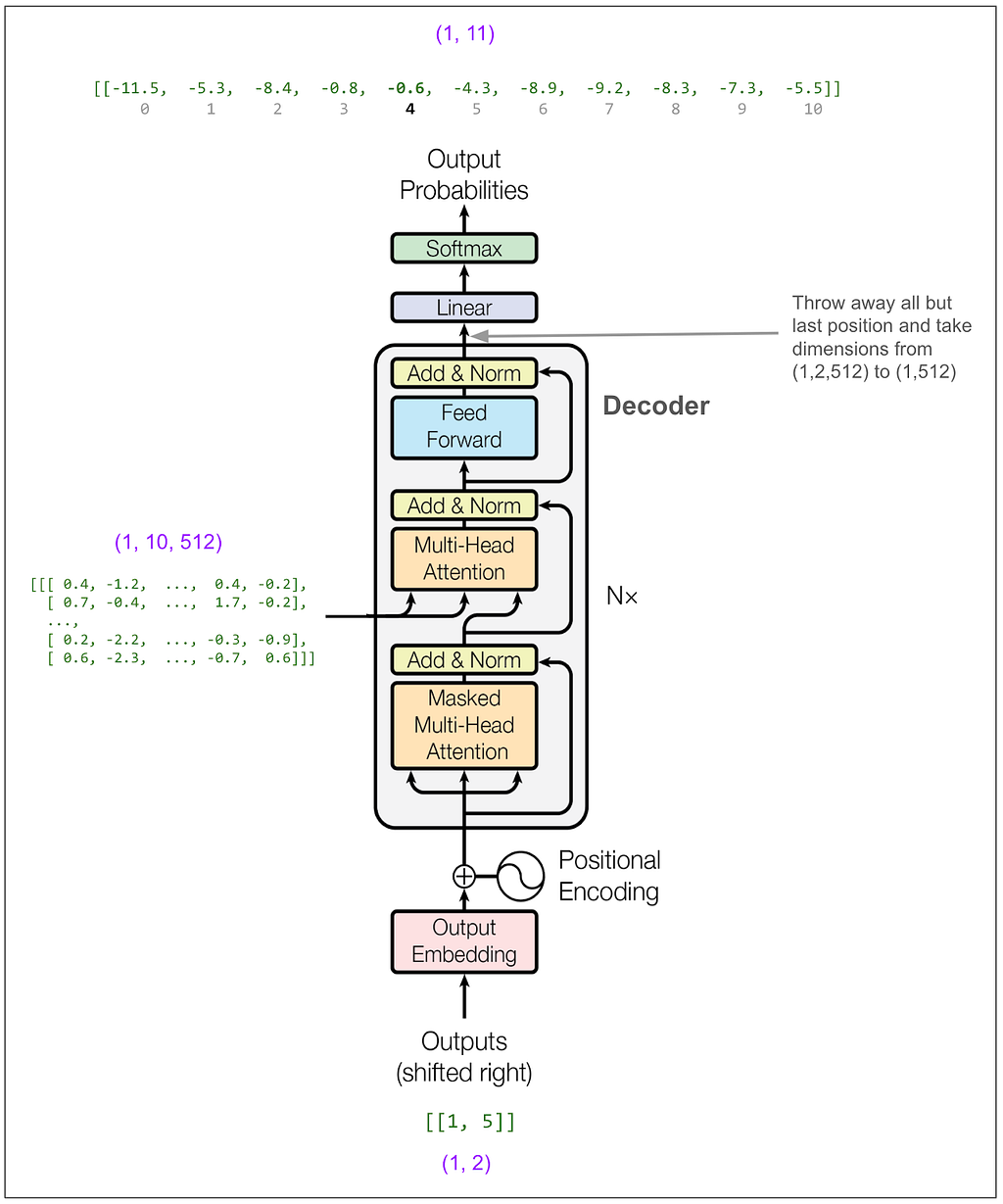

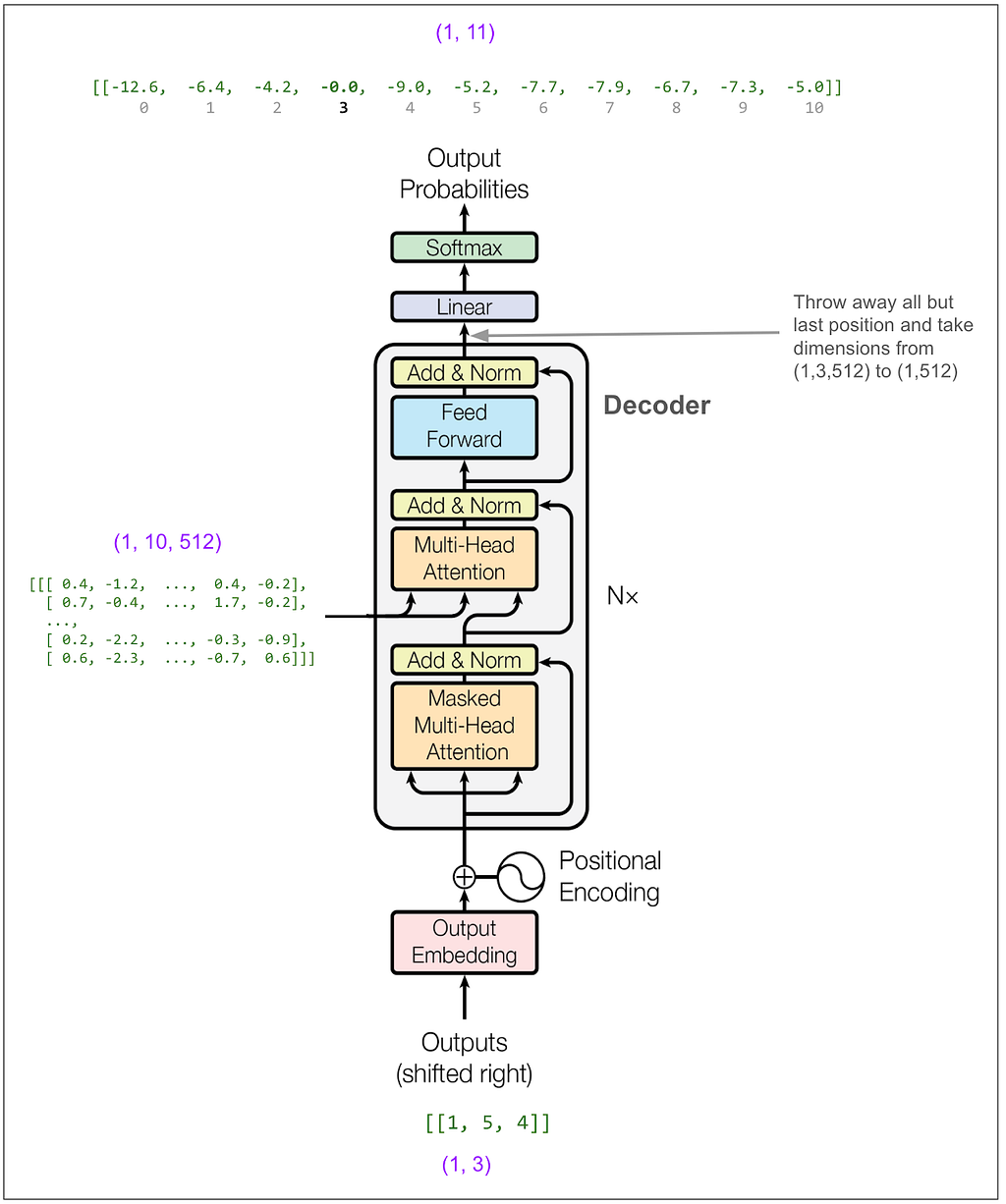

Now let’s see how we put source language text in and get translated text out. I’m still using a toy model here trained to “translate” by coping tokens, but instead of the example above, this one uses a vocabulary of size 11 and d-model is 512. (Above we had vocabulary of size 5 and d-model was 8.)

First let’s do a translation. Then we’ll see how it works.

Step one is to feed the source sentence into the encoder and hold onto its output, which in this case is a tensor with dimensions (1, 10, 512).

Step two is to feed the first token of the output into the decoder and predict the second token. We know the first token because it’s always <start> = 1.

In the paper, they use beam search with a beam size of 4, which means we would consider the 4 highest probability tokens at this point. To keep things simple I’m going to instead use greedy search. You can think of that as a beam search with a beam size of 1. So, reading off from the top of the diagram, the highest probability token is number 5. (The outputs above are logs of probabilities. The highest probability is still the highest number. In this case that’s -0.0 which is actually -0.004 but I’m only showing one decimal place. The model is really confident that 5 is correct! exp(-0.004) = 99.6%)

Now we feed [1,5] into the decoder. (If we were doing beam search with a beam size of 2, we could instead feed in a batch containing [1,5] and [1,4] which is the next most likely.)

Now we feed [1,5,4]:

And get out 3. And so on until we get a token that indicates the end of the sentence (not present in our example vocabulary) or hit a maximum length.

Now I can mostly answer my original questions.

Yes, more or less.

Each item corresponds to one translated sentence pair.

What’s a little subtle is that if this were a classification task where say the model had to take an image and output a class (house, car, rabbit, etc.), we would think of each item in the batch as contributing one “classification” to the loss calculation. Here, however, each item in the batch will contribute (number_of_tokens_in_target_sentence — 1) “classifications” to the loss calculation.

You feed the output so the model can learn to predict the translation based both on the meaning of the source sentence and the words translated so far. Although lots of things are going on in the model, the only time information moves between positions is during the attention steps. Although we do feed the translated sentence into the decoder, the first attention calculation uses a mask to zero out all information from positions beyond the one we’re predicting.

I probably should have asked what exactly is attention, because that’s the more central concept. Multi-head attention means slicing the vectors up into groups, doing attention on the groups, and then putting the groups back together. For example, if the vectors have size 512 and there are 8 heads, attention will be done independently on 8 groups each containing a full batch of the full positions, each position having a vector of size 64. If you squint, you can see how each head could end up learning to give attention to certain connected concepts as in the famous visualizations showing how a head will learn what word a pronoun references.

Right. We’re not translating a full sentence in one go and calculating overall sentence similarity or something like that. Loss is calculated just like in other multi-class classification problems. The classes are the tokens in our vocabulary. The trick is we’re independently predicting a class for every token in the target sentence using only the information we should have at that point. The labels are the actual tokens from our target sentence. Using the predictions and labels we calculate loss using cross entropy. (In reality we “smooth” our labels to account for the fact that they’re notabsolute, a synonym could sometimes work equally well.)

You can’t feed something in and have the model spit out the translation in a single evaluation. You need to use the model multiple times. You first feed the source sentence into the encoder part of the model and get an encoded version of the sentence that represents its meaning in some abstract, deep way. Then you feed that encoded information and the start token <start> into the decoder part of the model. That lets you predict the second token in the target sentence. Then you feed in the <start> and second token to predict the third. You repeat this until you have a full translated sentence. (In reality, though, you consider multiple high probability tokens for each position, feed multiple candidate sequences in each time, and pick the final translated sentence based on total probability and a length penalty.)

I’m guessing three reasons. 1) To show that the second multi-head attention block in the decoder gets some of its input from the encoder and some from the prior block in the decoder. 2) To hint at how the attention algorithm works. 3) To hint that each of the three inputs undergoes its own independent linear transformation before the actual attention happens.

It’s beautiful! I probably wouldn’t think that if it weren’t so incredibly useful. I now get the feeling people must have had when they first saw this thing working. This elegant and trainable model expressible in very little code learned how to translate human languages and beat out complicated machine translations systems built over decades. It’s amazing and clever and unbelievable. You can see how the next step was to say, forget about translated sentence pairs, let’s use this technique on every bit of text on the internet — and LLMs were born!

(I bet have some mistakes above. Please LMK.)

Unless otherwise noted, all images are by author, or contain annotations by the author on figures from Attention Is All You Need.

Tracing the Transformer in Diagrams was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Tracing the Transformer in Diagrams

Go Here to Read this Fast! Tracing the Transformer in Diagrams

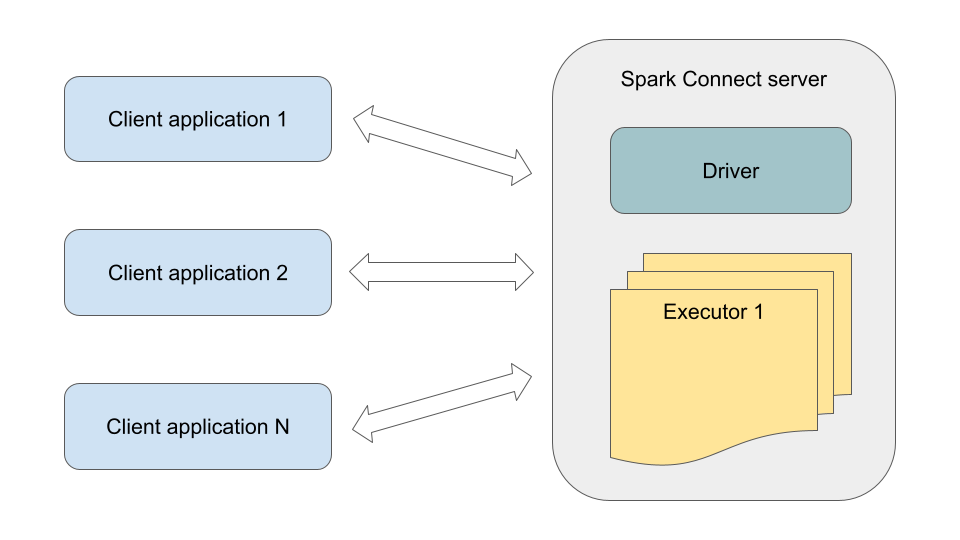

Spark Connect is a relatively new component in the Spark ecosystem that allows thin clients to run Spark applications on a remote Spark cluster. This technology can offer some benefits to Spark applications that use the DataFrame API. Spark has long allowed to run SQL queries on a remote Thrift JDBC server. However, this ability to remotely run client applications written in any supported language (Scala, Python) appeared only in Spark 3.4.

In this article, I will share our experience using Spark Connect (version 3.5). I will talk about the benefits we gained, technical details related to running Spark client applications, and some tips on how to make your Spark Connect setup more efficient and stable.

Spark is one of the key components of the analytics platform at Joom. We have a large number of internal users and over 1000 custom Spark applications. These applications run at different times of day, have different complexity, and require very different amounts of computing resources (ranging from a few cores for a couple of minutes to over 250 cores for several days). Previously, all of them were always executed as separate Spark applications (with their own driver and executors), which, in the case of small and medium-sized applications (we historically have many such applications), led to noticeable overhead. With the introduction of Spark Connect, it is now possible to set up a shared Spark Connect server and run many Spark client applications on it. Technically, the Spark Connect server is a Spark application with an embedded Spark Connect endpoint.

Here are the benefits we were able to get from this:

At the moment, we do not run long-running heavy applications on Spark Connect for the following reasons:

Therefore, heavy applications still run as separate Spark applications.

We use Spark on Kubernetes/EKS and Airflow. Some code examples will be specific to this environment.

We have too many different, constantly changing Spark applications, and it would take too much time to manually determine for each one whether it should run on Spark Connect according to our criteria or not. Furthermore, the list of applications running on Spark Connect needs to be updated regularly. For example, suppose today, some application is light enough, so we have decided to run it on Spark Connect. But tomorrow, its developers may add several large joins, making it quite heavy. Then, it will be preferable to run it as a separate Spark application. The reverse situation is also possible.

Eventually, we created a service to automatically determine how to launch each specific client application. This service analyzes the history of previous runs for each application, evaluating such metrics as Total Task Time, Shuffle Write, Disk Spill, and others (this data is collected using SparkListener). Custom parameters set for the applications by developers (e.g., memory settings of drivers and executors) are also considered. Based on this data, the service automatically determines for each application whether it should be run this time on the Spark Connect server or as a separate Spark application. Thus, all our applications should be ready to run in either of the two ways.

In our environment, each client application is built independently of the others and has its own JAR file containing the application code, as well as specific dependencies (for example, ML applications often use third-party libraries like CatBoost and so on). The problem is that the SparkSession API for Spark Connect is somewhat different from the SparkSession API used for separate Spark applications (Spark Connect clients use the spark-connect-client-jvm artifact). Therefore, we are supposed to know at the build time of each client application whether it will run via Spark Connect or not. But we do not know that. The following describes our approach to launching client applications, which eliminates the need to build and manage two versions of JAR artifact for the same application.

For each Spark client application, we build only one JAR file containing the application code and specific dependencies. This JAR is used both when running on Spark Connect and when running as a separate Spark application. Therefore, these client JARs do not contain specific Spark dependencies. The appropriate Spark dependencies (spark-core/spark-sql or spark-connect-client-jvm) will be provided later in the Java classpath, depending on the run mode. In any case, all client applications use the same Scala code to initialize SparkSession, which operates depending on the run mode. All client application JARs are built for the regular Spark API. So, in the part of the code intended for Spark Connect clients, the SparkSession methods specific to the Spark Connect API (remote, addArtifact) are called via reflection:

val sparkConnectUri: Option[String] = Option(System.getenv("SPARK_CONNECT_URI"))

val isSparkConnectMode: Boolean = sparkConnectUri.isDefined

def createSparkSession(): SparkSession = {

if (isSparkConnectMode) {

createRemoteSparkSession()

} else {

SparkSession.builder

// Whatever you need to do to configure SparkSession for a separate

// Spark application.

.getOrCreate

}

}

private def createRemoteSparkSession(): SparkSession = {

val uri = sparkConnectUri.getOrElse(throw new Exception(

"Required environment variable 'SPARK_CONNECT_URI' is not set."))

val builder = SparkSession.builder

// Reflection is used here because the regular SparkSession API does not

// contain these methods. They are only available in the SparkSession API

// version for Spark Connect.

classOf[SparkSession.Builder]

.getDeclaredMethod("remote", classOf[String])

.invoke(builder, uri)

// A set of identifiers for this application (to be used later).

val scAppId = s"spark-connect-${UUID.randomUUID()}"

val airflowTaskId = Option(System.getenv("AIRFLOW_TASK_ID"))

.getOrElse("unknown_airflow_task_id")

val session = builder

.config("spark.joom.scAppId", scAppId)

.config("spark.joom.airflowTaskId", airflowTaskId)

.getOrCreate()

// If the client application uses your Scala code (e.g., custom UDFs),

// then you must add the jar artifact containing this code so that it

// can be used on the Spark Connect server side.

val addArtifact = Option(System.getenv("ADD_ARTIFACT_TO_SC_SESSION"))

.forall(_.toBoolean)

if (addArtifact) {

val mainApplicationFilePath =

System.getenv("SPARK_CONNECT_MAIN_APPLICATION_FILE_PATH")

classOf[SparkSession]

.getDeclaredMethod("addArtifact", classOf[String])

.invoke(session, mainApplicationFilePath)

}

Runtime.getRuntime.addShutdownHook(new Thread() {

override def run(): Unit = {

session.close()

}

})

session

}

In the case of Spark Connect mode, this client code can be run as a regular Java application anywhere. Since we use Kubernetes, this runs in a Docker container. All dependencies specific to Spark Connect are packed into a Docker image used to run client applications (a minimal example of this image can be found here). The image contains not only the spark-connect-client-jvm artifact but also other common dependencies used by almost all client applications (e.g., hadoop-aws since we almost always have interaction with S3 storage on the client side).

FROM openjdk:11-jre-slim

WORKDIR /app

# Here, we copy the common artifacts required for any of our Spark Connect

# clients (primarily spark-connect-client-jvm, as well as spark-hive,

# hadoop-aws, scala-library, etc.).

COPY build/libs/* /app/

COPY src/main/docker/entrypoint.sh /app/

RUN chmod +x ./entrypoint.sh

ENTRYPOINT ["./entrypoint.sh"]

This common Docker image is used to run all our client applications when it comes to running them via Spark Connect. At the same time, it does not contain client JARs with the code of particular applications and their dependencies because there are many such applications that are constantly updated and may depend on any third-party libraries. Instead, when a particular client application is launched, the location of its JAR file is passed using an environment variable, and that JAR is downloaded during initialization in entrypoint.sh:

#!/bin/bash

set -eo pipefail

# This variable will also be used in the SparkSession builder within

# the application code.

export SPARK_CONNECT_MAIN_APPLICATION_FILE_PATH="/tmp/$(uuidgen).jar"

# Download the JAR with the code and specific dependencies of the client

# application to be run. All such JAR files are stored in S3, and when

# creating a client Pod, the path to the required JAR is passed to it

# via environment variables.

java -cp "/app/*" com.joom.analytics.sc.client.S3Downloader

${MAIN_APPLICATION_FILE_S3_PATH} ${SPARK_CONNECT_MAIN_APPLICATION_FILE_PATH}

# Launch the client application. Any MAIN_CLASS initializes a SparkSession

# at the beginning of its execution using the code provided above.

java -cp ${SPARK_CONNECT_MAIN_APPLICATION_FILE_PATH}:"/app/*" ${MAIN_CLASS} "$@"

Finally, when it comes time to launch the application, our custom SparkAirflowOperator automatically determines the execution mode (Spark Connect or separate) based on the statistics of previous runs of this application.

Not all existing Spark applications can be successfully executed on Spark Connect since its SparkSession API is different from the SparkSession API used for separate Spark applications. For example, if your code uses sparkSession.sparkContext or sparkSession.sessionState, it will fail in the Spark Connect client because the Spark Connect version of SparkSession does not have these properties.

In our case, the most common cause of problems was using sparkSession.sessionState.catalog and sparkSession.sparkContext.hadoopConfiguration. In some cases, sparkSession.sessionState.catalog can be replaced with sparkSession.catalog, but not always. sparkSession.sparkContext.hadoopConfiguration may be needed if the code executed on the client side contains operations on your data storage, such as this:

def delete(path: Path, recursive: Boolean = true)

(implicit hadoopConfig: Configuration): Boolean = {

val fs = path.getFileSystem(hadoopConfig)

fs.delete(path, recursive)

}

Fortunately, it is possible to create a standalone SessionCatalog for use within the Spark Connect client. In this case, the class path of the Spark Connect client must also include org.apache.spark:spark-hive_2.12, as well as libraries for interacting with your storage (since we use S3, so in our case, it is org.apache.hadoop:hadoop-aws).

import org.apache.spark.SparkConf

import org.apache.hadoop.conf.Configuration

import org.apache.spark.sql.hive.StandaloneHiveExternalCatalog

import org.apache.spark.sql.catalyst.catalog.{ExternalCatalogWithListener, SessionCatalog}

// This is just an example of what the required properties might look like.

// All of them should already be set for existing Spark applications in one

// way or another, and their complete list can be found in the UI of any

// running separate Spark application on the Environment tab.

val sessionCatalogConfig = Map(

"spark.hadoop.hive.metastore.uris" -> "thrift://metastore.spark:9083",

"spark.sql.catalogImplementation" -> "hive",

"spark.sql.catalog.spark_catalog" -> "org.apache.spark.sql.delta.catalog.DeltaCatalog",

)

val hadoopConfig = Map(

"hive.metastore.uris" -> "thrift://metastore.spark:9083",

"fs.s3.impl" -> "org.apache.hadoop.fs.s3a.S3AFileSystem",

"fs.s3a.aws.credentials.provider" -> "com.amazonaws.auth.DefaultAWSCredentialsProviderChain",

"fs.s3a.endpoint" -> "s3.amazonaws.com",

// and others...

)

def createStandaloneSessionCatalog(): (SessionCatalog, Configuration) = {

val sparkConf = new SparkConf().setAll(sessionCatalogConfig)

val hadoopConfiguration = new Configuration()

hadoopConfig.foreach {

case (key, value) => hadoopConfiguration.set(key, value)

}

val externalCatalog = new StandaloneHiveExternalCatalog(

sparkConf, hadoopConfiguration)

val sessionCatalog = new SessionCatalog(

new ExternalCatalogWithListener(externalCatalog)

)

(sessionCatalog, hadoopConfiguration)

}

You also need to create a wrapper for HiveExternalCatalog accessible in your code (because the HiveExternalCatalog class is private to the org.apache.spark package):

package org.apache.spark.sql.hive

import org.apache.hadoop.conf.Configuration

import org.apache.spark.SparkConf

class StandaloneHiveExternalCatalog(conf: SparkConf, hadoopConf: Configuration)

extends HiveExternalCatalog(conf, hadoopConf)

Additionally, it is often possible to replace code that does not work on Spark Connect with an alternative, for example:

Unfortunately, it is not always easy to fix a particular Spark application to make it work as a Spark Connect client. For example, third-party Spark components used in the project pose a significant risk, as they are often written without considering compatibility with Spark Connect. Since, in our environment, any Spark application can be automatically launched on Spark Connect, we found it reasonable to implement a fallback to a separate Spark application in case of failure. Simplified, the logic is as follows:

This approach is somewhat simpler than maintaining code that identifies the reasons for failures from logs, and it works well in most cases. Attempts to run incompatible applications on Spark Connect usually do not have any significant negative impact because, in the vast majority of cases, if an application is incompatible with Spark Connect, it fails immediately after launch without wasting time and resources. However, it is important to mention that all our applications are idempotent.

As I already mentioned, we collect Spark statistics for each Spark application (most of our platform optimizations and alerts depend on it). This is easy when the application runs as a separate Spark application. In the case of Spark Connect, the stages and tasks of each client application need to be separated from the stages and tasks of all other client applications that run simultaneously within the shared Spark Connect server.

You can pass any identifiers to the Spark Connect server by setting custom properties for the client SparkSession:

val session = builder

.config("spark.joom.scAppId", scAppId)

.config("spark.joom.airflowTaskId", airflowTaskId)

.getOrCreate()

Then, in the SparkListener on the Spark Connect server side, you can retrieve all the passed information and associate each stage/task with the particular client application.

class StatsReportingSparkListener extends SparkListener {

override def onStageSubmitted(stageSubmitted: SparkListenerStageSubmitted): Unit = {

val stageId = stageSubmitted.stageInfo.stageId

val stageAttemptNumber = stageSubmitted.stageInfo.attemptNumber()

val scAppId = stageSubmitted.properties.getProperty("spark.joom.scAppId")

// ...

}

}

Here, you can find the code for the StatsReportingSparkListener we use to collect statistics. You might also be interested in this free tool for finding performance issues in your Spark applications.

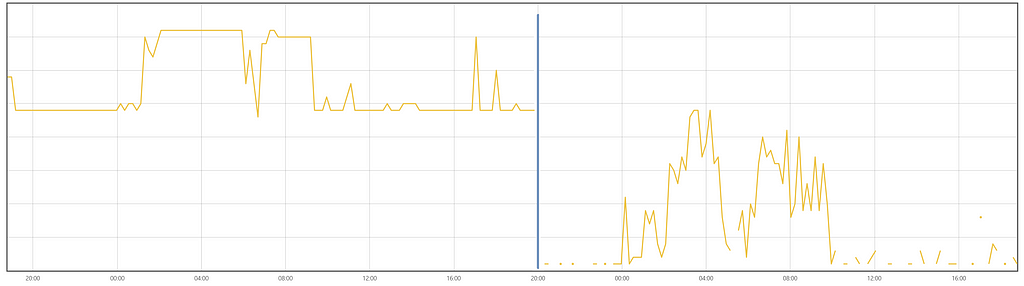

The Spark Connect server is a permanently running Spark application where a large number of clients can run their Jobs. Therefore, it can be worthwhile to customize its properties, which can make it more reliable and prevent waste of resources. Here are some settings that turned out to be useful in our case:

// Using dynamicAllocation is important for the Spark Connect server

// because the workload can be very unevenly distributed over time.

spark.dynamicAllocation.enabled: true // default: false

// This pair of parameters is responsible for the timely removal of idle

// executors:

spark.dynamicAllocation.cachedExecutorIdleTimeout: 5m // default: infinity

spark.dynamicAllocation.shuffleTracking.timeout: 5m // default: infinity

// To create new executors only when the existing ones cannot handle

// the received tasks for a significant amount of time. This allows you

// to save resources when a small number of tasks arrive at some point

// in time, which do not require many executors for timely processing.

// With increased schedulerBacklogTimeout, unnecessary executors do not

// have the opportunity to appear by the time all incoming tasks are

// completed. The time to complete the tasks increases slightly with this,

// but in most cases, this increase is not significant.

spark.dynamicAllocation.schedulerBacklogTimeout: 30s // default: 1s

// If, for some reason, you need to stop the execution of a client

// application (and free up resources), you can forcibly terminate the client.

// Currently, even explicitly closing the client SparkSession does not

// immediately end the execution of its corresponding Jobs on the server.

// They will continue to run for a duration equal to 'detachedTimeout'.

// Therefore, it may be reasonable to reduce it.

spark.connect.execute.manager.detachedTimeout: 2m // default: 5m

// We have encountered a situation when killed tasks may hang for

// an unpredictable amount of time, leading to bad consequences for their

// executors. In this case, it is better to remove the executor on which

// this problem occurred.

spark.task.reaper.enabled: true // default: false

spark.task.reaper.killTimeout: 300s // default: -1

// The Spark Connect server can run for an extended period of time. During

// this time, executors may fail, including for reasons beyond our control

// (e.g., AWS Spot interruptions). This option is needed to prevent

// the entire server from failing in such cases.

spark.executor.maxNumFailures: 1000

// In our experience, BroadcastJoin can lead to very serious performance

// issues in some cases. So, we decided to disable broadcasting.

// Disabling this option usually does not result in a noticeable performance

// degradation for our typical applications anyway.

spark.sql.autoBroadcastJoinThreshold: -1 // default: 10MB

// For many of our client applications, we have to add an artifact to

// the client session (method sparkSession.addArtifact()).

// Using 'useFetchCache=true' results in double space consumption for

// the application JAR files on executors' disks, as they are also duplicated

// in a local cache folder. Sometimes, this even causes disk overflow with

// subsequent problems for the executor.

spark.files.useFetchCache: false // default: true

// To ensure fair resource allocation when multiple applications are

// running concurrently.

spark.scheduler.mode: FAIR // default: FIFO

For example, after we adjusted the idle timeout properties, the resource utilization changed as follows:

In our environment, the Spark Connect server (version 3.5) may become unstable after a few days of continuous operation. Most often, we face randomly hanging client application jobs for an infinite amount of time, but there may be other problems as well. Also, over time, the probability of a random failure of the entire Spark Connect server increases dramatically, and this can happen at the wrong moment.

As this component evolves, it will likely become more stable (or we will find out that we have done something wrong in our Spark Connect setup). But currently, the simplest solution has turned out to be a daily preventive restart of the Spark Connect server at a suitable moment (i.e., when no client applications are running on it). An example of what the restart code might look like can be found here.

In this article, I described our experience using Spark Connect to run a large number of diverse Spark applications.

To summarize the above:

Overall, we have had a positive experience using Spark Connect in our company. We will continue to watch the development of this technology with great interest, and there is a plan to expand its use.

Adopting Spark Connect was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Adopting Spark Connect

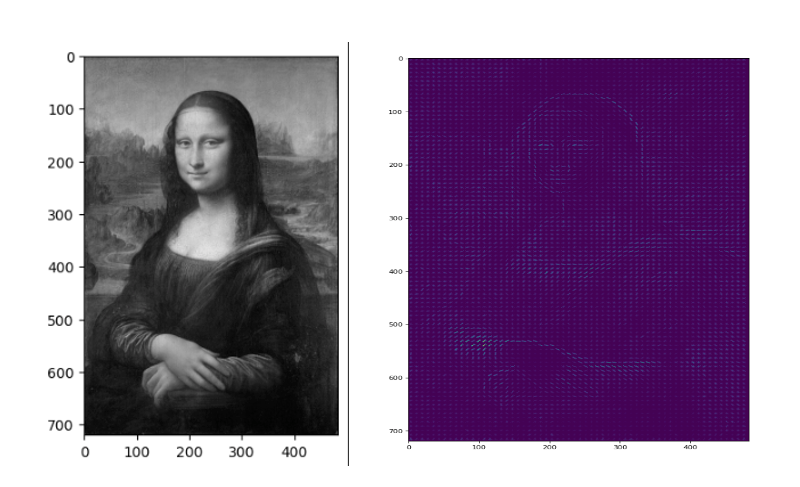

Histogram of Oriented Gradients was first introduced by Navneet Dalal and Bill Trigs in their CVPR paper [“Histograms of Oriented Gradients for Human Detection”]

There are many different algorithms for feature extraction, depending on the type of features it focuses on, such as texture, color, or shape, whether it describes the image as a whole or just local information.

The HOG algorithm is one of the most essential techniques in feature extraction as it is a fundamental step for object detection and recognition tasks.

In this article, we will explore the principles and implementation of the HOG algorithm.

What is a Histogram of Oriented Gradients (HOG)?

The HOG is a global descriptor (feature extraction) method applied to each pixel within an image to extract neighborhood information(neighborhood of pixel) like texture and compress/abstract that information from a given image into a reduced/condensed vector form called a feature vector that could describe the feature of this image which is very useful when it came to captures edge and gradient structures in an image. Moreover, we can compare this processed image for object recognition or object detection.

1- Calculate the Gradient Image

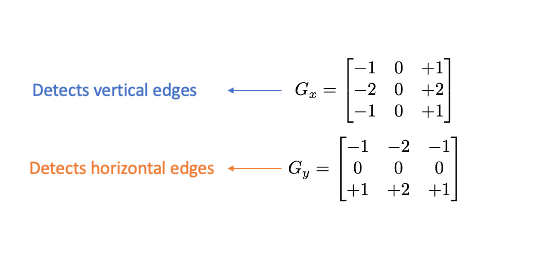

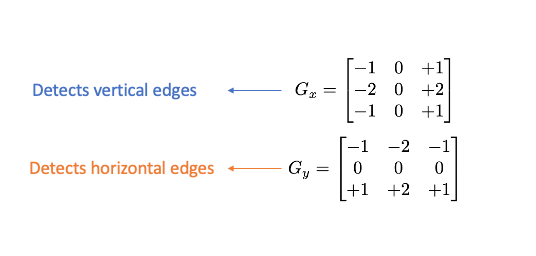

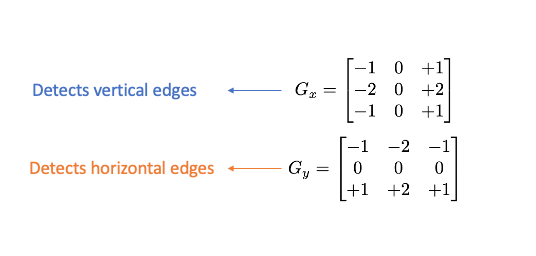

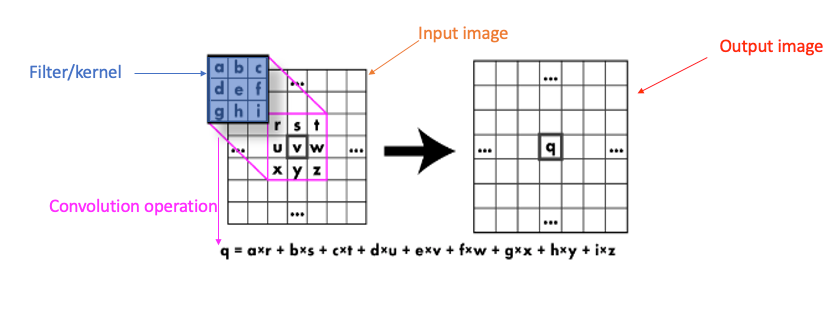

To extract and capture edge information, we apply a Sobel operator consisting of two small matrices (filter/kernel) that measure the difference in intensity at grayscale(wherever there is a sharp change in intensity).

We apply this kernel on pixels of the image by convolution :

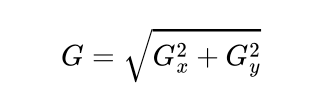

After applying Sobel Kernel to the image now, we can calculate the magnitude and orientation of an image :

The gradients determine the edges’ strength (magnitude) and direction (orientation) at a specific point. The edge direction is perpendicular to the gradient vector’s direction at the location where the gradient is calculated. In other words, the length and direction of the vector.

2- Calculate the Histogram of the Gradient

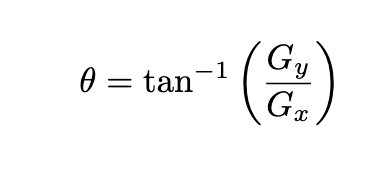

For each pixel, we have two values: magnitude and orientation. To combine this information into something meaningful, we use a histogram, which helps organize and interpret these values effectively.

We create a histogram of gradients in these cells ( 8 x 8 ), which have 64 values distributed to bins in the histogram, which are quantized into 9 bins each of 20 degrees ( spanning 0° to 180°). Each pixel’s magnitude value (edge strength) is added as a “vote” to the corresponding orientation bin so the histogram’s peaks reveal the pixel’s dominant orientations.

3- Normalization

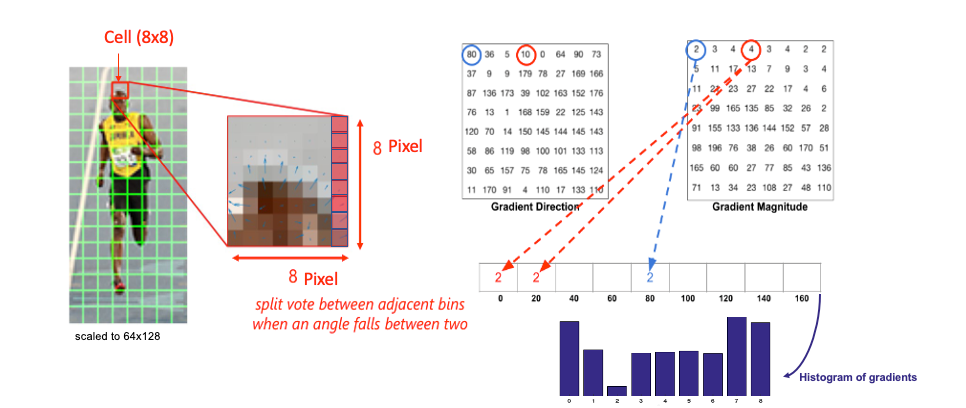

Since gradient magnitudes depend on lighting conditions, normalization scales the histograms to reduce the influence of lighting and contrast variations.

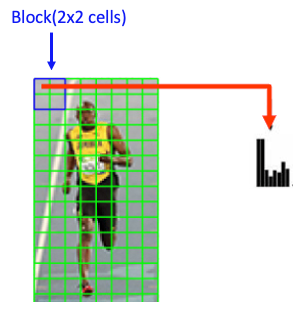

Each block typically consists of a grid of cells (2×2). This block slides across the image with overlap, meaning each cell is included in multiple blocks.

Each block has 4 histograms ( 4 cells ), which can be concatenated to form a 4(cells) x 9 (bins )x1 vector = 36 x 1 element vector for each block; overall image in the example: 7 rows x 15 cols = 7x15x36=3780 elements. This feature vector is called the HOG descriptor, and the resulting vector is used as input for classification algorithms like SVM.

# we will be using the hog descriptor from skimage since it has visualization tools available for this example

import cv2

import matplotlib.pyplot as plt

from skimage import color, feature, exposure

# Load the image

img = cv2.imread('The path of the image ..')

# Convert the original image to RGB

img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

# Convert the original image to gray scale

img_gray = cv2.cvtColor(img_rgb,cv2.COLOR_RGB2GRAY)

plt.imshow(img_gray,cmap="gray")

# These are the usual main parameters to tune in the HOG algorithm.

(H,Himage) = feature.hog(img_gray, orientations=9, pixels_per_cell=(8,8), cells_per_block=(2,2),visualize=True)

Himage = exposure.rescale_intensity(Himage, out_range=(0,255))

Himage = Himage.astype("uint8")

fig = plt.figure(figsize=(15, 12), dpi=80)

plt.imshow(Himage)

As you can see, HOG effectively captures the general face shape (eyes, nose, head) and hands. This is due to HOG’s focus on gradient information across the image, making it highly effective for detecting lines and shapes. Additionally, we can observe the dominant gradients and their intensity at each point in the image.

(HOG) algorithm for human detection in an image

HOG is a popular feature descriptor in computer vision, particularly effective for detecting shapes and outlines, such as the human form. This code leverages OpenCV’s built-in HOG descriptor and a pre-trained Support Vector Machine (SVM) model specifically trained to detect people

# Load the image

img = cv2.imread('The path of the image ..')

# Convert the original image to RGB

img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

# Convert the original image to gray scale

img_gray = cv2.cvtColor(img_rgb,cv2.COLOR_RGB2GRAY)

#Initialize the HOG descriptor and set the default SVM detector for people

hog = cv2.HOGDescriptor()

hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())

# Detect people in the image using the HOG descriptor

bbox, weights = hog.detectMultiScale(img_gray ,winStride = (2,2), padding=(10,10),scale=1.02)

# Draw bounding boxes around detected people

for (x, y, w, h) in bbox:

cv2.rectangle(img_rgb, (x, y),

(x + w, y + h),

(255, 0, 0), 4)

plt.imshow(img_rgb)

After loading the test image, we use HOG’s detectMultiScale method to detect people, with winStride set to skip one pixel per step, improving speed by trading off a bit of accuracy, which is crucial in object detection since it is a computationally intensive process. Although the detector may identify all people, sometimes there’s a false positive where part of one person’s body is detected as another person. To fix this, we can apply Non-Maxima Suppression (NMS) to eliminate overlapping bounding boxes, though bad configuration(winterize, padding, scale) can occasionally fail to detect objects.

wrap –up The HOG descriptor computation involves several steps:

1. Gradient Computation

2. Orientation Binning

3. Descriptor Blocks

4. Normalization

5. Feature Vector Formation

In this article, we explored the math behind HOG and how easy it is to apply in just a few lines of code, thanks to OpenCV! I hope you found this guide helpful and enjoyed working through the concepts. Thanks for reading, and see you in the next post!

Histogram of Oriented Gradients (HOG) in Computer Vision was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Histogram of Oriented Gradients (HOG) in Computer Vision

Go Here to Read this Fast! Histogram of Oriented Gradients (HOG) in Computer Vision

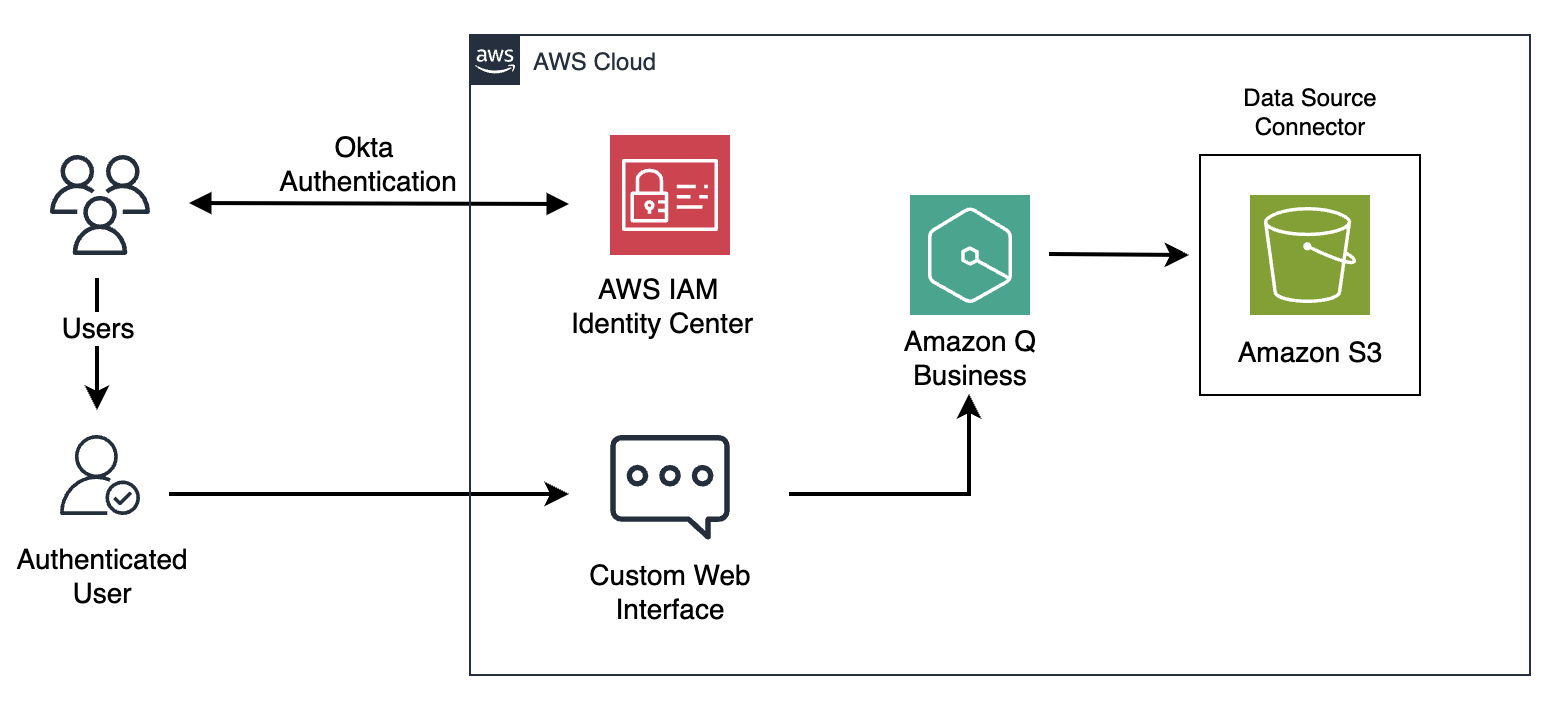

Originally appeared here:

Unleash the power of generative AI with Amazon Q Business: How CCoEs can scale cloud governance best practices and drive innovation

Until recently, AI models were narrow in scope and limited to understanding either language or specific images, but rarely both.

In this respect, general language models like GPTs were a HUGE leap since we went from specialized models to general yet much more powerful models.

But even as language models progressed, they remained separate from computer vision аreas, each domain advancing in silos without bridging the gap. Imagine what would happen if you could only listen but not see, or vice versa.

My name is Roman Isachenko, and I’m part of the Computer Vision team at Yandex.

In this article, I’ll discuss visual language models (VLMs), which I believe are the future of compound AI systems.

I’ll explain the basics and training process for developing a multimodal neural network for image search and explore the design principles, challenges, and architecture that make it all possible.

Towards the end, I’ll also show you how we used an AI-powered search product to handle images and text and what changed with the introduction of a VLM.

Let’s begin!

LLMs with billions or even hundreds of billions of parameters are no longer a novelty.

We see them everywhere!

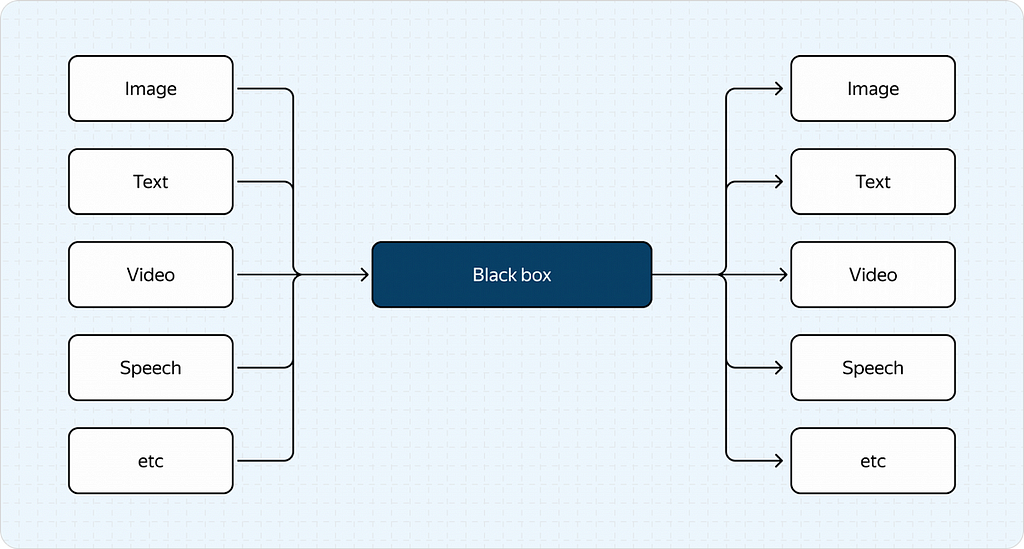

The next key focus in LLM research has been more inclined towards developing multimodal models (omni-models) — models that can understand and process multiple data types.

As the name suggests, these models can handle more than just text. They can also analyze images, video, and audio.

But why are we doing this?

Jack of all trades, master of none, oftentimes better than master of one.

In recent years, we’ve seen a trend where general approaches dominate narrow ones.

Think about it.

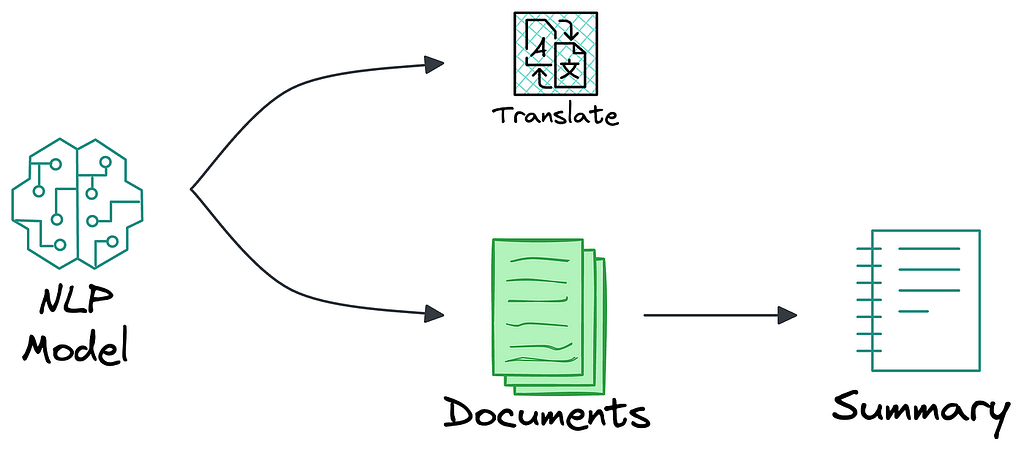

Today’s language-driven ML models have become relatively advanced and general-purpose. One model can translate, summarize, identify speech tags, and much more.

But earlier, these models used to be task-specific (we have them now as well, but fewer than before).

In other words, today’s NLP models (LLMs, specifically) can serve multiple purposes that previously required developing highly specific solutions.

Second, this approach allows us to exponentially scale the data available for model training, which is crucial given the finite amount of text data. Earlier, however, one would need task-specific data:

Third, we believe that training a multimodal model can enhance the performance of each data type, just like it does for humans.

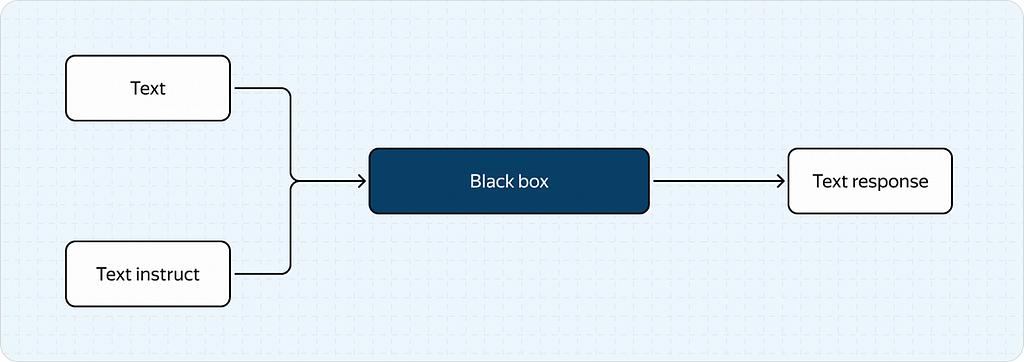

For this article, we’ll simplify the “black box” concept to a scenario where the model receives an image and some text (which we call the “instruct”) as input and outputs only text (the response).

As a result, we end up with a much simpler process as shown below:

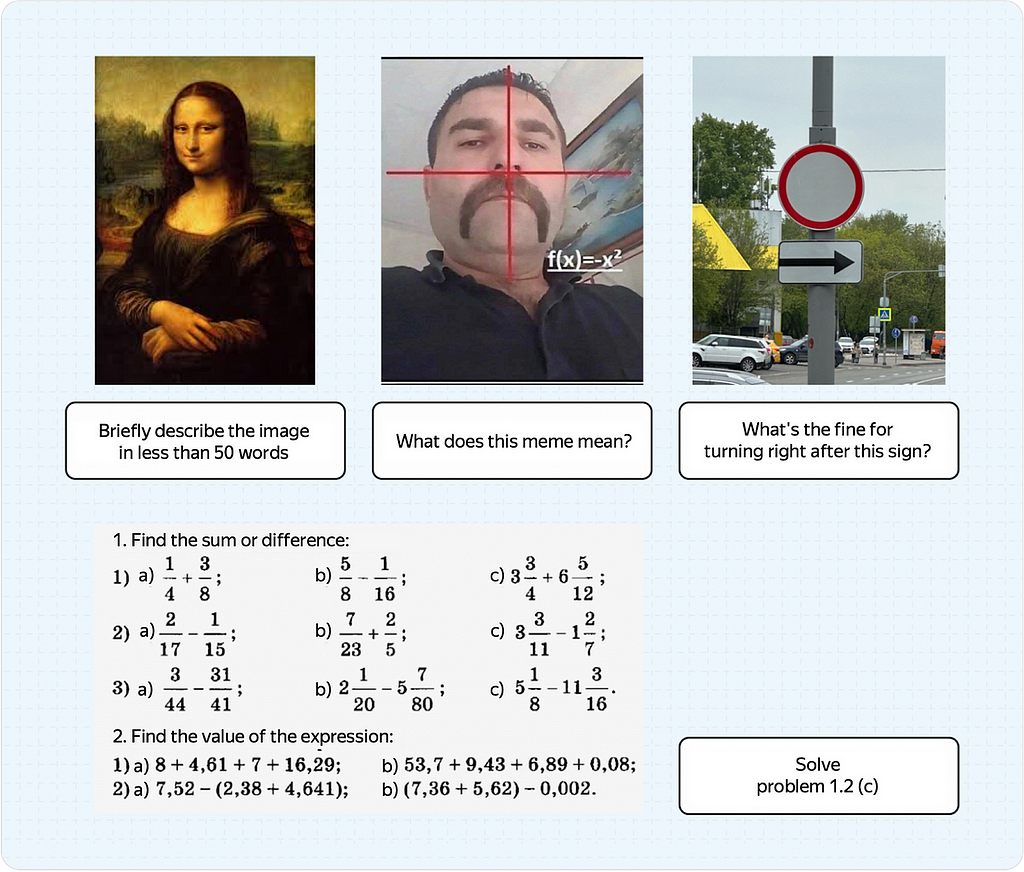

We’ll discuss image-discriminative models that analyze and interpret what an image depicts.

Before delving into the technical details, consider the problems these models can solve.

A few examples are shown below:

VLMs are a new frontier in computer vision that can solve various fundamental CV-related tasks (classification, detection, description) in zero-shot and one-shot modes.

While VLMs may not excel in every standard task yet, they are advancing quickly.

Now, let’s understand how they work.

These models typically have three main components:

The pipeline is pretty straightforward:

The adapter is the most exciting and important part of the model, as it precisely facilitates the communication/interaction between the LLM and the image encoder.

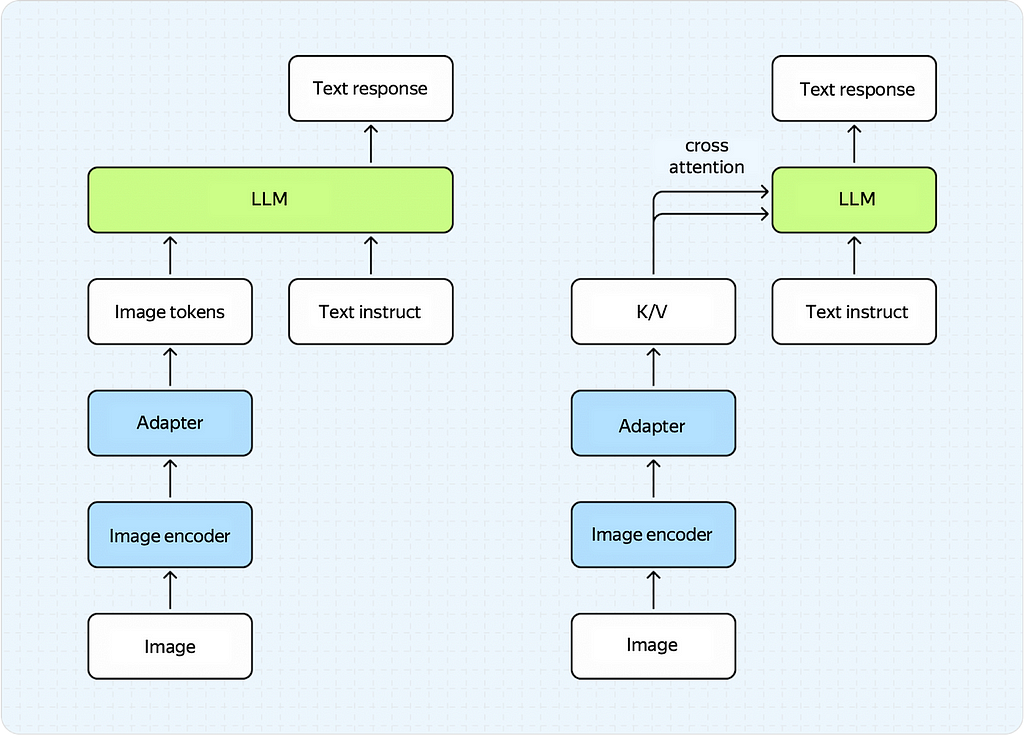

There are two types of adapters:

Prompt-based adapters were first proposed in BLIP-2 and LLaVa models.

The idea is simple and intuitive, as evident from the name itself.

We take the output of the image encoder (a vector, a sequence of vectors, or a tensor — depending on the architecture) and transform it into a sequence of vectors (tokens), which we feed into the LLM. You could take a simple MLP model with a couple of layers and use it as an adapter, and the results will likely be pretty good.

Cross-attention-based adapters are a bit more sophisticated in this respect.

They were used in recent papers on Llama 3.2 and NVLM.

These adapters aim to transform the image encoder’s output to be used in the LLM’s cross-attention block as key/value matrices. Examples of such adapters include transformer architectures like perceiver resampler or Q‑former.

Prompt-based adapters (left) and Cross-attention-based adapters (right)

Both approaches have pros and cons.

Currently, prompt-based adapters deliver better results but take away a large chunk of the LLM’s input context, which is important since LLMs have limited context length (for now).

Cross-attention-based adapters don’t take away from the LLM’s context but require a large number of parameters to achieve good quality.

With the architecture sorted out, let’s dive into training.

Firstly, note that VLMs aren’t trained from scratch (although we think it’s only a matter of time) but are built on pre-trained LLMs and image encoders.

Using these pre-trained models, we fine-tune our VLM in multimodal text and image data.

This process involves two steps:

Training procedure of VLMs (Image by Author)

Notice how these stages resemble LLM training?

This is because the two processes are similar in concept. Let’s take a brief look at these stages.

Here’s what we want to achieve at this stage:

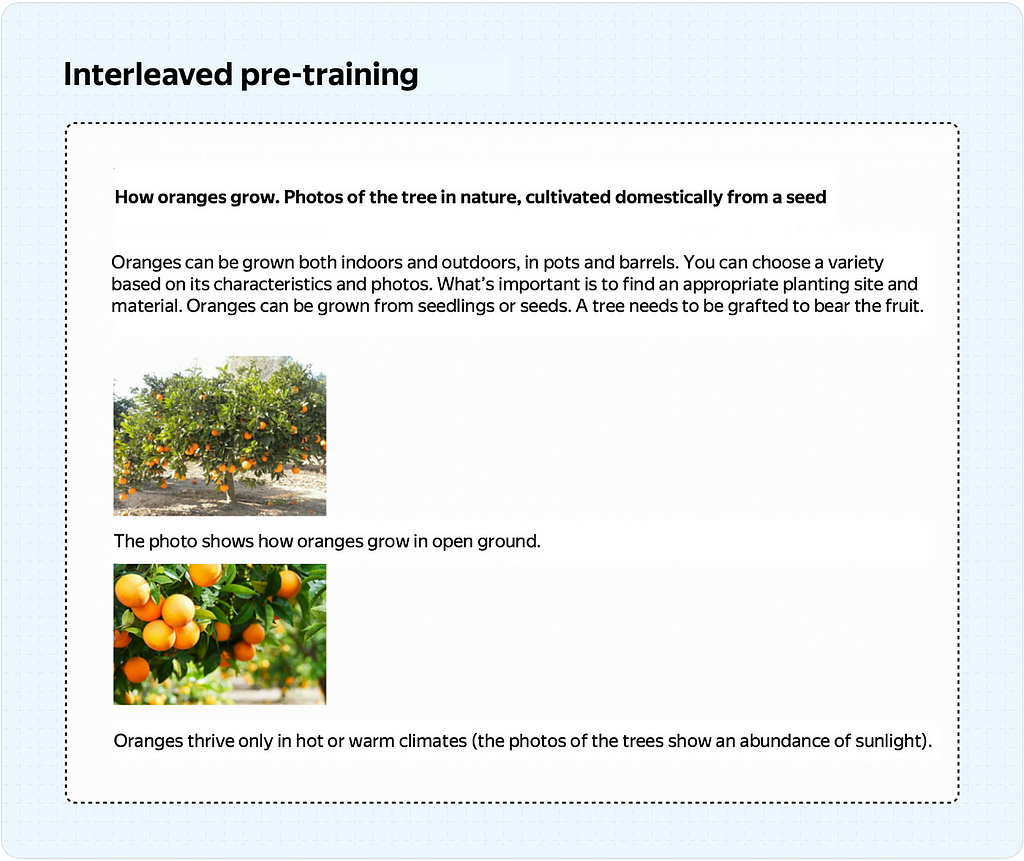

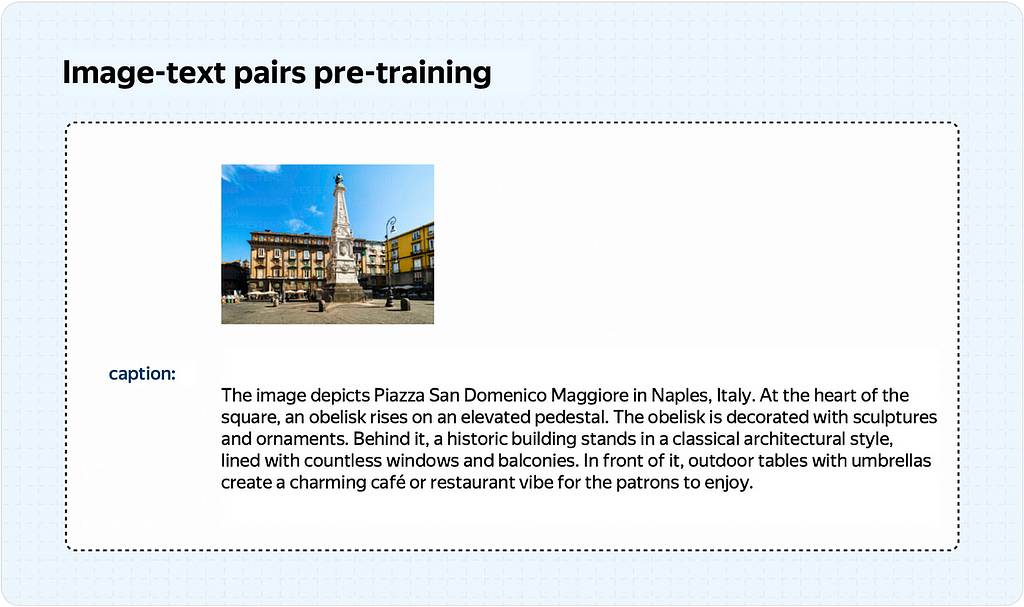

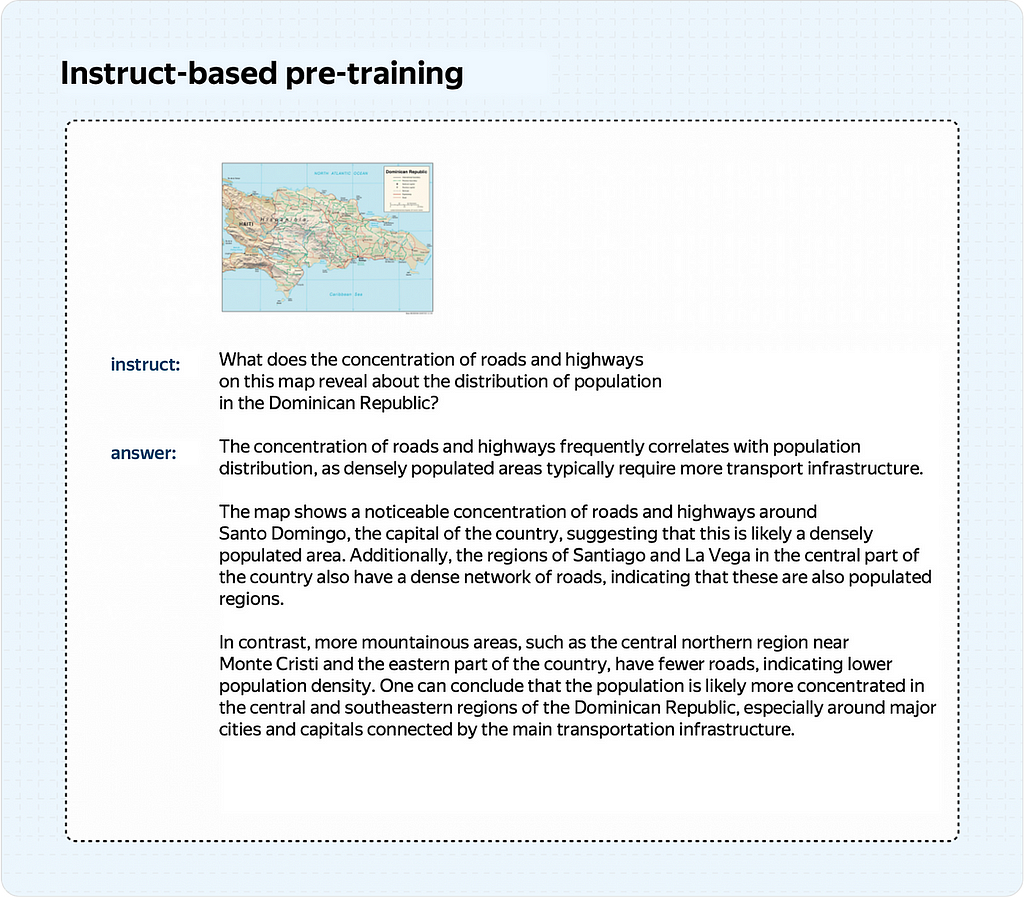

There are three types of data used in pre-training VLMs:

Image-Text Pairs Pre-training: We train the model to perform one specific task: captioning images. You need a large corpus of images with relevant descriptions to do that. This approach is more popular because many such corpora are used to train other models (text-to-image generation, image-to-text retrieval).

Instruct-Based Pre-training: During inference, we’ll feed the model images and text. Why not train the model this way from the start? This is precisely what instruct-based pre-training does: It trains the model on a massive dataset of image-instruct-answer triplets, even if the data isn’t always perfect.

How much data is needed to train a VLM model properly is a complex question. At this stage, the required dataset size can vary from a few million to several billion (thankfully, not a trillion!) samples.

Our team used instruct-based pre-training with a few million samples. However, we believe interleaved pre-training has great potential, and we’re actively working in that direction.

Once pre-training is complete, it’s time to start on alignment.

It comprises SFT training and an optional RL stage. Since we only have the SFT stage, I’ll focus on that.

Still, recent papers (like this and this) often include an RL stage on top of VLM, which uses the same methods as for LLMs (DPO and various modifications differing by the first letter in the method name).

Anyway, back to SFT.

Strictly speaking, this stage is similar to instruct-based pre-training.

The distinction lies in our focus on high-quality data with proper response structure, formatting, and strong reasoning capabilities.

This means that the model must be able to understand the image and make inferences about it. Ideally, it should respond equally well to text instructs without images, so we’ll also add high-quality text-only data to the mix.

Ultimately, this stage’s data typically ranges between hundreds of thousands to a few million examples. In our case, the number is somewhere in the six digits.

Let’s discuss the methods for evaluating the quality of VLMs. We use two approaches:

The first method allows us to measure surrogate metrics (like accuracy in classification tasks) on specific subsets of data.

However, since most benchmarks are in English, they can’t be used to compare models trained in other languages, like German, French, Russian, etc.

While translation can be used, the errors introduced by translation models make the results unreliable.

The second approach allows for a more in-depth analysis of the model but requires meticulous (and expensive) manual data annotation.

Our model is bilingual and can respond in both English and Russian. Thus, we can use English open-source benchmarks and run side-by-side comparisons.

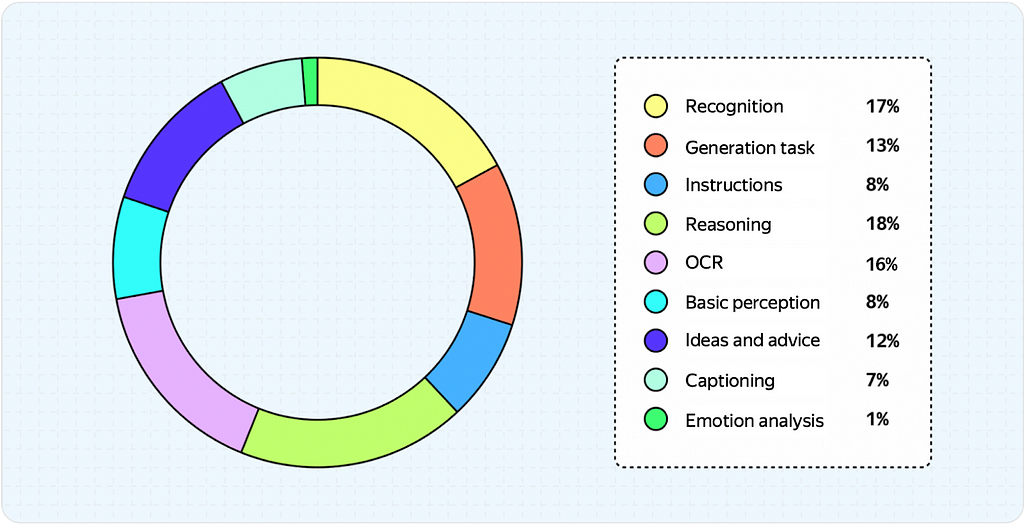

We trust this method and invest a lot in it. Here’s what we ask our assessors to evaluate:

We strive to evaluate a complete and diverse subset of our model’s skills.

The following pie chart illustrates the distribution of tasks in our SbS evaluation bucket.

This summarizes the overview of VLM fundamentals and how one can train a model and evaluate its quality.

This spring, we added multimodality to Neuro, an AI-powered search product, allowing users to ask questions using text and images.

Until recently, its underlying technology wasn’t truly multimodal.

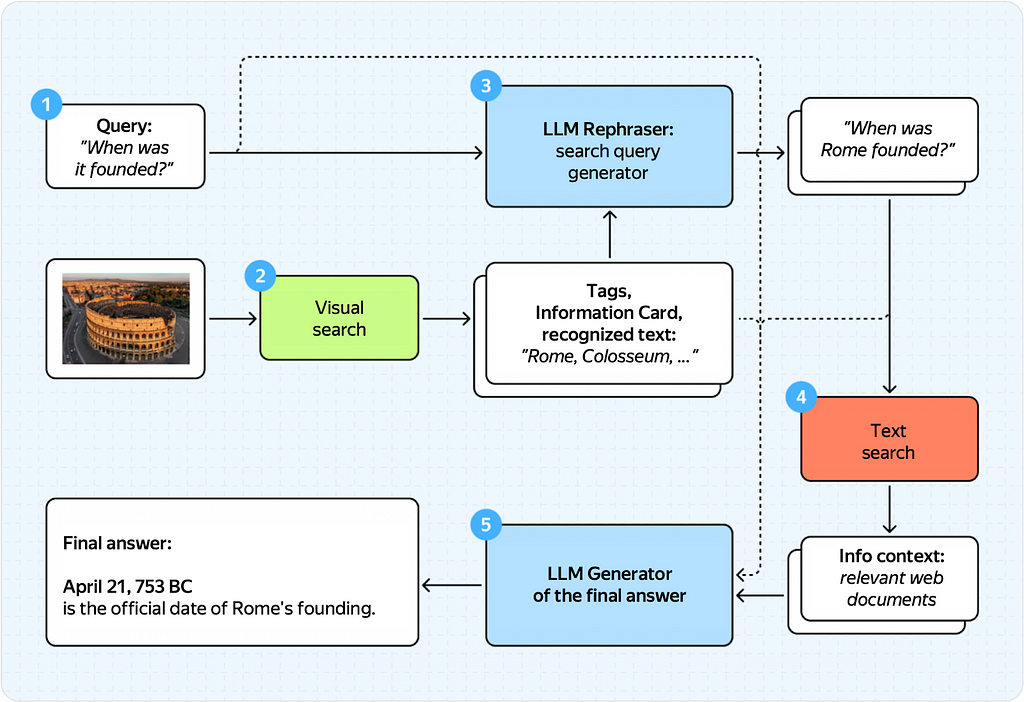

Here’s what this pipeline looked like before.

This diagram seems complex, but it’s straightforward once you break it down into steps.

Here’s what the process used to look like

Done!

As you can see, we used to rely on two unimodal LLMs and our visual search engine. This solution worked well on a small sample of queries but had limitations.

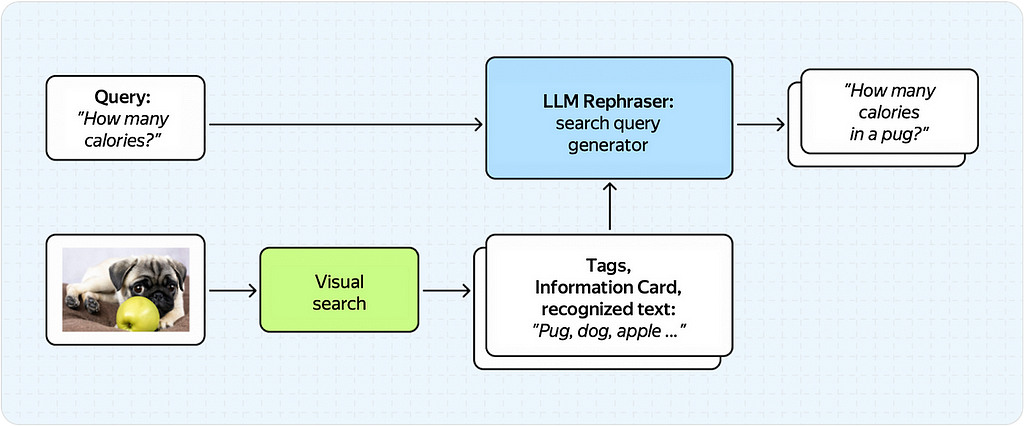

Below is an example (albeit slightly exaggerated) of how things could go wrong.

Here, the rephraser receives the output of the visual search service and simply doesn’t understand the user’s original intent.

In turn, the LLM model, which knows nothing about the image, generates an incorrect search query, getting tags about the pug and the apple simultaneously.

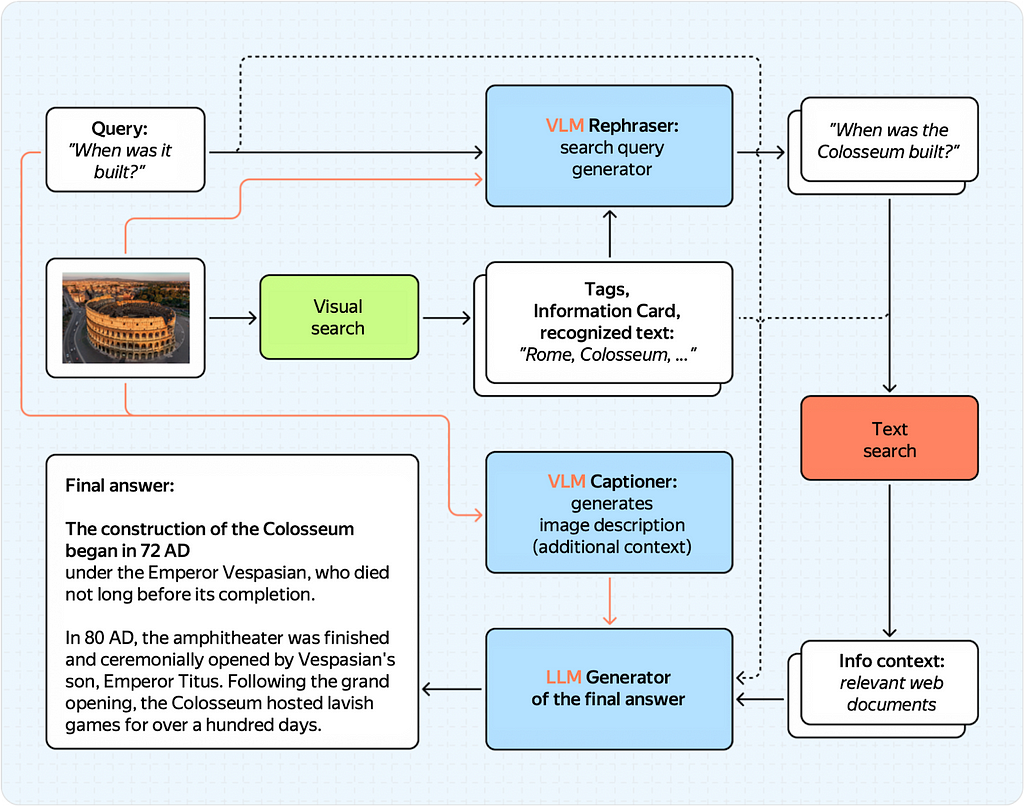

To improve the quality of our multimodal response and allow users to ask more complex questions, we introduced a VLM into our architecture.

More specifically, we made two major modifications:

You might wonder

Why not make the generator itself VLM-based?

That’s a good idea!

But there’s a catch.

Our generator training inherits from Neuro’s text model, which is frequently updated.

To update the pipeline faster and more conveniently, it was much easier for us to introduce a separate VLM block.

Plus, this setup works just as well, which is shown below:

Training VLM rephraser and VLM captioner are two separate tasks.

For this, we use mentioned earlierse VLM, as mentioned e for thise-tuned it for these specific tasks.

Fine-tuning these models required collecting separate training datasets comprising tens of thousands of samples.

We also had to make significant changes to our infrastructure to make the pipeline computationally efficient.

Now for the grand question:

Did introducing a VLM to a fairly complex pipeline improve things?

In short, yes, it did!

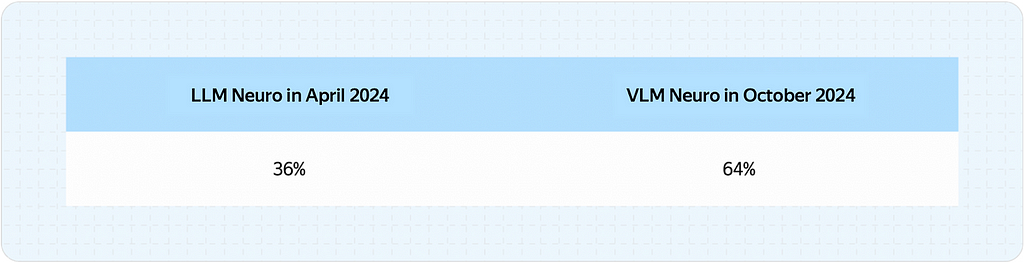

We ran side-by-side tests to measure the new pipeline’s performance and compared our previous LLM framework with the new VLM one.

This evaluation is similar to the one discussed earlier for the core technology. However, in this case, we use a different set of images and queries more aligned with what users might ask.

Below is the approximate distribution of clusters in this bucket.

Our offline side-by-side evaluation shows that we’ve substantially improved the quality of the final response.

The VLM pipeline noticeably increases the response quality and covers more user scenarios.

We also wanted to test the results on a live audience to see if our users would notice the technical changes that we believe would improve the product experience.

So, we conducted an online split test, comparing our LLM pipeline to the new VLM pipeline. The preliminary results show the following change:

To reiterate what was said above, we firmly believe that VLMs are the future of computer vision models.

VLMs are already capable of solving many out-of-the-box problems. With a bit of fine-tuning, they can absolutely deliver state-of-the-art quality.

Thanks for reading!

An Introduction to VLMs: The Future of Computer Vision Models was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

An Introduction to VLMs: The Future of Computer Vision Models

Go Here to Read this Fast! An Introduction to VLMs: The Future of Computer Vision Models

Are you curious about data science? Does math and artificial intelligence excite you? Do you want to explore data science and plan to pursue a data science career? Whether you’re unsure where to begin or just taking your first steps into data science, you’ve come to the right place. Trust me, this guide will help you take your first steps with confidence!

Data science is one of the most exciting fields in which to work. It’s a multidisciplinary field that combines various techniques and tools to analyze complex datasets, build predictive models, and guide decision-making in businesses, research, and technology.

Data science is applied in various industries such as finance, healthcare, social media, travel, e-commerce, robotics, military, and espionage.

The Internet has abundant information about how to start with data science, leading to myths and misconceptions about data science. The two most important misconceptions are —

Data scientists require a strong grasp of mathematics. It’s important for someone starting their data science journey to focus on mathematics and fundamentals before diving into fancy stuff like LLMs. I’ve stressed the importance of fundamentals throughout this article. The knowledge of basic concepts will help you stand out from the crowd of data science aspirants. It will help you ace this career and stay updated with the developments in this rapidly growing field. Think of it as laying a building’s foundation. It takes the maximum time and effort. It’s essential to support everything that follows. Once the base is solid, you can start building upwards, floor by floor, expanding your knowledge and skills.

Knowing where to start might seem overwhelming if you’re a beginner. With so many tools, concepts, and techniques to learn, it’s easy to feel lost. But don’t worry!

In this article —

Let’s get started!

The following technical skills are necessary.

Mathematics is everywhere. No doubt it’s the backbone and the core of data science. A good data scientist must have a deep and concise understanding of mathematics. Mastering mathematics will help you

Without mathematical understanding, you’ll have difficulty unboxing the black box. The following topics are super important.

Linear Algebra is a beautiful and elegant branch of mathematics that deals with vectors, matrices, and linear transformations. Linear Algebra concepts are fundamental for solving systems of linear equations and manipulating high-dimensional data.

Why is it required?

Nvidia folks are getting richer daily because they produce and sell the hardware (GPUs) and write open-source optimized software (Cuda) to perform efficient matrix operations!

Where to learn Linear Algebra?

Probability and statistics are essential for understanding uncertainty in data-driven fields. Probability theory provides a mathematical framework to quantify the likelihood of events. Statistics involves collecting, organizing, analyzing, and interpreting data to make informed decisions.

Why are they required?

Where to learn Probability and Statistics?

Calculus is about finding the rate of change of a function. Calculus, especially differential calculus, plays an integral role in ML. It calculates the slope or gradient of curves, which tells us how a quantity changes in response to changes in another.

Why is it required?

The 2024 Nobel Laureate Geoffrey Hinton co-authored the backpropagation algorithm paper in 1986!

Where to learn Calculus?

Wait! You’ll find it out soon!

Machine learning is built upon the core principles of Linear algebra, probability, statistics, and calculus. At its essence, ML is applied mathematics, and once you grasp the underlying mathematics, understanding fundamental ML concepts becomes much easier. These fundamentals are essential for building robust and accurate ML models.

Most comprehensive ML courses begin by introducing the various types of algorithms. There are supervised, unsupervised, self-supervised, and reinforcement learning methods, each designed for specific problems. ML algorithms are further categorized into classification, regression, and clustering, depending on whether the task predicts labels, continuous values, or identifies patterns.

Nearly all ML workflows follow a structured process, which includes the following key steps:

Where to learn ML?