Originally appeared here:

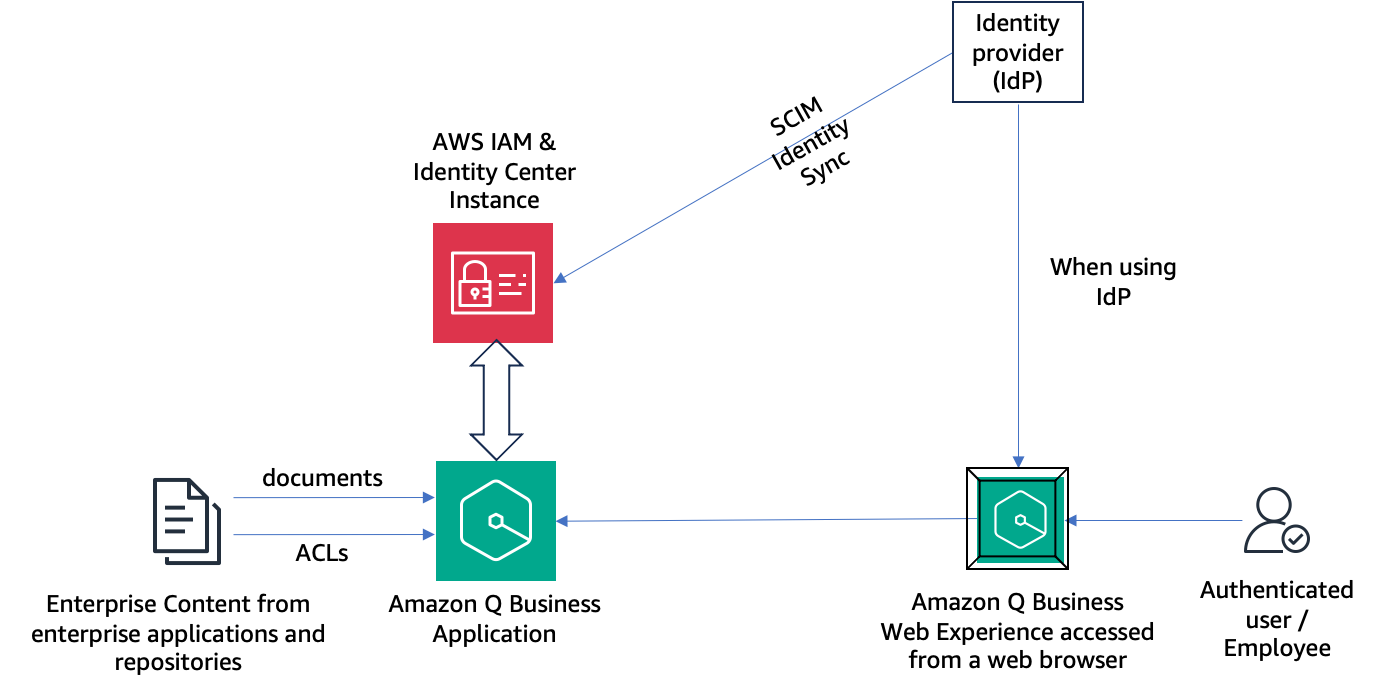

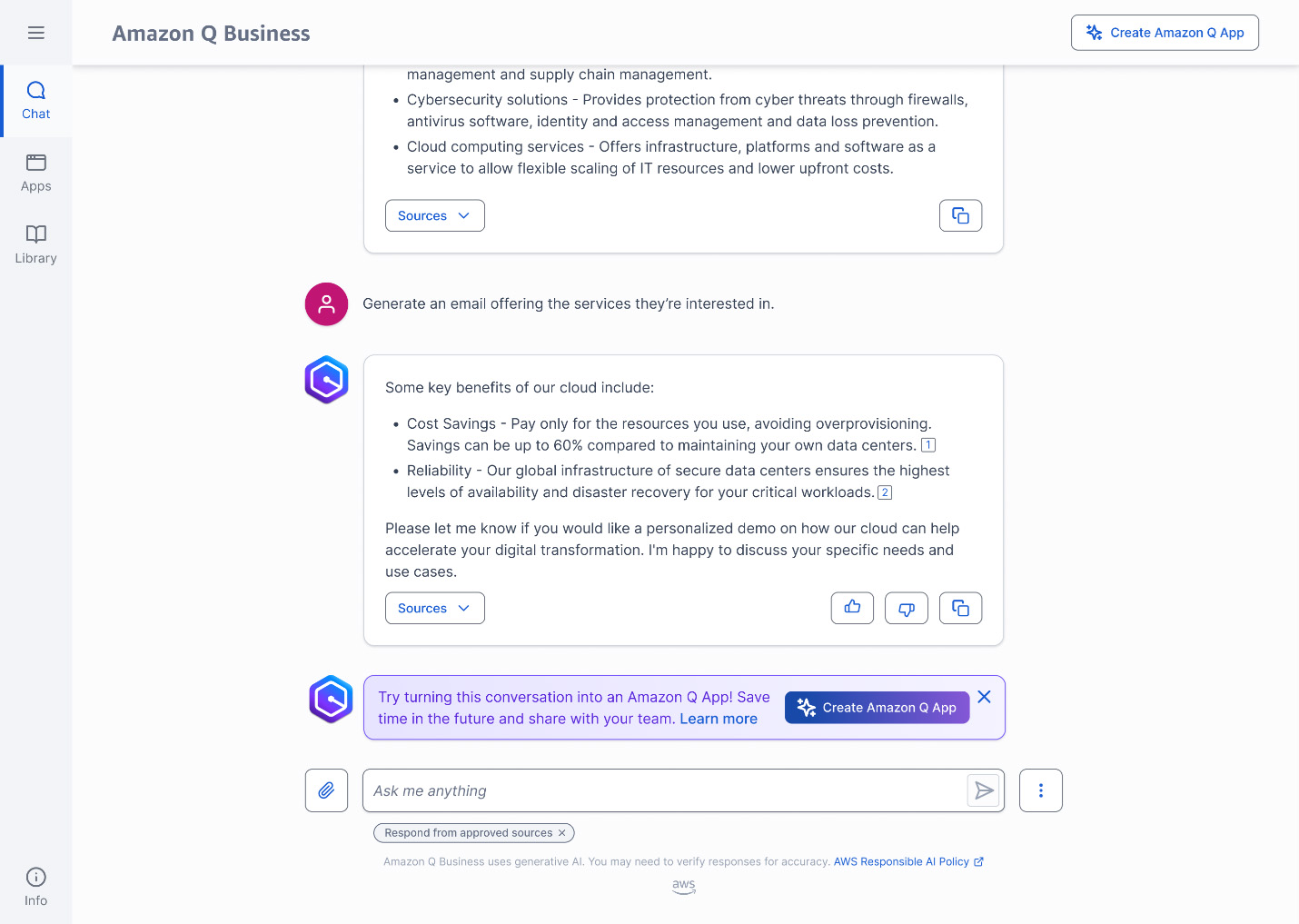

Build private and secure enterprise generative AI apps with Amazon Q Business and AWS IAM Identity Center

Originally appeared here:

Enhance customer service efficiency with AI-powered summarization using Amazon Transcribe Call Analytics

Discussing the basic principles and methodology of data validation

Originally appeared here:

The Foundation of Data Validation

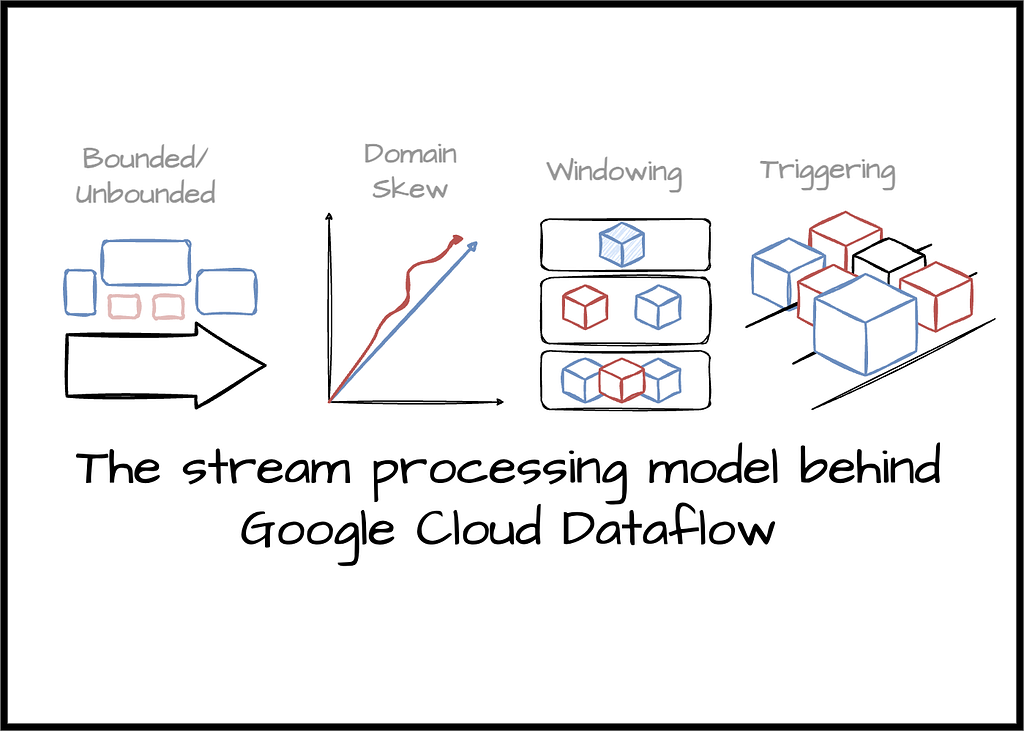

Google Dataflow is a fully managed data processing service that provides serverless, unified stream and batch data processing. It is the first choice Google recommends for stream processing workloads. The service promises correctness and low latency regardless of how big your workload is. To achieve this, Google Dataflow is backed by a dedicated processing model, also called Dataflow, that resulted from many years of Google research and development. This blog post is my note after reading the paper: The Dataflow Model: A Practical Approach to Balancing Correctness, Latency, and Cost in Massive-Scale, Unbounded, Out-of-Order Data Processing. If you want to learn more about stream processing, I strongly recommend this paper. It contains the lessons and insights from Google’s introduction of the Dataflow model to deal with its global-scale stream processing demand. Although it was written in 2015, I believe this paper’s contribution will stay relevant for a long time.

Note: The paper was published in 2015, so some details may have changed since then; if you have any feedback or information that can supplement my blog, feel free to comment.

To avoid confusion, here is the workflow: you define the unified processing logic using Apache Beam and then choose the execution engine that runs the pipeline, such as Google Dataflow, Spark, or Flink.

Before we explore the Dataflow model in depth, the following sections will introduce some information, such as context, challenges, and concepts.

At the time the paper was written, data processing frameworks like MapReduce and its “cousins” like Hadoop, Pig, Hive, or Spark allowed data consumers to process batch data at scale. On the stream processing side, tools like MillWheel, Spark Streaming, or Storm came to support the user. Still, these existing models did not satisfy the requirements of some common use cases.

Consider an example: A streaming video provider’s business revenue comes from billing advertisers for the amount of advertising watched on their content. They want to know how much to bill each advertiser daily and aggregate statistics about the videos and ads. Moreover, they want to run offline experiments over large amounts of historical data. They want to know how often and for how long their videos are being watched, with which content/ads, and by which demographic groups. All the information must be available quickly to adjust their business in near real-time. The processing system must also be simple and flexible to adapt to the business’s complexity. They also require a system that can handle global-scale data since the Internet allows companies to reach more customers than ever. Here are some observations from people at Google about the state of the data processing systems of that time:

They conclude that the major weakness of all the models and systems mentioned above is the assumption that unbounded input data will eventually be complete. This assumption no longer makes sense when faced with the realities of today’s enormous, highly disordered data. They also believe that any approach to solving diverse real-time workloads must provide simple but powerful interfaces for balancing correctness, latency, and cost based on the specific use case. From that perspective, the paper makes the following conceptual contributions to the unified stream processing model:

– What results are being computed.

– Where in event time they are being computed.

– When in processing time they are materialized.

– How earlier results relate to later refinements.

In the rest of this blog, we will see how Google enables this contribution. One last thing before we move to the next section: Google noted that there is “nothing magical about this model.” The model doesn’t make a computationally expensive task suddenly run faster; it provides a general framework that allows for the simple expression of parallel computation without being tied to any specific execution engine like Spark or Flink.

The paper’s authors use the terms unbounded/bounded to describe infinite/finite data. They avoid the terms streaming/batch because those usually imply a specific execution engine. Unbounded data is data that doesn’t have a predefined boundary, e.g., the user interaction events of an active e-commerce application; the data stream only stops when the application is inactive. Bounded data, in contrast, has clear start and end boundaries, e.g., a daily data export from the operational database.

To continue with the introduction section, we will review some concepts used throughout the paper.

The organizer

Windowing divides the data into finite chunks. Usually, the system uses a notion of time to organize data into windows (e.g., all data from the last hour belongs to one window). All data in a window is processed as a group. Users need windows for grouping operations on unbounded data, such as aggregations or time-bounded operations. On the other hand, some operations on unbounded data don’t need the window notion, like filtering, mapping, or inner joins. Windows may be aligned, i.e., applied across all the data for a given window of time, or unaligned, i.e., applied across only specific subsets of the data in that window of time. There are three major types of windows:
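The window kinds the paper describes are fixed, sliding, and session windows. As a rough illustration of the first two, here is a minimal sketch in plain Python. It is not the Dataflow or Beam API: the names `fixed_window` and `sliding_windows`, the use of integer seconds, and the half-open `[start, end)` convention are all my own assumptions for the example.

```python
from typing import List, Tuple

# Hypothetical representation: a window is a half-open interval [start, end) in seconds.
Window = Tuple[int, int]


def fixed_window(event_time: int, size: int) -> Window:
    """Return the single fixed (tumbling) window that contains event_time."""
    start = event_time - (event_time % size)
    return (start, start + size)


def sliding_windows(event_time: int, size: int, period: int) -> List[Window]:
    """Return every window of length `size`, starting every `period` seconds,
    that contains event_time. With size > period, windows overlap."""
    windows = []
    # Latest window start that is not after the event.
    start = event_time - (event_time % period)
    # Walk backwards while the window still covers the event.
    while start > event_time - size:
        windows.append((start, start + size))
        start -= period
    return sorted(windows)


if __name__ == "__main__":
    print(fixed_window(3725, 3600))
    print(sliding_windows(125, 120, 60))
```

A fixed window is just a sliding window whose size equals its period, which is why one element lands in exactly one fixed window but can land in several sliding windows.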

When handling time-related events data, there are two domains of time to consider:

Given that definition, an event’s event time never changes, but its processing time changes constantly as it flows through the pipeline steps. This is a critical factor when analyzing events in the context of when they occurred. The difference between the event_time and the processing_time is called time domain skew. The skew can have many causes, such as communication delays or time spent processing in each pipeline stage. Metrics such as watermarks are good ways to visualize the skew. In the paper, the authors consider a low watermark on the event times that the pipeline has processed. The watermark tells the system: “no more data with an event time earlier than this point will appear in the pipeline.” Watermarks are used not only to observe the skew between time domains but also to monitor the overall system. In an ideal world, the skew would always be zero; we could process every event right when it happens.
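To make skew and watermarks a bit more tangible, here is a small sketch in plain Python. The `Event` class and the function names are hypothetical. The watermark heuristic used here (largest event time seen minus a fixed allowed lag) is only one simple strategy I picked for illustration; the paper does not prescribe how a watermark is computed.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Event:
    key: str
    event_time: int       # when the event happened (seconds)
    processing_time: int  # when the pipeline observed it (seconds)


def skew(event: Event) -> int:
    """Time domain skew: how far processing time lags behind event time."""
    return event.processing_time - event.event_time


def bounded_lag_watermark(events: Iterable[Event], max_lag: int) -> int:
    """Assumed heuristic: guess that nothing older than
    (largest event time seen - max_lag) will still arrive."""
    return max(e.event_time for e in events) - max_lag


def is_late(event: Event, watermark: int) -> bool:
    """An event is late if it arrives after the watermark has passed its event time."""
    return event.event_time < watermark


if __name__ == "__main__":
    seen = [Event("a", 100, 105), Event("b", 90, 130), Event("c", 120, 131)]
    wm = bounded_lag_watermark(seen, max_lag=20)
    print([skew(e) for e in seen], wm, is_late(Event("d", 95, 140), wm))
```

Because the watermark here is a guess, an event such as `d` can still show up behind it, which is exactly the late-data situation the rest of the model has to deal with.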

In the following sections, we will learn the details of the Dataflow model.

The model has two core transformations that operate on the (key, value) pair; both transformations can work on bounded and unbounded data:

ParDo operates on each element independently, so it translates naturally to unbounded data. GroupByKey collects all data for a given key before sending it to the downstream steps. If the input source is unbounded, it is impossible to know when the data for a key will end. The standard solution is data windowing.
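The two primitives can be sketched on bounded data in a few lines of plain Python. This is only an illustration of the semantics, not the FlumeJava or Beam API; `par_do`, `group_by_key`, and `expand_prefixes` are names I made up for the example.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]


def par_do(fn: Callable[[Pair], Iterable[Pair]], elements: Iterable[Pair]) -> Iterator[Pair]:
    """Apply fn to each element independently; fn may emit zero or more outputs."""
    for element in elements:
        yield from fn(element)


def group_by_key(pairs: Iterable[Pair]) -> Dict[str, List[int]]:
    """Collect all values per key. This needs to see ALL input before emitting,
    which is why it cannot work on unbounded data without windowing."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def expand_prefixes(pair: Pair) -> Iterator[Pair]:
    """Hypothetical user function: emit (prefix, value) for every prefix of the key."""
    key, value = pair
    for i in range(1, len(key) + 1):
        yield (key[:i], value)


if __name__ == "__main__":
    expanded = list(par_do(expand_prefixes, [("fix", 1), ("fit", 2)]))
    print(expanded)
    print(group_by_key(expanded))
```

Note that `par_do` is a generator and can emit results as elements arrive, while `group_by_key` only returns once its input is exhausted. That difference is the reason the second primitive needs windows on an unbounded source.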

Systems that support grouping typically redefine their GroupByKey operation to be GroupByKeyAndWindow. The authors’ significant contribution here concerns unaligned windows and has two parts. The first is treating all windowing strategies as unaligned from the model’s perspective, while still allowing adjustments that apply aligned windows when needed. The second is that any windowing process can be broken apart into two related operations:
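The paper calls these two operations AssignWindows and MergeWindows. Session windows are a good way to see why both are needed: each element first gets its own small window, and overlapping windows are merged at grouping time. The sketch below is plain Python under my own assumptions (integer seconds, half-open intervals, and the hypothetical names `assign_session_window` and `merge_windows`); it is not the paper’s or Beam’s actual signature.

```python
from typing import Iterable, List, Tuple

Window = Tuple[int, int]  # half-open interval [start, end) in seconds


def assign_session_window(event_time: int, gap: int) -> Window:
    """Assign step: every element starts in its own proto-session
    that extends `gap` seconds past its event time."""
    return (event_time, event_time + gap)


def merge_windows(windows: Iterable[Window]) -> List[Window]:
    """Merge step: combine overlapping proto-sessions into sessions."""
    merged: List[Window] = []
    for start, end in sorted(windows):
        if merged and start < merged[-1][1]:
            # Overlaps the previous session: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            # A gap of inactivity: start a new session.
            merged.append((start, end))
    return merged


if __name__ == "__main__":
    event_times = [0, 10, 50]
    proto = [assign_session_window(t, gap=30) for t in event_times]
    print(merge_windows(proto))
```

In a real pipeline the merge would run per key, which is what makes session windows unaligned: each key ends up with its own window boundaries.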

Although there is support for unaligned windows, event-time windows raise another challenge: the system needs to be told when to emit the results for a window, because data can arrive in the pipeline out of order. The initial solution of using an event-time progress metric like the watermark (mentioned above) has some shortcomings:

A reminder so you don’t have to scroll up: the watermark is an indicator that tells the system that “no more data with an event time earlier than this point will appear in the pipeline.” For example, if the watermark at a given time is “11:30”, no events with an event_time earlier than 11:30 are expected to appear anymore.

This led to the observation that using only watermarks to decide when to emit a window’s result is likely to increase latency (when the watermark is too slow) or hurt the accuracy of the pipeline (missing some data when the watermark is too fast). The authors observe that the Lambda Architecture (which has two separate pipelines, streaming and batch, whose results converge in the end) doesn’t solve the completeness problem by providing correct answers faster; instead, it gives a low-latency estimate of the result from the streaming pipeline and promises to deliver the correct result later from the batch pipeline. They state that if we want to achieve the same thing in a single pipeline, we need a mechanism to provide multiple panes (answers) for any given window. This feature, called a trigger, allows the user to specify when to emit output results for a given window. Here is an illustration of the similarity between triggers and the semantics of the Lambda Architecture:
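To make the idea of multiple panes per window concrete, here is a small simulation in plain Python. It is a sketch under my own assumptions, not the paper’s trigger API: the function name `sum_with_triggers`, the input shape (each arrival paired with the watermark observed at that moment), and the specific policy (an early pane every few elements, one on-time pane when the watermark passes the end of the window, and a late pane per late element) are all chosen for illustration.

```python
from typing import List, Tuple

Pane = Tuple[str, int]  # (label, running sum for the window)


def sum_with_triggers(
    arrivals: List[Tuple[int, int]],  # (value, watermark observed at arrival)
    window_end: int,
    early_every: int,
) -> List[Pane]:
    """Sum the values of ONE window and emit several panes for it.

    All arrivals are assumed to belong to the same window. If the watermark
    never reaches window_end in the input, no on-time pane is emitted.
    """
    panes: List[Pane] = []
    total = 0
    count = 0
    on_time_fired = False
    for value, watermark in arrivals:
        # The watermark passing the window end fires the on-time pane
        # BEFORE the current element is added, since that element is late.
        if not on_time_fired and watermark >= window_end:
            panes.append(("on-time", total))
            on_time_fired = True
        total += value
        count += 1
        if on_time_fired:
            panes.append(("late", total))   # refine the result for late data
        elif count % early_every == 0:
            panes.append(("early", total))  # low-latency speculative result
    return panes


if __name__ == "__main__":
    arrivals = [(5, 0), (7, 10), (3, 20), (4, 60), (8, 70)]
    for pane in sum_with_triggers(arrivals, window_end=60, early_every=2):
        print(pane)
```

The early panes play the role of the streaming layer’s estimate in the Lambda Architecture, while the on-time and late panes converge toward the answer the batch layer would have produced, all within one pipeline.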

The system the authors introduce supports the following trigger implementation:

Besides controlling when the system will emit the window’s result, the trigger mechanism also provides a way to control how panes (answers) for a given window relate to each other via the following refinement modes:
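The paper describes three such modes: discarding, accumulating, and accumulating and retracting. The sketch below shows, in plain Python, what each mode would emit for a summing window that fires three times. The function name `refine`, the mode strings, and the `("emit", x)` / `("retract", x)` tuples are hypothetical conventions I chose for the example.

```python
from typing import List, Tuple

Output = Tuple[str, int]  # ("emit", value) or ("retract", value)


def refine(pane_inputs: List[List[int]], mode: str) -> List[List[Output]]:
    """pane_inputs[i] holds the values that arrived between firing i-1 and firing i.
    Returns what is sent downstream at each firing for the chosen mode."""
    outputs: List[List[Output]] = []
    running = 0
    previous = None  # last accumulated value sent downstream, if any
    for values in pane_inputs:
        delta = sum(values)
        running += delta
        if mode == "discarding":
            # Forget earlier panes: emit only what is new since the last firing.
            outputs.append([("emit", delta)])
        elif mode == "accumulating":
            # Keep state: each pane replaces the previous one.
            outputs.append([("emit", running)])
        elif mode == "accumulating_and_retracting":
            # Keep state, and first take back the previous pane explicitly.
            pane: List[Output] = []
            if previous is not None:
                pane.append(("retract", previous))
            pane.append(("emit", running))
            outputs.append(pane)
        else:
            raise ValueError(f"unknown mode: {mode}")
        previous = running
    return outputs


if __name__ == "__main__":
    firings = [[5, 7], [3], [4, 8]]
    for mode in ("discarding", "accumulating", "accumulating_and_retracting"):
        print(mode, refine(firings, mode))
```

Which mode fits depends on the consumer: discarding suits downstream steps that add panes up themselves, accumulating suits consumers that simply overwrite the previous value, and retractions help when a later pane must undo the effect of an earlier one, for example after a downstream regrouping.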

The following section will describe how Google implements and designs the Dataflow model.

The paper’s authors say they implemented this model internally using FlumeJava, a Java library that makes it easy to develop, test, and run efficient data-parallel pipelines. MillWheel acts as the underlying stream execution engine. Additionally, an external reimplementation for Google Cloud Dataflow was largely complete at the time of the paper’s writing. Interestingly, the core windowing and triggering code is quite general, and a significant portion is shared across the batch and streaming implementations.

The core principles of the Dataflow model:

As they designed the model, they drew on real-world experience with FlumeJava and MillWheel. Things that worked well were reflected in the model; things that worked less well motivated changes in approach. Here are some of the experiences that influenced their design choices:

In this week’s blog, we’ve discussed the design principles and implementation of the Dataflow model, the backbone of the famous Google Cloud Dataflow service. If you want to dive deeper into the model, I highly recommend the book Streaming Systems: The What, Where, When, and How of Large-Scale Data Processing or the two-part blog from one of the paper’s authors: Streaming 101 and Streaming 102. I hope my work brings some value, especially to someone who wants to learn more about the stream processing world.

See you next blog!

[1] Google, The Dataflow Model: A Practical Approach to Balancing Correctness, Latency, and Cost in Massive-Scale, Unbounded, Out-of-Order Data Processing (2015).

My newsletter is a weekly blog-style email in which I note things I learn from people smarter than me.

So, if you want to learn and grow with me, subscribe here: https://vutr.substack.com.

The Stream Processing Model Behind Google Cloud Dataflow was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Leveraging the power of LLMs to significantly enhance the quality of document context retrieved for direct answer generation in your RAG…

Originally appeared here:

Enhancing Direct Answer Accuracy in RAG Setup with Self-Retrieval Mechanisms

Originally appeared here:

Accelerate software development and leverage your business data with generative AI assistance from Amazon Q

Originally appeared here:

Amazon Q Business and Amazon Q in QuickSight empowers employees to be more data-driven and make better, faster decisions using company knowledge

Faster and better than the transformer but more difficult to train

Originally appeared here:

Jamba: The New Hybrid Transformer/Mamba
