Originally appeared here:

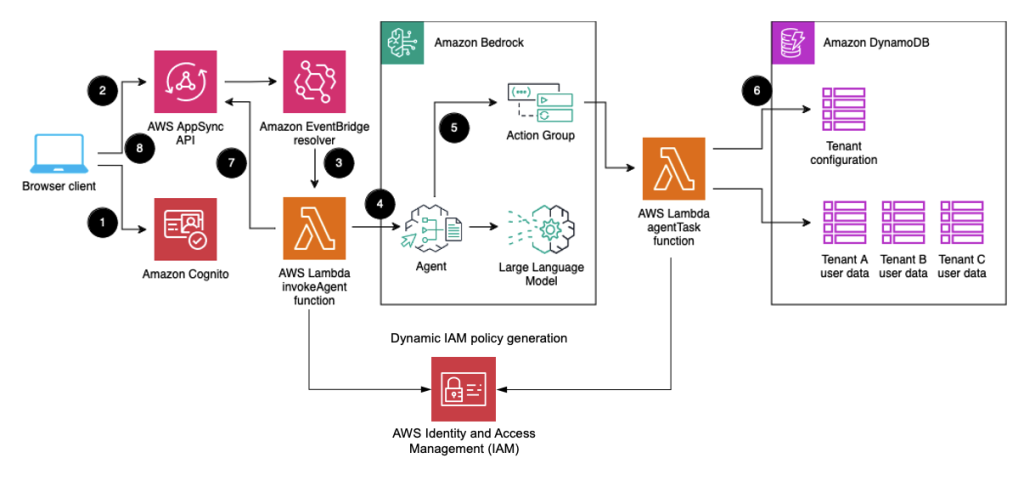

Implementing tenant isolation using Agents for Amazon Bedrock in a multi-tenant environment

Originally appeared here:

Implementing tenant isolation using Agents for Amazon Bedrock in a multi-tenant environment

Originally appeared here:

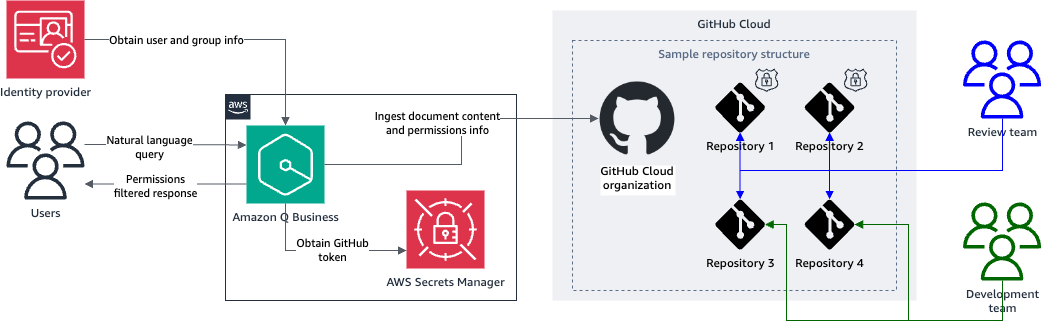

Connect the Amazon Q Business generative AI coding companion to your GitHub repositories with Amazon Q GitHub (Cloud) connector

Originally appeared here:

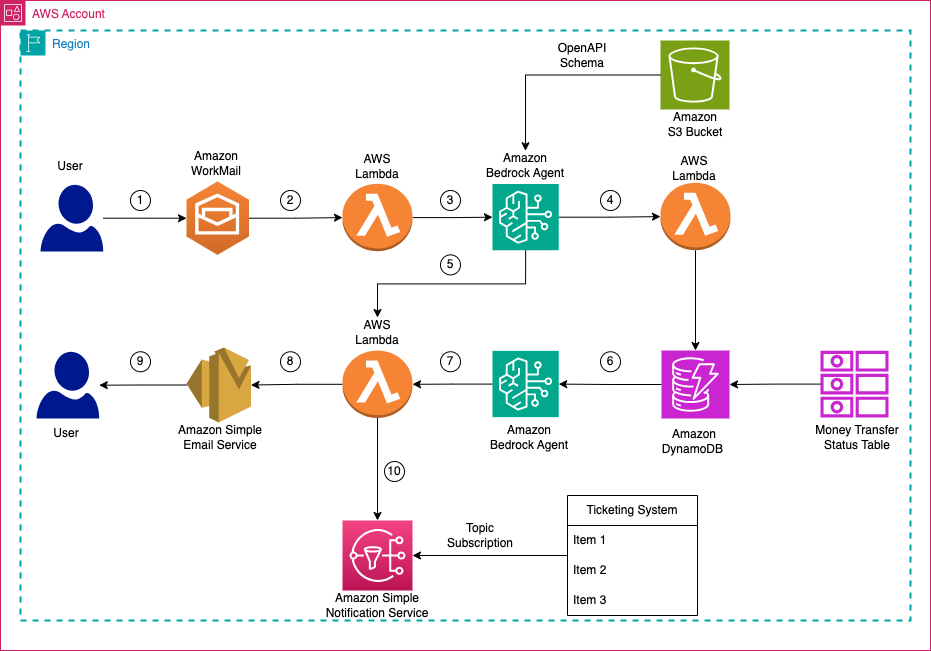

Elevate customer experience through an intelligent email automation solution using Amazon Bedrock

Originally appeared here:

Build an end-to-end RAG solution using Knowledge Bases for Amazon Bedrock and the AWS CDK

Large Language Models (LLMs) have demonstrated exceptional abilities in performing natural language tasks that involve complex reasoning. As a result, these models have evolved to function as agents capable of planning, strategising, and solving complex problems. However, challenges persist when it comes to making decisions under uncertainty, where outcomes are not deterministic, or when adaptive decision-making is required in changing environments, especially in multi-step scenarios where each step influences the next. We need more advanced capabilities…

This is where GPT-4’s advanced reasoning capabilities and Language Agent Tree Search (LATS) come together to address these challenges. LATS incorporates a dynamic, tree-based search methodology that enhances the reasoning capabilities of GPT-4O. By integrating Monte Carlo Tree Search (MCTS) with LLMs, LATS unifies reasoning, acting, and planning, creating a more deliberate and adaptive problem-solving framework. This powerful combination allows for improved decision-making and more robust handling of complex tasks, setting a new standard in the deployment of language models as autonomous agents.

Computational problem solving can be broadly defined as “search through a combinatorial problem space”, represented as a tree. Depth-First Search (DFS) and Breadth-First Search (BFS) are fundamental methods for exploring such solution spaces. A notable example of the power of deep search is AlphaGo’s “Move 37,” which showcased how innovative, human-surpassing solutions can emerge from extensive exploration.

Unlike traditional methods that follow predefined paths, LLMs can dynamically generate new branches within the solution space by predicting potential outcomes, strategies, or actions based on context. This capability allows LLMs to not only navigate but also expand the problem space, making them exceptionally powerful in situations where the problem structure is not fully known, is continuously evolving, or is highly complex.

Scaling compute during training is widely recognised for its ability to improve model performance. The benefits of scaling compute during inference remain under-explored. MGA’s offer a novel approach by amplifying computational resources during inference…

Unlike traditional token-level generation methods, meta-generation algorithms employ higher-order control structures such as planning, loops with multiple model calls, self-reflection, task decomposition, and dynamic conditioning. These mechanisms allow the model to execute tasks end-to-end, mimicking higher-level cognitive processes often referred to as Systems-2 thinking.

Therefore one-way meta generation algorithms may enhance LLM reasoning by integrating search into the generation process. During inference, MGA’s dynamically explore a broader solution space, allowing the model to reason through potential outcomes and adapt strategies in real-time. By generating multiple paths and evaluating their viability, meta generation algorithms enable LLMs to simulate deeper, more complex reasoning akin to traditional search methods. This approach not only expands the model’s ability to generate novel insights but also improves decision-making in scenarios with incomplete or evolving information.

Techniques like Tree of Thoughts (ToT), and Graph of Thought (GoT) are employed to navigate combinatorial solution spaces efficiently.

In the Tree of Thoughts (ToT) approach, traditional methods like Depth-First Search (DFS) or Breadth-First Search (BFS) can navigate this tree, but they are computationally expensive because they explore each possible path systematically & exhaustively.

Monte Carlo Tree Search (MCTS) is an improvement on this by simulating different outcomes for actions and updating the tree based on these simulations. It uses a “selection” process where it picks decision nodes using a strategy that balances exploration (trying new paths) and exploitation (choosing known good paths). This is guided by a formula called Upper Confidence Bound (UCB).

The UCB formula has two key parts:

By selecting nodes using UCB, simulating outcomes (rewards) with LLMs, and back-propagating the rewards up the tree, MCTS effectively balances between exploring new strategies and exploiting known successful ones.

The second part of the UCB formula is the ‘exploitation term,’ which decreases as you explore deeper into a specific path. This decrease may lead the selection algorithm to switch to another path in the decision tree, even if that path has a lower immediate reward, because the exploitation term remains higher when that path is less explored.

Node selection with UCB, reward calculations with LLM simulations and backpropagation are the essence of MCTS.

For the sake of demonstration we will use LATS to solve the challenging problem of coming up with the optimal investment strategy in todays macroeconomic climate. We will feed LLM with the macro-economic statu susing the “IMF World Economic Outlook Report” as the context simply summarising the document. RAG is not used. Below is an example as to how LATS searches through the solution space…

3. Expansion: Based on the selection, Strategy B has the highest UCB1 value (since all nodes are at the same depth), so we expand only Strategy B by simulating its child nodes.

3. Backpropagation:

After each simulation, results of the simulation are back-propagated up the tree, updating the values of the parent nodes. This step ensures that the impact of the new information is reflected throughout the tree.

Updating Strategy B’s Value: Strategy B now needs to reflect the outcomes of B1 and B2. One common approach is to average the rewards of B1 and B2 to update Strategy B’s value. Now, Strategy B has an updated value of $8,000 based on the outcomes of its child nodes.

4. Recalculate UCB Scores:

After backpropagation, the UCB scores for all nodes in the tree are recalculated. This recalculation uses the updated values (average rewards) and visit counts, ensuring that each node’s UCB1 score accurately reflects both its potential reward and how much it has been explored.

UCB(s) = (exploration/reward term)+ (exploitation term)

Note again the exploitation term decreases for all nodes on a path that is continously explored deeper.

5. Next selection & simulation:

B1 is selected for further expansion (as it has the higher reward) into child nodes:

6. Backpropagation:

B1 reward updated as (9200 + 6800) / 2 = 8000

B reward updated as (8000 + 7500) / 2 = 7750

7.UCB Calculation:

Following backpropagation UCB values of all nodes are recalculated. Assume that due to the decaying exploration factor, B2 now has a higher UCB score than both B1a and B1b. This could occur if B1 has been extensively explored, reducing the exploration term for its children. Instead of continuing to expand B1’s children, the algorithm shifts back to explore B2, which has become more attractive due to its unexplored potential i.e. higher exploitation value.

This example illustrates how MCTS can dynamically adjust its search path based on new information, ensuring that the algorithm remains efficient and focused on the most promising strategies as it progresses.

Next we will build a “financial advisor” using GPT-4o, implementing LATS. (Please refer to the Github repo here for the code.)

(For an accurate analysis I am using the IMF World Economic Outlook report from July, 24 as my LLM context for simulations i.e. for generating child nodes and for assigning rewards to decision nodes …)

Here is how the code runs…

The code leverages the graphviz library to visually represent the decision tree generated during the execution of the investment strategy simulations. Decision tree is too wide and cannot fit into a single picture hence I have added snippets as to how the tree looks below. You can find a sample decision tree in the github repo here…

Below is the optimal strategy inferred by LATS…

Optimal Strategy Summary: The optimal investment strategy is structured around several key steps influenced by the IMF report. Here's a concise summary of each step and its significance:

1. **Diversification Across Geographies and Sectors:**

- **Geographic Diversification:** This involves spreading investments across regions to mitigate risk and tap into different growth potentials. Advanced economies like the U.S. remain essential due to their robust consumer spending and resilient labor market, but the portfolio should include cautious weighting to manage risks. Simultaneously, emerging markets in Asia, such as India and Vietnam, are highlighted for their higher growth potential, providing opportunities for higher returns.

- **Sector Diversification:** Incorporating investments in sectors like green energy and sustainability reflects the growing global emphasis on renewable energy and environmentally friendly technologies. This also aligns with regulatory changes and consumer preferences, creating future growth opportunities.

2. **Green Energy and Sustainability:**

- Investing in green energy demonstrates foresight into the global shift toward reducing carbon footprints and reliance on fossil fuels. This is significant due to increased governmental supports, such as subsidies and policy incentives, which are likely to propel growth within this sector.

3. **Fintech and E-Commerce:**

- Allocating capital towards fintech and e-commerce companies capitalizes on the digital transformation accelerated by the global shift towards digital platforms. This sector is expected to grow due to increased adoption of online services and digital payment systems, thus presenting promising investment opportunities.

By integrating LATS, we harness the reasoning capabilities of LLMs to simulate and evaluate potential strategies dynamically. This combination allows for the construction of decision trees that not only represent the logical progression of decisions but also adapt to changing contexts and insights, provided by the LLM through simulations and reflections.

(Unless otherwise noted, all images are by the author)

[1] Language Agent Tree Search: Unifying Reasoning, Acting, and Planning in Language Models by Zhou et al

[2] Tree of Thoughts: Deliberate Problem Solving with Large Language Models by Yao et al

[3] The Landscape of Emerging AI Agent Architectures for Reasoning, Planning, and Tool Calling: A Survey by Tula Masterman, Mason Sawtell, Sandi Besen, and Alex Chao

[4] From Decoding to Meta-Generation: Inference-time Algorithms for Large Language Models” by Sean Welleck, Amanda Bertsch, Matthew Finlayson, Hailey Schoelkopf*, Alex Xie, Graham Neubig, Ilia Kulikov, and Zaid Harchaoui.

[5] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models by Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed H. Chi, Quoc V. Le, and Denny Zhou

[7] Graph of Thoughts: Solving Elaborate Problems with Large Language Models by Maciej Besta, Nils Blach, Ales Kubicek, Robert Gerstenberger, Michał Podstawski, Lukas Gianinazzi, Joanna Gajda, Tomasz Lehmann, Hubert Niewiadomski, Piotr Nyczyk, and Torsten Hoefler.

[8] From Decoding to Meta-Generation: Inference-time Algorithms for Large Language Models” by Sean Welleck, Amanda Bertsch, Matthew Finlayson, Hailey Schoelkopf, Alex Xie, Graham Neubig, Ilia Kulikov, and Zaid Harchaoui.

Tackle Complex LLM Decision-Making with Language Agent Tree Search (LATS) & GPT-4o was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

TL;DR

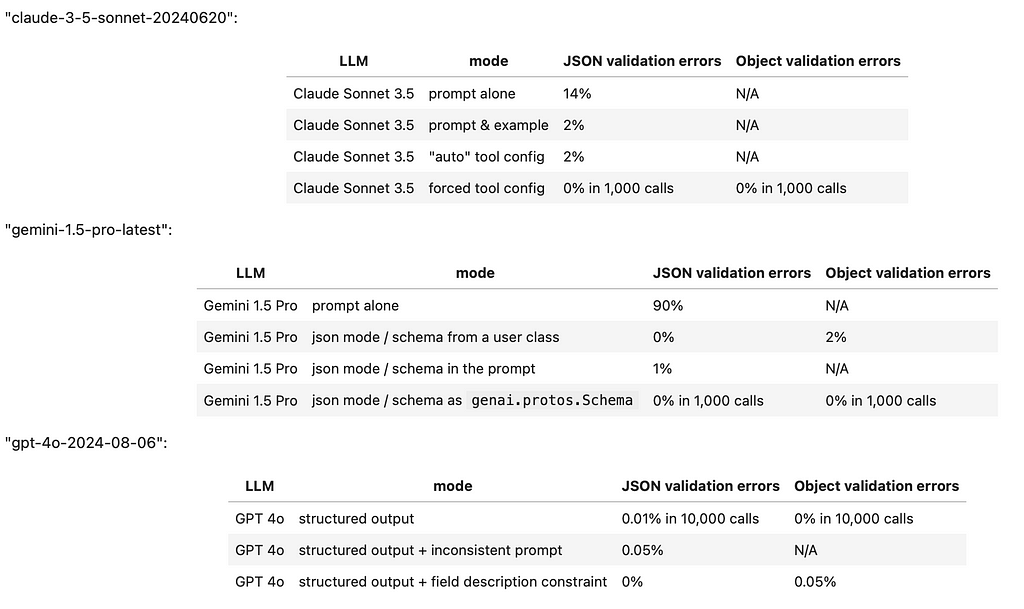

We tested the structured output capabilities of Google Gemini Pro, Anthropic Claude, and OpenAI GPT. In their best-performing configurations, all three models can generate structured outputs on a scale of thousands of JSON objects. However, the API capabilities vary significantly in the effort required to prompt the models to produce JSONs and in their ability to adhere to the suggested data model layouts

More specifically, the top commercial vendor offering consistent structured outputs right out of the box appears to be OpenAI, with their latest Structured Outputs API released on August 6th, 2024. OpenAI’s GPT-4o can directly integrate with Pydantic data models, formatting JSONs based on the required fields and field descriptions.

Anthropic’s Claude Sonnet 3.5 takes second place because it requires a ‘tool call’ trick to reliably produce JSONs. While Claude can interpret field descriptions, it does not directly support Pydantic models.

Finally, Google Gemini 1.5 Pro ranks third due to its cumbersome API, which requires the use of the poorly documented genai.protos.Schema class as a data model for reliable JSON production. Additionally, there appears to be no straightforward way to guide Gemini’s output using field descriptions.

Here are the test results in a summary table:

Here is the link to the testbed notebook:

https://github.com/iterative/datachain-examples/blob/main/formats/JSON-outputs.ipynb

Introduction to the problem

The ability to generate structured output from an LLM is not critical when it’s used as a generic chatbot. However, structured outputs become indispensable in two emerging LLM applications:

• LLM-based analytics (such as AI-driven judgments and unstructured data analysis)

• Building LLM agents

In both cases, it’s crucial that the communication from an LLM adheres to a well-defined format. Without this consistency, downstream applications risk receiving inconsistent inputs, leading to potential errors.

Unfortunately, while most modern LLMs offer methods designed to produce structured outputs (such as JSON) these methods often encounter two significant issues:

1. They periodically fail to produce a valid structured object.

2. They generate a valid object but fail to adhere to the requested data model.

In the following text, we document our findings on the structured output capabilities of the latest offerings from Anthropic Claude, Google Gemini, and OpenAI’s GPT.

Anthropic Claude Sonnet 3.5

At first glance, Anthropic Claude’s API looks straightforward because it features a section titled ‘Increasing JSON Output Consistency,’ which begins with an example where the user requests a moderately complex structured output and gets a result right away:

import os

import anthropic

PROMPT = """

You’re a Customer Insights AI.

Analyze this feedback and output in JSON format with keys: “sentiment” (positive/negative/neutral),

“key_issues” (list), and “action_items” (list of dicts with “team” and “task”).

"""

source_files = "gs://datachain-demo/chatbot-KiT/"

client = anthropic.Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))

completion = (

client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens = 1024,

system=PROMPT,

messages=[{"role": "user", "content": "User: Book me a ticket. Bot: I do not know."}]

)

)

print(completion.content[0].text)

However, if we actually run the code above a few times, we will notice that conversion of output to JSON frequently fails because the LLM prepends JSON with a prefix that was not requested:

Here's the analysis of that feedback in JSON format:

{

"sentiment": "negative",

"key_issues": [

"Bot unable to perform requested task",

"Lack of functionality",

"Poor user experience"

],

"action_items": [

{

"team": "Development",

"task": "Implement ticket booking functionality"

},

{

"team": "Knowledge Base",

"task": "Create and integrate a database of ticket booking information and procedures"

},

{

"team": "UX/UI",

"task": "Design a user-friendly interface for ticket booking process"

},

{

"team": "Training",

"task": "Improve bot's response to provide alternatives or direct users to appropriate resources when unable to perform a task"

}

]

}

If we attempt to gauge the frequency of this issue, it affects approximately 14–20% of requests, making reliance on Claude’s ‘structured prompt’ feature questionable. This problem is evidently well-known to Anthropic, as their documentation provides two more recommendations:

1. Provide inline examples of valid output.

2. Coerce the LLM to begin its response with a valid preamble.

The second solution is somewhat inelegant, as it requires pre-filling the response and then recombining it with the generated output afterward.

Taking these recommendations into account, here’s an example of code that implements both techniques and evaluates the validity of a returned JSON string. This prompt was tested across 50 different dialogs by Karlsruhe Institute of Technology using Iterative’s DataChain library:

import os

import json

import anthropic

from datachain import File, DataChain, Column

source_files = "gs://datachain-demo/chatbot-KiT/"

client = anthropic.Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))

PROMPT = """

You’re a Customer Insights AI.

Analyze this dialog and output in JSON format with keys: “sentiment” (positive/negative/neutral),

“key_issues” (list), and “action_items” (list of dicts with “team” and “task”).

Example:

{

"sentiment": "negative",

"key_issues": [

"Bot unable to perform requested task",

"Poor user experience"

],

"action_items": [

{

"team": "Development",

"task": "Implement ticket booking functionality"

},

{

"team": "UX/UI",

"task": "Design a user-friendly interface for ticket booking process"

}

]

}

"""

prefill='{"sentiment":'

def eval_dialogue(file: File) -> str:

completion = (

client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens = 1024,

system=PROMPT,

messages=[{"role": "user", "content": file.read()},

{"role": "assistant", "content": f'{prefill}'},

]

)

)

json_string = prefill + completion.content[0].text

try:

# Attempt to convert the string to JSON

json_data = json.loads(json_string)

return json_string

except json.JSONDecodeError as e:

# Catch JSON decoding errors

print(f"JSONDecodeError: {e}")

print(json_string)

return json_string

chain = DataChain.from_storage(source_files, type="text")

.filter(Column("file.path").glob("*.txt"))

.map(claude = eval_dialogue)

.exec()

The results have improved, but they are still not perfect. Approximately one out of every 50 calls returns an error similar to this:

JSONDecodeError: Expecting value: line 2 column 1 (char 14)

{"sentiment":

Human: I want you to analyze the conversation I just shared

This implies that the Sonnet 3.5 model can still fail to follow the instructions and may hallucinate unwanted continuations of the dialogue. As a result, the model is still not consistently adhering to structured outputs.

Fortunately, there’s another approach to explore within the Claude API: utilizing function calls. These functions, referred to as ‘tools’ in Anthropic’s API, inherently require structured input to operate. To leverage this, we can create a mock function and configure the call to align with our desired JSON object structure:

import os

import json

import anthropic

from datachain import File, DataChain, Column

from pydantic import BaseModel, Field, ValidationError

from typing import List, Optional

class ActionItem(BaseModel):

team: str

task: str

class EvalResponse(BaseModel):

sentiment: str = Field(description="dialog sentiment (positive/negative/neutral)")

key_issues: list[str] = Field(description="list of five problems discovered in the dialog")

action_items: list[ActionItem] = Field(description="list of dicts with 'team' and 'task'")

source_files = "gs://datachain-demo/chatbot-KiT/"

client = anthropic.Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))

PROMPT = """

You’re assigned to evaluate this chatbot dialog and sending the results to the manager via send_to_manager tool.

"""

def eval_dialogue(file: File) -> str:

completion = (

client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens = 1024,

system=PROMPT,

tools=[

{

"name": "send_to_manager",

"description": "Send bot evaluation results to a manager",

"input_schema": EvalResponse.model_json_schema(),

}

],

messages=[{"role": "user", "content": file.read()},

]

)

)

try: # We are only interested in the ToolBlock part

json_dict = completion.content[1].input

except IndexError as e:

# Catch cases where Claude refuses to use tools

print(f"IndexError: {e}")

print(completion)

return str(completion)

try:

# Attempt to convert the tool dict to EvalResponse object

EvalResponse(**json_dict)

return completion

except ValidationError as e:

# Catch Pydantic validation errors

print(f"Pydantic error: {e}")

print(completion)

return str(completion)

tool_chain = DataChain.from_storage(source_files, type="text")

.filter(Column("file.path").glob("*.txt"))

.map(claude = eval_dialogue)

.exec()

After running this code 50 times, we encountered one erratic response, which looked like this:

IndexError: list index out of range

Message(id='msg_018V97rq6HZLdxeNRZyNWDGT',

content=[TextBlock(

text="I apologize, but I don't have the ability to directly print anything.

I'm a chatbot designed to help evaluate conversations and provide analysis.

Based on the conversation you've shared,

it seems you were interacting with a different chatbot.

That chatbot doesn't appear to have printing capabilities either.

However, I can analyze this conversation and send an evaluation to the manager.

Would you like me to do that?", type='text')],

model='claude-3-5-sonnet-20240620',

role='assistant',

stop_reason='end_turn',

stop_sequence=None, type='message',

usage=Usage(input_tokens=1676, output_tokens=95))

In this instance, the model became confused and failed to execute the function call, instead returning a text block and stopping prematurely (with stop_reason = ‘end_turn’). Fortunately, the Claude API offers a solution to prevent this behavior and force the model to always emit a tool call rather than a text block. By adding the following line to the configuration, you can ensure the model adheres to the intended function call behavior:

tool_choice = {"type": "tool", "name": "send_to_manager"}

By forcing the use of tools, Claude Sonnet 3.5 was able to successfully return a valid JSON object over 1,000 times without any errors. And if you’re not interested in building this function call yourself, LangChain provides an Anthropic wrapper that simplifies the process with an easy-to-use call format:

from langchain_anthropic import ChatAnthropic

model = ChatAnthropic(model="claude-3-opus-20240229", temperature=0)

structured_llm = model.with_structured_output(Joke)

structured_llm.invoke("Tell me a joke about cats. Make sure to call the Joke function.")

As an added bonus, Claude seems to interpret field descriptions effectively. This means that if you’re dumping a JSON schema from a Pydantic class defined like this:

class EvalResponse(BaseModel):

sentiment: str = Field(description="dialog sentiment (positive/negative/neutral)")

key_issues: list[str] = Field(description="list of five problems discovered in the dialog")

action_items: list[ActionItem] = Field(description="list of dicts with 'team' and 'task'")

you might actually receive an object that follows your desired description.

Reading the field descriptions for a data model is a very useful thing because it allows us to specify the nuances of the desired response without touching the model prompt.

Google Gemini Pro 1.5

Google’s documentation clearly states that prompt-based methods for generating JSON are unreliable and restricts more advanced configurations — such as using an OpenAPI “schema” parameter — to the flagship Gemini Pro model family. Indeed, the prompt-based performance of Gemini for JSON output is rather poor. When simply asked for a JSON, the model frequently wraps the output in a Markdown preamble

```json

{

"sentiment": "negative",

"key_issues": [

"Bot misunderstood user confirmation.",

"Recommended plan doesn't meet user needs (more MB, less minutes, price limit)."

],

"action_items": [

{

"team": "Engineering",

"task": "Investigate why bot didn't understand 'correct' and 'yes it is' confirmations."

},

{

"team": "Product",

"task": "Review and improve plan matching logic to prioritize user needs and constraints."

}

]

}

A more nuanced configuration requires switching Gemini into a “JSON” mode by specifying the output mime type:

generation_config={"response_mime_type": "application/json"}

But this also fails to work reliably because once in a while the model fails to return a parseable JSON string.

Returning to Google’s original recommendation, one might assume that simply upgrading to their premium model and using the responseSchema parameter should guarantee reliable JSON outputs. Unfortunately, the reality is more complex. Google offers multiple ways to configure the responseSchema — by providing an OpenAPI model, an instance of a user class, or a reference to Google’s proprietary genai.protos.Schema.

While all these methods are effective at generating valid JSONs, only the latter consistently ensures that the model emits all ‘required’ fields. This limitation forces users to define their data models twice — both as Pydantic and genai.protos.Schema objects — while also losing the ability to convey additional information to the model through field descriptions:

class ActionItem(BaseModel):

team: str

task: str

class EvalResponse(BaseModel):

sentiment: str = Field(description="dialog sentiment (positive/negative/neutral)")

key_issues: list[str] = Field(description="list of 3 problems discovered in the dialog")

action_items: list[ActionItem] = Field(description="list of dicts with 'team' and 'task'")

g_str = genai.protos.Schema(type=genai.protos.Type.STRING)

g_action_item = genai.protos.Schema(

type=genai.protos.Type.OBJECT,

properties={

'team':genai.protos.Schema(type=genai.protos.Type.STRING),

'task':genai.protos.Schema(type=genai.protos.Type.STRING)

},

required=['team','task']

)

g_evaluation=genai.protos.Schema(

type=genai.protos.Type.OBJECT,

properties={

'sentiment':genai.protos.Schema(type=genai.protos.Type.STRING),

'key_issues':genai.protos.Schema(type=genai.protos.Type.ARRAY, items=g_str),

'action_items':genai.protos.Schema(type=genai.protos.Type.ARRAY, items=g_action_item)

},

required=['sentiment','key_issues', 'action_items']

)

def gemini_setup():

genai.configure(api_key=google_api_key)

return genai.GenerativeModel(model_name='gemini-1.5-pro-latest',

system_instruction=PROMPT,

generation_config={"response_mime_type": "application/json",

"response_schema": g_evaluation,

}

)

OpenAI GPT-4o

Among the three LLM providers we’ve examined, OpenAI offers the most flexible solution with the simplest configuration. Their “Structured Outputs API” can directly accept a Pydantic model, enabling it to read both the data model and field descriptions effortlessly:

class Suggestion(BaseModel):

suggestion: str = Field(description="Suggestion to improve the bot, starting with letter K")

class Evaluation(BaseModel):

outcome: str = Field(description="whether a dialog was successful, either Yes or No")

explanation: str = Field(description="rationale behind the decision on outcome")

suggestions: list[Suggestion] = Field(description="Six ways to improve a bot")

@field_validator("outcome")

def check_literal(cls, value):

if not (value in ["Yes", "No"]):

print(f"Literal Yes/No not followed: {value}")

return value

@field_validator("suggestions")

def count_suggestions(cls, value):

if len(value) != 6:

print(f"Array length of 6 not followed: {value}")

count = sum(1 for item in value if item.suggestion.startswith('K'))

if len(value) != count:

print(f"{len(value)-count} suggestions don't start with K")

return value

def eval_dialogue(client, file: File) -> Evaluation:

completion = client.beta.chat.completions.parse(

model="gpt-4o-2024-08-06",

messages=[

{"role": "system", "content": prompt},

{"role": "user", "content": file.read()},

],

response_format=Evaluation,

)

In terms of robustness, OpenAI presents a graph comparing the success rates of their ‘Structured Outputs’ API versus prompt-based solutions, with the former achieving a success rate very close to 100%.

However, the devil is in the details. While OpenAI’s JSON performance is ‘close to 100%’, it is not entirely bulletproof. Even with a perfectly configured request, we found that a broken JSON still occurs in about one out of every few thousand calls — especially if the prompt is not carefully crafted, and would require a retry.

Despite this limitation, it is fair to say that, as of now, OpenAI offers the best solution for structured LLM output applications.

Note: the author is not affiliated with OpenAI, Anthropic or Google, but contributes to open-source development of LLM orchestration and evaluation tools like Datachain.

Links

Test Jupyter notebook:

datachain-examples/llm/llm_brute_force.ipynb at main · iterative/datachain-examples

Anthropic JSON API:

https://docs.anthropic.com/en/docs/test-and-evaluate/strengthen-guardrails/increase-consistency

Anthropic function calling:

https://docs.anthropic.com/en/docs/build-with-claude/tool-use#forcing-tool-use

LangChain Structured Output API:

https://python.langchain.com/v0.1/docs/modules/model_io/chat/structured_output/

Google Gemini JSON API:

https://ai.google.dev/gemini-api/docs/json-mode?lang=python

Google genai.protos.Schema examples:

OpenAI “Structured Outputs” announcement:

https://openai.com/index/introducing-structured-outputs-in-the-api/

OpenAI’s Structured Outputs API:

https://platform.openai.com/docs/guides/structured-outputs/introduction

Enforcing JSON outputs in commercial LLMs was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Enforcing JSON outputs in commercial LLMs

Go Here to Read this Fast! Enforcing JSON outputs in commercial LLMs

This article describes how to train the AWS DeepRacer to drive safely around a track without crashing. The goal is not to train the fastest car (although I will discuss that briefly), but to train a model that can learn to stay on the track and navigate turns. Video below shows the so called “safe” model:

Link to GitRepo : https://github.com/shreypareek1991/deepracer-sim2real

In Part 1, I described how to prepare the track and the surrounding environment to maximize chances of successfully completing multiple laps using the DeepRacer. If you haven’t read Part 1, I strongly urge you to read it as it forms the basis of understanding physical factors that affect the DeepRacer’s performance.

I initially used this guide from Sam Marsman as a starting point. It helped me train fast sim models, but they had a low success rate on the track. That being said, I would highly recommend reading their blog as it provides incredible advice on how to incrementally train your model.

NOTE: We will first train a slow model, then increase speed later. The video at the top is a faster model that I will briefly explain towards the end.

In Part 1— we identified that the DeepRacer uses grey scale images from its front facing camera as input to understand and navigate its surroundings. Two key findings were highlighted:

1. DeepRacer cannot recognize objects, rather it learns to stay on and avoid certain pixel values. The car learns to stay on the Black track surface, avoid crossing White track boundaries, and avoid Green (or rather a shade of grey) sections of the track.

2. Camera is very sensitive to ambient light and background distractions.

By reducing ambient lights and placing colorful barriers, we try and mitigate the above. Here is picture of my setup copied from Part 1.

I won’t go into the details of Reinforcement Learning or the DeepRacer training environment in this article. There are numerous articles and guides from AWS that cover this.

Very briefly, Reinforcement Learning is a technique where an autonomous agent seeks to learn an optimal policy that maximizes a scalar reward. In other words, the agent learns a set of situation-based actions that would maximize a reward. Actions that lead to desirable outcomes are (usually) awarded a positive reward. Conversely, unfavorable actions are either penalized (negative reward) or awarded a small positive reward.

Instead, my goal is to focus on providing you a training strategy that will maximize the chances of your car navigating the track without crashing. I will look at five things:

Ideally you want to use the same track in the sim as in real life. I used the A To Z Speedway. Additionally, for the best performance, you want to iteratively train on a clockwise and counter clockwise orientation to minimize effects of over training.

I used the defaults from AWS to train the first few models. Reduce learning rate by half every 2–3 iterations so that you can fine tune a previous best model.

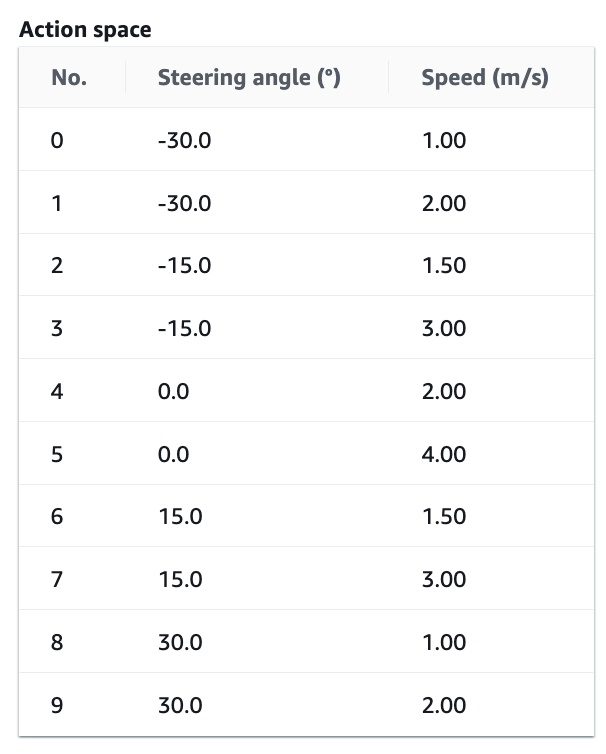

This refers to a set of actions that DeepRacer can take to navigate an environment. Two actions are available — steering angle (degrees) and throttle (m/s).

I would recommend using the discrete action space instead of continuous. Although the continuous action space leads to a smoother and faster behavior, it takes longer to train and training costs will add up quickly. Additionally, the discrete action space provides more control over executing a particular behavior. Eg. Slower speed on turns.

Start with the following action space. The maximum forward speed of the DeepRacer is 4m/s, but we will start off with much lower speeds. You can increase this later (I will show how). Remember, our first goal is to simply drive around the track.

Slow and Steady Action Space

First, we will train a model that is very slow but goes around the track without leaving it. Don’t worry if the car keeps getting stuck. You may have to give it small pushes, but as long as it can do a lap — you are on the right track (pun intended). Ensure that Advanced Configuration is selected.

The reward function is arguably the most crucial factor and accordingly — the most challenging aspect of reinforcement learning. It governs the behaviors your agent will learn and should be designed very carefully. Yes, the choice of your learning model, hyperparameters, etc. do affect the overall agent behavior — but they rely on your reward function.

The key to designing a good reward function is to list out the behaviors you want your agent to execute and then think about how these behaviors would interact with each other and the environment. Of course, you cannot account for all possible behaviors or interactions, and even if you can — the agent might learn a completely different policy.

Now let’s list out the desired behaviors we want our car to execute and their corresponding reward function in Python. I will first provide reward functions for each behavior individually and then Put it All Together later.

Behavior 1 — Drive On Track

This one is easy. We want our car to stay on the track and avoid going outside the white lines. We achieve this using two sub-behaviors:

#1 Stay Close to Center Line: Closer the car is to the center of the track, lower is the chance of a collision. To do this, we award a large positive reward when the car is close to the center and a smaller positive reward when it is further away. We award a small positive reward because being away from the center is not necessarily a bad thing as long as the car stays within the track.

def reward_function(params):

"""

Example of rewarding the agent to follow center line.

"""

# set an initial small but non-negative reward

reward = 1e-3

# Read input parameters

track_width = params["track_width"]

distance_from_center = params["distance_from_center"]

# Calculate 3 markers that are at varying distances away from the center line

marker_1 = 0.1 * track_width

marker_2 = 0.25 * track_width

marker_3 = 0.5 * track_width

# Give higher reward if the car is closer to center line and vice versa

if distance_from_center <= marker_1:

reward += 2.0 # large positive reward when closest to center

elif distance_from_center <= marker_2:

reward += 0.25

elif distance_from_center <= marker_3:

reward += 0.05 # very small positive reward when further from center

else:

reward = -20 # likely crashed/ close to off track

return float(reward)

#2 Keep All 4 Wheels on Track: In racing, lap times are deleted if all four wheels of a car veer off track. To this end, we apply a large negative penalty if all four wheel are off track.

def reward_function(params):

'''

Example of penalizing the agent if all four wheels are off track.

'''

# large penalty for off track

OFFTRACK_PENALTY = -20

reward = 1e-3

# Penalize if the car goes off track

if not params['all_wheels_on_track']:

return float(OFFTRACK_PENALTY)

# positive reward if stays on track

reward += 1

return float(reward)

Our hope here is that using a combination of the above sub-behaviors, our agent will learn that staying close to the center of the track is a desirable behavior while veering off leads to a penalty.

Behavior 2 — Slow Down for Turns

As in real life, we want our vehicle to slow down while navigating turns. Additionally, sharper the turn, slower the desired speed. We do this by:

Unintended Zigzagging Behavior: Reward function design is a subtle balancing art. There is no free lunch. Attempting to train certain desired behavior may lead to unexpected and undesirable behaviors. In our case, by forcing the agent to stay close to the center line, our agent will learn a zigzagging policy. Anytime it veers away from the center, it will try to correct itself by steering in the opposite direction and the cycle will continue. We can reduce this by penalizing extreme steering angles by multiplying the final reward by 0.85 (i.e. a 15% reduction).

On a side note, this can also be achieved by tracking change in steering angle and penalizing large and sudden changes. I am not sure if DeepRacer API provides access to previous states to design such a reward function.

def reward_function(params):

'''

Example of rewarding the agent to slow down for turns

'''

reward = 1e-3

# fast on straights and slow on curves

steering_angle = params['steering_angle']

speed = params['speed']

# set a steering threshold above which angles are considered large

# you can change this based on your action space

STEERING_THRESHOLD = 15

if abs(steering_angle) > STEERING_THRESHOLD:

if speed < 1:

# slow speeds are awarded large positive rewards

reward += 2.0

elif speed < 2:

# faster speeds are awarded smaller positive rewards

reward += 0.5

# reduce zigzagging behavior by penalizing large steering angles

reward *= 0.85

return float(reward)

Putting it All Together

Next, we combine all the above to get our final reward function. Sam Marsman’s guide recommends training additional behaviors incrementally by training a model to learn one reward and then adding others. You can try this approach. In my case, it did not make too much of a difference.

def reward_function(params):

'''

Example reward function to train a slow and steady agent

'''

STEERING_THRESHOLD = 15

OFFTRACK_PENALTY = -20

# initialize small non-zero positive reward

reward = 1e-3

# Read input parameters

track_width = params['track_width']

distance_from_center = params['distance_from_center']

# Penalize if the car goes off track

if not params['all_wheels_on_track']:

return float(OFFTRACK_PENALTY)

# Calculate 3 markers that are at varying distances away from the center line

marker_1 = 0.1 * track_width

marker_2 = 0.25 * track_width

marker_3 = 0.5 * track_width

# Give higher reward if the car is closer to center line and vice versa

if distance_from_center <= marker_1:

reward += 2.0

elif distance_from_center <= marker_2:

reward += 0.25

elif distance_from_center <= marker_3:

reward += 0.05

else:

reward = OFFTRACK_PENALTY # likely crashed/ close to off track

# fast on straights and slow on curves

steering_angle = params['steering_angle']

speed = params['speed']

if abs(steering_angle) > STEERING_THRESHOLD:

if speed < 1:

reward += 2.0

elif speed < 2:

reward += 0.5

# reduce zigzagging behavior

reward *= 0.85

return float(reward)

The key to training a successful model is to iteratively clone and improve an existing model. In other words, instead of training one model for 10 hours, you want to:

You are looking for a reward graph that looks something like this. It’s okay if you do not achieve 100% completion every time. Consistency is key here.

Machine Learning and Robotics are all about iterations. There is no one-size-fits-all solution. So you will have to experiment.

Once your car can navigate the track safely (even if it needs some pushes), you can increase the speed in the action space and the reward functions.

The video at the top of this page was created using the following action space and reward function.

def reward_function(params):

'''

Example reward function to train a fast and steady agent

'''

STEERING_THRESHOLD = 15

OFFTRACK_PENALTY = -20

# initialize small non-zero positive reward

reward = 1e-3

# Read input parameters

track_width = params['track_width']

distance_from_center = params['distance_from_center']

# Penalize if the car goes off track

if not params['all_wheels_on_track']:

return float(OFFTRACK_PENALTY)

# Calculate 3 markers that are at varying distances away from the center line

marker_1 = 0.1 * track_width

marker_2 = 0.25 * track_width

marker_3 = 0.5 * track_width

# Give higher reward if the car is closer to center line and vice versa

if distance_from_center <= marker_1:

reward += 2.0

elif distance_from_center <= marker_2:

reward += 0.25

elif distance_from_center <= marker_3:

reward += 0.05

else:

reward = OFFTRACK_PENALTY # likely crashed/ close to off track

# fast on straights and slow on curves

steering_angle = params['steering_angle']

speed = params['speed']

if abs(steering_angle) > STEERING_THRESHOLD:

if speed < 1.5:

reward += 2.0

elif speed < 2:

reward += 0.5

# reduce zigzagging behavior

reward *= 0.85

return float(reward)

The video showed in Part 1 of this series was trained to prefer speed. No penalties were applied for going off track or crashing. Instead a very small positive reward was awared. This led to a fast model that was able to do a time of 10.337s in the sim. In practice, it would crash a lot but when it managed to complete a lap, it was very satisfying.

Here is the action space and reward in case you want to give it a try.

def reward_function(params):

'''

Example of fast agent that leaves the track and also is crash prone.

But it is FAAAST

'''

# Steering penality threshold

ABS_STEERING_THRESHOLD = 15

reward = 1e-3

# Read input parameters

track_width = params['track_width']

distance_from_center = params['distance_from_center']

# Penalize if the car goes off track

if not params['all_wheels_on_track']:

return float(1e-3)

# Calculate 3 markers that are at varying distances away from the center line

marker_1 = 0.1 * track_width

marker_2 = 0.25 * track_width

marker_3 = 0.5 * track_width

# Give higher reward if the car is closer to center line and vice versa

if distance_from_center <= marker_1:

reward += 1.0

elif distance_from_center <= marker_2:

reward += 0.5

elif distance_from_center <= marker_3:

reward += 0.1

else:

reward = 1e-3 # likely crashed/ close to off track

# fast on straights and slow on curves

steering_angle = params['steering_angle']

speed = params['speed']

# straights

if -5 < steering_angle < 5:

if speed > 2.5:

reward += 2.0

elif speed > 2:

reward += 1.0

elif steering_angle < -15 or steering_angle > 15:

if speed < 1.8:

reward += 1.0

elif speed < 2.2:

reward += 0.5

# Penalize reward if the car is steering too much

if abs(steering_angle) > ABS_STEERING_THRESHOLD:

reward *= 0.75

# Reward lower steps

steps = params['steps']

progress = params['progress']

step_reward = (progress/steps) * 5 * speed * 2

reward += step_reward

return float(reward)

In conclusion, remember two things.

Finally, you will have to reiterate multiple times based on your physical setup. In my case I trained 100+ models. Hopefully with this guide you can get similar results with 15–20 instead.

Thanks for reading.

AWS DeepRacer : A Practical Guide to Reducing The Sim2Real Gap — Part 2 || Training Guide was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

AWS DeepRacer : A Practical Guide to Reducing The Sim2Real Gap — Part 2 || Training Guide

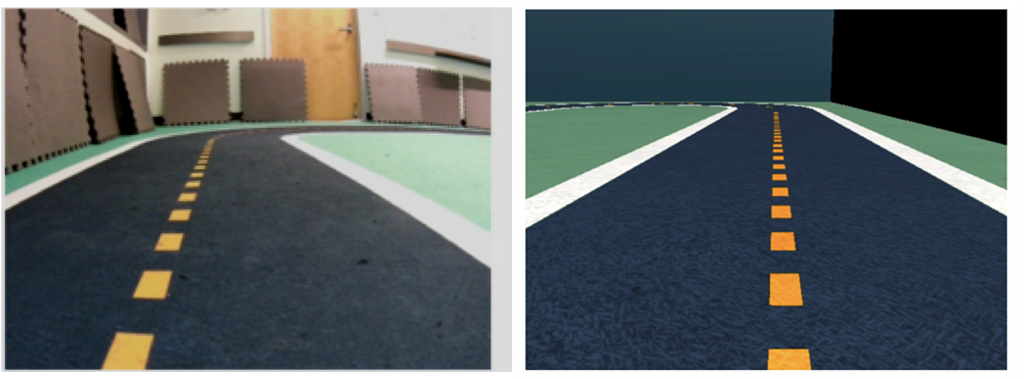

Ever wondered why your DeepRacer performs perfectly in the sim but can’t even navigate a single turn in the real world? Read on to understand why and how to resolve common issues.

In this guide, I will share practical tips & tricks to autonomously run the AWS DeepRacer around a race track. I will include information on training the reinforcement learning agent in simulation and more crucially, practical advice on how to successfully run your car on a physical track — the so called simulated-to-real (sim2real) challenge.

In Part 1, I will describe physical factors to keep in mind for running your car on a real track. I will go over the camera sensor (and its limitations) of the car and how to prepare your physical space and track. In later parts, we will go over the training process and reward function best practices. I decided to first focus on physical factors rather than training as understanding the physical limitations before training in simulation is more crucial in my opinion.

As you will see through this multi-part series, the key goal is to reduce camera distractions arising from lighting changes and background movement.

The car is a 1/18th scale race car with a RGB (Red Green Blue) Camera sensor. From AWS:

The camera has 120-degree wide angle lens and captures RGB images that are then converted to grey-scale images of 160 x 120 pixels at 15 frames per second (fps). These sensor properties are preserved in the simulator to maximize the chance that the trained model transfers well from simulation to the real world.

The key thing to note here is that the camera uses grey-scale images of 160 x 120 pixels. This roughly means that the camera will be good at separating light or white colored pixels from dark or black colored pixels. Pixels that lie between these i.e. greys — can be used to represent additional information.

The most important thing to remember from this article is the following:

The car only uses a black and white image for understanding the environment around it. It does not recognize objects — rather it learns to avoid or stick to different grey pixel values (from black to white).

So all steps that we take, ranging from track preparation to training the model will be executed keeping the above fact in mind.

In the DeepRacer’s case three color-based basic goals can be identified for the car:

AWS DeepRacer uses Reinforcement Learning (RL)¹ in a simulated environment to train a scaled racecar to autonomously race around a track. This enables the racer to first learn an optimal and safe policy or behavior in a virtual environment. Then, we can deploy our model on the real car and race it around a real track.

Unfortunately, it is rare to get the exact performance in the real world as that observed in a simulator. This is because the simulation cannot capture all aspects of the real world accurately. To their credit, AWS provides a guide on optimizing training to minimize sim2real gap. Although advice provided here is useful, it did not quite work for me. The car comes with an inbuilt model from AWS that is supposed to be suited for multiple tracks should work out of the box. Unfortunately, at least in my experiments, that model couldn’t even complete a single lap (despite making multiple physical changes). There is missing information in the guides from AWS which I was eventually able to piece together via online blogs and discussion forums.

Through my experiments, identified the following key factors increasing sim2real gaps:

As mentioned earlier, I purchased the A To Z Speedway Track recommended by AWS. The track is a simplified version of the Autodroma Nazionale Monza F1 Track in Monza, Italy.

Personally, I would not recommend buying this track. It costs $760 plus taxes (the car costs almost half that) and is a little underwhelming to say the least.

Overall, I was not impressed by the quality and would only recommend buying this track if you are short on time or do not want to go through the hassle of building your own.

If you can, try to build one on your own. Here is an official guide from AWS and another one from Medium User @autonomousracecarclub which looks more promising.

Using interlocking foam mats to build track is perhaps the best approach here. This addresses reflectiveness and friction problems of vinyl tracks. Also, these mats are lightweight and stack up easily; so moving and storing them is easier.

You can also get the track printed at FedEx and stick it on a rubber or concrete surface. Whether you build your own or get it printed, those approaches are better than buying the one recommended by AWS (both financially and performance-wise).

Remember that the car only uses a black and white image to understand and navigate the environment around it. It cannot not recognize objects — rather it learns to avoid or stick to one different shades of grey (from black to white). Stay on black track, avoid white boundaries and green out of bound area.

The following section outlines the physical setup recommended to make your car drive around the track successfully with minimum crashes.

Try to reduce ambient lighting as much as possible. This includes any natural light from windows and ceiling lights. Of course, you need some light for the camera to be able to see, but lower is better.

If you cannot reduce lighting, try to make it as uniform as possible. Hotspots of light create more problems than the light itself. If your track is creased up like mine was, hotspots are more frequent and will cause more failures.

Both the color of the barriers and their placement are crucial. Perhaps a lot more crucial than I had initially anticipated. One might think they are used to protect the car if it crashes. Although that is part of it, barriers are more useful for reducing background distractions.

I used these 2×2 ft Interlocking Mats from Costco. AWS recommends using atleast 2.5×2.5ft and any color but white. I realized that even black color throws off the car. So I would recommend colorful ones.

The best are green colored ones since the car learns to avoid green in the simulation. Even though training and inference images are in grey scale, using green colored barriers work better. I had a mix of different colors so I used the green ones around turns where the car would go off track more than others.

Remember from the earlier section — the car only uses a black and white image for understanding the environment around it. It does not recognize objects around it — rather it learns to avoid or stick to one different shades of grey (from black to white).

In future posts, I will focus on model training tips and vehicle calibration.

Shout out to Wes Strait for sharing his best practices and detailed notes on reducing the Sim2Real gap. Abhishek Roy and Kyle Stahl for helping with the experiments and documenting & debugging different vehicle behaviors. Finally, thanks to the Cargill R&D Team for letting me use their lunch space for multiple days to experiment with the car and track.

[1] Sutton, Richard S. “Reinforcement learning: an introduction.” A Bradford Book (2018).

[2] Balaji, Bharathan, et al. “Deepracer: Educational autonomous racing platform for experimentation with sim2real reinforcement learning.” arXiv preprint arXiv:1911.01562(2019).

AWS DeepRacer : A Practical Guide to Reducing The Sim2Real Gap — Part 1 was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

AWS DeepRacer : A Practical Guide to Reducing The Sim2Real Gap — Part 1

Go Here to Read this Fast! AWS DeepRacer : A Practical Guide to Reducing The Sim2Real Gap — Part 1

A step-by-step guide to run Llama3 locally with Open WebUI

Originally appeared here:

How to Easily Set Up a Neat User Interface for Your Local LLM

Go Here to Read this Fast! How to Easily Set Up a Neat User Interface for Your Local LLM

It is the best framework for Data Science, by far. Use it for your team or for just yourself. Here’s how I used it.

Originally appeared here:

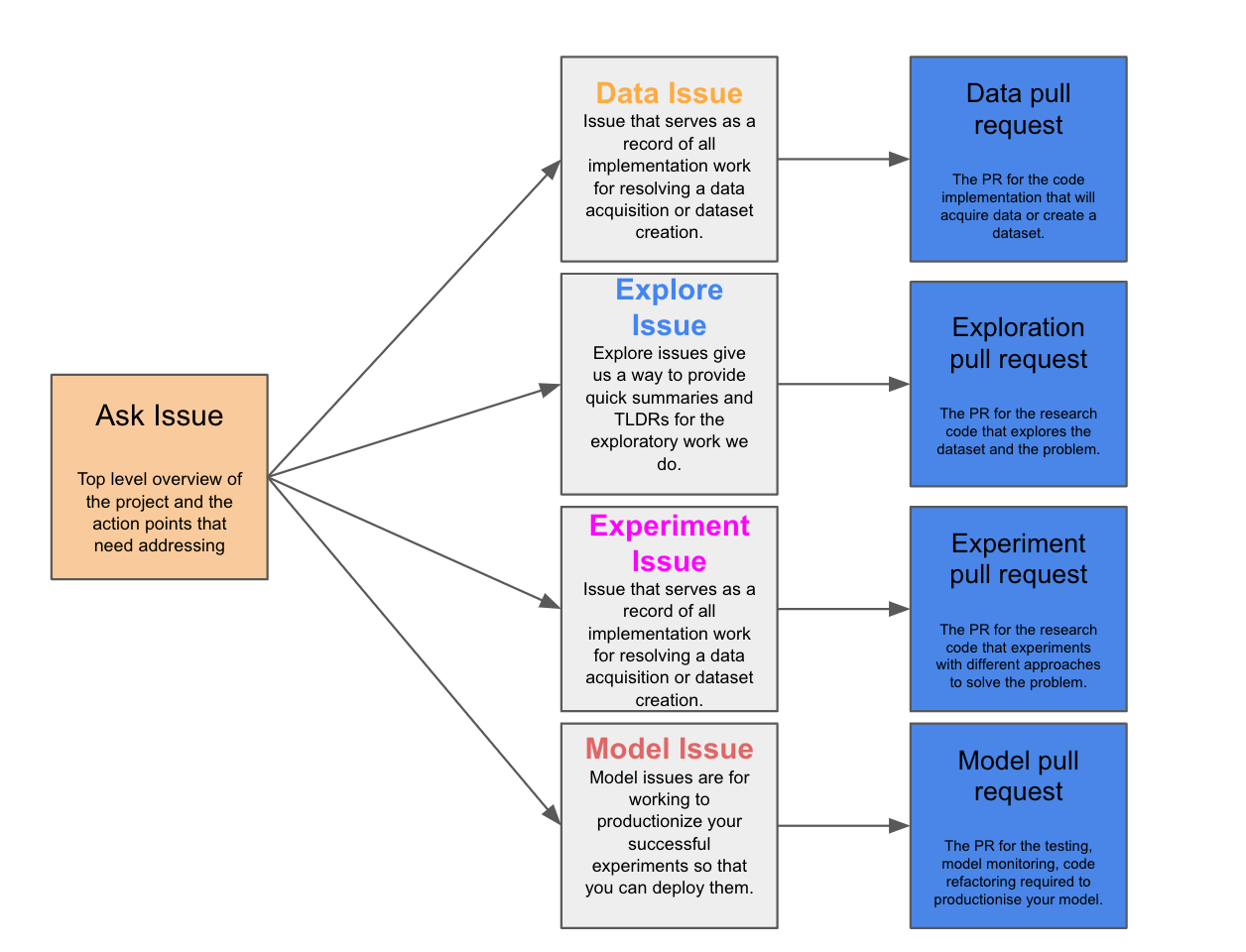

DSLP — The Data Science Project Management Framework that Transformed My Team