Recommendations on building robust memory capabilities based on experimentation with Autogen’s “Teachable Agents”

Memory is undoubtedly becoming a crucial aspect of Agentic AI. As the use cases for AI Agents grow in complexity, so does the need for these agents to learn from past experiences, utilize stored business-specific knowledge, and adapt to evolving scenarios based on accumulated information.

In my previous article, “Memory in AI: Key Benefits and Investment Considerations,” I explored why memory is pivotal for AI, discussing its role in recall, reasoning, and continuous learning. This piece, however, will dive directly into the implementation of memory by examining its impact through the “teachability” functionality in the popular agent framework, Autogen.

Note: While this article is technical in nature, it offers value for both technical professionals and business leaders looking to evaluate the role of memory in Agentic AI systems. I’ve structured it so that readers can skip the code sections and still grasp how memory can augment an AI system’s responses. If you don’t wish to follow the code, you can read the description of each step to get a sense of the process… or just the key findings and recommendations section.

Key Findings and Recommendations

My exploration of Autogen’s Teachable Agents revealed both their potential and their limitations in handling simple and complex memory tasks.

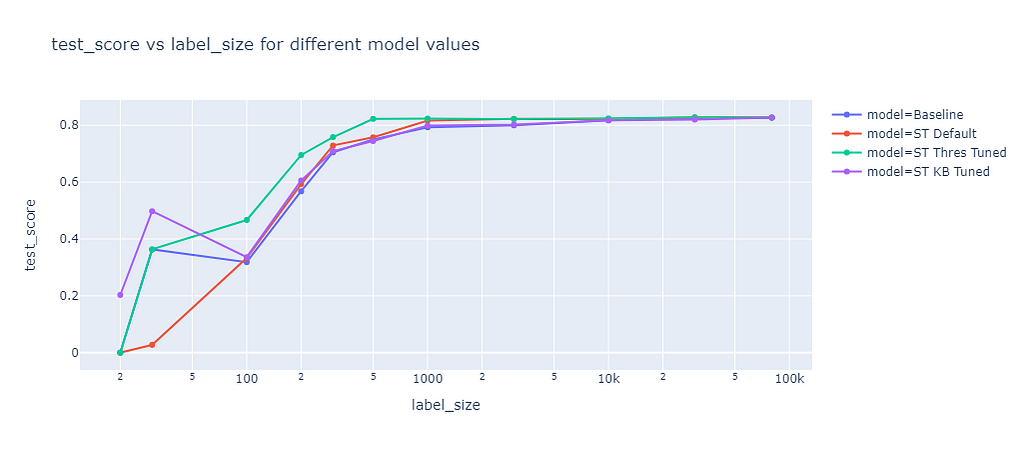

Out of the box, Autogen’s TeachableAgent performs less impressively than expected. The agent’s reasoning conflates separate memories in an unproductive way, and the included retrieval mechanism is not set up for the multi-step searches needed to answer complex questions. This suggests that using Autogen’s Teachable Agents requires substantial customization, both to supplement their reasoning capabilities and to achieve more sophisticated memory retrieval.

To build more robust memory capabilities, it’s crucial to implement multi-step search functionality. A single memory search often falls short of providing the comprehensive information needed for complex tasks. Implementing a series of interconnected searches could significantly enhance the agent’s ability to gather and synthesize relevant information.
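Here is a minimal sketch of the idea in plain Python. It assumes a toy in-memory memo store and simple keyword-overlap retrieval. The `MEMOS` list and the `tokens`, `search`, and `multi_step_search` helpers are hypothetical and not part of Autogen. The first search finds the guideline for a power bracket. The components named in that guideline then drive a second round of searches for the matching equipment lists. In a real agent, a language model would typically write the follow-up queries.

```python
import re

# Hypothetical memo store: each memo is one short piece of stored knowledge.
MEMOS = [
    "Guideline 25-50% constraint: keep engine components and transmission parts online",
    "Guideline 0-25% constraint: keep engine components, transmission parts, brake system parts online",
    "Equipment for engine components: CNC machining centers, CNC lathes, boring machines",
    "Equipment for transmission parts: gear cutting machines, broaching machines",
    "Equipment for brake system parts: high-precision CNC lathes, brake dynamometers",
]

COMPONENTS = ["engine components", "transmission parts", "brake system parts"]


def tokens(text: str) -> set[str]:
    """Lower-case word tokens; ranges such as '25-50%' stay together."""
    return set(re.findall(r"[a-z0-9%-]+", text.lower()))


def search(query: str, k: int = 1) -> list[str]:
    """Single-step retrieval: the k memos sharing the most tokens with the query."""
    q = tokens(query)
    return sorted(MEMOS, key=lambda memo: len(q & tokens(memo)), reverse=True)[:k]


def multi_step_search(first_query: str) -> list[str]:
    """Two-hop retrieval: the first hit decides which follow-up searches to run."""
    guideline = search(first_query, k=1)[0]
    context = [guideline]
    for component in COMPONENTS:
        if component in guideline.lower():
            # Follow-up search built from the content of the first result.
            context.extend(search(f"equipment for {component}", k=1))
    return context


print(multi_step_search("guideline for a 25-50% constraint"))
```

With the same query, a single `search(...)` call returns only the 25–50% guideline. The two-hop version also returns the engine and transmission equipment memos, which is the combination the complex question in this article needs.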

The “teachability” feature, while powerful, should be approached with caution. Continuous activation without oversight risks data poisoning and the compromise of trusted information sources. Business leaders and solution architects should consider a human-in-the-loop approach that lets users approve what the system learns, rather than treating every inference as ground truth the system should learn from. The lack of such a check in Autogen’s current Teachable Agent design could expose users to significant risks associated with unchecked learning.
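The sketch below shows one possible approval gate. It assumes a hypothetical `GatedMemory` wrapper placed in front of whatever store the agent writes to; this is not an Autogen API. A memo is persisted only if it comes from a trusted source and a reviewer approves it. Everything else is logged for audit instead of being learned.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GatedMemory:
    """Hypothetical memory wrapper that stores a memo only after review."""

    approve: Callable[[str, str], bool]  # reviewer: (source, memo) -> accept?
    trusted_sources: set[str] = field(default_factory=set)
    memos: list[str] = field(default_factory=list)
    rejected: list[tuple[str, str]] = field(default_factory=list)

    def propose(self, source: str, memo: str) -> bool:
        """Persist the memo if the source is trusted and the reviewer approves; otherwise log it."""
        if source in self.trusted_sources and self.approve(source, memo):
            self.memos.append(memo)
            return True
        self.rejected.append((source, memo))
        return False


def ask_human(source: str, memo: str) -> bool:
    """Interactive reviewer for real use: a person confirms each memo."""
    answer = input(f"Store memo from {source!r}: {memo!r}? [y/N] ")
    return answer.strip().lower() == "y"


# Demo with a stub reviewer that approves everything; untrusted sources are still blocked.
memory = GatedMemory(approve=lambda source, memo: True, trusted_sources={"ops_manager"})
memory.propose("ops_manager", "Above a 50% constraint, keep engine-component machinery online.")
memory.propose("unknown_user", "Brake system parts are never a priority.")
print(memory.memos)
```

In practice you would pass `approve=ask_human` or a policy function, and route every memo the agent proposes through `propose` before it reaches the memory store.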

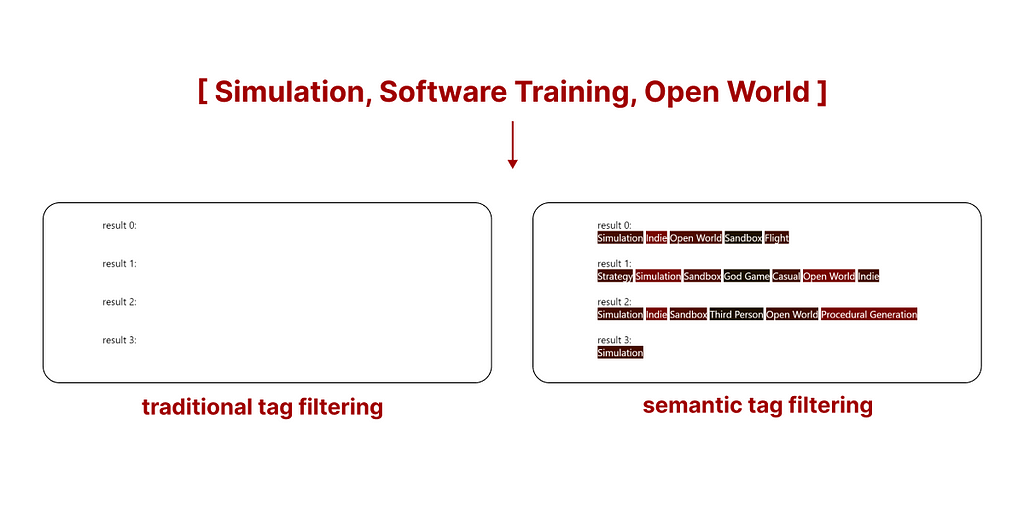

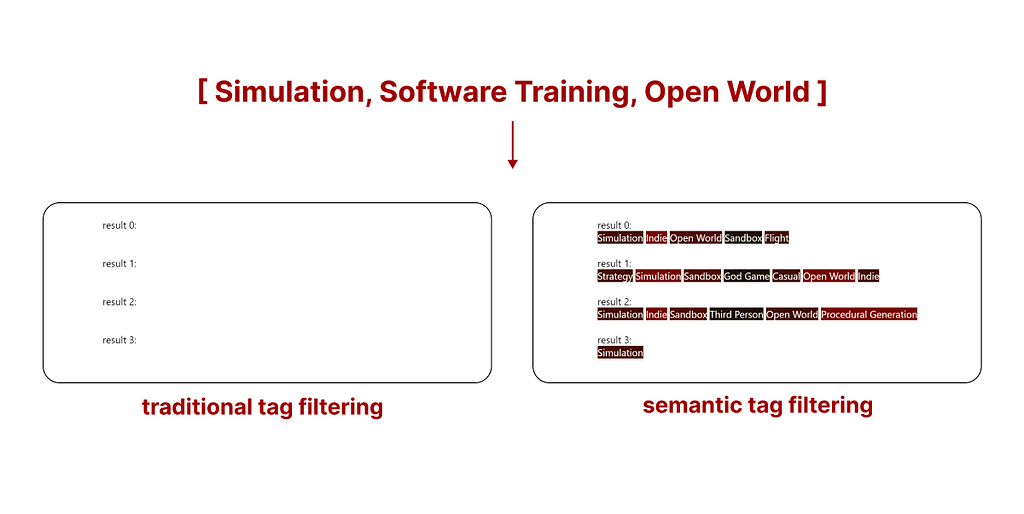

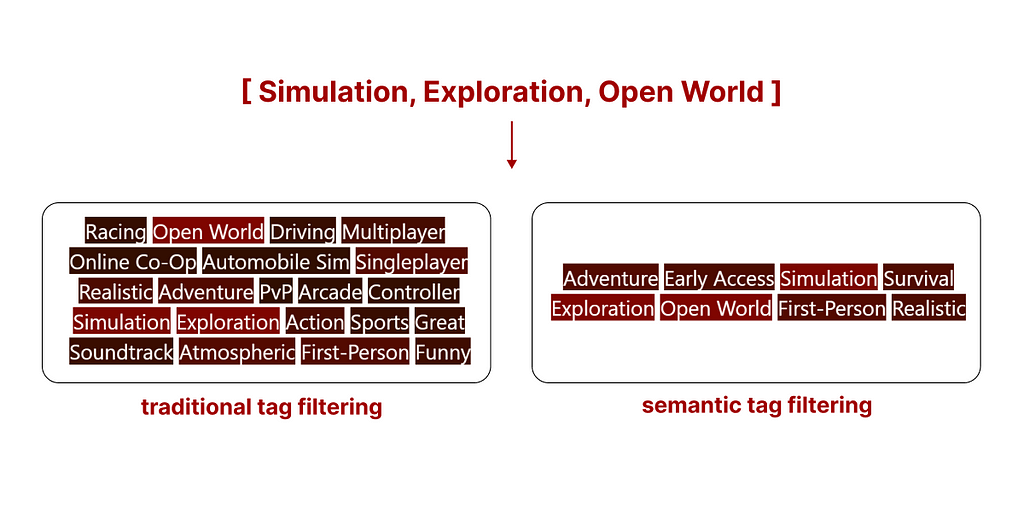

Lastly, the method of memory retrieval from a knowledge store plays a large role in the system’s effectiveness. Moving beyond simple nearest-neighbor search, the TeachableAgent’s default, to more advanced techniques could dramatically improve the relevance and accuracy of retrieved information. Candidates include hybrid search (combining keyword and vector approaches), semantic search, and knowledge graphs.
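To make “hybrid search” concrete, here is a small sketch that blends a keyword score with a vector-similarity score. Both scorers are simplified stand-ins chosen so the example runs without dependencies. Term overlap takes the place of a ranking function such as BM25, and character-trigram counts take the place of a learned embedding model. The `alpha` weight and all function names are illustrative assumptions.

```python
import math
import re
from collections import Counter


def words(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_score(query: str, doc: str) -> float:
    """Fraction of query words that appear verbatim in the document (stand-in for BM25)."""
    q = set(words(query))
    return len(q & set(words(doc))) / len(q) if q else 0.0


def embed(text: str) -> Counter:
    """Toy 'embedding': character-trigram counts. Replace with a real embedding model."""
    padded = f"  {text.lower()} "
    return Counter(padded[i : i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_search(query: str, docs: list[str], alpha: float = 0.5, k: int = 3) -> list[str]:
    """Rank docs by alpha * vector similarity + (1 - alpha) * keyword overlap."""
    query_vec = embed(query)
    scored = [
        (alpha * cosine(query_vec, embed(doc)) + (1 - alpha) * keyword_score(query, doc), doc)
        for doc in docs
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


DOCS = [
    "Brake dynamometers: Test brake system performance and durability",
    "Gear cutting machines: Create precise gear teeth on transmission components",
    "Coil spring winding machines: Produce coil springs to exact specifications",
]
print(hybrid_search("brake dynamometer", DOCS, k=1))
```

Keyword matching rewards exact identifiers such as part or machine names. The vector score tolerates paraphrase and near-matches (here, “dynamometer” versus “dynamometers”), and `alpha` sets the balance between the two.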

Descriptive Code Implementation

To appropriately demonstrate how external memory can be valuable, I created a fictitious scenario for a car parts manufacturing plant. Follow the code below to implement a Teachable Agent yourself.

Scenario: A car parts manufacturing facility needs a plan in case of energy constraints. The plan needs to be flexible, adapting to how much power the facility can draw and which parts and models are in demand.

Step 1:

Before you begin, install Autogen (if it isn’t already installed in your active environment) and create a config JSON file.

Example of a compatible config file that uses Azure OpenAI’s GPT-4o model (the code in Step 2 expects it to be saved as autogenconfig.json):

```json
[{
    "model": "gpt-4o",
    "api_key": "<YOUR API KEY>",
    "azure_endpoint": "<YOUR ENDPOINT>",
    "api_type": "azure",
    "api_version": "2024-06-01"
}]
```

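Install Autogen for Python. The `teachable` extra pulls in the ChromaDB vector database that the Teachability capability relies on:

```shell
pip install "pyautogen[teachable]"
```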
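To keep the API key out of notebooks and source files, you could generate the config file shown above from environment variables. This is a minimal sketch. `AZURE_OPENAI_API_KEY` and `AZURE_OPENAI_ENDPOINT` are assumed variable names, not something Autogen requires, and `write_autogen_config` is a hypothetical helper.

```python
import json
import os
from pathlib import Path


def write_autogen_config(path: str = "autogenconfig.json") -> Path:
    """Write a one-model Azure OpenAI config list, reading secrets from environment variables."""
    config = [
        {
            "model": "gpt-4o",
            "api_key": os.environ["AZURE_OPENAI_API_KEY"],  # assumed variable name
            "azure_endpoint": os.environ["AZURE_OPENAI_ENDPOINT"],  # assumed variable name
            "api_type": "azure",
            "api_version": "2024-06-01",
        }
    ]
    out = Path(path)
    out.write_text(json.dumps(config, indent=2))
    return out
```

Call `write_autogen_config()` once in the directory where your notebook runs. Step 2 then loads the file by name. Keep the generated file out of version control.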

Step 2:

Import the necessary libraries to your notebook or file and load the config file.

```python
import autogen
from autogen import ConversableAgent, UserProxyAgent
from autogen.agentchat.contrib.capabilities.teachability import Teachability

config_list = autogen.config_list_from_json(
    env_or_file="autogenconfig.json",  # the JSON file that stores the config
    file_location=".",  # the file is in the same directory
    filter_dict={
        "model": ["gpt-4o"],  # select a subset of the models in your config
    },
)
```

Step 3:

Create the agents. Autogen’s framework requires two of them. A UserProxyAgent executes tasks and interacts with, or stands in for, the human (depending on how much human-in-the-loop involvement you want). A ConversableAgent serves as the “Teachable Agent”, which is designed to interact with other agents rather than directly with the user. You can read more about UserProxyAgents and ConversableAgents here.

```python
teachable_agent = ConversableAgent(
    name="teachable_agent",  # the name can't contain spaces
    # Caching is disabled here; when enabled, API responses are cached and reused for identical requests.
    llm_config={"config_list": config_list, "timeout": 120, "cache_seed": None},
)

user = UserProxyAgent(
    name="user",
    # ALWAYS gives you full control over what is executed; the other options are NEVER and TERMINATE.
    human_input_mode="ALWAYS",
    # A termination check is VERY important because it tells the agent when to finish.
    # A message's content can be None, so fall back to an empty string before searching it.
    is_termination_msg=lambda msg: "TERMINATE" in (msg.get("content") or ""),
    max_consecutive_auto_reply=0,  # this agent doesn't need to reply multiple times in a row
    # If you plan on running code, consider use_docker=True: it is safer than running code directly,
    # but it requires a Docker setup, which this example skips.
    code_execution_config={"use_docker": False},
)
```

Step 4:

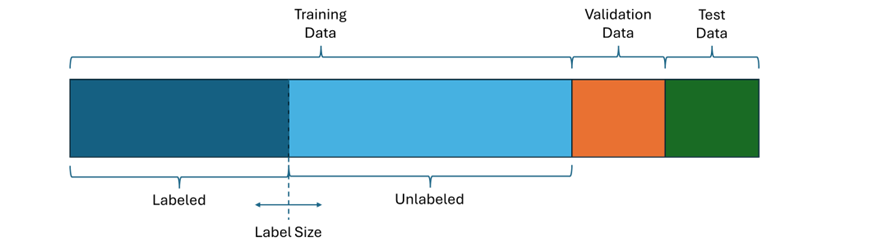

To establish a baseline, let’s ask the agent the same questions before and after we “teach” it. I deliberately chose questions of different difficulty to compare performance on tasks that require single-step versus multi-step memory retrieval. To answer the multi-step question successfully, the retrieval mechanism must supply information from two separate memories as context to the language model that generates the final response.

Simple Question: “The facility is experiencing a power shortage of 40%. What models need to be prioritized?”

Complex Multi-Step Question: “The facility is experiencing a power shortage of 40%. Provide me a detailed breakdown of what machines should be deactivated and which machines should remain active.”

As expected, the responses are generic and not particularly applicable to any individual business.

Responses prior to enabling long-term memory:

SIMPLE QUESTION RESPONSE

===========================

>>>>>>>> USING AUTO REPLY...

teachable_agent (to user):

To address a power shortage of 30% effectively, it's crucial to prioritize models that are essential to critical operations, safety, and those that have the highest impact on productivity. Here are steps to determine which models to prioritize:

1. **Identify Essential Systems:** Prioritize systems that are vital for safety, security, and essential operations. These might include:

- Emergency lighting

- Security systems (alarms, surveillance)

- Critical environmental controls (e.g., refrigeration for hazardous materials)

- Communication systems

2. **Evaluate Impact on Core Operations:** Determine which models are crucial to the facility’s core functions. This can include machinery, servers, or any processes that are indispensable for maintaining productivity and operations.

3. **Energy Efficiency:** Prioritize models and equipment that are more energy-efficient and can operate within the reduced power capacity.

4. **Downtime Cost:** Consider the cost of downtime for each model. Prioritize those where downtime would result in significant financial losses or production delays.

Based on these principles, here’s a generic prioritization framework:

1. **Safety and Security Systems:**

- Emergency Lighting

- Fire Alarms and Sprinkler Systems

- Security Cameras and Sensors

2. **Critical Operational Systems:**

- Essential Production Machinery (that cannot be shut down without major issues)

- Servers and IT Infrastructure (especially those critical for operations and data integrity)

- HVAC Systems (if necessary for maintaining safe operating conditions)

3. **Support Systems:**

- Backup Power Systems (uninterruptible power supplies, generators)

- Communication Systems (both internal and external communication)

Ensure that non-essential and high energy-consuming equipment is powered down or scheduled to operate during off-peak hours if possible.

Always re-evaluate periodically to ensure that the power allocation aligns with the dynamic needs of the facility. Communication with key stakeholders regarding priorities and decisions is also essential for smooth operations during a power shortage.

COMPLEX MULTISTEP QUESTION RESPONSE

====================================

>>>>>>>> USING AUTO REPLY...

teachable_agent (to user):

To effectively manage a facility experiencing a 30% power shortage, we need to prioritize essential operations and consider the criticality and energy consumption of each machine. Here is a detailed breakdown:

### Step 1: Identify Essential Operations

1. **Critical Infrastructure**: Safety systems, security systems, and essential IT infrastructure.

2. **Production Lines**: Machines involved in the primary production process.

3. **Support Systems**: HVAC systems, lighting, and other ancillary equipment necessary to maintain a safe working environment.

### Step 2: Categorize Machines by Importance and Energy Consumption

#### A. Essential and High-Energy Machines

1. **Safety and Security Systems**

- Fire alarms and sprinklers

- Surveillance cameras

- Security access controls

2. **Primary Production Machines**

- Core production lines

- Central processing units or mainframe servers (for IT-heavy operations)

3. **Critical Support Systems**

- Emergency lighting

- Essential HVAC systems (for maintaining operation-critical temperature and humidity)

#### B. Non-Essential and High-Energy Machines

1. **Secondary Production Lines**

- Non-primary or non-critical production lines

- Equipment used for non-essential or ancillary processes

2. **Supplementary IT Infrastructure**

- Servers used for non-essential operations

- Backup systems that are not in immediate use

3. **Non-Essential HVAC and Lighting**

- HVAC systems for non-critical areas

- Lighting in non-essential zones

#### C. Non-Essential and Low-Energy Machines

1. **Office Equipment**

- Computers, printers, and copiers in non-essential offices

2. **Non-essential Lab or Research Equipment**

- Devices not directly linked to current essential tasks

### Step 3: Prioritize Deactivation

#### Immediate Deactivation

1. **Non-Essential and High-Energy Machines**

- Secondary production lines

- Non-essential backup servers

2. **Non-Essential HVAC and Lighting**

- HVAC in non-critical areas

- Non-essential lighting

3. **Non-Essential Office Equipment**

- Printers, non-essential computers, and copiers

#### Limited Usage

1. **Essential and High-Energy Machines**

- Operate critical production lines at reduced capacity if possible

- Implement rotational operation schedules for essential processes

2. **Critical Support Systems**

- Optimize HVAC settings (e.g., raising or lowering set points within acceptable ranges)

- Reduce lighting to minimum safety standards

### Step 4: Implement Energy Conservation Measures

1. **Optimize Operations**

- Utilize energy-efficient settings

- Implement energy-saving protocols and encourage staff to conserve energy

2. **Scheduled Downtime**

- Plan for downtime during off-peak hours for essential machinery to balance the load

3. **Regular Monitoring**

- Monitor energy consumption in real-time to make quick adjustments as needed

### Summary

#### Remain Active:

1. Safety and security systems

2. Main production lines

3. Critical IT infrastructure

4. Essential HVAC and lighting for operational areas

#### Deactivate or Limit Use:

1. Non-essential production lines

2. Redundant IT systems

3. Non-critical HVAC and lighting

4. Office equipment in non-essential areas

**Note**: These recommendations should be adjusted based on the specific operational requirements of your facility. Additionally, it could be beneficial to communicate these changes to all staff to ensure compliance and understanding.

Code to ask baseline questions:

```python
# simple question
user.initiate_chat(teachable_agent, message="The facility is experiencing a power shortage of 40%. What models need to be prioritized?", clear_history=True)

# multi-step complex question
user.initiate_chat(teachable_agent, message="The facility is experiencing a power shortage of 40%. Provide me a detailed breakdown of what machines should be deactivated and which machines should remain active.", clear_history=True)
```

Step 5:

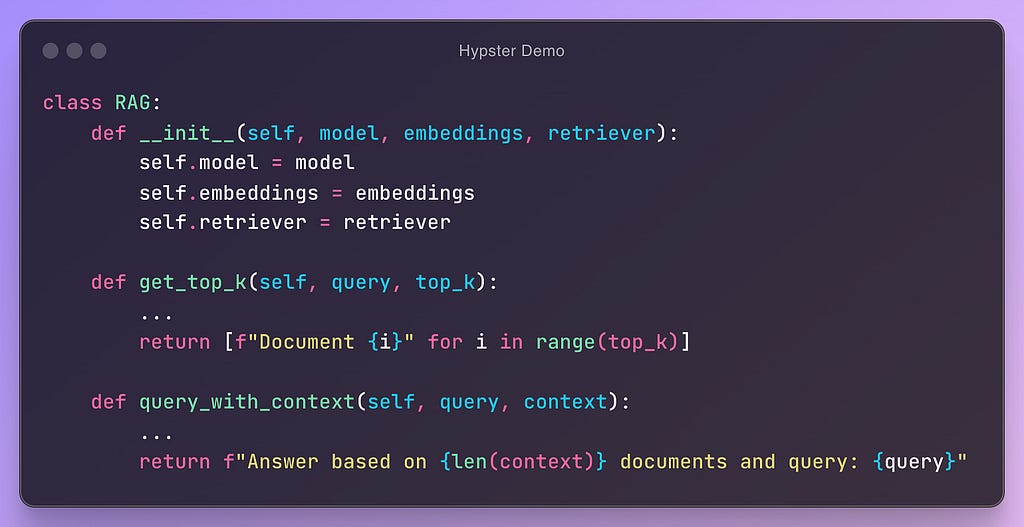

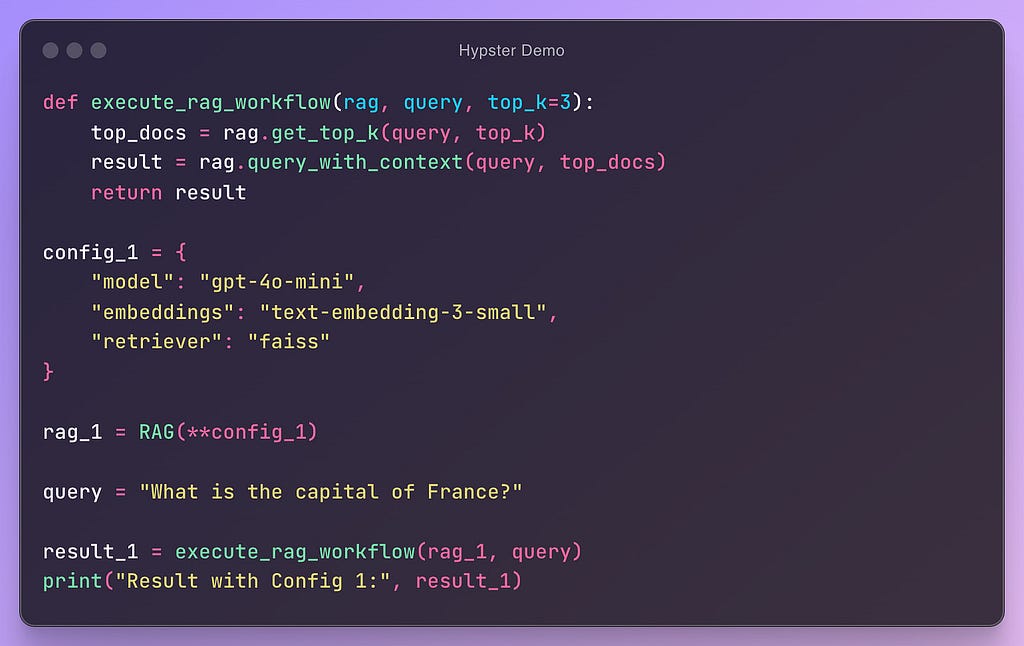

Create the “teachability” capability and add it to the agent. The Teachability class inherits from the AgentCapability class, which lets you add customizable capabilities to agents.

The Teachability class has many optional parameters that can be further explored here.

The out-of-the-box Teachability class is a quick and convenient way to add long-term memory to agents. As outlined in the key findings section, however, it will likely need customization for production use. It works by sending messages to an Analyzer Agent that evaluates the user’s messages for potential storage and retrieval. The Analyzer Agent looks for advice that could apply to similar tasks in the future. It then summarizes that advice and stores task-advice pairs in a local vector database (ChromaDB) that serves as the agent’s “memory”.

```python
teachability = Teachability(
    verbosity=0,  # 0 for basic info, 1 to add memory operations, 2 for analyzer messages, 3 for memo lists
    reset_db=True,  # start with an empty memory store for this new agent; set to False to reuse existing memories
    path_to_db_dir="./tmp/notebook/teachability_db",  # where the memory store is persisted; any path works
    recall_threshold=1.5,  # higher numbers allow more (but less relevant) memos to be recalled
    max_num_retrievals=10,  # 10 is the default; set the maximum number of memos to retrieve lower or higher
)

teachability.add_to_agent(teachable_agent)
```
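A toy model makes the interplay of `recall_threshold` and `max_num_retrievals` easier to see. The sketch below assumes, in simplified form, that the memo store fetches up to `max_num_retrievals` nearest memos and then discards any whose embedding distance is not below `recall_threshold`. The `recall` function and the distance values are hypothetical. They are not Teachability internals or measurements from this experiment.

```python
def recall(
    distances: dict[str, float],
    recall_threshold: float = 1.5,
    max_num_retrievals: int = 10,
) -> list[str]:
    """Simplified model: take the nearest memos, capped at max_num_retrievals,
    then keep only those whose distance falls below recall_threshold."""
    nearest = sorted(distances, key=distances.get)[:max_num_retrievals]
    return [memo for memo in nearest if distances[memo] < recall_threshold]


# Hypothetical distances between the 40% question and four stored memos.
distances = {
    "25-50% guideline": 0.6,
    "0-25% guideline": 0.7,
    "engine equipment list": 1.2,
    "body panel equipment list": 1.7,
}
print(recall(distances))                        # both guidelines and the engine list
print(recall(distances, recall_threshold=1.0))  # only the two guidelines
print(recall(distances, max_num_retrievals=1))  # only the closest guideline
```

Guidelines for adjacent power brackets can sit at similar distances from the same question. A generous threshold can therefore hand both to the model at once, which is one plausible route to the merged-guideline answer seen in Step 7.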

Step 6:

Now that the teachable_agent is configured, we need to provide it with the information we want it to “learn” (store in the database and later retrieve).

In line with our scenario, I wanted the agent to have a basic understanding of the facility, consisting of:

- the types of components the manufacturing plant produces

- the types of car models the components need to be made for

- which machines are used to make each component

Additionally, I wanted to provide some operational guidance on the facility’s priorities depending on how power-constrained it is. This includes:

- Guidance in case of energy capacity constraint of more than 50%

- Guidance in case of energy capacity constraint between 25–50%

- Guidance in case of energy capacity constraint between 0–25%

```python
business_info = """
# This manufacturing plant manufactures the following vehicle parts:
- Body panels (doors, hoods, fenders, etc.)
- Engine components (pistons, crankshafts, camshafts)
- Transmission parts
- Suspension components (springs, shock absorbers)
- Brake system parts (rotors, calipers, pads)

# This manufacturing plant produces parts for the following models:
- Ford F-150
- Ford Focus
- Ford Explorer
- Ford Mustang
- Ford Escape
- Ford Edge
- Ford Ranger

# Equipment for Specific Automotive Parts and Their Uses

## 1. Body Panels (doors, hoods, fenders, etc.)
- Stamping presses: Form sheet metal into body panel shapes
- Die sets: Used with stamping presses to create specific panel shapes
- Hydraulic presses: Shape and form metal panels with high pressure
- Robotic welding systems: Automate welding of body panels and structures
- Laser cutting machines: Precisely cut sheet metal for panels
- Sheet metal forming machines: Shape flat sheets into curved or complex forms
- Hemming machines: Fold and crimp edges of panels for strength and safety
- Metal finishing equipment (grinders, sanders): Smooth surfaces and remove imperfections
- Paint booths and spraying systems: Apply paint and protective coatings
- Drying ovens: Cure paint and coatings
- Quality control inspection systems: Check for defects and ensure dimensional accuracy

## 2. Engine Components (pistons, crankshafts, camshafts)
- CNC machining centers: Mill and drill complex engine parts
- CNC lathes: Create cylindrical parts like pistons and camshafts
- Boring machines: Enlarge and finish cylindrical holes in engine blocks
- Honing machines: Create a fine surface finish on cylinder bores
- Grinding machines: Achieve precise dimensions and smooth surfaces
- EDM equipment: Create complex shapes in hardened materials
- Forging presses: Shape metal for crankshafts and connecting rods
- Die casting machines: Produce engine blocks and cylinder heads
- Heat treatment furnaces: Alter material properties for strength and durability
- Quenching systems: Rapidly cool parts after heat treatment
- Balancing machines: Ensure rotating parts are perfectly balanced
- Coordinate Measuring Machines (CMMs): Verify dimensional accuracy

## 3. Transmission Parts
- Gear cutting machines: Create precise gear teeth on transmission components
- CNC machining centers: Mill and drill complex transmission housings and parts
- CNC lathes: Produce shafts and other cylindrical components
- Broaching machines: Create internal splines and keyways
- Heat treatment equipment: Harden gears and other components
- Precision grinding machines: Achieve extremely tight tolerances on gear surfaces
- Honing machines: Finish internal bores in transmission housings
- Gear measurement systems: Verify gear geometry and quality
- Assembly lines with robotic systems: Put together transmission components
- Test benches: Evaluate completed transmissions for performance and quality

## 4. Suspension Components (springs, shock absorbers)
- Coil spring winding machines: Produce coil springs to exact specifications
- Leaf spring forming equipment: Shape and form leaf springs
- Heat treatment furnaces: Strengthen springs and other components
- Shot peening equipment: Increase fatigue strength of springs
- CNC machining centers: Create precision parts for shock absorbers
- Hydraulic cylinder assembly equipment: Assemble shock absorber components
- Gas charging stations: Fill shock absorbers with pressurized gas
- Spring testing machines: Verify spring rates and performance
- Durability test rigs: Simulate real-world conditions to test longevity

## 5. Brake System Parts (rotors, calipers, pads)
- High-precision CNC lathes: Machine brake rotors to exact specifications
- Grinding machines: Finish rotor surfaces for smoothness
- Die casting machines: Produce caliper bodies
- CNC machining centers: Mill and drill calipers for precise fit
- Precision boring machines: Create accurate cylinder bores in calipers
- Hydraulic press: Compress and form brake pad materials
- Powder coating systems: Apply protective finishes to calipers
- Assembly lines with robotic systems: Put together brake components
- Brake dynamometers: Test brake system performance and durability
"""

business_rules_over50 = """
- The engine components are critical and machinery should be kept online that corresponds to producing these components when capacity constraint is more or equal to 50%: engine components
- Components for the following models should be prioritized when capacity constraint is more or equal to 50%: 1.Ford F-150
"""

business_rules_25to50 = """
- The following components are critical and machinery should be kept online that corresponds to producing these components when capacity constraint is between 25-50%: engine components and transmission parts
- Components for the following models should be prioritized when capacity constraint is between 25-50%: 1.Ford F-150 2.Ford Explorer
"""

business_rules_0to25 = """
- The following components are critical and machinery should be kept online that corresponds to producing these components when capacity constraint is between 0-25%: engine components, transmission parts, Brake System Parts
- Components for the following models should be prioritized when capacity constraint is between 0-25%: 1.Ford F-150 2.Ford Explorer 3.Ford Mustang 4.Ford Focus
"""

# Teach the agent: each message is sent in a fresh chat (clear_history=True),
# and the Teachability capability's analyzer decides what to store as memos.
user.initiate_chat(teachable_agent, message=business_info, clear_history=True)
user.initiate_chat(teachable_agent, message=business_rules_over50, clear_history=True)
user.initiate_chat(teachable_agent, message=business_rules_25to50, clear_history=True)
user.initiate_chat(teachable_agent, message=business_rules_0to25, clear_history=True)
```
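In the calls above, `business_info` is taught as one long message, and the Analyzer Agent decides how to condense it into memos. One adjustment worth trying is to split the equipment lists into one message per `## ` section, so that each component’s machines are more likely to land in their own memo. This is an assumption, not a result from this experiment. `split_sections` is a hypothetical helper written for this illustration.

```python
def split_sections(text: str, marker: str = "## ") -> list[str]:
    """Split text into chunks that each start at a line beginning with `marker`.

    Any text before the first marker (here, the parts and models lists) becomes its own chunk.
    """
    chunks: list[str] = []
    current: list[str] = []
    for line in text.splitlines():
        if line.startswith(marker) and current:
            chunks.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        chunks.append("\n".join(current).strip())
    return [chunk for chunk in chunks if chunk]


sample = "# Parts\n- Engine components\n## 1. Body Panels\n- Stamping presses\n## 2. Engine Components\n- CNC lathes"
for chunk in split_sections(sample):
    print(repr(chunk))
```

With the real data, you would teach each chunk the same way as before, for example `for chunk in split_sections(business_info): user.initiate_chat(teachable_agent, message=chunk, clear_history=True)`.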

Step 7:

Now that we have added important information to long-term memory, let’s ask the agent the same questions and compare its responses.

Simple Question: “The facility is experiencing a power shortage of 40%. What models need to be prioritized?”

Complex Multi-Step Question: “The facility is experiencing a power shortage of 40%. Provide me a detailed breakdown of what machines should be deactivated and which machines should remain active.”

Results after implementing long-term memory:

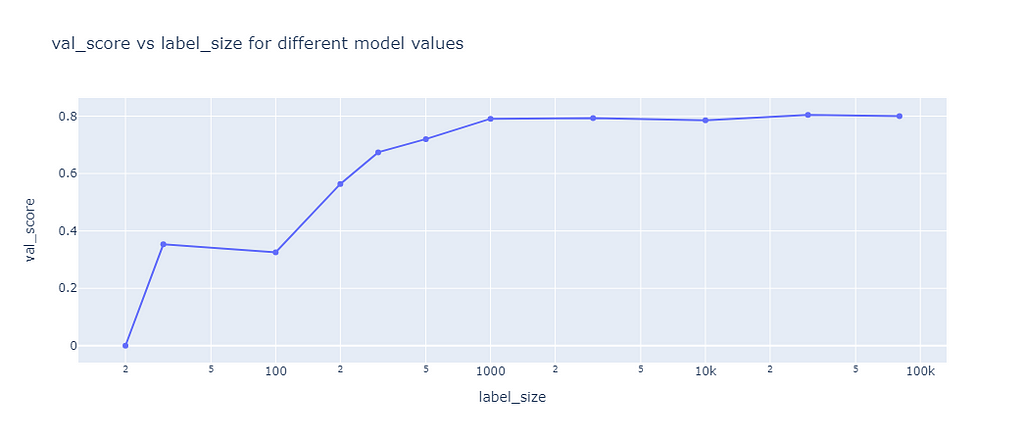

The Teachable Agent was able to partially answer the simple question. It correctly identified the car models to prioritize (Ford F-150 and Ford Explorer), but it added incorrect information about which components to include. It listed brake system parts, which the stored rules mark as critical only under a 0–25% constraint.

The complex multi-step question was not adequately addressed. The agent erroneously merged two distinct business guidelines, those for 0–25% and 25–50% capacity constraints, into a single generalized guideline, resulting in an inaccurate response. It also provided only a partial list of the machines that should remain active or be deactivated for each component’s production, omitting crucial details from the complete operational protocol. Several of the machine names it listed, such as “Engine Block Milling Machine”, do not appear in the equipment list it was taught. This demonstrates the challenge of accurately retrieving and synthesizing information from multiple, separate memories for complex queries.
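Because the business rules are explicit, the correct answer to the 40% questions can be computed deterministically, which gives a handy reference when evaluating the agent. The sketch below encodes the three rules from Step 6 as data. `RULES`, `ALL_COMPONENTS`, and `expected_plan` are hypothetical names. The sketch works at the component level; machine-level lists would follow by looking up each component’s section of `business_info`. It assumes the shortage percentage maps directly onto the rules’ capacity-constraint brackets, and that a boundary value of exactly 25% belongs to the 25–50% bracket.

```python
# The three business rules taught in Step 6, encoded as data, highest bracket first.
# Each entry: (minimum shortage %, critical components, prioritized models).
RULES = [
    (50, ["engine components"], ["Ford F-150"]),
    (25, ["engine components", "transmission parts"], ["Ford F-150", "Ford Explorer"]),
    (0, ["engine components", "transmission parts", "brake system parts"],
     ["Ford F-150", "Ford Explorer", "Ford Mustang", "Ford Focus"]),
]

ALL_COMPONENTS = [
    "body panels",
    "engine components",
    "transmission parts",
    "suspension components",
    "brake system parts",
]


def expected_plan(shortage_pct: float) -> dict[str, list[str]]:
    """Components to keep online or deactivate, and models to prioritize, for a given shortage."""
    for minimum, critical, models in RULES:
        if shortage_pct >= minimum:  # first (highest) bracket whose lower bound is met
            return {
                "keep_online": list(critical),
                "deactivate": [c for c in ALL_COMPONENTS if c not in critical],
                "prioritize_models": list(models),
            }
    raise ValueError("shortage_pct must be >= 0")


print(expected_plan(40))
```

For a 40% shortage, this yields engine components and transmission parts for the Ford F-150 and Ford Explorer. Brake system parts appear only in the 0–25% rule, which is the rule the agent blended into its answers.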

SIMPLE QUESTION RESPONSE

===========================

>>>>>>>> USING AUTO REPLY...

teachable_agent (to user):

Given the facility is experiencing a power shortage of 40%, this falls within the 25-50% capacity constraint range. According to your previously established priorities for such a scenario, the following models and components need to be prioritized:

### Components to Prioritize

- Engine components

- Transmission parts

- Brake System Parts

### Models to Prioritize

1. Ford F-150

2. Ford Explorer

You should ensure that the machinery necessary to produce these critical components for the Ford F-150 and Ford Explorer is kept online.

COMPLEX MULTISTEP QUESTION RESPONSE

====================================

>>>>>>>> USING AUTO REPLY...

teachable_agent (to user):

In response to the 40% power shortage, let's create a detailed plan to ensure the most critical machinery remains operational while deactivating less essential ones.

### Critical Prioritization (0-50% capacity constraint):

- **Critical Components**:

- Engine components (pistons, crankshafts, camshafts)

- Transmission parts

- Brake System Parts (rotors, calipers, pads)

- **Prioritized Models**:

- Ford F-150

- Ford Explorer

### Machines to Remain Active:

1. **Engine Component Machines**:

- Engine Block Milling Machine

- Crankshaft Grinding Machine

- Camshaft Milling Machine

2. **Transmission Part Machines**:

- Transmission Housing Caster

- Gear Cutting Machine

- Transmission Assembly Line

3. **Brake System Part Machines**:

- Brake Rotor Casting Machine

- Brake Caliper Milling Machine

- Brake Pad Press

### Machines to Deactivate:

1. **Body Panel Machines**:

- Door Stamping Machine

- Hood Assembly Machine

- Fender Bending Machine

2. **Suspension Component Machines**:

- Coil Spring Forming Machine

- Shock Absorber Assembly Line

### Detailed Breakdown:

#### Active Machines:

- **Engine Block Milling Machine**:

- Produces critical engine blocks, essential for Ford F-150 and Ford Explorer.

- **Crankshaft Grinding Machine**:

- Ensures high precision crankshafts; crucial for the performance of Ford F-150 and Ford Explorer.

- **Camshaft Milling Machine**:

- Produces camshafts, necessary for Ford F-150 and Ford Explorer engines.

- **Transmission Housing Caster**:

- Important for casting the housing of transmissions used in Ford F-150 and Ford Explorer.

- **Gear Cutting Machine**:

- Precision gears production, vital for Ford F-150 and Ford Explorer transmissions.

- **Transmission Assembly Line**:

- Assembly of complete transmission units for Ford F-150 and Ford Explorer.

- **Brake Rotor Casting Machine**:

- Produces brake rotors, crucial for the safe braking systems in Ford F-150 and Ford Explorer.

- **Brake Caliper Milling Machine**:

- Machining of brake calipers, essential for Ford F-150 and Ford Explorer.

- **Brake Pad Press**:

- Manufacturing of brake pads, necessary for ensuring braking efficiency in Ford F-150 and Ford Explorer.

#### Deactivated Machines:

- **Door Stamping Machine**:

- While important, body panels like doors are less critical than engine and transmission components under current constraints.

- **Hood Assembly Machine**:

- Deactivating hood assembly to free up power for critical components manufacturing.

- **Fender Bending Machine**:

- Non-critical compared to engine and brake components during a significant power shortage.

- **Coil Spring Forming Machine**:

- Suspension springs are lower priorities under current circumstances.

- **Shock Absorber Assembly Line**:

- Suspension components aren’t critical enough to keep operational during this level of power shortage.

This plan ensures that production focuses on maintaining the critical components needed for primary models, the Ford F-150 and Ford Explorer, thereby maximizing the utility of the remaining power while managing production constraints effectively.

Code:

```python
# simple question
user.initiate_chat(teachable_agent, message="The facility is experiencing a power shortage of 40%. What models need to be prioritized?", clear_history=True)

# multi-step complex question
user.initiate_chat(teachable_agent, message="The facility is experiencing a power shortage of 40%. Provide me a detailed breakdown of what machines should be deactivated and which machines should remain active.", clear_history=True)
```

Conclusion

While Autogen provides a straightforward introduction to AI systems with memory, it falls short in handling complex tasks effectively.

When developing your own AI agent system with memory, consider focusing on these key capabilities:

- Implement multi-step searches to ensure comprehensive and relevant results. This allows the agent to assess the usefulness of search outcomes and address all aspects of a query using the retrieved information. Additionally, consider using more advanced retrieval approaches such as semantic search, hybrid search, or knowledge graphs for the best results.

- To limit the potential for data poisoning, develop a thoughtful approach to who should be able to “teach” the agent and when the agent should “learn”. Based on guidelines set by the business or developer, one can also use agent reasoning to determine whether something should be added to memory and by whom.

- Reduce the likelihood of retrieving out-of-date information by adding a memory decay mechanism that determines when a memory is no longer relevant or should be replaced by a newer one.

- For multi-agent systems involving group chats or inter-agent information sharing, explore various communication patterns. Determine the most effective methods for transferring supplemental knowledge and establish limits to prevent information overload.
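A memory decay mechanism can be as simple as two rules. First, a newer memo on the same topic supersedes an older one. Second, memos lose weight with age until they drop out. The sketch below assumes each memo carries a topic label and a creation day. `Memo`, `decayed_weight`, `active_memos`, the 30-day half-life, and the 0.25 cutoff are all hypothetical choices for illustration, not features of Autogen.

```python
from dataclasses import dataclass


@dataclass
class Memo:
    topic: str          # what the memo is about, e.g. one power bracket's guideline
    text: str
    created_day: float  # day on which the memo was stored


def decayed_weight(memo: Memo, today: float, half_life_days: float = 30.0) -> float:
    """Exponential decay: a memo's weight halves every half_life_days."""
    age = max(0.0, today - memo.created_day)
    return 0.5 ** (age / half_life_days)


def active_memos(memos: list[Memo], today: float, half_life_days: float = 30.0,
                 min_weight: float = 0.25) -> list[Memo]:
    """Keep only the newest memo per topic, then drop memos that have decayed below min_weight."""
    newest: dict[str, Memo] = {}
    for memo in memos:
        if memo.topic not in newest or memo.created_day > newest[memo.topic].created_day:
            newest[memo.topic] = memo
    return [m for m in newest.values() if decayed_weight(m, today, half_life_days) >= min_weight]


memos = [
    Memo("25-50% guideline", "original rule", created_day=0),
    Memo("25-50% guideline", "revised rule", created_day=50),
    Memo("shift schedule", "old schedule note", created_day=0),
]
print([m.text for m in active_memos(memos, today=90)])
```

On day 90, the revised rule replaces the original one. The 90-day-old schedule note has decayed to a weight of 0.125, below the cutoff, so it is no longer retrieved.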

Note: The opinions expressed both in this article and paper are solely those of the authors and do not necessarily reflect the views or policies of their respective employers.

Still have questions, or think something needs further clarification? Drop me a DM on LinkedIn! I’m always eager to engage in food for thought and iterate on my work.

Key Insights for Teaching AI Agents to Remember was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
