Agentic AI, small data, and the search for value in the age of the unstructured data stack.

According to industry experts, 2024 was destined to be a banner year for generative AI. Operational use cases were rising to the surface, technology was reducing barriers to entry, and artificial general intelligence was obviously right around the corner.

So… did any of that happen?

Well, sort of. Here at the end of 2024, some of those predictions have come out piping hot. The rest need a little more time in the oven (I’m looking at you, artificial general intelligence).

Here’s where leading futurist and investor Tomasz Tunguz thinks data and AI stand at the end of 2024, plus a few predictions of my own.

2025 data engineering trends incoming.

1. We’re living in a world without reason (Tomasz)

Just three years into our AI dystopia, we’re starting to see businesses create value in some of the areas we would expect — but not all of them. According to Tomasz, the current state of AI can be summed up in three categories.

1. Prediction: AI copilots that can complete a sentence, correct code errors, etc.

2. Search: tools that leverage a corpus of data to answer questions

3. Reasoning: a multi-step workflow that can complete complex tasks

While AI copilots and search have seen modest success (particularly the former) among enterprise orgs, reasoning models still appear to be lagging behind. And according to Tomasz, there’s an obvious reason for that.

Model accuracy.

As Tomasz explained, current models struggle to break down tasks into steps effectively unless they’ve seen a particular pattern many times before. And that’s just not the case for the bulk of the work these models could be asked to perform.

“Today…if a large model were asked to produce an FP&A chart, it could do it. But if there’s some meaningful difference — for instance, we move from software billing to usage based billing — it will get lost.”

So for now, it looks like it’s AI copilots and partially accurate search results for the win.

2. Process > Tooling (Barr)

A new tool is only as good as the process that supports it.

As the “modern data stack” has continued to evolve over the years, data teams have sometimes found themselves in a state of perpetual tire-kicking. They would focus too heavily on the what of their platform without giving adequate attention to the (arguably more important) how.

But as the enterprise landscape inches ever closer toward production-ready AI, figuring out how to operationalize all this new tooling is becoming all the more urgent.

Let’s consider the example of data quality for a moment. As the data feeding AI took center stage in 2024, data quality took a step into the spotlight as well. Facing the real possibility of production-ready AI, enterprise data leaders don’t have time to sample from the data quality menu — a few dbt tests here, a couple of point solutions there. They’re on the hook to deliver value now, and they need trusted solutions that they can onboard and deploy effectively today.

The reality is, you could have the most sophisticated data quality platform on the market — the most advanced automations, the best copilots, the shiniest integrations — but if you can’t get your organization up and running quickly, all you’ve really got is a line item on your budget and a new tab on your desktop.

Over the next 12 months, I expect data teams to lean into proven end-to-end solutions over patchwork toolkits in order to prioritize more critical challenges like data quality ownership, incident management, and long-term domain enablement.

And the solution that delivers on those priorities is the solution that will win the day in AI.

3. AI is driving ROI — but not revenue (Tomasz)

Like any data product, GenAI’s value comes in one of two forms: reducing costs or generating revenue.

On the revenue side, you might have something like AI SDRs, enrichment machines, or recommendations. According to Tomasz, these tools can generate a lot of sales pipeline… but it won’t be a healthy pipeline. So, if it’s not generating revenue, AI needs to be cutting costs — and in that regard, this budding technology has certainly found some footing.

“Not many companies are closing business from it. It’s mostly cost reduction. Klarna cut two-thirds of their head count. Microsoft and ServiceNow have seen 50–75% increases in engineering productivity.”

According to Tomasz, an AI use case presents an opportunity for cost reduction if one of three criteria is met:

- Repetitive jobs

- Challenging labor market

- Urgent hiring needs

One example Tomasz cited of an organization that is driving new revenue effectively was EvenUp — a transactional legal company that automates demand letters. Organizations like EvenUp that support templated but highly specialized services could be uniquely positioned to see an outsized impact from AI in its current form.

4. AI adoption is slower than expected — but leaders are biding their time (Tomasz)

In contrast to the tsunami of “AI strategies” that were being embraced a year ago, leaders today seem to have taken a unanimous step backward from the technology.

“There was a wave last year when people were trying all kinds of software just to see it. Their boards were asking about their AI strategy. But now there’s been a huge amount of churn in that early wave.”

While some organizations simply haven’t seen value from their early experiments, others have struggled with the rapid evolution of the underlying technology. According to Tomasz, this is one of the biggest challenges for investing in AI companies. It’s not that the technology isn’t valuable in theory — it’s that organizations haven’t figured out how to leverage it effectively in practice.

Tomasz believes that the next wave of adoption will be different from the first because leaders will be more informed about what they need — and where to find it.

Like the dress rehearsal before the big show, teams know what they’re looking for, they’ve worked out some of the kinks with legal and procurement — particularly data loss prevention — and they’re primed to act when the right opportunity presents itself.

The big challenge of tomorrow? “How can I find and sell the value faster?”

5. Small data is the future of AI (Tomasz)

The open source versus managed debate is a tale as old as… well, something old. But when it comes to AI, that question gets a whole lot more complicated.

At the enterprise level, it’s not simply a question of control or interoperability — though that can certainly play a part — it’s a question of operational cost.

While Tomasz believes that the largest B2C companies will use off-the-shelf models, he expects B2B companies to trend toward their own proprietary and open-source models instead.

“In B2B, you’ll see smaller models on the whole, and more open source on the whole. That’s because it’s much cheaper to run a small open source model.”

But it’s not all dollars and cents. Small models also improve performance. Like Google, large models are designed to serve a variety of use cases. Users can ask a large model about effectively anything, so that model needs to be trained on a large enough corpus of data to deliver a relevant response. Water polo. Chinese history. French toast.

Unfortunately, the more topics a model is trained on, the more likely it is to conflate multiple concepts — and the more erroneous the outputs will be over time.

“You can take something like Llama 3 with 8 billion parameters, fine-tune it with 10,000 support tickets and it will perform much better,” says Tomasz.
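Much of the work in that kind of fine-tuning is data preparation. As a rough illustration, here is a minimal sketch that turns resolved support tickets into prompt/completion pairs written as JSON Lines, a format many fine-tuning tools accept. The `Ticket` fields and the `prompt`/`completion` key names are hypothetical assumptions; the exact schema depends on the training framework you use.

```python
import io
import json
from dataclasses import dataclass
from typing import Iterable, List, TextIO


@dataclass
class Ticket:
    # Hypothetical fields; a real ticketing-system export will look different.
    subject: str
    body: str
    resolution: str


def to_training_records(tickets: Iterable[Ticket]) -> List[dict]:
    """Turn resolved tickets into prompt/completion pairs, skipping unresolved ones."""
    records = []
    for ticket in tickets:
        resolution = ticket.resolution.strip()
        if not resolution:
            continue  # an unresolved ticket has no answer for the model to learn from
        prompt = f"Subject: {ticket.subject}\n\n{ticket.body}".strip()
        records.append({"prompt": prompt, "completion": resolution})
    return records


def write_jsonl(records: Iterable[dict], out: TextIO) -> None:
    """Write one JSON object per line (the JSON Lines format)."""
    for record in records:
        out.write(json.dumps(record, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    tickets = [
        Ticket("Password reset", "I can't log in.", "Use Settings > Security to send a reset link."),
        Ticket("Double charge", "Why was I billed twice?", ""),  # unresolved, so skipped
    ]
    buffer = io.StringIO()
    write_jsonl(to_training_records(tickets), buffer)
    print(buffer.getvalue())
```

Filtering out unresolved tickets is a deliberate choice here: a small model only gets better on a narrow domain if the examples it sees actually contain good answers.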

What’s more, ChatGPT and other managed solutions are frequently being challenged in courts over claims that their creators didn’t have legal rights to the data those models were trained on.

And in many cases, that’s probably not wrong.

This, in addition to cost and performance, will likely have an impact on long-term adoption of proprietary models — particularly in highly regulated industries — but the severity of that impact remains uncertain.

Of course, proprietary models aren’t lying down either. Not if Sam Altman has anything to say about it. (And if Twitter has taught us anything, Sam Altman definitely has a lot to say.)

Proprietary models are already aggressively cutting prices to drive demand. Providers like OpenAI have already cut prices by roughly 50% and expect to cut them by another 50% in the next six months. That cost cutting could be a much-needed boon for the B2C companies hoping to compete in the AI arms race.

6. The lines are blurring for analysts and data engineers (Barr)

When it comes to scaling pipeline production, there are generally two challenges that data teams will run into: analysts who don’t have enough technical experience and data engineers who don’t have enough time.

Sounds like a problem for AI.

As we look to how data teams might evolve, there are two major developments that — I believe — could drive consolidation of engineering and analytical responsibilities in 2025:

- Increased demand — as business leaders’ appetite for data and AI products grows, data teams will be on the hook to do more with less. In an effort to minimize bottlenecks, leaders will naturally empower previously specialized teams to absorb more responsibility for their pipelines — and their stakeholders.

- Improvements in automation — new demand always drives new innovation. (In this case, that means AI-enabled pipelines.) As technologies naturally become more automated, engineers will be empowered to do more with less, while analysts will be empowered to do more on their own.

The argument is simple — as demand increases, pipeline automation will naturally evolve to meet demand. As pipeline automation evolves to meet demand, the barrier to creating and managing those pipelines will decrease. The skill gap will decrease and the ability to add new value will increase.

The move toward self-serve AI-enabled pipeline management means that the most painful part of everyone’s job gets automated away — and their ability to create and demonstrate new value expands in the process. Sounds like a nice future.

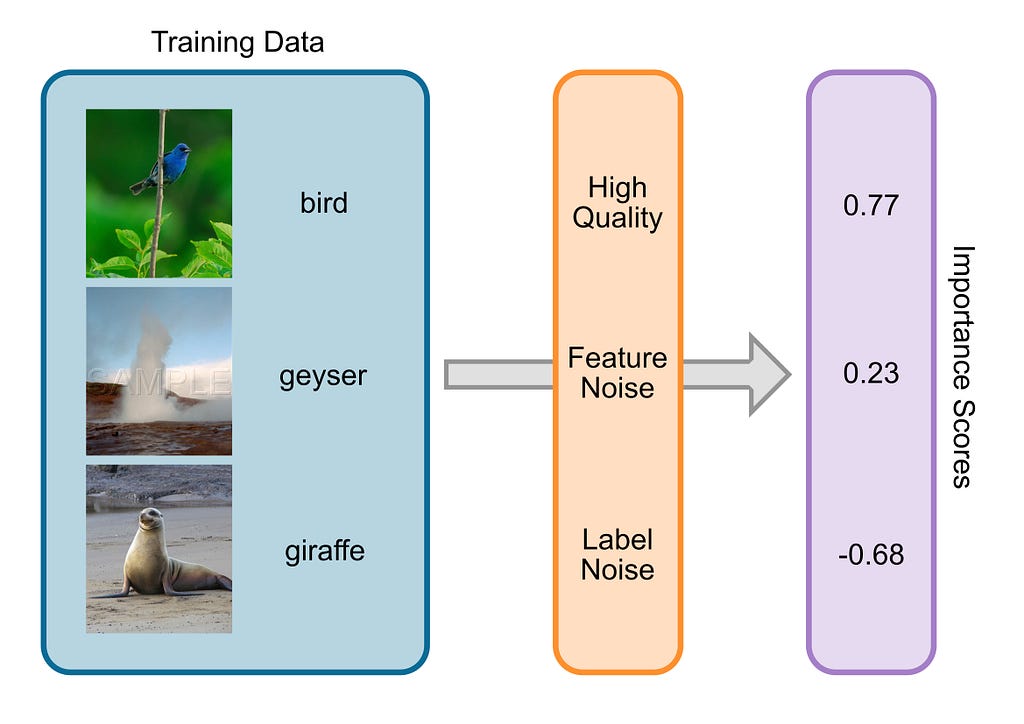

7. Synthetic data matters — but it comes at a cost (Tomasz)

You’ve probably seen the image of a snake eating its own tail. If you look closely, it bears a striking resemblance to contemporary AI.

There are approximately 21–25 trillion tokens (words) on the internet right now, and the AI models in production today have used all of them. For models to keep advancing, they need an ever-larger corpus of data to be trained on. The more data a model has, the more context it has available for its outputs, and the more accurate those outputs will be.

So, what does an AI researcher do when they run out of training data?

They make their own.

As training data becomes more scarce, companies like OpenAI believe that synthetic data will be an important part of how they train their models in the future. And over the last 24 months, an entire industry has evolved to service that very vision, including companies like Tonic, which generates synthetic structured data, and Gretel, which creates compliant data for regulated industries like finance and healthcare.

But is synthetic data a long-term solution? Probably not.

Synthetic data works by leveraging models to create artificial datasets that reflect what someone might find organically (in some alternate reality where more data actually exists), and then using that new data to train their own models. On a small scale, this actually makes a lot of sense. You know what they say about too much of a good thing…

You can think of it like contextual malnutrition. Just like food, if a fresh organic data source is the most nutritious data for model training, then data that’s been distilled from existing datasets must be, by its nature, less nutrient rich than the data that came before.

A little artificial flavoring is okay — but if that diet of synthetic training data continues into perpetuity without new grass-fed data being introduced, that model will eventually fail (or at the very least, have noticeably less attractive nail beds).

It’s not really a matter of if, but when.

According to Tomasz, we’re a long way off from model collapse at this point. But as AI research continues to push models to their functional limits, it’s not difficult to see a world where AI reaches its functional plateau, maybe sooner rather than later.
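A deliberately simplified toy model can show why a diet of purely synthetic data loses "nutrients." Suppose each generation fits a Gaussian by maximum likelihood to n samples drawn from the previous generation's fit, with no fresh data added. The maximum-likelihood variance estimate is biased low by a factor of (n − 1)/n, so in expectation the spread of the data shrinks geometrically with every generation. This is an illustrative assumption of ours, not a description of how any production LLM is trained.

```python
import random
import statistics
from typing import List


def expected_variance_ratio(n_samples: int, generations: int) -> float:
    """Expected sigma_k^2 / sigma_0^2 after k generations in this toy model.

    The maximum-likelihood variance estimate from n samples has expectation
    (n - 1) / n times the true variance, so the shrinkage compounds each generation.
    """
    if n_samples < 2:
        raise ValueError("need at least two samples per generation")
    return ((n_samples - 1) / n_samples) ** generations


def simulate(n_samples: int, generations: int, seed: int = 0) -> List[float]:
    """Fit a Gaussian, sample from the fit, refit on those samples, and repeat.

    Starts from N(0, 1) and returns the fitted variance at each generation.
    No fresh ("organic") data is ever mixed back in.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    variances = [sigma ** 2]
    for _ in range(generations):
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        mu = statistics.fmean(synthetic)
        sigma = statistics.pstdev(synthetic, mu)  # MLE (population) std dev
        variances.append(sigma ** 2)
    return variances


if __name__ == "__main__":
    print("expected variance after 50 generations:", expected_variance_ratio(20, 50))
    print("one simulated run:", simulate(20, 50)[-1])
```

Mixing some fresh samples from the original distribution back in at each generation is the toy equivalent of adding "grass-fed" data, and it is what keeps the variance from drifting toward zero.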

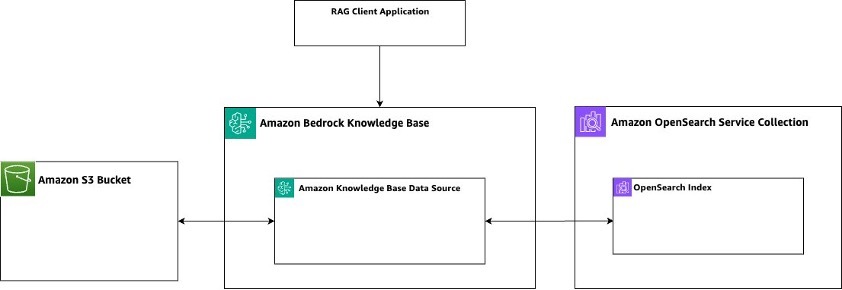

8. The unstructured data stack will emerge (Barr)

The idea of leveraging unstructured data in production isn’t new by any means — but in the age of AI, unstructured data has taken on a whole new role.

According to a report by IDC, only about half of an organization’s unstructured data is currently being analyzed.

All that is about to change.

When it comes to generative AI, enterprise success depends largely on the panoply of unstructured data that’s used to train, fine-tune, and augment it. As more organizations look to operationalize AI for enterprise use cases, enthusiasm for unstructured data — and the burgeoning “unstructured data stack” — will continue to grow as well.

Some teams are even exploring how they can use additional LLMs to add structure to unstructured data to scale its usefulness in additional training and analytics use cases as well.
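Here is a minimal sketch of that pattern, assuming a made-up extraction schema for support emails. The `call_model` argument is a hypothetical stand-in for whatever LLM client a team uses. The key idea is that the model's reply is parsed and validated against a fixed schema before it lands in a table, rather than trusted blindly.

```python
import json
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical schema for illustration: which fields to pull out of a support email.
EXTRACTION_PROMPT = (
    "Extract these fields from the email below and reply with JSON only:\n"
    '{{"customer": string, "product": string, '
    '"sentiment": "positive" | "neutral" | "negative"}}\n\n'
    "Email:\n{text}"
)

ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


@dataclass
class SupportRecord:
    customer: str
    product: str
    sentiment: str


def parse_record(raw: str) -> Optional[SupportRecord]:
    """Validate a model's JSON reply; return None instead of trusting bad output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    try:
        record = SupportRecord(
            customer=str(data["customer"]).strip(),
            product=str(data["product"]).strip(),
            sentiment=str(data["sentiment"]).strip().lower(),
        )
    except KeyError:
        return None  # a required field is missing
    if record.sentiment not in ALLOWED_SENTIMENTS:
        return None
    return record


def structure_text(text: str, call_model: Callable[[str], str]) -> Optional[SupportRecord]:
    """Send one document to an LLM (call_model is a hypothetical stand-in) and validate the reply."""
    return parse_record(call_model(EXTRACTION_PROMPT.format(text=text)))


if __name__ == "__main__":
    # A fake model so the sketch runs offline; swap in a real client in practice.
    def fake_model(prompt: str) -> str:
        return '{"customer": "Acme", "product": "Widgets", "sentiment": "Negative"}'

    print(structure_text("Acme here. The widgets arrived broken.", fake_model))
```

Rejected replies (returned as `None`) can be counted and routed for review, which gives a rough signal of how reliable the extraction step is before its output feeds training or analytics.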

Identifying what unstructured first-party data exists within your organization — and how you could potentially activate that data for your stakeholders — is a greenfield opportunity for data leaders looking to demonstrate the business value of their data platform (and hopefully secure some additional budget for priority initiatives along the way).

If 2024 was about exploring the potential of unstructured data — 2025 will be all about realizing its value. The question is… what tools will rise to the surface?

9. Agentic AI is great for conversation — but not deployment (Tomasz)

If you’re swimming anywhere near the venture capital ponds these days, you’re likely to hear a couple of terms tossed around pretty regularly: “copilot,” a fancy term for an AI used to complete a single step (“correct my terrible code”), and “agents,” multi-step workflows that can gather information and use it to perform a task (“write a blog about my terrible code and publish it to my WordPress”).

No doubt, we’ve seen a lot of success around AI copilots in 2024 (just ask GitHub, Snowflake, the Microsoft paperclip, etc.), but what about AI agents?

“Agentic AI” has had a fun time wreaking havoc on customer support teams, but it looks like that’s all it’s destined to do in the near term. These early AI agents are an important step forward, but the accuracy of their workflows is still poor.

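For context, 75–90% accuracy is state of the art for AI; most AI performs at roughly the level of a high school student. But errors compound: if you chain three steps that are each 75–90% accurate, your end-to-end accuracy drops to somewhere between roughly 42% and 73%, or about 50% if each step is 80% accurate.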

We’ve trained elephants to paint with better accuracy than that.
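The compounding is easy to check. If each step of an agent's workflow succeeds independently with probability p, the whole chain succeeds with the product of those probabilities. The independence assumption is a simplification of ours (real failures are often correlated), and `max_steps_above` is a hypothetical helper showing how quickly a workflow runs out of room as steps are added.

```python
import math
from typing import Sequence


def end_to_end_accuracy(step_accuracies: Sequence[float]) -> float:
    """Chance that every step of a workflow succeeds, assuming independent failures."""
    for p in step_accuracies:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"accuracy must be in [0, 1], got {p}")
    return math.prod(step_accuracies)


def max_steps_above(per_step: float, target: float) -> int:
    """Largest number of chained steps whose end-to-end accuracy stays >= target."""
    if not 0.0 < per_step < 1.0 or not 0.0 < target <= 1.0:
        raise ValueError("need 0 < per_step < 1 and 0 < target <= 1")
    steps, acc = 0, 1.0
    while acc * per_step >= target:
        acc *= per_step
        steps += 1
    return steps


if __name__ == "__main__":
    for p in (0.75, 0.80, 0.90):
        print(f"{p:.0%} per step -> {end_to_end_accuracy([p] * 3):.1%} over three steps")
    # prints 42.2%, 51.2%, and 72.9% respectively
    print(max_steps_above(0.90, 0.5))  # steps a 90%-accurate agent can chain before falling below 50%
```

Even at 90% per step, only six chained steps fit before the workflow is more likely to fail than succeed, which is why multi-step agents feel so much shakier than single-step copilots.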

Far from being a revenue driver for organizations, most AI agents would be actively harmful if released into production at their current performance. According to Tomasz, we need to solve that problem first.

It’s important to be able to talk about agents, but no one has had any success with them outside of a demo. And regardless of how much people in the Valley might love to talk about AI agents, that talk doesn’t translate into performance.

10. Pipelines are expanding — but quality coverage isn’t (Tomasz)

“At a dinner with a bunch of heads of AI, I asked how many people were satisfied with the quality of the outputs, and no one raised their hands. There’s a real quality challenge in getting consistent outputs.”

According to Tomasz, everyone wants AI in their workflows, so the number of pipelines will increase dramatically. As those pipelines massively expand, the quality of the data flowing through them becomes absolutely essential. Teams need to monitor their pipelines end to end, or they’ll end up making the wrong decisions, and the total volume of data to watch will be tremendous.
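What does that monitoring look like in practice? A minimal sketch is a set of checks run after every pipeline job on three common signals: freshness, volume, and completeness. The `TableSnapshot` structure, the thresholds, and the default tolerances below are all hypothetical assumptions for illustration, not any particular vendor's approach.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass
class TableSnapshot:
    # Hypothetical metadata collected after each pipeline run.
    name: str
    last_updated: datetime
    row_count: int
    null_fraction: float  # share of null values in a key column


def check_snapshot(
    snap: TableSnapshot,
    *,
    now: datetime,
    max_staleness: timedelta,
    expected_rows: int,
    row_tolerance: float = 0.5,
    max_null_fraction: float = 0.05,
) -> List[str]:
    """Return human-readable issues for freshness, volume, and completeness checks."""
    issues = []
    if now - snap.last_updated > max_staleness:
        issues.append(f"{snap.name}: stale, last updated {snap.last_updated.isoformat()}")
    low = expected_rows * (1 - row_tolerance)
    high = expected_rows * (1 + row_tolerance)
    if not low <= snap.row_count <= high:
        issues.append(f"{snap.name}: {snap.row_count} rows, expected {low:.0f}-{high:.0f}")
    if snap.null_fraction > max_null_fraction:
        issues.append(f"{snap.name}: {snap.null_fraction:.1%} nulls in key column")
    return issues


if __name__ == "__main__":
    now = datetime(2025, 1, 1, 12, tzinfo=timezone.utc)
    snap = TableSnapshot("orders", now - timedelta(hours=30), 400, 0.12)
    for issue in check_snapshot(snap, now=now, max_staleness=timedelta(hours=24), expected_rows=1000):
        print(issue)
```

Hand-set thresholds like these are exactly what becomes hard to maintain as pipelines multiply, which is one reason teams tend to move toward learned baselines and centralized incident management.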

Each year, Monte Carlo surveys real data professionals about the state of their data quality. This year, we turned our gaze to the shadow of AI, and the message was clear.

Data quality risks are evolving — but data quality management isn’t.

“We’re seeing teams build out vector databases or embedding models at scale. SQLite at scale. All of these 100 million small databases. They’re starting to be architected at the CDN layer to run all these small models. iPhones will have machine learning models. We’re going to see an explosion in the total number of pipelines but with much smaller data volumes.”

The pattern of fine-tuning will create an explosion in the number of data pipelines within an organization. But the more pipelines expand, the more difficult data quality becomes.

Data quality risk grows with the volume and complexity of your pipelines. The more pipelines you have (and the more complex they become), the more opportunities you’ll have for things to break, and the less likely you’ll be to find them in time.
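A quick back-of-the-envelope model shows why sheer pipeline count matters. If each of n pipelines breaks independently with some small probability p on a given day, the chance that at least one breaks is 1 − (1 − p)^n, and the expected number of breaks is n × p. The independence assumption and the 0.1% daily failure rate below are hypothetical numbers chosen for illustration, not survey results.

```python
def prob_any_failure(n_pipelines: int, p_fail: float) -> float:
    """Chance that at least one of n pipelines breaks in a period.

    Toy assumption: pipelines fail independently, each with probability p_fail.
    """
    if n_pipelines < 0 or not 0.0 <= p_fail <= 1.0:
        raise ValueError("need n_pipelines >= 0 and 0 <= p_fail <= 1")
    return 1.0 - (1.0 - p_fail) ** n_pipelines


if __name__ == "__main__":
    p = 0.001  # hypothetical daily failure rate per pipeline
    for n in (10, 100, 1_000, 10_000):
        print(f"{n:>6} pipelines -> {prob_any_failure(n, p):.1%} chance of a break today, "
              f"{n * p:.2f} breaks expected")
```

With a handful of pipelines, an incident is a rare event; with thousands of small fine-tuning pipelines, some breakage becomes a near-daily certainty, and the hard part shifts to noticing it in time.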

+++

What do you think? Reach out to Barr at [email protected]. I’m all ears.

Top 10 Data & AI Trends for 2025 was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.