Get familiar with the building blocks of Agents in LangChain

Let’s build a simple agent in LangChain to help us understand some of the foundational concepts and building blocks for how agents work there.

By keeping it simple we can get a better grasp of the foundational ideas behind these agents, allowing us to build more complex agents in the future.

Contents

What are Agents?

Building the Agent

- The Tools

- The Toolkit

- The LLM

- The Prompt

The Agent

Testing our Agent

Observations

The Future

Conclusion

What are Agents?

The LangChain documentation actually has a pretty good page on the high level concepts around its agents. It’s a short easy read, and definitely worth skimming through before getting started.

If you look up the definition of AI agents, you get something along the lines of: “An entity that is able to perceive its environment, act on its environment, and make intelligent decisions about how to reach a goal it has been given, as well as the ability to learn as it goes.”

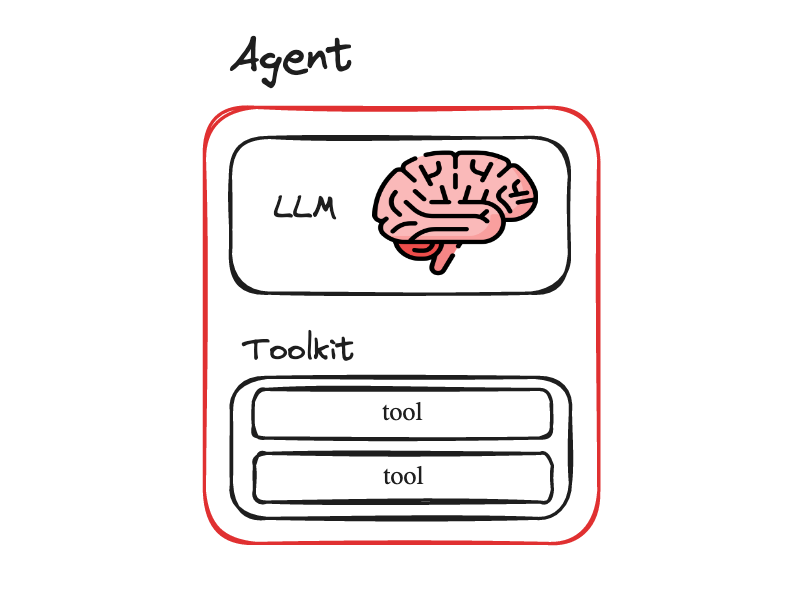

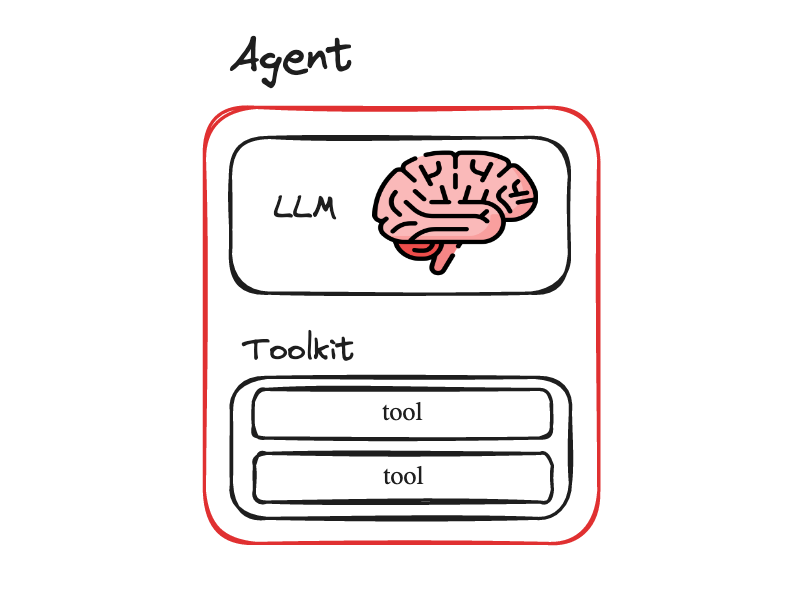

That fits the definition of LangChain agents pretty well, I would say. What makes all this possible in software is the reasoning ability of Large Language Models (LLMs). The brain of a LangChain agent is an LLM. It is the LLM that reasons about the best way to carry out the task requested by a user.

To carry out its task, operate on things, and retrieve information, the agent has what LangChain calls Tools at its disposal. It is through these tools that it is able to interact with its environment.

The tools are basically just methods/classes the agent has access to that can do things like interact with a stock market index over an API, update a Google Calendar event, or run a query against a database. We can build out tools as needed, depending on the nature of the tasks we want the agent to fulfil.

A collection of Tools in LangChain is called a Toolkit. Implementation-wise, this is literally just an array of the Tools that are available to the agent. As such, the high-level overview of an agent in LangChain looks something like this

So, at a basic level, an agent needs

- an LLM to act as its brain, and to give it its reasoning abilities

- tools so that it can interact with the environment around it and achieve its goals

Building the Agent

To make some of these concepts more concrete, let’s build a simple agent.

We will create a Mathematics Agent that can perform a few simple mathematical operations.

Environment setup

First, let’s set up our environment and script

mkdir simple-math-agent && cd simple-math-agent

touch math-agent.py

python3 -m venv .venv

. .venv/bin/activate

pip install langchain langchain_openai

Alternatively, you can also clone the code used here from GitHub

git clone git@github.com:smaameri/simple-math-agent.git

or check out the code inside a Google Colab also.

The Tools

The simplest place to start will be to first define the tools for our Maths agent.

Let’s give it “add”, “multiply” and “square” tools, so that it can perform those operations on questions we pass to it. By keeping our tools simple we can focus on the core concepts, and build the tools ourselves, instead of relying on an existing and more complex tool like the WikipediaTool, which acts as a wrapper around the Wikipedia API, and requires us to import it from the LangChain library.

Again, we are not trying to do anything fancy here, just keeping it simple and putting the main building blocks of an agent together so we can understand how they work, and get our first agent up and running.

Let’s start with the “add” tool. The bottom up way to create a Tool in LangChain would be to extend the BaseTool class, set the name and description fields on the class, and implement the _run method. That would look like this

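from typing import Optional, Type

from langchain_core.callbacks import CallbackManagerForToolRun
from langchain_core.tools import BaseTool
from pydantic import BaseModel

class AddTool(BaseTool):
    # name and description are what the LLM sees when choosing a tool
    name: str = "add"
    description: str = "Adds two numbers together"
    # AddInput is the pydantic model describing the arguments (defined separately)
    args_schema: Type[BaseModel] = AddInput
    return_direct: bool = True

    def _run(
        self, a: int, b: int, run_manager: Optional[CallbackManagerForToolRun] = None
    ) -> int:
        return a + b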

Notice that we need to implement the _run method to show what our tool does with the parameters that are passed to it.

Notice also how it requires a pydantic model for the args_schema. We will define that here

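# Older LangChain releases imported these from langchain_core.pydantic_v1 instead
from pydantic import BaseModel, Field

class AddInput(BaseModel):
    a: int = Field(description="first number")
    b: int = Field(description="second number")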

Now, LangChain does give us an easier way to define tools than extending the BaseTool class each time. We can do this with the help of the @tool decorator. Defining the “add” tool in LangChain using the @tool decorator will look like this

from langchain.tools import tool

@tool
def add(a: int, b: int) -> int:
    """Adds two numbers together"""  # this docstring gets used as the description
    return a + b  # the actions our tool performs

Much simpler, right? Behind the scenes, the decorator uses the method provided to build a tool based on the BaseTool class, just as we did earlier. Some things to note:

- the method name also becomes the tool name

- the method params define the input parameters for the tool

- the docstring gets converted into the tool’s description

You can access these properties on the tool also

print(add.name)         # add
print(add.description)  # Adds two numbers together
print(add.args)         # {'a': {'title': 'A', 'type': 'integer'}, 'b': {'title': 'B', 'type': 'integer'}}
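To make the decorator feel a little less magical, here is a toy version written with only the standard library. It is a rough sketch of the idea, not LangChain’s actual implementation: the SimpleTool class and the simple_tool decorator are names I made up for illustration. It shows how a name, a description and an argument list can all be read off a plain Python function.

```python
import inspect


class SimpleTool:
    """Toy stand-in for a tool object (hypothetical, not LangChain's class)."""

    def __init__(self, func):
        self.func = func
        self.name = func.__name__                      # function name -> tool name
        self.description = inspect.getdoc(func) or ""  # docstring -> description
        # parameter names and type annotations -> a very simple argument schema
        self.args = {}
        for param in inspect.signature(func).parameters.values():
            if param.annotation is inspect.Parameter.empty:
                self.args[param.name] = "any"
            else:
                self.args[param.name] = param.annotation.__name__

    def run(self, **kwargs):
        """Call the wrapped function with keyword arguments."""
        return self.func(**kwargs)


def simple_tool(func):
    """Hypothetical decorator that wraps a function in a SimpleTool."""
    return SimpleTool(func)


@simple_tool
def add(a: int, b: int) -> int:
    """Adds two numbers together"""
    return a + b


print(add.name)
print(add.description)
print(add.args)
print(add.run(a=1, b=2))
```

The real @tool decorator does a lot more (argument validation, callbacks, async support), but the mapping from function to tool metadata follows the same spirit.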

Note that the description of a tool is very important, as this is what the LLM uses to decide whether or not it is the right tool for the job. A bad description may lead to the tool not getting used when it should be, or getting used at the wrong times.

With the add tool done, let’s move on to the definitions for our multiply and square tools.

@tool
def multiply(a: int, b: int) -> int:
    """Multiply two numbers."""
    return a * b

@tool
def square(a) -> int:
    """Calculates the square of a number."""
    a = int(a)
    return a * a

And that is it, simple as that.

So we have defined our own three custom tools. A more common use case might be to use some of the existing tools already provided in LangChain, which you can see here. However, at the source code level, they would all be built and defined using methods similar to those described above.

That is all as far as our Tools are concerned. Now it is time to combine our tools into a Toolkit.

The Toolkit

Toolkits sound fancy, but they are actually very simple. They are literally just a list of tools. We can define our toolkit as an array of tools like so

toolkit = [add, multiply, square]

And that’s it. Really straightforward, and nothing to get confused over.
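To show why a plain list is all we need, here is a small sketch of how a runtime could pick a tool out of the toolkit by name and call it. Plain functions stand in for the LangChain tools here, and tools_by_name and call_tool are hypothetical helpers of my own, not part of LangChain.

```python
def add(a: int, b: int) -> int:
    """Adds two numbers together"""
    return a + b


def multiply(a: int, b: int) -> int:
    """Multiply two numbers."""
    return a * b


def square(a: int) -> int:
    """Calculates the square of a number."""
    return a * a


# The toolkit is just a list of callables
toolkit = [add, multiply, square]

# Index the tools by name so a requested tool can be found quickly
tools_by_name = {fn.__name__: fn for fn in toolkit}


def call_tool(name, **kwargs):
    """Look up a tool by name and call it with the given arguments."""
    if name not in tools_by_name:
        raise KeyError(f"No tool named {name!r} in the toolkit")
    return tools_by_name[name](**kwargs)


print(call_tool("multiply", a=3, b=4))
```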

Usually Toolkits are groups of tools that are useful together, and would be helpful for agents trying to carry out certain kinds of tasks. For example an SQLToolkit might contain a tool for generating an SQL query, validating an SQL query, and executing an SQL query.

The Integrations Toolkit page on the LangChain docs has a large list of toolkits developed by the community that might be useful for you.

The LLM

As mentioned above, an LLM is the brains of an agent. Based on the question passed to it and on each tool’s description, it decides which tools to call and what the best next steps are. It also decides when it has reached its final answer, and is ready to return that to the user.

Let’s set up the LLM here

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-3.5-turbo-1106", temperature=0)

The Prompt

Lastly, we need a prompt to pass into our agent, so it has a general idea about what kind of agent it is, and what sorts of tasks it should solve.

Our agent requires a ChatPromptTemplate to work (more on that later). This is what a barebones ChatPromptTemplate looks like. The main part we care about is the system prompt, and the rest are just the default settings we are required to pass in.

In our prompt we have included a sample answer, showing the agent that we want it to return the answer only, and not any descriptive text along with the answer

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", """
          You are a mathematical assistant. Use your tools to answer questions.
          If you do not have a tool to answer the question, say so.

          Return only the answers. e.g
          Human: What is 1 + 1?
          AI: 2
          """),
        MessagesPlaceholder("chat_history", optional=True),
        ("human", "{input}"),
        MessagesPlaceholder("agent_scratchpad"),
    ]
)
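If the placeholders look a bit abstract, the sketch below mimics, in plain Python, how such a template could be flattened into the list of messages the LLM receives. It is a simplification under my own assumptions: build_messages is a made-up helper, and the ("ai", ...) and ("tool", ...) entries are hand-written stand-ins for the tool calls and tool results that the agent scratchpad accumulates between steps.

```python
def build_messages(system_prompt, user_input, chat_history=None, agent_scratchpad=None):
    """Assemble the message list in the same order as the prompt template."""
    messages = [("system", system_prompt)]
    messages.extend(chat_history or [])      # optional earlier conversation turns
    messages.append(("human", user_input))   # fills the {input} slot
    messages.extend(agent_scratchpad or [])  # tool calls and results so far
    return messages


SYSTEM = "You are a mathematical assistant. Use your tools to answer questions."

# First call to the LLM: nothing in the scratchpad yet
first_call = build_messages(SYSTEM, "what is 1 + 1")

# Second call: the scratchpad now records the tool call and its result
second_call = build_messages(
    SYSTEM,
    "what is 1 + 1",
    agent_scratchpad=[("ai", "call add with a=1, b=1"), ("tool", "2")],
)

for role, content in second_call:
    print(f"{role}: {content}")
```

The point is that the scratchpad grows with every tool call, so on each pass the LLM can see what it has already tried.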

That is it. We have set up our Tools and Toolkit, which our agent will need as part of its setup, so it knows what types of actions and capabilities it has at its disposal. And we have also set up the LLM and system prompt.

Now for the fun part. Setting up our Agent!

The Agent

LangChain has a number of different agent types that can be created, with different reasoning powers and abilities. We will be using the most capable and powerful agent currently available, the OpenAI Tools agent. As per the docs on the OpenAI Tools agent, which uses newer OpenAI models also,

Newer OpenAI models have been fine-tuned to detect when one or more function(s) should be called and respond with the inputs that should be passed to the function(s). In an API call, you can describe functions and have the model intelligently choose to output a JSON object containing arguments to call these functions. The goal of the OpenAI tools APIs is to more reliably return valid and useful function calls than what can be done using a generic text completion or chat API.

In other words, this agent is good at generating the correct structure for calling functions, and is able to understand if more than one function (tool) might be needed for our task. This agent also has the ability to call functions (tools) with multiple input parameters, just like ours do. Some agents can only work with functions that have a single input parameter.

If you are familiar with OpenAI’s Function calling feature, where we can use the OpenAI LLM to generate the correct parameters to call a function with, the OpenAI Tools agent we are using here is leveraging some of that power in order to be able to call the correct tool, with the correct parameters.
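To give a feel for what function calling involves, here is a rough sketch that turns a Python function into a tool description in the JSON shape OpenAI’s tools API expects, as far as I understand that format, and then executes a tool call. The to_openai_tool helper and the example_tool_call dictionary are hand-written for illustration; they are not LangChain code and not real model output.

```python
import inspect
import json

# Minimal mapping from Python annotations to JSON Schema type names
PY_TO_JSON = {int: "integer", float: "number", str: "string", bool: "boolean"}


def to_openai_tool(func):
    """Describe a function as a tool: name, description and parameter schema."""
    params = inspect.signature(func).parameters
    properties = {
        name: {"type": PY_TO_JSON.get(param.annotation, "string")}
        for name, param in params.items()
    }
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": list(params),
            },
        },
    }


def add(a: int, b: int) -> int:
    """Adds two numbers together"""
    return a + b


spec = to_openai_tool(add)
print(json.dumps(spec, indent=2))

# A hand-written example of what a model's tool call could look like:
# the tool name plus the arguments encoded as a JSON string
example_tool_call = {"name": "add", "arguments": '{"a": 1, "b": 1}'}
result = add(**json.loads(example_tool_call["arguments"]))
print(result)
```

The model never runs the function itself. It only produces the name and arguments, and it is up to our code (here, the agent runtime) to make the actual call.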

In order to set up an agent in LangChain, we need to use one of the factory methods provided for creating the agent of our choice.

The factory method for creating an OpenAI tools agent is create_openai_tools_agent(). It requires passing in the llm, tools and prompt we set up above. So let’s initialise our agent.

agent = create_openai_tools_agent(llm, toolkit, prompt)

Finally, in order to run agents in LangChain, we cannot just call a “run” type method on them directly. They need to be run via an AgentExecutor.

I am bringing up the AgentExecutor only here at the end as I don’t think it’s a critical concept for understanding how agents work, and bringing it up at the start with everything else would just make the whole thing seem more complicated than it needs to be, as well as distract from some of the other more fundamental concepts.

So, now that we are introducing it: an AgentExecutor acts as the runtime for agents in LangChain, and allows an agent to keep running until it is ready to return its final response to the user. In pseudo-code, the AgentExecutor is doing something along the lines of (pulled directly from the LangChain docs)

next_action = agent.get_action(...)
while next_action != AgentFinish:
    observation = run(next_action)
    next_action = agent.get_action(..., next_action, observation)
return next_action

So it is basically a while loop that keeps asking the agent for its next action, until the agent has returned its final response.
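Here is a runnable toy version of that loop, using only the standard library. It is a sketch under my own assumptions, not the real AgentExecutor: the AgentAction and AgentFinish dataclasses are simplified stand-ins named after the LangChain concepts, fake_agent is a scripted function playing the role of the LLM, and the max_iterations guard is a safeguard I added for the sketch.

```python
from dataclasses import dataclass


@dataclass
class AgentAction:
    """The agent wants a tool to be run with these arguments."""
    tool: str
    tool_input: dict


@dataclass
class AgentFinish:
    """The agent has reached its final answer."""
    output: str


def square(a: int) -> int:
    return a * a


def fake_agent(question, steps):
    """Scripted stand-in for the LLM: call square once, then finish."""
    if not steps:
        return AgentAction(tool="square", tool_input={"a": 5})
    _last_action, last_observation = steps[-1]
    return AgentFinish(output=str(last_observation))


def run_agent(agent, tools, question, max_iterations=5):
    """Keep asking the agent for its next step until it returns AgentFinish."""
    steps = []  # (action, observation) pairs, similar in spirit to the scratchpad
    next_step = agent(question, steps)
    iterations = 0
    while not isinstance(next_step, AgentFinish):
        if iterations >= max_iterations:
            raise RuntimeError("Agent did not finish within max_iterations")
        observation = tools[next_step.tool](**next_step.tool_input)
        steps.append((next_step, observation))
        next_step = agent(question, steps)
        iterations += 1
    return next_step.output


answer = run_agent(fake_agent, {"square": square}, "what is 5 squared?")
print(answer)
```

In the real thing the scripted function is replaced by a call to the LLM, which decides at each pass whether to request another tool call or to finish.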

So, let us set up our agent inside the agent executor. We pass it the agent, and must also pass it the toolkit. We are also setting verbose to True so we can get an idea of what the agent is doing as it processes our request

agent_executor = AgentExecutor(agent=agent, tools=toolkit, verbose=True)

And that is it. We are now ready to pass commands to our agent

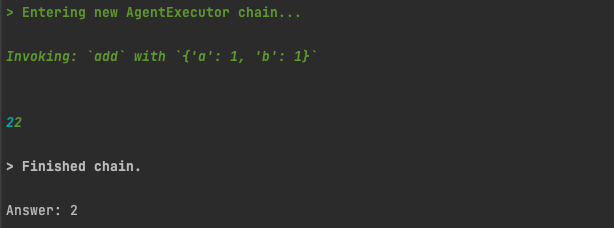

result = agent_executor.invoke({"input": "what is 1 + 1"})

Let’s run our script, and see the agent’s output

python3 math-agent.py

Since we have set verbose=True on the AgentExecutor, we can see the lines of Action our agent has taken. It has identified we should call the “add” tool, called the “add” tool with the required parameters, and returned us our result.

This is what the full source code looks like

import os

from langchain.agents import AgentExecutor, create_openai_tools_agent
from langchain_openai import ChatOpenAI
from langchain.tools import BaseTool, StructuredTool, tool
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

os.environ["OPENAI_API_KEY"] = "sk-"

# setup the tools
@tool
def add(a: int, b: int) -> int:
    """Add two numbers."""
    return a + b

@tool
def multiply(a: int, b: int) -> int:
    """Multiply two numbers."""
    return a * b

@tool
def square(a) -> int:
    """Calculates the square of a number."""
    a = int(a)
    return a * a

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", """You are a mathematical assistant.
        Use your tools to answer questions. If you do not have a tool to
        answer the question, say so.

        Return only the answers. e.g
        Human: What is 1 + 1?
        AI: 2
        """),
        MessagesPlaceholder("chat_history", optional=True),
        ("human", "{input}"),
        MessagesPlaceholder("agent_scratchpad"),
    ]
)

# Choose the LLM that will drive the agent
llm = ChatOpenAI(model="gpt-3.5-turbo-1106", temperature=0)

# setup the toolkit
toolkit = [add, multiply, square]

# Construct the OpenAI Tools agent
agent = create_openai_tools_agent(llm, toolkit, prompt)

# Create an agent executor by passing in the agent and tools
agent_executor = AgentExecutor(agent=agent, tools=toolkit, verbose=True)

result = agent_executor.invoke({"input": "what is 1 + 1?"})

print(result['output'])

Testing our agent

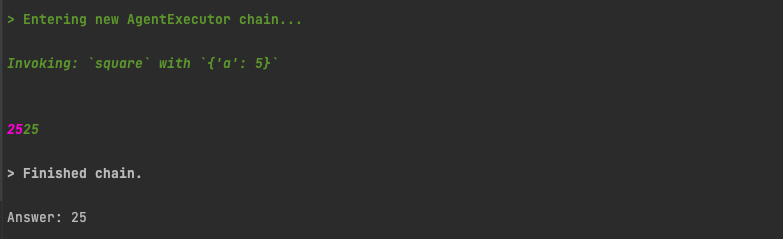

Let’s shoot a few questions at our agent to see how it performs.

what is 5 squared?

Again we get the correct result, and see that it does use our square tool

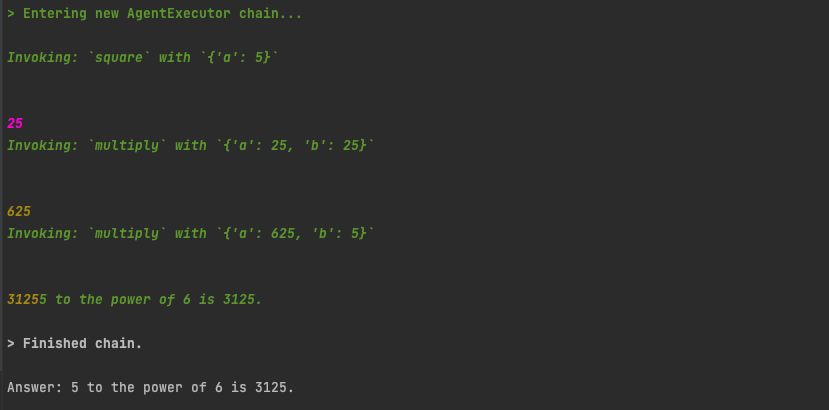

what is 5 to the power of 6?

It takes an interesting course of action. It first uses the square tool and then, using the result of that, tries to use the multiply tool a few times to get the final answer. Admittedly, the final answer, 3125, is wrong: it is 5 to the power of 5, and needs to be multiplied by 5 one more time to get the correct answer, 15625. But it is interesting to see how the agent tried to use different tools, and multiple steps, to try and get to the final answer.
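For reference, the chain of tool calls that would give the right answer can be worked through with plain Python versions of our square and multiply tools. This is just the arithmetic, not a trace of what the agent actually ran.

```python
def multiply(a: int, b: int) -> int:
    return a * b


def square(a: int) -> int:
    return a * a


# 5^6 = (5^2) * 5 * 5 * 5 * 5: one square followed by four multiplications
result = square(5)
trace = [result]
for _ in range(4):
    result = multiply(result, 5)
    trace.append(result)

print(trace)   # [25, 125, 625, 3125, 15625]
print(result)  # 15625
```

Stopping after three multiplications instead of four leaves you at 3125, which is exactly where the agent ended up.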

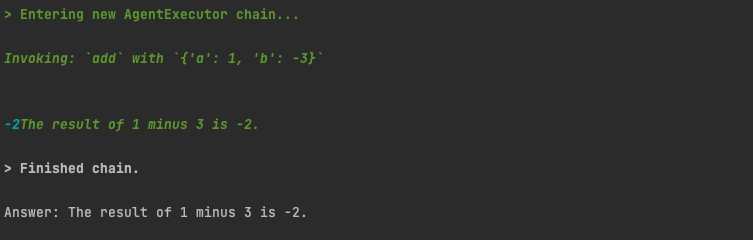

what is 1 minus 3?

We don’t have a minus tool. But it is smart enough to use our add tool with the second value set to -3. It’s funny, and somewhat amazing, how smart and creative these models can be sometimes.

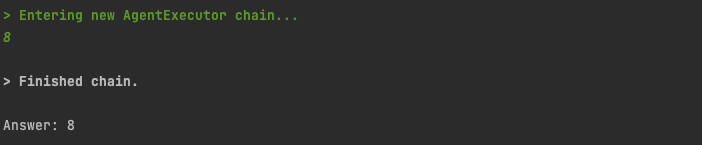

what is the square root of 64

As a final test, what if we ask it to carry out a mathematical operation that is not part of our tool set? Since we have no tool for square roots, it does not attempt to call a tool, and instead calculates the value directly using just the LLM.

Our system prompt did tell it to say so if it does not have the correct tool for the job, and it did do that sometimes during testing. An improved system prompt could probably help resolve that, at least to some extent.

Observations

Based on using the agent a bit, I noticed the following

- when asking it direct questions which it had the tools to answer with, it was pretty consistent at using the correct tools for the job, and returning the correct answer. So, pretty reliable in that sense.

- if the question is a little complicated, for example our “5 to the power of 6” question, it does not always return the correct results.

- it can sometimes use just the pure power of the LLM to answer our question, without invoking our tools.

The Future

Agents, and programs that can reason for themselves, are a new paradigm in programming, and I think they are going to become a much more mainstream part of how lots of things are built. Obviously, the non-deterministic (i.e. not wholly predictable) nature of LLMs means that agents’ results will also suffer from this, which raises the question of how much we can rely on them for tasks where we need to be sure of the answers we have.

Perhaps as the technology matures, their results will become more and more predictable, and we may develop some workarounds for this.

I can also see agent-type libraries and packages starting to become a thing. Similar to how we install third-party libraries and packages into software, for example via the pip package manager for Python, or Docker Hub for Docker images, I wonder if we may start to see a library and package manager of agents being developed, with agents that become very good at their specific tasks, which we can then also install as packages into our application.

Indeed, LangChain’s library of Toolkits for agents to use, listed on their Integrations page, is a set of Tools built by the community for people to use, which could be an early example of agent-type libraries built by the community.

Conclusion

Hope this was a useful introduction into getting you started building with agents in LangChain.

Remember, agents are basically just a brain (the LLM), and a bunch of tools, which they can use to get stuff done in the world around us.

Happy hacking!


Building a simple Agent with Tools and Toolkits in LangChain was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
