How to build a modern, scalable data platform to power your analytics and data science projects (updated)

Table of Contents:

- What’s changed?

- The Platform

- Integration

- Data Store

- Transformation

- Orchestration

- Presentation

- Transportation

- Observability

- Closing

What’s changed?

Since 2021, maybe a better question is what HASN’T changed?

Stepping out of the shadow of COVID, our society has grappled with a myriad of challenges — political and social turbulence, fluctuating financial landscapes, the surge in AI advancements, and Taylor Swift emerging as the biggest star in the … *checks notes* … National Football League!?!

Over the last three years, my life has changed as well. I’ve navigated the data challenges of various industries, lending my expertise through work and consultancy at both large corporations and nimble startups.

Simultaneously, I’ve dedicated substantial effort to shaping my identity as a Data Educator, collaborating with some of the most renowned companies and prestigious universities globally.

As a result, here’s a short list of what inspired me to write an amendment to my original 2021 article:

- Scale

Companies, big and small, are starting to reach levels of data scale previously reserved for Netflix, Uber, Spotify and other giants creating unique services with data. Simply cobbling together data pipelines and cron jobs across various applications no longer works, so there are new considerations when discussing data platforms at scale.

- Streaming

Although I briefly mentioned streaming in my 2021 article, you’ll see a renewed focus in the 2024 version. I’m a strong believer that data has to move at the speed of business, and the only way to truly accomplish this in modern times is through data streaming.

- Orchestration

I mentioned modularity as a core concept of building a modern data platform in my 2021 article, but I failed to emphasize the importance of data orchestration. This time around, I have a whole section dedicated to orchestration and why it has emerged as a natural complement to a modern data stack.

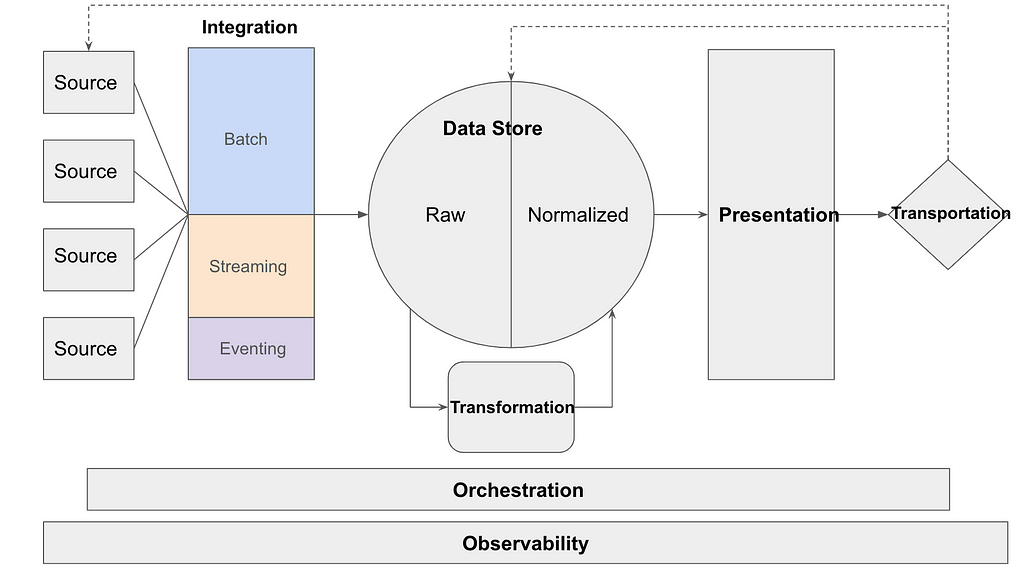

The Platform

To my surprise, there is still no single-vendor solution that covers the entire data landscape, although Snowflake has been trying its best through acquisition and development efforts (Snowpipe, Snowpark, Streamlit). Databricks has also made notable improvements to its platform, specifically in the ML/AI space.

All of the components from the 2021 article made the cut in 2024, but even the familiar entries look a little different three years later:

- Source

- Integration

- Data Store

- Transformation

- Orchestration

- Presentation

- Transportation

- Observability

Integration

The integration category gets the biggest upgrade in 2024, splitting into three logical subcategories:

- Batch

- Streaming

- Eventing

Batch

The ability to process incoming data signals from various sources at a daily/hourly interval is the bread and butter of any data platform.

Fivetran still seems like the undeniable leader in the managed ETL category, but it faces stiff competition from up-and-comers like Airbyte and from big cloud providers that have been strengthening their platform offerings.

Over the past 3 years, Fivetran has improved its core offering significantly, extended its connector library and even started to branch out into light orchestration with features like their dbt integration.

It’s also worth mentioning that many vendors, such as Fivetran, have combined the best of OSS and venture capital funding into a product-led growth model, with free tiers that lower the barrier to entry to enterprise-grade platforms.

Even if the problems you are solving require many custom source integrations, it makes sense to use a managed ETL provider for the bulk and custom Python code for the rest, all held together by orchestration.

Streaming

Kafka/Confluent is king when it comes to data streaming, but working with streaming data introduces a number of new considerations beyond topics, producers, consumers, and brokers, such as serialization, schema registries, stream processing/transformation and streaming analytics.
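To make those moving parts a little more concrete, here is a toy, in-memory sketch of a topic, a producer, a consumer, JSON serialization and a schema check. It is not Kafka or Confluent code: `InMemoryBroker`, `ORDER_SCHEMA` and the `orders` topic are hypothetical names made up for illustration, and the schema check is only a rough stand-in for what a schema registry does.

```python
import json
from collections import defaultdict

# Hypothetical schema: field name -> required Python type.
ORDER_SCHEMA = {"order_id": int, "amount": float}


class InMemoryBroker:
    """Toy stand-in for a broker: each topic is an append-only list of bytes."""

    def __init__(self):
        self.topics = defaultdict(list)

    def produce(self, topic, record, schema):
        # Validate before serializing, roughly the job a schema registry does.
        for field, expected_type in schema.items():
            if not isinstance(record.get(field), expected_type):
                raise ValueError(f"field {field!r} must be {expected_type.__name__}")
        self.topics[topic].append(json.dumps(record).encode("utf-8"))

    def consume(self, topic, offset=0):
        # Consumers read from an offset and deserialize each message.
        return [json.loads(raw) for raw in self.topics[topic][offset:]]


broker = InMemoryBroker()
broker.produce("orders", {"order_id": 1, "amount": 9.5}, ORDER_SCHEMA)
broker.produce("orders", {"order_id": 2, "amount": 20.0}, ORDER_SCHEMA)

# Read everything from offset 1 onward (skips the first message).
messages = broker.consume("orders", offset=1)
```

A real deployment adds partitions, consumer groups, durable storage and a binary format such as Avro or Protobuf, but the contract between producer, schema and consumer has the same shape.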

Confluent is doing a good job of aggregating all of the components required for successful data streaming under one roof, but I’ll be pointing out streaming considerations throughout other layers of the data platform.

The introduction of data streaming doesn’t inherently demand a complete overhaul of the data platform’s structure. In truth, the synergy between batch and streaming pipelines is essential for tackling the diverse challenges posed to your data platform at scale. The key to seamlessly addressing these challenges lies, unsurprisingly, in data orchestration.

Eventing

In many cases, the data platform itself needs to be responsible for, or at the very least inform, the generation of first party data. Many could argue that this is a job for software engineers and app developers, but I see a synergistic opportunity in allowing the people who build your data platform to also be responsible for your eventing strategy.

I break down eventing into two categories:

- Change Data Capture — CDC

The basic gist of CDC is using your database’s CRUD commands as a stream of data itself. The first CDC platform I came across was an OSS project called Debezium and there are many players, big and small, vying for space in this emerging category.

- Click Streams — Segment/Snowplow

Building telemetry to capture customer activity on websites or applications is what I am referring to as click streams. Segment rode the click stream wave to a billion dollar acquisition, Amplitude built click streams into an entire analytical platform and Snowplow has been surging more recently with their OSS approach, demonstrating that this space is ripe for continued innovation and eventual standardization.

AWS has been a leader in data streaming, offering templates to establish the outbox pattern and building services that support it, such as MSK, SQS, SNS, Lambda, DynamoDB and more.
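The CDC idea described above, treating a database's create, update and delete operations as a stream, can be sketched in a few lines. The event shape used here (`op`, `key`, `after`) is an assumption for illustration and is not Debezium's actual message format; `apply_change` and `change_log` are hypothetical names.

```python
def apply_change(table, event):
    """Apply one change event to a dict keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("create", "update"):
        # Merge the new column values over whatever row state already exists.
        table[key] = {**table.get(key, {}), **event["after"]}
    elif op == "delete":
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")
    return table


# A hypothetical change log captured from a source table.
change_log = [
    {"op": "create", "key": 1, "after": {"name": "Ada", "plan": "free"}},
    {"op": "create", "key": 2, "after": {"name": "Grace", "plan": "free"}},
    {"op": "update", "key": 1, "after": {"plan": "pro"}},
    {"op": "delete", "key": 2},
]

# Replaying the log in order rebuilds the current state downstream.
replica = {}
for event in change_log:
    apply_change(replica, event)
```

Replaying the same log into a warehouse table, a cache or a search index is what lets downstream systems stay in sync without querying the source database directly.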

Data Store

Another significant change from 2021 to 2024 lies in the shift from “Data Warehouse” to “Data Store,” acknowledging the expanding database horizon, including the rise of Data Lakes.

Viewing Data Lakes as a strategy rather than a product emphasizes their role as a staging area for structured and unstructured data, potentially interacting with Data Warehouses. Selecting the right data store solution for each aspect of the Data Lake is crucial, but the overarching technology decision involves tying together and exploring these stores to transform raw data into downstream insights.

Distributed SQL engines like Presto and Trino, along with their numerous managed counterparts (Pandio, Starburst), have emerged to traverse Data Lakes, enabling users to join diverse data across various physical locations with SQL.
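As a very small-scale analogy for querying across separate stores, the sketch below uses Python's built-in `sqlite3` module to attach two independent in-memory databases and join across them in one SQL statement. This is not how Presto or Trino work internally, and the `crm` and `billing` databases and their tables are hypothetical; it only illustrates the user-facing idea of one query spanning several physical locations.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Each ATTACH creates a separate in-memory database, standing in for a separate store.
conn.execute("ATTACH DATABASE ':memory:' AS crm")
conn.execute("ATTACH DATABASE ':memory:' AS billing")

conn.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)", [(1, 40.0), (1, 10.0), (2, 5.0)]
)

# One SQL statement joins data that lives in two different databases.
rows = conn.execute(
    """
    SELECT c.name, SUM(i.amount) AS total
    FROM crm.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
```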

Amid the rush to keep up with generative AI and Large Language Model trends, specialized data stores like vector databases have become essential. These include open-source options like Weaviate, managed solutions like Pinecone and many more.
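At their core, vector databases answer "which stored vectors are closest to this one?" Here is a minimal brute-force sketch of that lookup using cosine similarity. The three-dimensional embeddings and document names are invented for illustration, and this is not the Weaviate or Pinecone API; production systems use approximate nearest-neighbor indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, index, k=1):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in scored[:k]]


# Hypothetical embeddings; real ones come from a model and have hundreds of dimensions.
index = {
    "doc_pricing": [0.9, 0.1, 0.0],
    "doc_onboarding": [0.1, 0.9, 0.2],
    "doc_security": [0.0, 0.2, 0.9],
}

top = nearest([0.8, 0.2, 0.1], index, k=2)
```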

Transformation

Few tools have revolutionized data engineering like dbt. Its impact has been so profound that it’s given rise to a new data role — the analytics engineer.

dbt has become the go-to choice for organizations of all sizes seeking to automate transformations across their data platform. dbt Core, the free, open-source version of the product, has played a pivotal role in familiarizing data engineers and analysts with dbt, hastening its adoption, and fueling the swift development of new features.

Among these features, dbt Mesh stands out as particularly impressive. It lets multiple dbt projects reference one another, so organizations can modularize their data transformation pipelines, which directly addresses the challenges of data transformations at scale.

Stream transformations represent a less mature area in comparison. Although there are established and reliable open-source projects like Flink, which has been in existence since 2011, their impact hasn’t resonated as strongly as tools dealing with “at rest” data, such as dbt. However, with the increasing accessibility of streaming data and the ongoing evolution of computing resources, there’s a growing imperative to advance the stream transformations space.

In my view, the future of widespread adoption in this domain depends on technologies like Flink SQL or emerging managed services from providers like Confluent, Decodable, Ververica, and Aiven. These solutions empower analysts to leverage a familiar language, such as SQL, and apply those concepts to real-time, streaming data.
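For a sense of what a stream transformation looks like, here is a plain-Python sketch of a tumbling-window count, the kind of aggregation a streaming SQL engine typically expresses as a windowed GROUP BY. The event tuples and the function name are hypothetical, and the sketch runs over a finite list; a real engine such as Flink handles unbounded input, out-of-order events and state management, none of which is modeled here.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per (window start, key) using fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for timestamp, key in events:
        # Every timestamp falls into exactly one window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical (timestamp in seconds, event type) pairs.
events = [
    (0, "checkout"),
    (12, "checkout"),
    (59, "search"),
    (60, "checkout"),
    (125, "search"),
]

result = tumbling_window_counts(events, window_seconds=60)
```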

Orchestration

Reviewing the Integration, Data Store, and Transformation components of constructing a data platform in 2024 highlights the daunting challenge of choosing between a multitude of tools, technologies, and solutions.

From my experience, the key to finding the right combination for your scenario is experimentation: swapping out different components until you achieve the desired outcome.

Data orchestration has become crucial in facilitating this experimentation during the initial phases of building a data platform. It not only streamlines the process but also offers scalable options to align with the trajectory of any business.

Orchestration is commonly executed through Directed Acyclic Graphs (DAGs) or code that structures hierarchies, dependencies, and pipelines of tasks across multiple systems. Simultaneously, it manages and scales the resources utilized to run these tasks.
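The DAG idea can be sketched with nothing but the standard library. The example below uses Python's `graphlib.TopologicalSorter` (Python 3.9+) to run tasks only after their dependencies have run. The task names and the `run_pipeline` helper are hypothetical, and this is not Airflow code; a real orchestrator adds scheduling, retries, parallelism and resource management on top of this ordering.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_sales": {"extract_orders", "extract_customers"},
    "publish_dashboard": {"transform_sales"},
}


def run_pipeline(dag, tasks):
    """Run each task after all of its dependencies and return the execution order."""
    executed = []
    # static_order() raises graphlib.CycleError if the graph is not acyclic.
    for name in TopologicalSorter(dag).static_order():
        tasks[name]()
        executed.append(name)
    return executed


# Placeholder callables; in practice these would trigger syncs, SQL, or scripts.
tasks = {name: (lambda: None) for name in pipeline}
order = run_pipeline(pipeline, tasks)
```

Swapping one component for another then means replacing a single callable while the dependency graph stays the same, which is the modularity argument in miniature.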

Airflow remains the go-to solution for data orchestration. It is available in managed flavors such as MWAA and Astronomer, and it has inspired newer alternatives like Prefect and Dagster.

Without an orchestration engine, the ability to modularize your data platform and unlock its full potential is limited. Additionally, it serves as a prerequisite for initiating a data observability and governance strategy, playing a pivotal role in the success of the entire data platform.

Presentation

Surprisingly, traditional data visualization platforms like Tableau, PowerBI, Looker, and Qlik continue to dominate the field. While data visualization witnessed rapid growth initially, the space has experienced relative stagnation over the past decade. An exception to this trend is Microsoft, with commendable efforts towards relevance and innovation, exemplified by products like PowerBI Service.

Emerging data visualization platforms like Sigma and Superset feel like the natural bridge to the future. They enable on-the-fly, resource-efficient transformations alongside world-class data visualization capabilities. However, a potent newcomer, Streamlit, has the potential to redefine everything.

Streamlit, a powerful Python library for building front-end interfaces to Python code, has carved out a valuable niche in the presentation layer. While the technical learning curve is steeper compared to drag-and-drop tools like PowerBI and Tableau, Streamlit offers endless possibilities, including interactive design elements, dynamic slicing, content display, and custom navigation and branding.

Streamlit has been so impressive that Snowflake acquired the company in 2022 for a reported $800M. How Snowflake integrates Streamlit into its suite of offerings will likely shape the future of both Snowflake and data visualization as a whole.

Transportation

Transportation, Reverse ETL, or data activation — the final leg of the data platform — represents the crucial stage where the platform’s transformations and insights loop back into source systems and applications, truly impacting business operations.

Currently, Hightouch stands out as a leader in this domain. Their robust core offering seamlessly integrates data warehouses with data-hungry applications. Notably, their strategic partnerships with Snowflake and dbt emphasize a commitment to being recognized as a versatile data tool, distinguishing them from mere marketing and sales widgets.

The future of the transportation layer seems destined to intersect with APIs, creating a scenario where API endpoints generated via SQL queries become as common as exporting .csv files to share query results. While this transformation is anticipated, there are few vendors exploring the commoditization of this space.
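Here is one rough way to picture "API endpoints generated via SQL queries": a mapping from a path to a parameterized query, plus a handler that returns the result as JSON. Everything in it is an assumption for illustration, including the `scores` table, the `/high-risk` path and the `handle` function, and it leaves out the HTTP server, authentication and caching a real service would need.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # lets each row be converted to a dict by column name
conn.execute("CREATE TABLE scores (customer TEXT, churn_risk REAL)")
conn.executemany(
    "INSERT INTO scores VALUES (?, ?)", [("acme", 0.82), ("globex", 0.15)]
)

# Hypothetical registry: each endpoint path is defined entirely by a SQL query.
ENDPOINTS = {
    "/high-risk": (
        "SELECT customer, churn_risk FROM scores "
        "WHERE churn_risk >= :threshold ORDER BY customer"
    ),
}


def handle(path, params):
    """Run the query registered for a path and return the rows as a JSON string."""
    rows = conn.execute(ENDPOINTS[path], params).fetchall()
    return json.dumps([dict(row) for row in rows])


body = handle("/high-risk", {"threshold": 0.5})
```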

Observability

Similar to data orchestration, data observability has emerged as a necessity to capture and track all the metadata produced by different components of a data platform. This metadata is then utilized to manage, monitor, and foster the growth of the platform.

Many organizations address data observability by constructing internal dashboards or relying on a single point of failure, such as the data orchestration pipeline, for observation. While this approach may suffice for basic monitoring, it falls short in solving more intricate logical observability challenges, like lineage tracking.

Enter DataHub, a popular open-source project gaining significant traction. Its managed service counterpart, Acryl, has further amplified its impact. DataHub excels at consolidating metadata exhaust from various applications involved in data movement across an organization. It seamlessly ties this information together, allowing users to trace KPIs on a dashboard back to the originating data pipeline and every step in between.
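Tracing a dashboard KPI back to its origins is, underneath, a graph traversal over lineage metadata. The sketch below shows that traversal on a hand-written lineage map. The node names and the `upstream` helper are hypothetical, and this is not the DataHub API; the hard part in practice is collecting the edges from every tool, not walking them.

```python
# Hypothetical lineage metadata: each asset maps to the assets it reads from.
lineage = {
    "dashboard.revenue_kpi": ["model.fct_revenue"],
    "model.fct_revenue": ["model.stg_orders", "model.stg_payments"],
    "model.stg_orders": ["source.orders"],
    "model.stg_payments": ["source.payments"],
}


def upstream(node, graph):
    """Return every asset that the given node depends on, directly or indirectly."""
    seen, stack = set(), [node]
    while stack:
        current = stack.pop()
        for parent in graph.get(current, []):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


ancestors = upstream("dashboard.revenue_kpi", lineage)
```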

Monte Carlo and Great Expectations serve a similar observability role in the data platform but with a more opinionated approach. The growing popularity of terms like “end-to-end data lineage” and “data contracts” suggests an imminent surge in this category. We can expect significant growth from both established leaders and innovative newcomers, poised to revolutionize the outlook of data observability.

Closing

The 2021 version of this article is 1,278 words.

The 2024 version of this article is well over 2,000 words before this closing.

I guess that means I should keep it short.

Building a platform that is fast enough to meet the needs of today and flexible enough to grow to the demands of tomorrow starts with modularity and is enabled by orchestration. In order to adopt the most innovative solution for your specific problem, your platform must make room for data solutions of all shapes and sizes, whether it’s an OSS project, a new managed service or a suite of products from AWS.

There are many ideas in this article but ultimately the choice is yours. I’m eager to hear how this inspires people to explore new possibilities and create new ways of solving problems with data.

Note: I’m not currently affiliated with or employed by any of the companies mentioned in this post, and this post isn’t sponsored by any of these tools.

Building a Data Platform in 2024 was originally published in Towards Data Science on Medium.
