Beyond Kleinberg’s Impossibility Theorem of Clustering: My Study Note of a Pragmatic Clustering Evaluation Framework

This article explores a pragmatic evaluation framework for clustering under the constraint of Kleinberg’s Impossible Theorem of Clustering

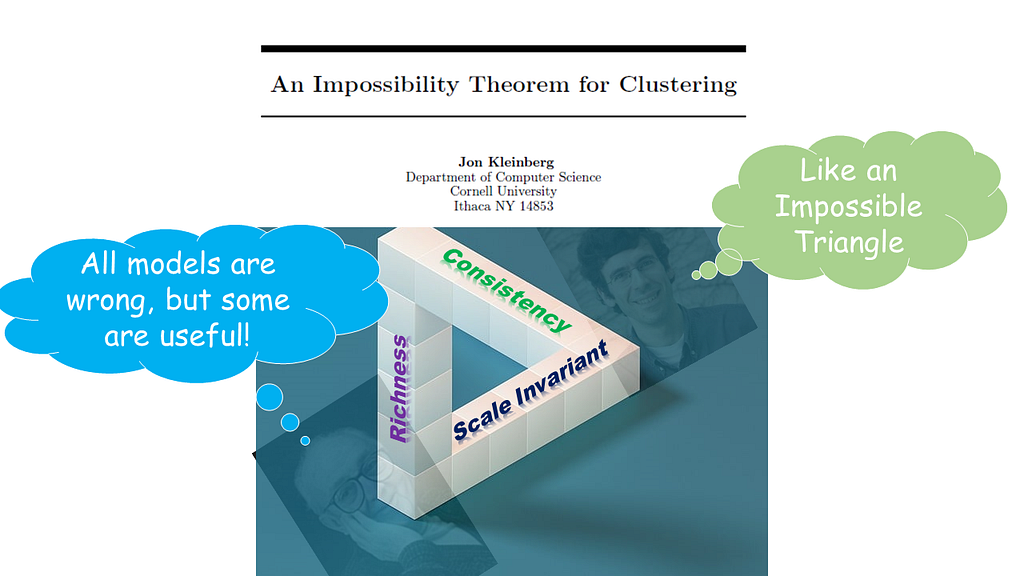

In his paper “an Impossibility Theorem of Clustering” published in 2002, Jon Kleinberg articulated that there is no clustering model that can satisfy all three desirable axioms of clustering simultaneously: scale invariance, richness, and consistency. (Kleinberg, 2002)

What do those three axioms mean? Here is an interpretation of the three axioms.

- Scale invariant means: a clustering algorithm should generate the same results when all distances among datapoints are scaled by the factor of a constant.

- Richness means: the clustering algorithm should demonstrate high capability in generating all possible partitions of a given dataset.

- Consistency means: when we enhance a set of clusters by increasing the inter-cluster distances and decreasing intra-cluster distances, the clustering algorithm should generate the same results.

To cut a long story short, Kleinberg demonstrated that a mathematically satisfactory clustering algorithm is non-existent.

This might be a (the?) death sentence for clustering analysis to some theoretical fundamentalists.

Nevertheless, I encountered one academic paper challenging the validity of Kleinberg Impossibility Theorem. I would not step into that territory. But if you are interested in that, here you are: “On the Discrepancy Between Kleinberg’s Clustering Axioms and k-Means Clustering Algorithm Behavior.”

Whatever the ground-truth may be, ever since Kleinberg released his impossibility theorem, many methodologies for clustering evaluation have been proposed from the field of engineering (e.g. applied mathematics, information theory, etc.).

To pursue pragmatism in filling the gap between theoretical/scientific limitations and practical functionality is the domain of engineering.

As a matter of fact, it seems that there is no universally accepted scientific theory that explains why airplanes can fly. Here is an article about it. In the absence of scientific theory, I have survived many flights, thanks to the art of engineering.

In appreciating the engineering spirit of pragmatism, I would need a reasonably good framework to fill the gap between Kleinberg’s Impossible Theorem and our daily practical applications for clustering analysis.

If airplanes can fly without universally accepted scientific theory, we can do clustering!

Maybe… why not!

Easier said than done, under Kleinberg’s Impossibility Theorem:

- How can we evaluate the results of clustering algorithms?

- How can we select algorithms best suited for a given objective?

A comprehensive understanding of these sorts of very simple questions remains elusive, at least to me.

In this backdrop, I encountered a paper written by Palacio-Niño & Berzal (2019), “Evaluation Metrics for Unsupervised Learning Algorithms”, in which they outlined a clustering validation framework in their attempt to better evaluate the quality of clustering performances under the mathematical limitations posed by “the Impossible Theorem”. Yes, they are quite conscious of Kleinberg’s Impossible Theorem in prescribing their framework.

To promote our pragmatic use of clustering algorithms, I thought that it would be constructive to share my study note about the pragmatic evaluation framework in this article.

Since this is my note, there are many modifications here and there for my own personal purposes deviating from the details of the paper written by Palacio-Niño & Berzal. In addition, because this article rather intends to paint an overall structure of the proposed clustering validation framework, it does not intend to get into details. If you wish, please read the full text of their original paper online to fill the gap between my article and their original paper.

As the final precaution, I don’t profess that this is a comprehensive or a standard guide of clustering validation framework. But I hope that novices to clustering analysis find it useful as a guide to shape their own clustering validation framework.

No more, no less.

Now, let’s start.

An Overall Framework of Cluster Validation

Here is the structure of their framework. We can see four stages of validation:

- preliminary evaluation,

- internal validation,

- external validation,

- relative validation.

Let’s check them one by one.

1. Preliminary evaluation:

The objective of this process is to simply confirm the presence of clusters in the dataset.

This process applies the framework of hypothesis testing to assess the presence of clustering tendency in the dataset. This process set the null hypothesis that the dataset is purely random so that there is no cluster tendency in the dataset.

Since hypothesis testing can be treated as a standalone subject, I will put it aside from this article going forward.

2. Internal Validation or Unsupervised Validation

The objective of the internal validation is to assess the quality of the clustering structure solely based on the given dataset without any external information about the ground-truth labels. In other words, when we do not have any advanced knowledge of the ground-truth labels, internal validation is a sole option.

A typical objective of clustering internal validation is to discover clusters that maximize intra-cluster similarities and minimize inter-cluster similarities. For this purpose, internal criteria are designed to measure intra-cluster similarities and inter-cluster dispersion. Simple!

That said, there is a catch:

“good scores on an internal criterion do not necessarily translate into good effectiveness in an application.” (Manning et al., 2008)

A better score on internal criterion do not necessarily guarantee a better effectiveness of the resulting model. Internal validation is not enough!

So, what would we do then?

This is the very reason why we do need external validations.

3. External Validation or Supervised Validation

In contrast to the internal validation, the external validation requires external class labels: ideally the ground-truth label, if not, potentially its representative surrogate. Because the very first reason why we use unsupervised clustering algorithm is because we do not have any idea about the labels to begin with, the idea of external validation appears absurd, paradoxical, or at least counterintuitive.

Nevertheless, when we have external information about the class labels — e.g. a set of results from a benchmark model or a gold standard model — we can implement the external validation.

Because we use reference class labels for validation, the objectives of external validation would naturally converge to the general validation framework of supervised classification analysis.

In broader terms, this category includes the model selection and human judgement.

4. Relative Validation:

Next, relative validation.

Here is an illustration of relative validation.

Particularly for the class of partitioning clustering (e.g. K-Means), the setting of the number of clusters is an important starting point to determine the configuration of algorithm, because it would materially affect the result of clustering.

In other words, for this class of clustering algorithms, the number of clusters is a hyperparameter of the algorithm. In this context, the number of clusters needs to be optimized from the perspective of algorithm’s parameters.

The problem here is the optimization needs to be simultaneously implemented together with other hyperparameters that determine the configuration of algorithm.

It requires comparisons to understand how a set of hyperparameters settings can affect the algorithmic configuration. This type of relative validation is typically treated within the domain of parameter optimization. Since parameter optimization of machine learning algorithm is a big subject of machine learning training (model development), I will put it aside from this article going forward.

By now, we have a fair idea about the overall profile of their validation framework.

Next, a relevant question is “What sort of metrics shall we use for each validation?”

In this context, I gathered some metrics as examples for the internal validation and the external validation in the next section.

Metrics for Internal & External Validations

Now, let’s focus on internal validation and external validation. Below, I will list some metrics of my choice with hyper-links where you can trace their definitions and formulas in details.

Since I will not cover the formulas for these metrics, the readers are advised to trace the hyper-links provided below to find them out!

A. Metrics used for Internal Validation

The objective of the internal validation is to establish the quality of the clustering structure solely based on the given dataset.

Classification of Internal evaluation methods:

Internal validation methods can be categorized accordingly to the classes of clustering methodologies. A typical classification of clustering can be formulated as follows:

- Partitioning methods (e.g. K-means),

- Hierarchical methods (e.g. agglomerative clustering),

- Density base methods (e.g. DBSCAN), and

- the rest

Here, I cover the first two: partitioning clustering and hierarchical clustering.

a) Partitioning Methods: e.g. K-means

For partitioning methods, there are three basis of evaluation metrics: cohesion, separation, and their hybrid.

Cohesion:

Cohesion evaluates the closeness of the inner-cluster data structure. The lower the value of cohesion metrics, the better quality the clusters are. An example of cohesion metrics is:

- SSW: Sum of Squared Errors Within Cluster.

Separation:

Separation is an inter-cluster metrics and evaluates the dispersion of the inter-cluster data structure. The idea behind a separation metric is to maximize the distance between clusters. An example of cohesion metrics is:

- SSB: Sum of Squared Errors between Clusters.

Hybrid of both cohesion and separation:

Hybrid type quantifies the level of separation and cohesion in a single metric. Here is a list of examples:

i) The silhouette coefficient: in the range of [-1, 1]

This metrics is a relative measure of the inter-cluster distance with neighboring cluster.

Here is a general interpretation of the metric:

- The best value: 1

- The worst value: -1.

- Values near 0: overlapping clusters.

- Negative values: high possibility that a sample is assigned to a wrong cluster.

Here is a use case example of the metric: https://www.geeksforgeeks.org/silhouette-index-cluster-validity-index-set-2/?ref=ml_lbp

ii) The Calisnki-Harabasz coefficient:

Also known as the Variance Ratio Criterion, this metrics measures the ratio of the sum of inter-clusters dispersion and of intra-cluster dispersion for all clusters.

For a given assignment of clusters, the higher the value of the metric, the better the clustering result is: since a higher value indicates that the resulting clusters are compact and well-separated.

Here is a use case example of the metric: https://www.geeksforgeeks.org/dunn-index-and-db-index-cluster-validity-indices-set-1/?ref=ml_lbp

iii) Dann Index:

For a given assignment of clusters, a higher Dunn index indicates better clustering.

Here is a use case example of the metric: https://www.geeksforgeeks.org/dunn-index-and-db-index-cluster-validity-indices-set-1/?ref=ml_lbp

iv) Davies Bouldin Score:

The metric measures the ratio of intra-cluster similarity to inter-cluster similarity. Logically, a higher metric suggests a denser intra-cluster structure and a more separated inter-cluster structure, thus, a better clustering result.

Here is a use case example of the metric: https://www.geeksforgeeks.org/davies-bouldin-index/

b) Hierarchical Methods: e.g. agglomerate clustering algorithm

i) Human judgement based on visual representation of dendrogram.

Although Palacio-Niño & Berzal did not include human judgement; it is one of the most useful tools for internal validation for hierarchical clustering based on dendrogram.

Instead, the co-authors listed the following two correlation coefficient metrics specialized in evaluating the results of a hierarchical clustering.

For both, their higher values indicate better results. Both take values in the range of [-1, 1].

ii) The Cophenetic Correlation Coefficient (CPCC): [-1, 1]

It measures distance between observations in the hierarchical clustering defined by the linkage.

iii) Hubert Statistic: [-1, 1]

A higher Hubert value corresponds to a better clustering of data.

c) Potential Category: Self-supervised learning

Self-supervised learning can generate the feature representations which can be used for clustering. Self-supervised learnings have no explicit labels in the dataset but use the input data itself as labels for learning. Palacio-Niño & Berzal did not include self-supervised framework, such as autoencoder and GANs, for their proposal in this section. Well, they are not clustering algorithm per se. Nevertheless, I will keep this particular domain pending for my note. Time will tell if any specialized metrics emerge from this particular domain.

Before closing the section of internal validation, here is a caveat from Gere (2023).

“Choosing the proper hierarchical clustering algorithm and number of clusters is always a key question … . In many cases, researchers do not publish any reason why it was chosen a given distance measure and linkage rule along with cluster numbers. The reason behind this could be that different cluster validation and comparison techniques give contradictory results in most cases. … The results of the validation methods deviate, suggesting that clustering depends heavily on the data set in question. Although Euclidean distance, Ward’s method seems a safe choice, testing, and validation of different clustering combinations is strongly suggested.”

Yes, it is a hard task.

Now, let’s move on to external validation.

B. Metrics used for External Validation

Repeatedly, a better score on internal criterion do not necessarily guarantee a better effectiveness of the resulting model. (Manning et al., 2008) In this context, it would be imperative for us to explore external validation.

In contrast to the internal validation, the external validation requires external class labels. When we have such external information — the ground-truth labels as an idea option or their surrogates as a practical option such as the results from benchmark models — the external validation objective of clustering converges to that of supervised learning by design.

The co-authors listed three classes of external validation methodologies: matching sets, peer-to-peer correlation, and information theory.

All of them, in one way or another, compare two sets of cluster results: the one obtained from the clustering algorithm under evaluation, call it C; the other, call it P, from an external reference — another benchmark algorithm or, if possible, the ground-truth classes.

- Matching Sets:

This class of methods identifies the relationship between each predicted cluster in C and its corresponding external reference classes of P. Some of them are popular validation metrics for supervised classification. I will just list up some metrics in this category here. Please follow their hyper-links for further details.

b) purity:

c) precision score:

d) recall score:

e) F-measure:

2. Peer-to-peer correlation:

This class of metrics is a group of similarity measures between a pair of equivalent partitions resulted from two different methods, C and P. Logically, the higher the similarity, the better the clustering result: in the sense that the predicted cluster classes resembles to the reference class labels.

a) Jaccard Score: [0, 1]

It compares the external reference class labels and the predicted labels by measuring the overlap between these 2 sets: the ratio of the size of the intersection to that of the union of the two labels sets.

A higher the metric, the more correlated these two sets are.

b) Rand Index: [0, 1]

Here is how to interpret the result of the metric.

- The value, 0: No agreement between the two sets of clustering results, C and P.

- The value, 1: Complete agreement between the two sets.

Here is a use case example of the metric:

https://www.geeksforgeeks.org/rand-index-in-machine-learning/?ref=ml_lbp

It measures “the geometric mean between of the precision and recall.”

Here is a use case example of the metric:

https://www.geeksforgeeks.org/ml-fowlkes-mallows-score/

3. Information Theory:

Now, we have another class of metrics from information theory. There are two basis for this class of metrics: entropy and mutual information.

Entropy is“a reciprocal measure of purity that allows us to measure the degree of disorder in the clustering results.”

Mutual Information measures “the reduction in uncertainty about the clustering results given knowledge of the prior partition.”

And we have the following metrics as examples.

4. Model Selection Metrics:

For external validation, I further would like to add model selection metrics below from another reference. (Karlsson et al., 2019)

- Akaike’s information criterion (AIC)

- the Hannan–Quinn information criterion (HQC) and

- the Bayesian information criterion (BIC)

We can use them to compare their values among multiple results. The result with the lowest values of these metrics is considered to be the best fit. Nevertheless, standalone these metrics cannot tell the quality of one single result.

Here is a precaution for the use of these model selection metrics. For any of those information criteria to be valid in evaluating models, it requires a certain set of preconditions: low multicollinearity, sufficient sample sizes, and good fitting of models with high R-squared metrics. When any of these conditions is not met, the reliability of these metrics could materially be impaired. (Karlsson et al., 2019)

That’s all for this article.

I would not profess that what I covered here is comprehensive or even a gold standard. Actually, there are different approaches. For example, R has a clustering validation package called clValid, which uses different approaches: from “internal”, “stability”, and “biological” modes. And I think that clValid is a wonderful tool.

Given that, I rather would hope that this article serves as a useful starting guide for novices to clustering analysis in shaping their own clustering evaluation framework.

Repeatedly, the intention of this article is to paint an overview of a potentially pragmatic evaluation framework for clustering under the theoretical constraints characterized by Kleinberg’s Impossible Theorem of Clustering.

At last, but not least, remember the following aphorism:

“All models are wrong, but some are useful”

This aphorism should continue resonating in our mind when we deal with any model. By the way, the aphorism is often associated with the renowned statistician of history, George E. P. Box.

Given the imperfect conditions that we live in, let’s promote together practical knowledge in the spirit of Aristotelian Phronesis.

Thanks for reading.

Michio Suginoo

References

- Davies_bouldin_score. (n.d.). Scikit-Learn. Retrieved June 20, 2024, from https://scikit-learn/stable/modules/generated/sklearn.metrics.davies_bouldin_score.html

- Gere, A. (2023). Recommendations for validating hierarchical clustering in consumer sensory projects. Current Research in Food Science, 6, 100522. https://doi.org/10.1016/j.crfs.2023.100522

- Karlsson, P. S., Behrenz, L., & Shukur, G. (2019). Performances of Model Selection Criteria When Variables are Ill Conditioned. Computational Economics, 54(1), 77–98. https://doi.org/10.1007/s10614-017-9682-8

- Kleinberg, J. (2002). An Impossibility Theorem for Clustering. Advances in Neural Information Processing Systems, 15.

- Manning, C. D., Raghavan, P., & Schütze, H. (2008). Introduction to Information Retrieval/ Flat Clustering/ Evaluation of clustering. Https://Nlp.Stanford.Edu/IR-Book. https://nlp.stanford.edu/IR-book/html/htmledition/evaluation-of-clustering-1.html

- Palacio-Niño, J.-O., & Berzal, F. (2019). Evaluation Metrics for Unsupervised Learning Algorithms (arXiv:1905.05667). arXiv. http://arxiv.org/abs/1905.05667

Beyond Kleinberg’s Impossibility Theorem of Clustering: A Pragmatic Clustering Evaluation Framework was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Beyond Kleinberg’s Impossibility Theorem of Clustering: A Pragmatic Clustering Evaluation Framework