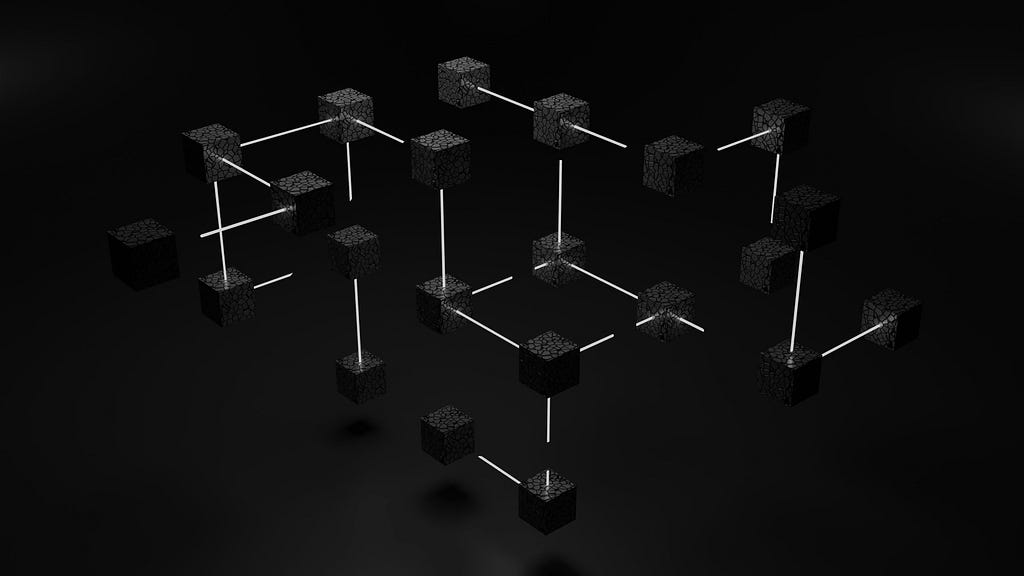

Before diving into the technical part of the article, let’s set the context and answer a question you might have: what is a knowledge graph?

To answer this, imagine that instead of storing knowledge in cabinets, we store it in a fabric net. Each fact, concept, or piece of information about people, places, events, or even abstract ideas is a knot, and the lines connecting the knots are the relationships they have with each other. This intricate web, my friends, is the essence of a knowledge graph.

Think of it like a bustling city map, not just showing streets but revealing the connections between landmarks, parks, and shops. Similarly, a knowledge graph doesn’t just store cold facts; it captures the rich tapestry of how things are linked. For example, you might learn that Marie Curie discovered radium, then follow a thread to see that radium is used in medical treatments, which in turn connects to hospitals and cancer research. See how one fact effortlessly leads to another, painting a bigger picture?
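To make the idea concrete, here is a minimal sketch in plain Python that stores the Marie Curie example as (source, relationship, target) triples and follows the thread from one fact to the next. The relationship wording and the `follow` helper are assumptions made up for illustration; they do not come from any library.

```python
from collections import defaultdict

# Each fact is a (source, relationship, target) triple; the wording of the
# relationships is invented for this illustration.
triples = [
    ("Marie Curie", "discovered", "radium"),
    ("radium", "used in", "medical treatments"),
    ("medical treatments", "connected to", "hospitals"),
    ("medical treatments", "connected to", "cancer research"),
]

# Index the outgoing edges of every node so we can walk the graph.
outgoing = defaultdict(list)
for source, relation, target in triples:
    outgoing[source].append((relation, target))


def follow(start):
    """Return every node reachable from `start`, in breadth-first order."""
    seen, queue = [], [start]
    while queue:
        node = queue.pop(0)
        for _relation, target in outgoing.get(node, []):
            if target not in seen:
                seen.append(target)
                queue.append(target)
    return seen


print(follow("Marie Curie"))
```

Starting from one knot, the walk reaches every fact connected to it, which is exactly the "one fact leads to another" behaviour described above.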

So why is this map-like way of storing knowledge so popular? Well, imagine searching for information online. Traditional methods often leave you with isolated bits and pieces, like finding only buildings on a map without knowing the streets that connect them. A knowledge graph, however, takes you on a journey, guiding you from one fact to another, like having a friendly guide whisper fascinating stories behind every corner of the information world. Interesting, right? I know.

Since I discovered this magic, it has held my attention, and I have explored and played around with many potential applications. In this article, I will show you how to build a pipeline that extracts audio from a video, transcribes that audio, and builds a knowledge graph from the transcription, allowing for a more nuanced and interconnected representation of the information within the video.

I will use Google Drive to upload the video sample and Google Colab to write the code. You also need access to the OpenAI API for this project. To keep things clear and easy for beginners, I will break the work into four steps: setting up Drive and Colab, extracting the audio from the video, transcribing the audio, and building the knowledge graph.

By the end of this article, you will construct a graph with the following schema.

Let’s dive right into it!

As mentioned, we will be using Google Drive and Colab. In the first cell, let’s connect Google Drive to Colab and create our directory folders (video_files, audio_files, text_files). The following code can get this done. (If you want to follow along with the code, I have uploaded all the code for this project on GitHub; you can access it from here.)

    # Install the required libraries
    !pip install pydub
    !pip install git+https://github.com/openai/whisper.git
    !sudo apt update && sudo apt install -y ffmpeg
    !pip install networkx matplotlib
    !pip install openai
    !pip install requests

    # Connect Google Drive to import video samples
    from google.colab import drive
    import os

    drive.mount('/content/drive')

    video_files = '/content/drive/My Drive/video_files'
    audio_files = '/content/drive/My Drive/audio_files'
    text_files = '/content/drive/My Drive/text_files'

    folders = [video_files, audio_files, text_files]
    for folder in folders:
        # Create the folder if it does not exist yet
        os.makedirs(folder, exist_ok=True)

Or you can create the folders manually and upload your video sample to the “video_files” folder, whichever is easier for you.

Now we have our three folders with a video sample in the “video_files” folder to test the code.

The next thing we want to do is to import our video and extract the audio from it. We can use the Pydub library, which is a high-level audio processing library that can help us to do that. Let’s see the code and then explain it underneath.

    from pydub import AudioSegment

    # Extract audio from videos
    for video_file in os.listdir(video_files):
        if video_file.endswith('.mp4'):
            video_path = os.path.join(video_files, video_file)
            audio = AudioSegment.from_file(video_path, format="mp4")
            # Save audio as WAV
            audio.export(os.path.join(audio_files, f"{video_file[:-4]}.wav"), format="wav")

After installing our package pydub, we imported the AudioSegment class from the Pydub library. Then, we created a loop that iterates through all the video files in the “video_files” folder we created earlier and passes each file through AudioSegment.from_file to load the audio from the video file. The loaded audio is then exported as a WAV file using audio.export and saved in the specified “audio_files” folder with the same name as the video file but with the extension .wav.
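The slice `video_file[:-4]` works because “.mp4” is exactly four characters long. If you later want to accept other extensions, one possible variation is to derive the output name with `os.path.splitext` instead. The helper name `wav_path_for` and the example paths below are hypothetical, used only to show the idea.

```python
import os


def wav_path_for(video_path, audio_dir):
    """Build the .wav path that corresponds to a video file (hypothetical helper)."""
    # splitext removes only the final extension, whatever its length.
    stem = os.path.splitext(os.path.basename(video_path))[0]
    return os.path.join(audio_dir, stem + ".wav")


print(wav_path_for("/videos/lecture.part1.mp4", "/audio"))
print(wav_path_for("/videos/interview.webm", "/audio"))
```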

At this point, you can go to the “audio_files” folder in Google Drive where you will see the extracted audio.

In the third step, we will transcribe the audio file to text and save it as a .txt file in the “text_files” folder. Here I used the Whisper ASR (Automatic Speech Recognition) system from OpenAI. I chose it because it is easy to use and fairly accurate, and it comes in several model sizes that trade speed for accuracy. The more accurate the model, the larger it is and the slower it is to load, so I will use the medium one just for demonstration. To make the code cleaner, let’s create a function that transcribes one audio file and then loop over all the audio files in our directory:

    import re
    import subprocess

    # Function to transcribe one audio file and save the output as a .txt file
    def transcribe_and_save(audio_path, text_files, model='medium.en'):
        # Construct the Whisper command as an argument list (no shell quoting needed)
        whisper_command = ["whisper", audio_path, "--model", model]
        # Run the Whisper command and capture what it prints
        transcription = subprocess.check_output(whisper_command, text=True)
        # Remove the timestamps, then clean and join the sentences
        output_without_time = re.sub(r'\[\d+:\d+\.\d+ --> \d+:\d+\.\d+\] ', '', transcription)
        sentences = [line.strip() for line in output_without_time.split('\n') if line.strip()]
        joined_text = ' '.join(sentences)
        # Create the corresponding text file name
        audio_file_name = os.path.basename(audio_path)
        text_file_name = os.path.splitext(audio_file_name)[0] + '.txt'
        file_path = os.path.join(text_files, text_file_name)
        # Save the output as a txt file
        with open(file_path, 'w') as file:
            file.write(joined_text)
        print(f'Text for {audio_file_name} has been saved to: {file_path}')

    # Transcribe all the audio files in the directory
    for audio_file in os.listdir(audio_files):
        if audio_file.endswith('.wav'):
            # Use a separate name so the audio_files folder variable is not overwritten
            audio_path = os.path.join(audio_files, audio_file)
            transcribe_and_save(audio_path, text_files)

Libraries used: re, for cleaning the output with regular expressions, and subprocess, for running the Whisper command from Python.

We created a Whisper command and saved it in a variable to keep the call readable. After that, we used subprocess.check_output to run the Whisper command and save the resulting transcription in the transcription variable. The transcription at this point is not clean (you can check this by printing the transcription variable; it contains timestamps and a couple of lines that are not relevant to the transcription), so we added cleaning code that removes the timestamps using re.sub and joins the sentences together. Finally, we created a text file in the “text_files” folder with the same name as the audio file and saved the cleaned transcription in it.
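To see what the cleaning step does in isolation, here is a small sketch that applies the same timestamp pattern to a sample string. The sample text is hypothetical and only assumed to resemble the timestamped lines Whisper prints; the `clean_transcript` name is also made up for this example.

```python
import re

# Hypothetical sample shaped like timestamped command-line output.
raw_output = (
    "[00:00.000 --> 00:04.500]  Knowledge graphs store facts as connections.\n"
    "[00:04.500 --> 00:09.000]  Each node is an entity.\n"
)

# Matches a leading "[mm:ss.mmm --> mm:ss.mmm] " prefix.
TIMESTAMP = re.compile(r"\[\d+:\d+\.\d+ --> \d+:\d+\.\d+\] ")


def clean_transcript(text):
    """Strip timestamp prefixes and join the remaining lines with spaces."""
    without_time = TIMESTAMP.sub("", text)
    lines = [line.strip() for line in without_time.split("\n") if line.strip()]
    return " ".join(lines)


print(clean_transcript(raw_output))
```

Keeping the cleanup in its own function makes it easy to try on a pasted snippet before running a full transcription, which can take a while with the larger models.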

Now if you go to the “text_files” folder, you can see the text file that contains the transcription. Woah, step 3 done successfully! Congratulations!

This is the crucial part, and maybe the longest. I will follow a modular approach with five functions to handle this task. Before that, let’s import the libraries and modules we need: requests for making HTTP requests, json for handling JSON, pandas for working with data frames, and networkx and matplotlib for creating and visualizing graphs. We also set the global constants, which are variables used throughout the code: API_ENDPOINT is the endpoint for OpenAI’s API, api_key is where the OpenAI API key will be stored, and prompt_text stores the template used as input for the OpenAI prompt. All of this is done in the following code:

    import requests
    import json
    import pandas as pd
    import networkx as nx
    import matplotlib.pyplot as plt

    # Global constants: API endpoint, API key, prompt text
    # The completions endpoint matches the "prompt" payload and the
    # ["choices"][0]["text"] parsing used in the functions below
    API_ENDPOINT = "https://api.openai.com/v1/completions"
    api_key = "your_openai_api_key_goes_here"
    prompt_text = """Given a prompt, extrapolate as many relationships as possible from it and provide a list of updates.
    If an update is a relationship, provide [ENTITY 1, RELATIONSHIP, ENTITY 2]. The relationship is directed, so the order matters.
    Example:
    prompt: Sun is the source of solar energy. It is also the source of Vitamin D.
    updates:
    [["Sun", "source of", "solar energy"],["Sun","source of", "Vitamin D"]]
    prompt: $prompt
    updates:"""
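The `$prompt` marker in the template is a plain placeholder that gets swapped for the transcript later on. As a minimal sketch of that substitution, the example below uses Python's `string.Template` on a shortened stand-in for the prompt. The `fill_prompt` name and the sample sentences are assumptions for illustration.

```python
from string import Template

# Shortened stand-in for the full prompt; only the $prompt placeholder matters here.
template_text = "prompt: $prompt\nupdates:"


def fill_prompt(template, transcript):
    """Return a new prompt string; the template itself is left untouched."""
    return Template(template).safe_substitute(prompt=transcript)


first = fill_prompt(template_text, "Sun is a star.")
second = fill_prompt(template_text, "Radium is an element.")
print(first)
print(second)
```

Because the function returns a new string each time, the same template can be reused for every transcript in the folder.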

Then let’s continue with breaking down the structure of our functions:

The first function, create_graph(), creates a graph visualization using the networkx library. It takes a DataFrame df and a dictionary of edge labels rel_labels (both produced by the function described after it) as input. It then uses the DataFrame to create a directed graph, draws it with matplotlib with some customization, and displays the graph we need.

    # Graph Creation Function
    def create_graph(df, rel_labels):
        G = nx.from_pandas_edgelist(df, "source", "target",
                                    edge_attr=True, create_using=nx.MultiDiGraph())
        plt.figure(figsize=(12, 12))
        pos = nx.spring_layout(G)
        nx.draw(G, with_labels=True, node_color='skyblue', edge_cmap=plt.cm.Blues, pos=pos)
        nx.draw_networkx_edge_labels(
            G,
            pos,
            edge_labels=rel_labels,
            font_color='red'
        )
        plt.show()

The DataFrame df and the edge labels rel_labels are the output of the next function, preparing_data_for_graph(). This function takes the OpenAI api_response (returned by the function described after it) as input and extracts the entity-relation triples (source, target, edge) from it. Here we use the json module to parse the response and obtain the relevant data, then filter out elements that have missing data. After that, we build a knowledge graph DataFrame kg_df from the triples and, finally, create a dictionary (relation_labels) mapping pairs of nodes to their corresponding edge labels. The function returns the DataFrame and the dictionary.

    # Data Preparation Function
    def preparing_data_for_graph(api_response):
        # Extract the response text
        response_text = api_response.text
        entity_relation_lst = json.loads(json.loads(response_text)["choices"][0]["text"])
        entity_relation_lst = [x for x in entity_relation_lst if len(x) == 3]
        source = [i[0] for i in entity_relation_lst]
        target = [i[2] for i in entity_relation_lst]
        relations = [i[1] for i in entity_relation_lst]
        kg_df = pd.DataFrame({'source': source, 'target': target, 'edge': relations})
        relation_labels = dict(zip(zip(kg_df.source, kg_df.target), kg_df.edge))
        return kg_df,relation_labels
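The double json.loads call can look odd at first: the outer call parses the API response body, and the inner call parses the model's answer, which is itself a JSON list stored as text. The sketch below walks through that on a hypothetical response body using only the standard library. The `extract_triples` name and the sample body are assumptions, and the second triple is deliberately incomplete to show the filter at work.

```python
import json

# Hypothetical response body: the model's answer is a JSON list stored as a
# string under choices[0].text, as the function above expects.
sample_body = json.dumps({
    "choices": [
        {"text": ' [["Sun", "source of", "solar energy"], ["Sun", "source of"]]'}
    ]
})


def extract_triples(body):
    """Return (triples, labels) from a response body of the assumed shape."""
    answer = json.loads(body)["choices"][0]["text"]
    # Keep only complete [source, relation, target] entries.
    triples = [t for t in json.loads(answer) if len(t) == 3]
    # Map each (source, target) pair to its relationship label.
    labels = {(src, dst): rel for src, rel, dst in triples}
    return triples, labels


triples, labels = extract_triples(sample_body)
print(triples)
print(labels)
```

Note that keying the labels by (source, target) means a second relationship between the same two nodes would overwrite the first label, which is also true of the dictionary built in the article's function.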

The third function is call_gpt_api(), which is responsible for making a POST request to the OpenAI API and returning the api_response. Here we construct the data payload with the prompt and parameters such as the model (in this case, gpt-3.5-turbo-instruct), max_tokens, stop, and temperature. We then send the request using requests.post and return the response. I have also included simple error handling: the try block contains the code that might raise an exception during the request, so if one occurs (for example, due to network issues or API errors), the code in the except block prints an error message.

    # OpenAI API Call Function
    def call_gpt_api(api_key, prompt_text):
        try:
            data = {
                "model": "gpt-3.5-turbo-instruct",
                "prompt": prompt_text,
                "max_tokens": 3000,
                "stop": "\n",
                "temperature": 0
            }
            headers = {"Content-Type": "application/json", "Authorization": "Bearer " + api_key}
            r = requests.post(url=API_ENDPOINT, headers=headers, json=data)
            print("Response content:", r.text)
            # Return the response object; preparing_data_for_graph() reads its .text
            return r
        except Exception as e:
            print("Error:", e)
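If you want to inspect what will be sent before spending any API credits, one option is to build the headers and payload in a separate step. The sketch below does only that and sends nothing. The `build_request` helper, the `OPENAI_API_KEY` environment variable name, and the placeholder key are assumptions for this example; the payload fields mirror the ones described in the text.

```python
import os


def build_request(prompt, api_key=None):
    """Return (headers, payload) for the request without sending anything."""
    # OPENAI_API_KEY is an assumed environment variable name for this sketch.
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    if not key:
        raise ValueError("No API key provided")
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer " + key,
    }
    payload = {
        "model": "gpt-3.5-turbo-instruct",
        "prompt": prompt,
        "max_tokens": 3000,
        "stop": "\n",        # stop at the first newline, so the answer is one line
        "temperature": 0,    # keep the output as deterministic as possible
    }
    return headers, payload


# "sk-example" is a placeholder, not a real key.
headers, payload = build_request("prompt: Sun is a star.\nupdates:", api_key="sk-example")
print(payload["model"], headers["Authorization"])
```

Reading the key from the environment rather than hard-coding it in the notebook also makes it less likely that the key ends up in a shared Colab file.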

The second-to-last function is main(), which orchestrates the main flow of the script. First, it reads the contents of a text file from the “text_files” folder and saves them in the variable kb_text. It then replaces the placeholder ($prompt) in the global prompt_text template with kb_text, keeping the result in a local variable so the template stays intact for the next file. Next, it calls call_gpt_api() with the api_key and the filled prompt to get the OpenAI API response. The response is passed to preparing_data_for_graph() to get the DataFrame and the edge labels dictionary, and finally these two values are passed to create_graph() to build the knowledge graph.

    # Main function
    def main(text_file_path, api_key):
        with open(text_file_path, 'r') as file:
            kb_text = file.read()
        # Fill the placeholder without modifying the global template
        filled_prompt = prompt_text.replace("$prompt", kb_text)
        api_response = call_gpt_api(api_key, filled_prompt)
        df, rel_labels = preparing_data_for_graph(api_response)
        create_graph(df, rel_labels)

Finally, we have the start() function, which iterates through all the text files in our “text_files” folder (in case we have more than one), builds the path of each file, and passes it along with the api_key to the main function to do its job.

    # Start Function
    def start():
        for filename in os.listdir(text_files):
            if filename.endswith(".txt"):
                # Construct the full path to the text file
                text_file_path = os.path.join(text_files, filename)
                main(text_file_path, api_key)

If you have correctly followed the steps, after running the start() function, you should see a similar visualization.

You can of course save this knowledge graph in the Neo4j database and take it further.
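As a rough sketch of what that could look like, the example below turns triples into a parameterized Cypher statement plus one parameter dictionary per triple. It only prepares the data and does not connect to a database. The `Entity` label, the `RELATED` relationship type, and the `to_cypher_params` helper are illustrative assumptions, not something the article's code defines.

```python
# Hypothetical triples in the same [source, relation, target] shape the prompt asks for.
triples = [
    ["Sun", "source of", "solar energy"],
    ["Sun", "source of", "Vitamin D"],
]

# MERGE creates each node and relationship only if it does not already exist.
# The Entity label and RELATED relationship type are illustrative choices.
CYPHER = (
    "MERGE (a:Entity {name: $source}) "
    "MERGE (b:Entity {name: $target}) "
    "MERGE (a)-[:RELATED {type: $relation}]->(b)"
)


def to_cypher_params(rows):
    """Pair each triple with the parameter dict for the CYPHER statement."""
    return [
        {"source": src, "relation": rel, "target": dst}
        for src, rel, dst in rows
    ]


params = to_cypher_params(triples)
for p in params:
    print(CYPHER, p)
```

Each statement and its parameters would then be handed to whichever Neo4j client you use; that part is left out here because it needs a running database.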

NOTE: This workflow ONLY applies to videos you own or whose terms allow this kind of download/processing.

Knowledge graphs use semantic relationships to represent data, enabling a more nuanced and context-aware understanding. This semantic richness allows for more sophisticated querying and analysis, as the relationships between entities are explicitly defined.

In this article, I outline detailed steps on how to build a pipeline that involves extracting audio from videos, transcribing with OpenAI’s Whisper ASR, and crafting a knowledge graph. As someone interested in this field, I hope that this article makes it easier to understand for beginners, demonstrating the potential and versatility of knowledge graph applications.

And as always, the whole code is available on GitHub.

A Beginner’s Guide to Building Knowledge Graphs from Videos was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

A Beginner’s Guide to Building Knowledge Graphs from Videos

Go Here to Read this Fast! A Beginner’s Guide to Building Knowledge Graphs from Videos

The landscape of Apple’s Mac lineup in 2024 and beyond is shaping to be expansive and innovative. From the MacBook Air and Pro models poised to embrace the M3 chip’s efficiencies to the Mac mini’s more powerful internals, Apple’s roadmap has something for professionals and casual users alike.

We’ve rounded up the best Sonos deals that are running now through Super Bowl Sunday, as well as top TV discounts, in the sections below.

Super Bowl Sonos discounts

Go Here to Read this Fast! Bucks vs Jazz live stream: Can you watch the NBA game for free?

Originally appeared here:

Bucks vs Jazz live stream: Can you watch the NBA game for free?

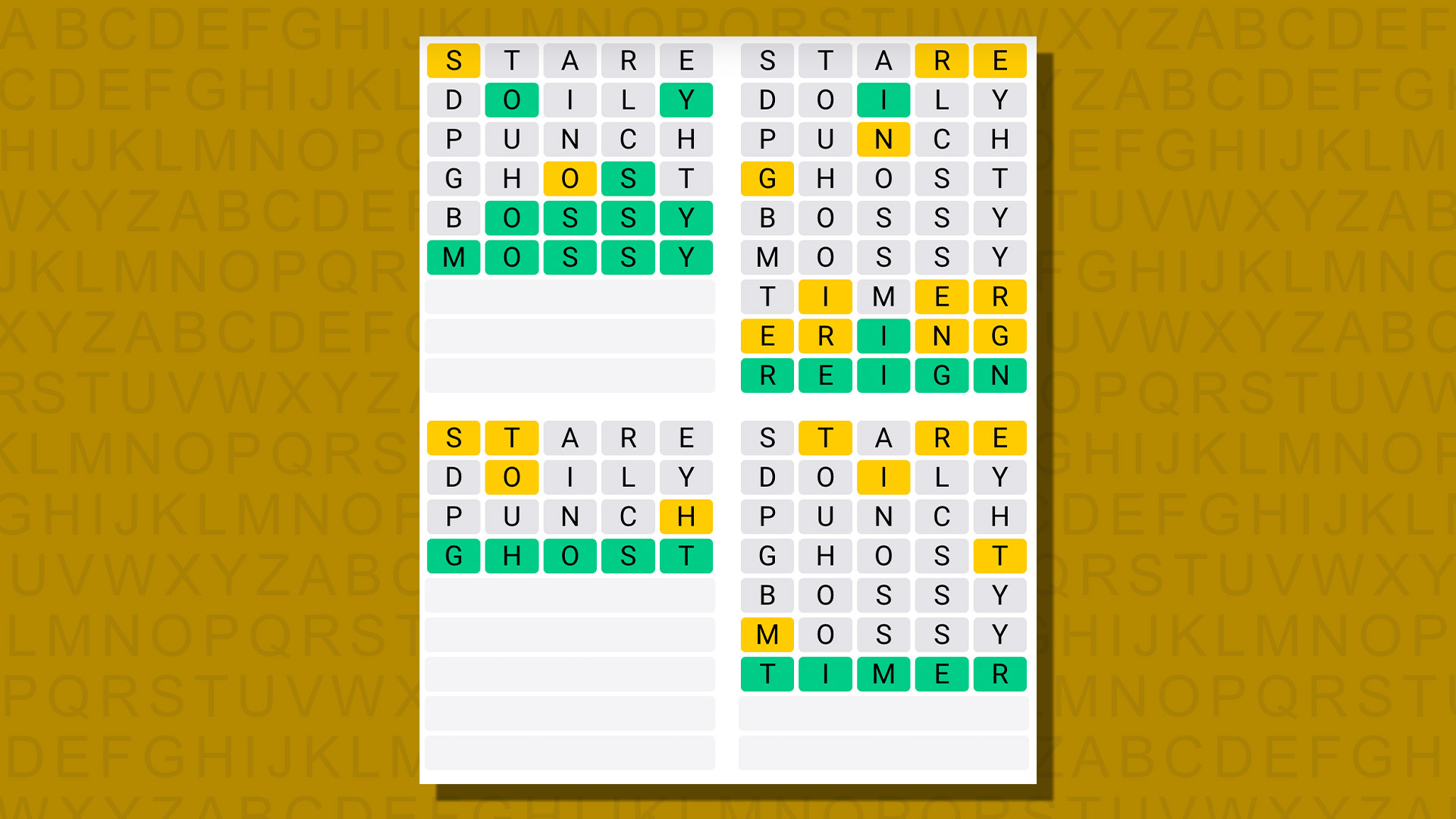

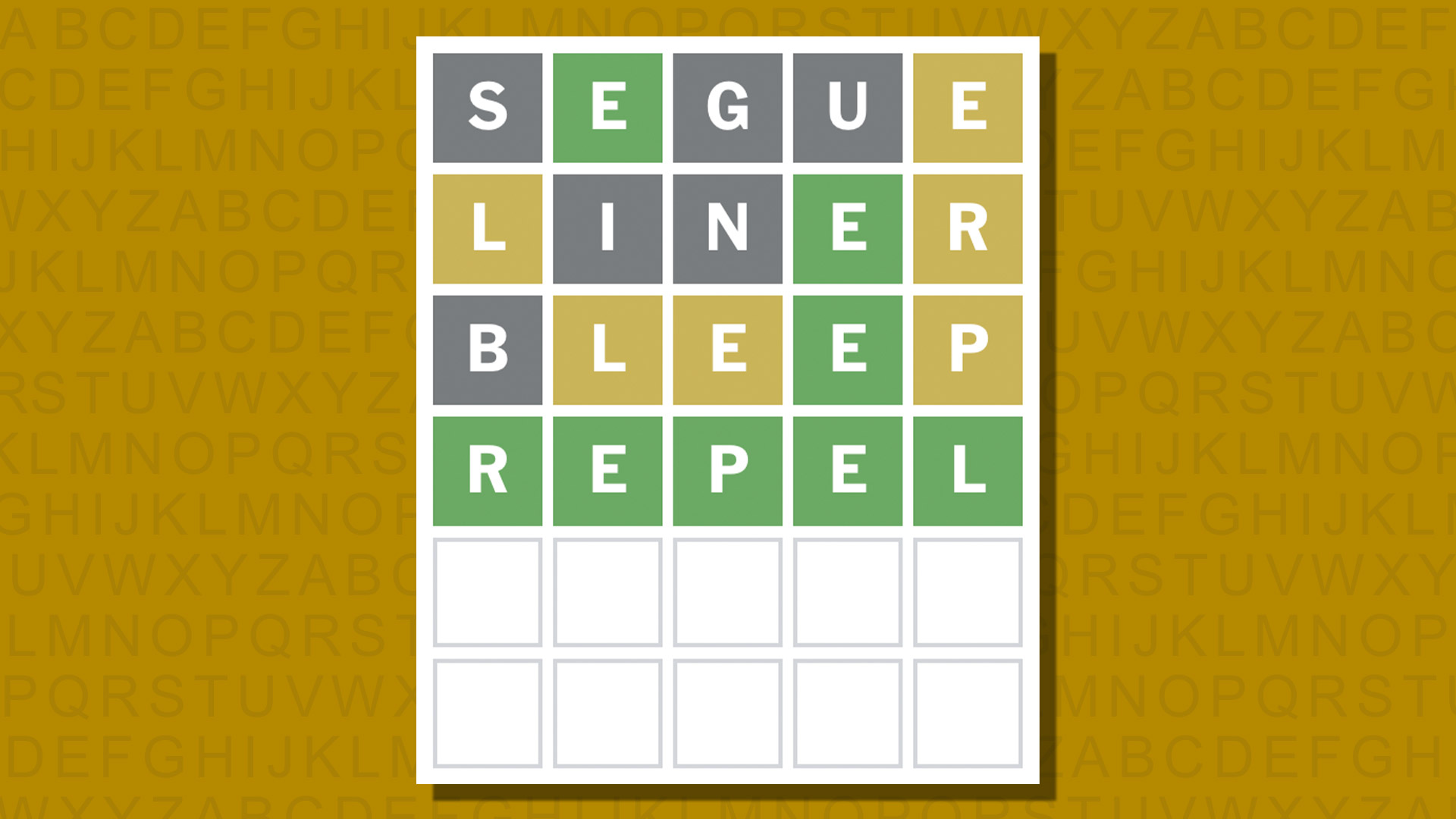

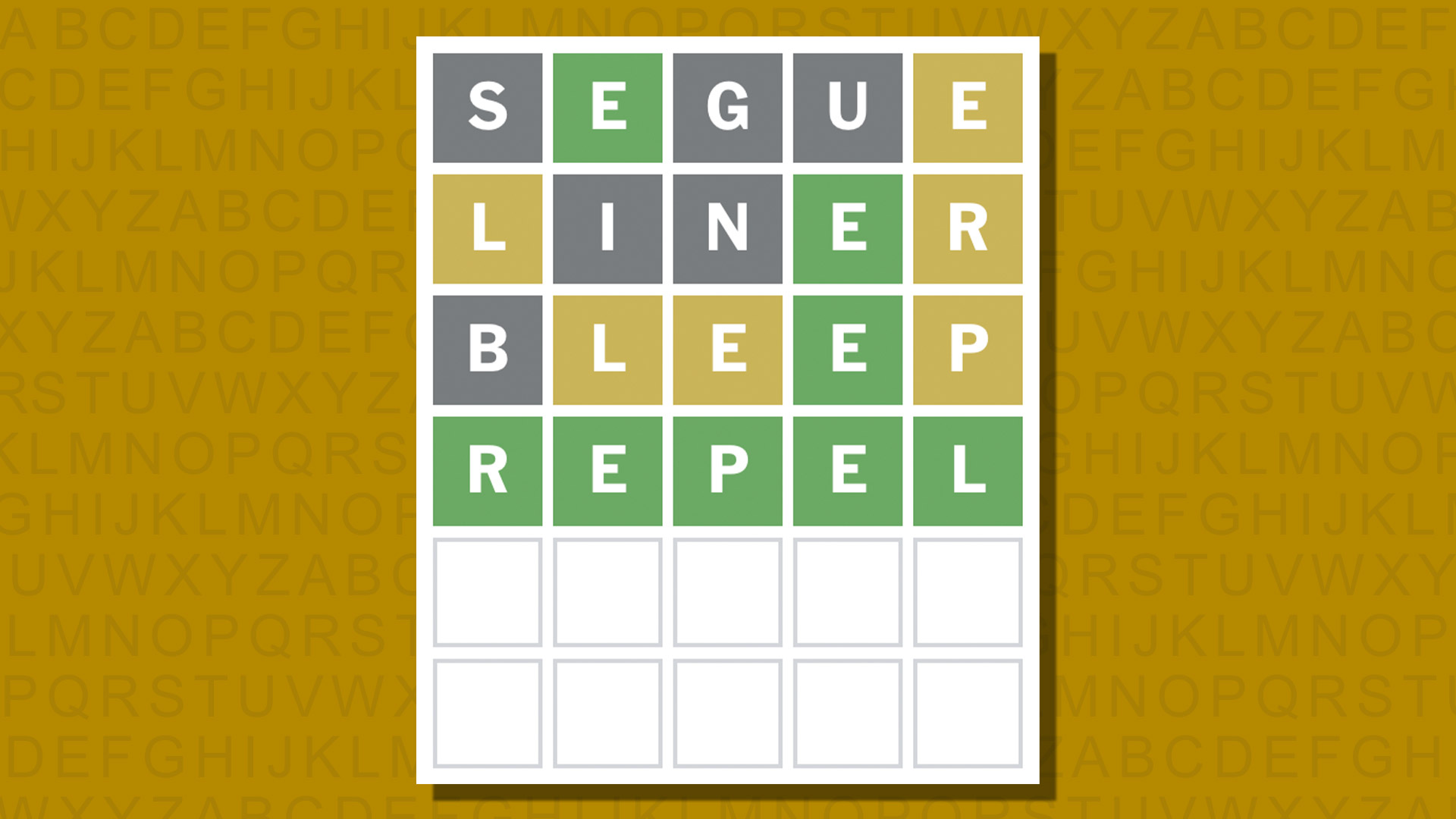

Go Here to Read this Fast! Quordle today – hints and answers for Monday, February 5 (game #742)

Originally appeared here:

Quordle today – hints and answers for Monday, February 5 (game #742)

The stablecoin market cap added $9 billion to the crypto market.

USDT’s market cap hit a fresh milestone.

The stablecoin market is showing signs of recovery, and its resurgence could play a

The post How USDT led the latest stablecoin resurgence appeared first on AMBCrypto.

Go here to Read this Fast! How USDT led the latest stablecoin resurgence

Originally appeared here:

How USDT led the latest stablecoin resurgence

Go Here to Read this Fast! Clippers vs Heat live stream: Can you watch the NBA game for free?

Originally appeared here:

Clippers vs Heat live stream: Can you watch the NBA game for free?