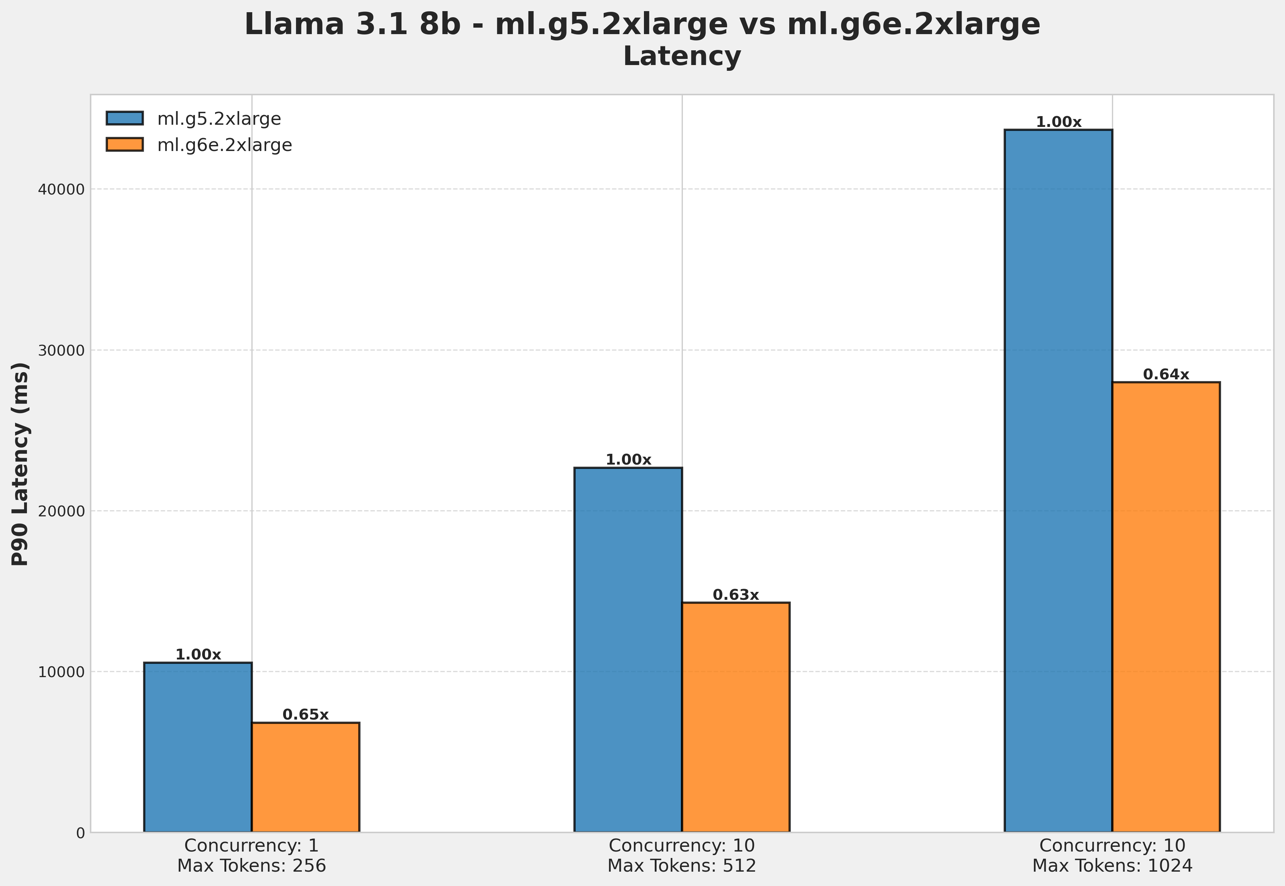

G6e instances on Amazon SageMaker make it possible to deploy a wide variety of open source models cost-effectively. With greater memory capacity, higher performance, and competitive pricing, they are a compelling option for organizations looking to deploy and scale AI applications. Their ability to host larger models, support longer context lengths, and sustain high throughput makes them particularly well suited to modern AI workloads.

Originally appeared here:

Amazon SageMaker Inference now supports G6e instances
