AI Agents for deploying, configuring and monitoring Networks

AI agents have been all the rage in 2024, and rightfully so. Unlike traditional AI models or direct interactions with Large Language Models (LLMs), which provide responses based on static training data, AI agents are dynamic entities that can perceive, reason (through prompting techniques), and act autonomously within their operational domains. Their ability to adapt and optimize processes makes them invaluable in fields that require intricate decision-making and real-time responsiveness, such as network deployment, testing, monitoring and debugging. In the near future we will likely see wide adoption of AI agents across industries, especially the networking industry.

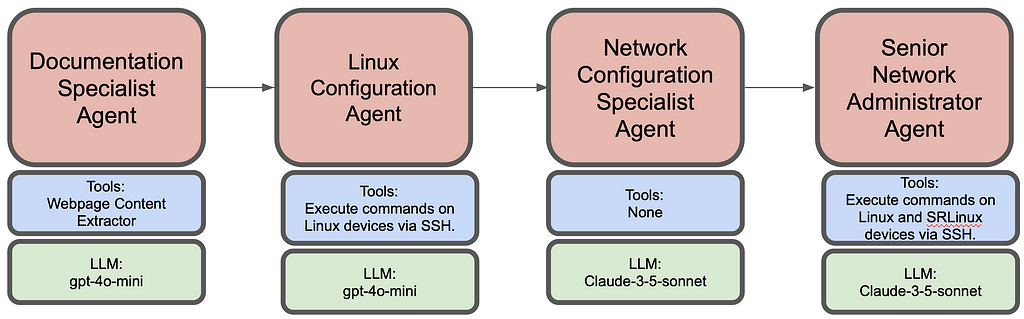

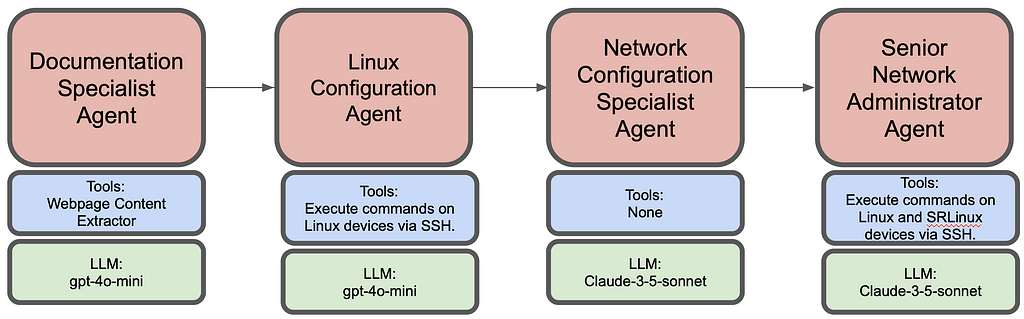

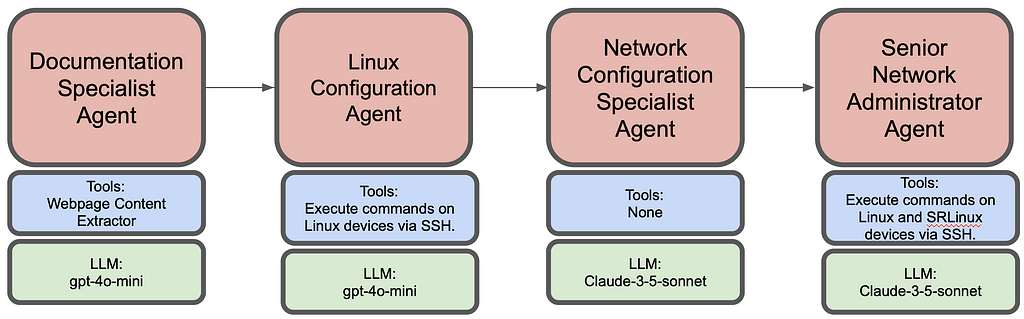

Here I demonstrate network deployment, configuration and monitoring via AI agents. The agentic workflow consists of four agents. The first is tasked with getting the installation steps from the https://learn.srlinux.dev/get-started/lab/ website. The second executes these steps. The third comes up with the relevant node configurations based on the network topology, and the fourth applies the configuration and verifies end-to-end connectivity.

For details on the code playbook, please check my GitHub repository: AI-Agents-For-Networking.

Deploying a network with CrewAI’s MAS (multi-agent system)

For this use case, the entire topology was deployed on a pre-built Debian 12 UTM VM (as a sandbox environment). This was chosen deliberately, as it comes with all the relevant packages, such as containerlab and docker, pre-installed. Containerlab makes it easy to spin up various container-based networking topologies. The following topology was chosen, consisting of Linux containers and Nokia's SR Linux containers:

Client1 --- Leaf1 --- Spine1 --- Leaf2 --- Client2

Here Client1 and Client2 are Linux containers, Leaf1 and Leaf2 are SR Linux containers of type IXR-D2L, and Spine1 is an SR Linux container of type IXR-D5.
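To make the topology concrete, here is a minimal sketch of how it could be described in plain Python. The `NODES` and `LINKS` names and the `shortest_path` helper are my own illustrative choices, not part of the repo or of containerlab; the sketch only confirms that traffic between the two clients must cross both leaves and the spine.

```python
from collections import deque

# Hypothetical in-memory description of the lab; names mirror the topology above.
NODES = {
    "client1": "linux",
    "leaf1": "ixr-d2l",
    "spine1": "ixr-d5",
    "leaf2": "ixr-d2l",
    "client2": "linux",
}
LINKS = [
    ("client1", "leaf1"),
    ("leaf1", "spine1"),
    ("spine1", "leaf2"),
    ("leaf2", "client2"),
]


def shortest_path(src, dst, links=LINKS):
    """Breadth-first search over undirected links; returns a node list or None."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for nxt in neighbors.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


print(shortest_path("client1", "client2"))
```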

The workflow of each agent is summarized below:

1. Document Specialist Agent

Goes over the given website URL, extracts the installation steps and the network topology deployment steps, and finds the node connection instructions. The following sample code creates the agent, its task and its custom tool:

```python
import requests
from crewai import Agent, Task
# Import path may differ by CrewAI version (older releases: from crewai_tools import BaseTool)
from crewai.tools import BaseTool


# Custom tool to extract content from a given webpage
class QuickstartExtractor(BaseTool):
    name: str = "WebPage Content extractor"
    description: str = "Get all the content from a webpage"

    def _run(self) -> str:
        url = "https://learn.srlinux.dev/get-started/lab/"
        # A timeout keeps the agent from hanging on an unresponsive site
        response = requests.get(url, timeout=30)
        response.raise_for_status()
        return response.text


# Create doc specialist Agent
doc_specialist = Agent(
    role="Documentation Specialist",
    goal="Extract and organize containerlab quickstart steps",
    backstory="""Expert in technical documentation with focus on clear,
    actionable installation and setup instructions.""",
    verbose=True,
    tools=[QuickstartExtractor()],
    allow_delegation=False,
)

# Task for doc specialist Agent
doc_task = Task(
    description=(
        "From the containerlab quickstart guide:\n"
        "1. Extract installation steps \n"
        "2. Identify topology deployment steps \n"
        "3. Find node connection instructions \n"
        "Present in a clear, sequential format.\n"
    ),
    expected_output="List of commands",
    agent=doc_specialist,
)
```
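The tool above returns the raw HTML of the page, so the LLM also sees markup, scripts and styles. As an optional refinement, here is a minimal sketch that reduces a page to its visible text using only the standard library. The `html_to_text` helper is hypothetical and not part of the repo; whether it helps depends on the page and the model.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML document, one chunk per line."""
    parser = _TextCollector()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)
```

Inside the tool's `_run`, one could then return `html_to_text(response.text)` instead of `response.text`.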

Once run, the agent produces output like the following (Final Answer):

# Agent: Documentation Specialist

## Final Answer:

```bash
# Installation Steps
curl -sL https://containerlab.dev/setup | sudo -E bash -s "all"

# Topology Deployment Steps
sudo containerlab deploy -c -t https://github.com/srl-labs/srlinux-getting-started

# Node Connection Instructions
ssh leaf1
```

As can be seen, the agent has clearly extracted the desired steps from the URL. This output is then passed on to the next agent.
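In CrewAI the hand-off between agents happens through the task context, so no parsing is needed. Still, if you want to inspect or validate the commands before they are executed, a small helper can pull them out of the fenced block in the Final Answer. This is a minimal sketch; `extract_commands` is a hypothetical helper of mine, not something from the repo.

```python
import re

# Built indirectly so this sketch can itself sit inside a fenced block.
FENCE = "`" * 3
FENCE_RE = re.compile(FENCE + r"(?:bash|sh|shell)?[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_commands(final_answer: str) -> list[str]:
    """Return the non-comment, non-empty lines found in fenced shell blocks."""
    commands = []
    for block in FENCE_RE.findall(final_answer):
        for line in block.splitlines():
            line = line.strip()
            if line and not line.startswith("#"):
                commands.append(line)
    return commands
```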

Note: Adding the code for all the agents would make this article lengthy. Please check my GitHub repo for more details: AI-Agents-For-Networking.

2. Linux Configuration Agent

Executes the commands obtained from the Document Specialist agent. It is given the login details for the UTM VM and instructed to execute the commands on that VM. It checks the health of the VM before performing any containerlab/docker commands, and it finishes by checking that all docker containers are running.
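The agent performs that last check by reading the `docker ps` output itself. For comparison, a deterministic version of the same check could look like the sketch below. It assumes the container names were collected with `docker ps --format '{{.Names}}'`; the `missing_containers` helper and the `EXPECTED` set are illustrative names of mine.

```python
# Node names taken from the topology used in this article.
EXPECTED = {"client1", "client2", "leaf1", "leaf2", "spine1"}


def missing_containers(docker_ps_names: str, expected: set[str] = EXPECTED) -> set[str]:
    """Return the expected containers that do not appear in the running list.

    `docker_ps_names` is assumed to hold one container name per line,
    as produced by: docker ps --format '{{.Names}}'
    """
    running = {line.strip() for line in docker_ps_names.splitlines() if line.strip()}
    return expected - running
```

An empty result means every node of the lab is up; anything else names the containers to investigate.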

The output (Final Answer) from this agent shows successful execution of the VM health checks and of the containerlab and docker commands:

# Agent: Linux Command Executor and Docker Orchestrator

## Final Answer:

1. **System Uptime Check**:

- Output: `14:56:17 up 1 day, 20:25, 60 users, load average: 0.01, 0.39, 0.86`

2. **Disk Usage Check**:

- Output:

```
Filesystem                   Size  Used Avail Use% Mounted on
udev                         5.9G     0  5.9G   0% /dev
tmpfs                        1.2G  1.3M  1.2G   1% /run
/dev/mapper/debian--vg-root   61G  5.7G   52G  10% /
tmpfs                        5.9G     0  5.9G   0% /dev/shm
tmpfs                        5.0M     0  5.0M   0% /run/lock
/dev/sda2                    456M   92M  340M  22% /boot
/dev/sda1                    511M  5.9M  506M   2% /boot/efi
tmpfs                        1.2G     0  1.2G   0% /run/user/1000
```

3. **Running Processes Check**:

- Output: (truncated for brevity)

```
USER         PID %CPU %MEM    VSZ   RSS TTY      STAT START   TIME COMMAND
root           1  0.0  0.1 168128 12792 ?        Ss   Nov25   1:28 /sbin/init
...
debian    106849  0.0  0.0   8244  4992 pts/0    Ss+  Nov25   0:09 -bash
```

4. **Network Connectivity Check**:

- Output:

```
PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.
64 bytes from 8.8.8.8: icmp_seq=1 ttl=116 time=15.4 ms
...
--- 8.8.8.8 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3012ms
rtt min/avg/max/mdev = 15.316/16.778/20.466/2.142 ms
```

5. **Containerlab Installation Check**:

- Containerlab is already installed and is at the latest version (0.59.0).

6. **Topology Deployment Output**:

- Output of the deployed topology:

```
+---+---------+--------------+------------------------------------+---------------+---------+----------------+----------------------+
| # | Name    | Container ID | Image                              | Kind          | State   | IPv4 Address   | IPv6 Address         |
+---+---------+--------------+------------------------------------+---------------+---------+----------------+----------------------+
| 1 | client1 | ab894528a0e9 | ghcr.io/srl-labs/network-multitool | linux         | running | 172.20.20.4/24 | 3fff:172:20:20::4/64 |
| 2 | client2 | 6f9f0822be5d | ghcr.io/srl-labs/network-multitool | linux         | running | 172.20.20.2/24 | 3fff:172:20:20::2/64 |
| 3 | leaf1   | e7a1a3874600 | ghcr.io/nokia/srlinux:24.7.2       | nokia_srlinux | running | 172.20.20.3/24 | 3fff:172:20:20::3/64 |
| 4 | leaf2   | ed4399b8f7d6 | ghcr.io/nokia/srlinux:24.7.2       | nokia_srlinux | running | 172.20.20.5/24 | 3fff:172:20:20::5/64 |
| 5 | spine1  | ada601805590 | ghcr.io/nokia/srlinux:24.7.2       | nokia_srlinux | running | 172.20.20.6/24 | 3fff:172:20:20::6/64 |
+---+---------+--------------+------------------------------------+---------------+---------+----------------+----------------------+
```

7. **List of Running Docker Containers**:

- Output:

```
CONTAINER ID   IMAGE                                      COMMAND                  CREATED         STATUS         PORTS                                          NAMES
ada601805590   ghcr.io/nokia/srlinux:24.7.2               "/tini -- fixuid -q …"   4 minutes ago   Up 4 minutes                                                  spine1
6f9f0822be5d   ghcr.io/srl-labs/network-multitool         "/bin/sh /docker/ent…"   4 minutes ago   Up 4 minutes   22/tcp, 80/tcp, 443/tcp, 1180/tcp, 11443/tcp   client2
e7a1a3874600   ghcr.io/nokia/srl-labs/network-multitool   "/bin/sh /docker/ent…"   4 minutes ago   Up 4 minutes                                                  leaf1
ab894528a0e9   ghcr.io/nokia/srl-labs/network-multitool   "/bin/sh /docker/ent…"   4 minutes ago   Up 4 minutes                                                  client1
ed4399b8f7d6   ghcr.io/nokia/srlinux:24.7.2               "/tini -- fixuid -q …"   4 minutes ago   Up 4 minutes                                                  leaf2
```

All tasks completed successfully with the appropriate outputs!

3. Network Configuration Specialist Agent

The job of this agent is to understand the topology, i.e. the number of nodes and their connectivity. It then needs to come up with an IP allocation scheme and generate the relevant interface and routing configuration for the Linux containers and the SR Linux containers in line with that scheme.

For the SR Linux configuration, an example config was given in the prompt. This example could also have been obtained from a configuration guide in PDF format, retrieved by the agent via RAG (given the right tools).
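The agent derives its IP plan by reasoning over the prompt, so there is no allocation code in the workflow. To show what a "one subnet per link" scheme amounts to, here is a minimal deterministic sketch using the standard `ipaddress` module. The `allocate_links` helper, the `10.1.0.0/16` supernet and the rule that the first endpoint of a link gets the first host address are all assumptions of mine; the agent is free to assign the two host addresses the other way round.

```python
import ipaddress


def allocate_links(links, supernet="10.1.0.0/16", prefix=24):
    """Assign one subnet per link and the first two host addresses to its ends."""
    subnets = ipaddress.ip_network(supernet).subnets(new_prefix=prefix)
    next(subnets)  # skip 10.1.0.0/24 so that numbering starts at 10.1.1.0/24
    plan = {}
    for (a, b), net in zip(links, subnets):
        hosts = iter(net.hosts())
        first, second = next(hosts), next(hosts)
        plan[(a, b)] = {
            "subnet": str(net),
            a: f"{first}/{prefix}",
            b: f"{second}/{prefix}",
        }
    return plan


links = [
    ("leaf1", "client1"),
    ("leaf1", "spine1"),
    ("spine1", "leaf2"),
    ("leaf2", "client2"),
]
for link, entry in allocate_links(links).items():
    print(link, entry)
```

A plan like this can also serve as a reference against which the agent's proposed addressing is checked for overlaps.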

The output of this agent below shows that it has successfully come up with an IP allocation based on the topology, along with the relevant configurations and routes:

# Agent: Senior Network Administrator

## Final Answer:

# Configuration for client1 (Linux)

```bash
/sbin/ip address add 10.1.1.2/24 dev eth1
/sbin/ip route add 10.1.4.2/32 via 10.1.1.1
```

# Configuration for leaf1 (SRLinux)

```bash
enter candidate private

# configure data interfaces
set / interface ethernet-1/1 admin-state enable
set / interface ethernet-1/1 subinterface 0 admin-state enable
set / interface ethernet-1/1 subinterface 0 ipv4 address 10.1.1.1/24
set / interface ethernet-1/1 subinterface 0 ipv4 admin-state enable
set / interface ethernet-1/49 admin-state enable
set / interface ethernet-1/49 subinterface 0 admin-state enable
set / interface ethernet-1/49 subinterface 0 ipv4 address 10.1.2.1/24
set / interface ethernet-1/49 subinterface 0 ipv4 admin-state enable

# add interfaces to default namespace
set / network-instance default interface ethernet-1/1.0
set / network-instance default interface ethernet-1/49.0

# configure BGP
set / network-instance default protocols bgp admin-state enable
set / network-instance default protocols bgp router-id 1.1.1.1
set / network-instance default protocols bgp autonomous-system 65001
set / network-instance default protocols bgp afi-safi ipv4-unicast admin-state enable
set / network-instance default protocols bgp group ebgp admin-state enable
set / network-instance default protocols bgp group ebgp export-policy [ export-all ]
set / network-instance default protocols bgp group ebgp import-policy [ export-all ]
set / network-instance default protocols bgp neighbor 10.1.2.2 admin-state enable
set / network-instance default protocols bgp neighbor 10.1.2.2 peer-group ebgp
set / network-instance default protocols bgp neighbor 10.1.2.2 peer-as 65002

# create policy
set / routing-policy policy export-all default-action policy-result accept

commit now
```

# Configuration for spine1 (SRLinux)

```bash
enter candidate private

# configure data interfaces
set / interface ethernet-1/1 admin-state enable
set / interface ethernet-1/1 subinterface 0 admin-state enable
set / interface ethernet-1/1 subinterface 0 ipv4 address 10.1.2.2/24
set / interface ethernet-1/1 subinterface 0 ipv4 admin-state enable
set / interface ethernet-1/2 admin-state enable
set / interface ethernet-1/2 subinterface 0 admin-state enable
set / interface ethernet-1/2 subinterface 0 ipv4 address 10.1.3.1/24
set / interface ethernet-1/2 subinterface 0 ipv4 admin-state enable

# add interfaces to default namespace
set / network-instance default interface ethernet-1/1.0
set / network-instance default interface ethernet-1/2.0

# configure BGP
set / network-instance default protocols bgp admin-state enable
set / network-instance default protocols bgp router-id 2.2.2.2
set / network-instance default protocols bgp autonomous-system 65002
set / network-instance default protocols bgp afi-safi ipv4-unicast admin-state enable
set / network-instance default protocols bgp group ebgp admin-state enable
set / network-instance default protocols bgp group ebgp export-policy [ export-all ]
set / network-instance default protocols bgp group ebgp import-policy [ export-all ]
set / network-instance default protocols bgp neighbor 10.1.2.1 admin-state enable
set / network-instance default protocols bgp neighbor 10.1.2.1 peer-group ebgp
set / network-instance default protocols bgp neighbor 10.1.2.1 peer-as 65001
set / network-instance default protocols bgp neighbor 10.1.3.2 admin-state enable
set / network-instance default protocols bgp neighbor 10.1.3.2 peer-group ebgp
set / network-instance default protocols bgp neighbor 10.1.3.2 peer-as 65003

# create policy
set / routing-policy policy export-all default-action policy-result accept

commit now
```

# Configuration for leaf2 (SRLinux)

```bash
enter candidate private

# configure data interfaces
set / interface ethernet-1/1 admin-state enable
set / interface ethernet-1/1 subinterface 0 admin-state enable
set / interface ethernet-1/1 subinterface 0 ipv4 address 10.1.4.1/24
set / interface ethernet-1/1 subinterface 0 ipv4 admin-state enable
set / interface ethernet-1/49 admin-state enable
set / interface ethernet-1/49 subinterface 0 admin-state enable
set / interface ethernet-1/49 subinterface 0 ipv4 address 10.1.3.2/24
set / interface ethernet-1/49 subinterface 0 ipv4 admin-state enable

# add interfaces to default namespace
set / network-instance default interface ethernet-1/1.0
set / network-instance default interface ethernet-1/49.0

# configure BGP
set / network-instance default protocols bgp admin-state enable
set / network-instance default protocols bgp router-id 3.3.3.3
set / network-instance default protocols bgp autonomous-system 65003
set / network-instance default protocols bgp afi-safi ipv4-unicast admin-state enable
set / network-instance default protocols bgp group ebgp admin-state enable
set / network-instance default protocols bgp group ebgp export-policy [ export-all ]
set / network-instance default protocols bgp group ebgp import-policy [ export-all ]
set / network-instance default protocols bgp neighbor 10.1.3.1 admin-state enable
set / network-instance default protocols bgp neighbor 10.1.3.1 peer-group ebgp
set / network-instance default protocols bgp neighbor 10.1.3.1 peer-as 65002

# create policy
set / routing-policy policy export-all default-action policy-result accept

commit now
```

# Configuration for client2 (Linux)

```bash
/sbin/ip address add 10.1.4.2/24 dev eth1
/sbin/ip route add 10.1.1.2/32 via 10.1.4.1
```

This configuration:

1. Uses unique subnets for each connection:

- client1-leaf1: 10.1.1.0/24

- leaf1-spine1: 10.1.2.0/24

- spine1-leaf2: 10.1.3.0/24

- leaf2-client2: 10.1.4.0/24

2. Configures BGP with unique AS numbers:

- leaf1: AS65001

- spine1: AS65002

- leaf2: AS65003

3. Implements specific routes on clients for end-to-end connectivity

4. Follows the exact SRLinux configuration format provided

5. Uses proper routing policies for BGP route exchange
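The interface section of each SR Linux config above follows the same five-line pattern, which is what makes a single example config in the prompt sufficient. The sketch below reproduces that pattern as a template; `srl_interface_config` is a hypothetical helper of mine (the agent generates these lines itself), and it only covers the interface and network-instance lines shown in the output, not BGP.

```python
def srl_interface_config(name: str, address: str) -> list[str]:
    """Render the interface lines in the format used in the configs above."""
    base = f"set / interface {name}"
    return [
        f"{base} admin-state enable",
        f"{base} subinterface 0 admin-state enable",
        f"{base} subinterface 0 ipv4 address {address}",
        f"{base} subinterface 0 ipv4 admin-state enable",
        f"set / network-instance default interface {name}.0",
    ]


# Example: the client-facing interface of leaf1 from the output above.
for line in srl_interface_config("ethernet-1/1", "10.1.1.1/24"):
    print(line)
```

A template like this can be used to diff the agent's output against the expected shape before anything is committed on a node.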

4. Senior Network Administrator Agent

This agent takes the generated configuration and applies it to all the nodes, waits for the BGP peering to come up, and finally executes a ping from end to end to verify connectivity. Notably, the agent arrives at the right show command just by inferring it from the configuration commands.
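Waiting for the peering matters because BGP sessions take some time to establish after the commit. The agent handles this by re-checking on its own; in a programmatic workflow the same idea is a polling loop with a timeout. Below is a minimal sketch: `wait_until` is a hypothetical helper, and the `check` callable stands in for whatever command and parsing you use to decide that the session is established.

```python
import time


def wait_until(check, timeout=60.0, interval=5.0, sleep=time.sleep, clock=time.monotonic):
    """Call `check()` until it returns True or `timeout` seconds have passed.

    `sleep` and `clock` are injectable so the loop can be tested without waiting.
    """
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

For example, `wait_until(bgp_is_established, timeout=120)` would poll a user-supplied `bgp_is_established` function for up to two minutes before giving up.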

The following is the Final Answer from this agent, obtained after it successfully configured the network topology and checked end-to-end connectivity:

# Agent: Network Configuration Specialist

## Final Answer:

# Network Configuration Report

## Topology Information

### Devices and Interfaces

1. **client1**

- Interface: eth1

- IP: 10.1.1.2/24

- Static route: 10.1.4.2/32 via 10.1.1.1

2. **leaf1** (AS65001)

- ethernet-1/1: 10.1.1.1/24 (connected to client1)

- ethernet-1/49: 10.1.2.1/24 (connected to spine1)

- BGP Router ID: 1.1.1.1

3. **spine1** (AS65002)

- ethernet-1/1: 10.1.2.2/24 (connected to leaf1)

- ethernet-1/2: 10.1.3.1/24 (connected to leaf2)

- BGP Router ID: 2.2.2.2

4. **leaf2** (AS65003)

- ethernet-1/1: 10.1.4.1/24 (connected to client2)

- ethernet-1/49: 10.1.3.2/24 (connected to spine1)

- BGP Router ID: 3.3.3.3

5. **client2**

- Interface: eth1

- IP: 10.1.4.2/24

- Static route: 10.1.1.2/32 via 10.1.4.1

## Configuration Commands Executed

All configurations were successfully applied to their respective devices as per the provided configuration scripts. The key configurations included:

1. Interface IP addressing

2. BGP neighbor relationships

3. Route policies

4. Static routes on client machines

## Connectivity Test Results

```
PING 10.1.4.2 (10.1.4.2) 56(84) bytes of data.
64 bytes from 10.1.4.2: icmp_seq=1 ttl=61 time=128 ms
64 bytes from 10.1.4.2: icmp_seq=2 ttl=61 time=8.16 ms
64 bytes from 10.1.4.2: icmp_seq=3 ttl=61 time=4.49 ms
64 bytes from 10.1.4.2: icmp_seq=4 ttl=61 time=5.86 ms
--- 10.1.4.2 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3005ms
rtt min/avg/max/mdev = 4.491/36.620/127.973/52.758 ms
```

The ping test from client1 (10.1.1.2) to client2 (10.1.4.2) was successful with:

- 100% success rate (4/4 packets)

- Average round-trip time: 36.620ms

- No packet loss

This confirms that the BGP configurations are working correctly and the network is properly forwarding traffic between the clients through the leaf-spine topology.
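Since the agent's summary is itself generated text, it can be worth verifying the raw ping output independently. The sketch below parses the statistics line in the format shown above (Linux `ping`); `ping_succeeded` is a hypothetical helper, and other ping implementations may word the summary differently.

```python
import re

PING_SUMMARY_RE = re.compile(
    r"(\d+) packets transmitted, (\d+) received, (\d+(?:\.\d+)?)% packet loss"
)


def ping_succeeded(output: str) -> bool:
    """True only if a summary line is present and every packet was answered."""
    match = PING_SUMMARY_RE.search(output)
    if match is None:
        return False
    sent, received, loss = int(match[1]), int(match[2]), float(match[3])
    return sent > 0 and received == sent and loss == 0.0
```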

Conclusion

AI agents go above and beyond automation: they can reason and come up with alternatives. As a simple example, if a Linux command fails due to a permission issue and the agent has access to the sudo password, it will retry the command with sudo. As a more complex example, if the BGP peering is not up, the agent can, based on the prompt instructions, try to find out why and even go about fixing it.
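For contrast, here is what the simple sudo example looks like when it has to be spelled out in a programmatic approach. This is a minimal sketch: `run_with_sudo_fallback` and the `Runner` type are hypothetical, and the runner is assumed to return an exit code together with the combined output. The agent needs no such branch; it reaches the same retry by reading the error message.

```python
from typing import Callable, Tuple

# Hypothetical runner signature: takes a command, returns (exit_code, combined output).
Runner = Callable[[str], Tuple[int, str]]


def run_with_sudo_fallback(command: str, run: Runner) -> Tuple[int, str]:
    """Run a command and retry once with sudo if it failed on permissions."""
    code, output = run(command)
    permission_error = code != 0 and "permission denied" in output.lower()
    if permission_error and not command.startswith("sudo "):
        return run(f"sudo {command}")
    return code, output
```

Every additional failure mode would need another explicit branch like this one, which is exactly the work an agent takes over, at the cost of the longer and less predictable runtimes discussed below.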

Agentic workflows have their challenges, and they require a different way of thinking compared to programmatic approaches. The downsides I have encountered so far are that it can take longer (sometimes much longer) to achieve an outcome, that runtimes vary from run to run, and that outputs can vary (this can be controlled to some extent with better prompts).

Finally, for trivial, straightforward tasks like scraping a website and executing a given set of commands, a smaller LLM such as gpt-4o-mini or llama3.1-8b can be used. However, for tasks like designing the network topology, a bigger LLM is required. Although claude-3-5-sonnet was chosen here, a good 70b model should ideally suffice. Above all, you have to be very diligent with prompts. They will make or break your use case!

AI Agents in Networking Industry was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
