The AI landscape is rapidly evolving, with synthetic data emerging as a powerful tool for model development. While it offers immense potential, recent concerns about model collapse have sparked debate. Let’s dive into the reality of synthetic data use and its impact on AI development.

Addressing the Model Collapse Concern

The Nature paper “AI models collapse when trained on recursively generated data” by Shumailov et al. raised important questions about the use of synthetic data:

- “We find that indiscriminate use of model-generated content in training causes irreversible defects in the resulting models, in which tails of the original content distribution disappear.” [1]

- “We argue that the process of model collapse is universal among generative models that recursively train on data generated by previous generations” [1]

However, it’s essential to note that this extreme scenario of recursive training on purely synthetic data is not representative of real-world AI development practices. The authors themselves acknowledge:

- “Here we explore what happens with language models when they are sequentially fine-tuned with data generated by other models… We evaluate the most common setting of training a language model — a fine-tuning setting for which each of the training cycles starts from a pre-trained model with recent data” [1]

Key Points

- The study’s methodology does not account for the continuous influx of new, diverse data that characterizes real-world AI model training. This limitation may lead to an overestimation of model collapse in practical scenarios, where fresh data serves as a potential corrective mechanism against degradation.

- The experimental design, which discards data from previous generations, diverges from common practices in AI development that involve cumulative learning and sophisticated data curation. This approach may not accurately represent the knowledge retention and building processes typical in industry applications.

- The use of a single, static model architecture (OPT-125m) throughout the generations does not reflect the rapid evolution of AI architectures in practice. This simplification may exaggerate the observed model collapse by not accounting for how architectural advancements potentially mitigate such issues. In reality, the field has seen rapid progression (e.g., from GPT-3 to GPT-3.5 to GPT-4, or from Phi-1 to Phi-2 to Phi-3), with each iteration introducing significant improvements in model capacity, generalization capabilities, and emergent behaviors.

- While the paper acknowledges catastrophic forgetting, it does not incorporate standard mitigation techniques used in industry, such as elastic weight consolidation or experience replay. This omission may amplify the observed model collapse effect and limits the study’s applicability to real-world scenarios.

- The approach to synthetic data generation and usage in the study lacks the quality control measures and integration practices commonly employed in industry. This methodological choice may lead to an overestimation of model collapse risks in practical applications where synthetic data is more carefully curated and combined with real-world data.

Supporting Quotes from the Paper

- “We also briefly mention two close concepts to model collapse from the existing literature: catastrophic forgetting arising in the framework of task-free continual learning and data poisoning maliciously leading to unintended behaviour” [1]

In practice, the goal of synthetic data is to augment and extend the existing datasets, including the implicit data baked into base models. When teams are fine-tuning or continuing pre-training, the objective is to provide additional data to improve the model’s robustness and performance.

Counterpoints from Academia and Research

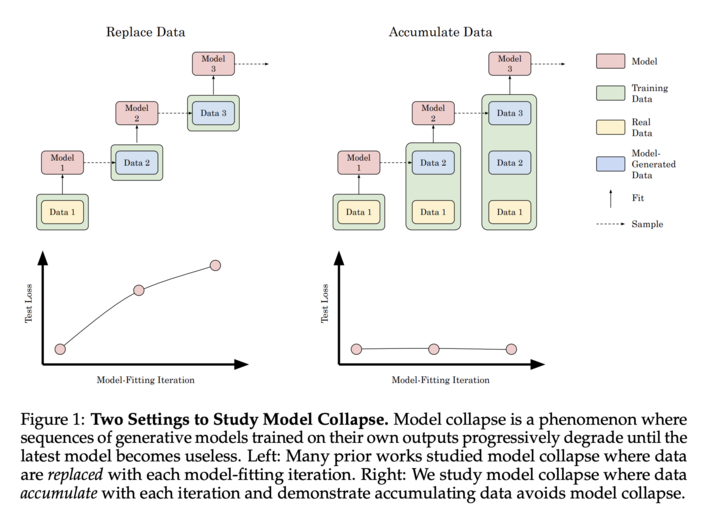

The paper“Is Model Collapse Inevitable? Breaking the Curse of Recursion by Accumulating Real and Synthetic Data” by Gerstgrasser et al., researchers from Stanford, MIT, and Constellation presents significant counterpoints to concerns about AI model collapse:

This work has shown that combining synthetic data with real-world data can prevent model degradation.

Quality Over Quantity

As highlighted in Microsoft’s Phi-3 technical report:

- “The creation of a robust and comprehensive dataset demands more than raw computational power: It requires intricate iterations, strategic topic selection, and a deep understanding of knowledge gaps to ensure quality and diversity of the data.” [3]

This emphasizes the importance of thoughtful synthetic data generation rather than indiscriminate use.

And Apple in training their new device and foundation models:

- “We find that data quality is essential to model success, so we utilize a hybrid data strategy in our training pipeline, incorporating both human-annotated and synthetic data, and conduct thorough data curation and filtering procedures.“ [10]

This emphasizes the importance of thoughtful synthetic data generation rather than indiscriminate use.

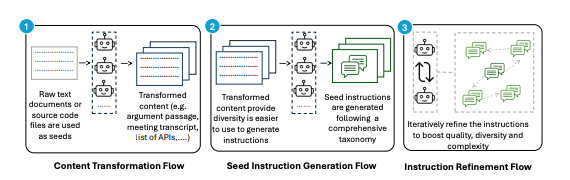

Iterative Improvement, Not Recursive Training

As highlighted in Gretel Navigator, NVIDIA’s Nemotron, and the AgentInstruct architecture, cutting edge synthetic data is generated by agents iteratively simulating, evaluating, and improving outputs- not simply recursively training on their own output. Below is an example of syntheticexample synthetic data generation architecture used in AgentInstruct.

On Synthetic Data Improving Model Performance

Here are some example results from recent synthetic data releases:

- AgentInstruct: “40% improvement on AGIEval, 19% on MMLU, 54% on GSM8K.”

- NVIDIA Nemotron-4 340B Instruct: Currently first place on the Hugging Face RewardBench leaderboard, created by AI2, for evaluating the capabilities, safety and pitfalls of reward models.

- Gretel Navigator: 73.6% win rate against human experts on synthetic data generation, including a 25.6% lift over OpenAI GPT-4 performance.

Industry Advances with Synthetic Data

Synthetic data is driving significant advancements across various industries:

Healthcare: Rhys Parker, Chief Clinical Officer at SA Health, stated:

“Our synthetic data approach with Gretel has transformed how we handle sensitive patient information. Data requests that previously took months or years are now achievable in days. This isn’t just a technological advance; it’s a fundamental shift in managing health data that significantly improves patient care while ensuring privacy. We predict synthetic data will become routine in medical research within the next few years, opening new frontiers in healthcare innovation.” [9]

Mathematical Reasoning: DeepMind’s AlphaProof and AlphaGeometry 2 systems,

“AlphaGeometry 2, based on Gemini and trained with an order of magnitude more data than its predecessor”, achieved a silver-medal level at the International Mathematical Olympiad by solving complex mathematical problems, demonstrating the power of synthetic data in enhancing AI capabilities in specialized fields [5].

Life Sciences Research: Nvidia’s research team reported:

“Synthetic data also provides an ethical alternative to using sensitive patient data, which helps with education and training without compromising patient privacy” [4]

Democratizing AI Development

One of the most powerful aspects of synthetic data is its potential to level the playing field in AI development.

Empowering Data-Poor Industries: Empowering Data-Poor Industries: Synthetic data allows industries with limited access to large datasets to compete in AI development. This is particularly crucial for sectors where data collection is challenging due to privacy concerns or resource limitations.

Customization at Scale: Even large tech companies are leveraging synthetic data for customization. Microsoft’s research on the Phi-3 model demonstrates how synthetic data can be used to create highly specialized models:

“We speculate that the creation of synthetic datasets will become, in the near future, an important technical skill and a central topic of research in AI.” [3]

Tailored Learning for AI Models: Andrej Karpathy, former Director of AI at Tesla, suggests a future where we create custom “textbooks” for teaching language models:

Scaling Up with Synthetic Data: Jim Fan, an AI researcher, highlights the potential of synthetic data to provide the next frontier of training data:

Fan also points out that embodied agents, such as robots like Tesla’s Optimus, could be a significant source of synthetic data if simulated at scale.

Economics of Synthetic Data

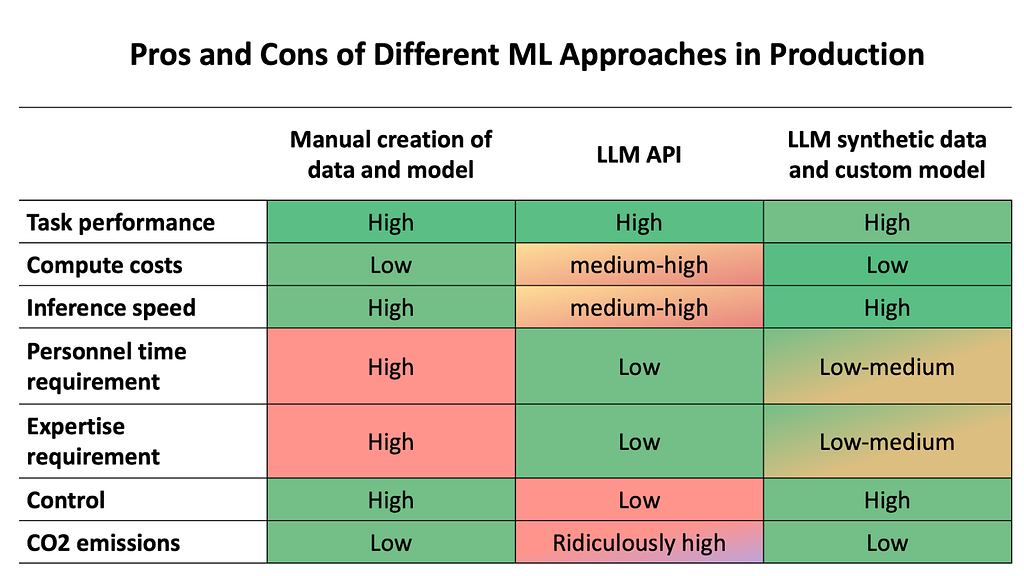

Cost Savings and Resource Efficiency:

The Hugging Face blog shows that fine-tuning a custom small language model using synthetic data costs around $2.7 to fine-tune, compared to $3,061 with GPT-4 on real-world data, while emitting significantly less CO2 and offering faster inference speeds.

Here’s a nice visualization from Hugging Face that shows the benefits across use cases:

Conclusion: A Balanced Approach

While the potential risks of model collapse should not be ignored, the real-world applications and benefits of synthetic data are too significant to dismiss. As we continue to advance in this field, a balanced approach that combines synthetic data with rigorous real-world validation and thoughtful generation practices will be key to maximize its potential.

Synthetic data, when used responsibly and in conjunction with real-world data, has the potential to dramatically accelerate AI development across all sectors. It’s not about replacing real data, but augmenting and extending our capabilities in ways we’re only beginning to explore. By enhancing datasets with synthetic data, we can fill critical data gaps, address biases, and create more robust models.

By leveraging synthetic data responsibly, we can democratize AI development, drive innovation in data-poor sectors, and push the boundaries of what’s possible in machine learning — all while maintaining the integrity and reliability of our AI systems.

References

- Shumailov, I., Shumaylov, Z., Zhao, Y., Gal, Y., Papernot, N., & Anderson, R. (2023). The curse of recursion: Training on generated data makes models forget. arXiv preprint arXiv:2305.17493.

- Gerstgrasser, M., Schaeffer, R., Dey, A., Rafailov, R., Sleight, H., Hughes, J., … & Zhang, C. (2023). Is model collapse inevitable? Breaking the curse of recursion by accumulating real and synthetic data. arXiv preprint arXiv:2404.01413.

- Li, Y., Bubeck, S., Eldan, R., Del Giorno, A., Gunasekar, S., & Lee, Y. T. (2023). Textbooks are all you need II: phi-1.5 technical report. arXiv preprint arXiv:2309.05463.

- Nvidia Research Team. (2024). Addressing Medical Imaging Limitations with Synthetic Data Generation. Nvidia Blog.

- DeepMind Blog. (2024). AI achieves silver-medal standard solving International Mathematical Olympiad problems. DeepMind.

- Hugging Face Blog on Synthetic Data. (2024). Synthetic data: save money, time and carbon with open source. Hugging Face.

- Karpathy, A. (2024). Custom Textbooks for Language Models. Twitter.

- Fan, J. (2024). Synthetic Data and the Future of AI Training. Twitter.

- South Australian Health. (2024). South Australian Health Partners with Gretel to Pioneer State-Wide Synthetic Data Initiative for Safe EHR Data Sharing. Microsoft for Startups Blog.

- Introducing Apple’s On-Device and Server Foundation Models. https://machinelearning.apple.com/research/introducing-apple-foundation-models

- AgentInstruct: Toward Generative Teaching with Agentic Flows. https://arxiv.org/abs/2407.03502

- Gerstgrasser, M. (2024). Comment on LinkedIn post by Yev Meyer, Ph.D. LinkedIn. https://www.linkedin.com/feed/update/urn:li:activity:7223028230444785664

Addressing Concerns of Model Collapse from Synthetic Data in AI was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Addressing Concerns of Model Collapse from Synthetic Data in AI

Go Here to Read this Fast! Addressing Concerns of Model Collapse from Synthetic Data in AI