Why real-time data and governance are non-negotiable for AI

Kafka is great. AI is great. What happens when we combine both? Continuity.

—

AI is changing many things about our efficiency and how we operate: sublime translations, customer interactions, code builder, driving our cars etc. Even if we love cutting-edge things, we’re all having a hard time keeping up with it.

There is a massive problem we tend to forget: AI can easily go off the rails without the right guardrails. And when it does, it’s not just a technical glitch, it can lead to disastrous consequences for the business.

From my own experience as a CTO, I’ve seen firsthand that real AI success doesn’t come from speed alone. It comes from control — control over the data your AI consumes, how it operates, and ensuring it doesn’t deliver the wrong outputs (more on this below).

The other part of the success is about maximizing the potential and impact of AI. That’s where Kafka and data streaming enter the game

Both AI Guardrails and Kafka are key to scaling a safe, compliant, and reliable AI.

AI without Guardrails is an open book

One of the biggest risks when dealing with AI is the absence of built-in governance. When you rely on AI/LLMs to automate processes, talk to customers, handle sensitive data, or make decisions, you’re opening the door to a range of risks:

- data leaks (and prompt leaks as we’re used to see)

- privacy breaches and compliance violations

- data bias and discrimination

- out-of-domain prompting

- poor decision-making

Remember March 2023? OpenAI had an incident where a bug caused chat data to be exposed to other users. The bottom line is that LLMs don’t have built-in security, authentication, or authorization controls. An LLM is like a massive open book — anyone accessing it can potentially retrieve information they shouldn’t. That’s why you need a robust layer of control and context in between, to govern access, validate inputs, and ensure sensitive data remains protected.

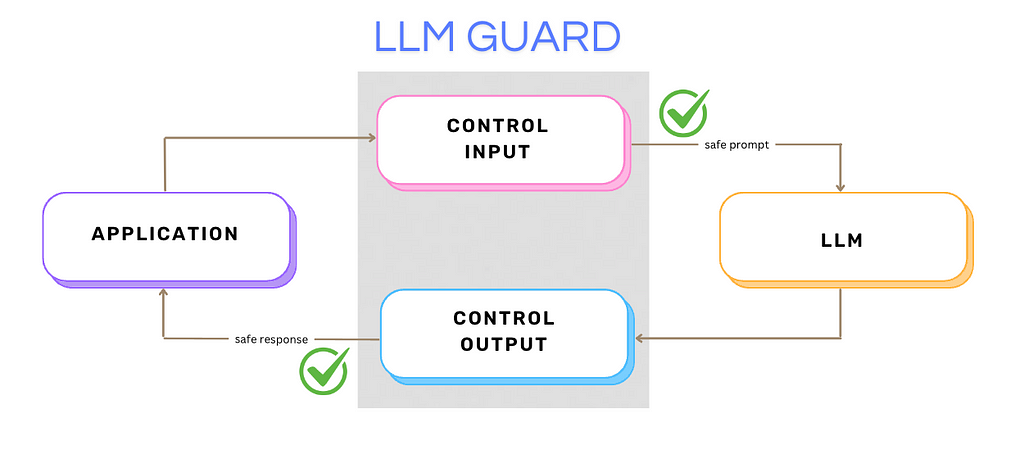

There is where AI guardrails, like NeMo (by Nvidia) and LLM Guard, come into the picture. They provide essential checks on the inputs and outputs of the LLM:

- prompt injections

- filtering out biased or toxic content

- ensuring personal data isn’t slipping through the cracks.

- out-of-context prompts

- jailbreaks

https://github.com/leondz/garak is an LLM vulnerability scanner. It checks if an LLM can be made to fail in a way we don’t want. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses.

What’s the link with Kafka?

Kafka is an open-source platform designed for handling real-time data streaming and sharing within organizations. And AI thrives on real-time data to remain useful!

Feeding AI static, outdated datasets is a recipe for failure — it will only function up to a certain point, after which it won’t have fresh information. Think about ChatGPT always having a ‘cut-off’ date in the past. AI becomes practically useless if, for example, during customer support, the AI don’t have the latest invoice of a customer asking things because the data isn’t up-to-date.

Methods like RAG (Retrieval Augmented Generation) fix this issue by providing AI with relevant, real-time information during interactions. RAG works by ‘augmenting’ the prompt with additional context, which the LLM processes to generate more useful responses.

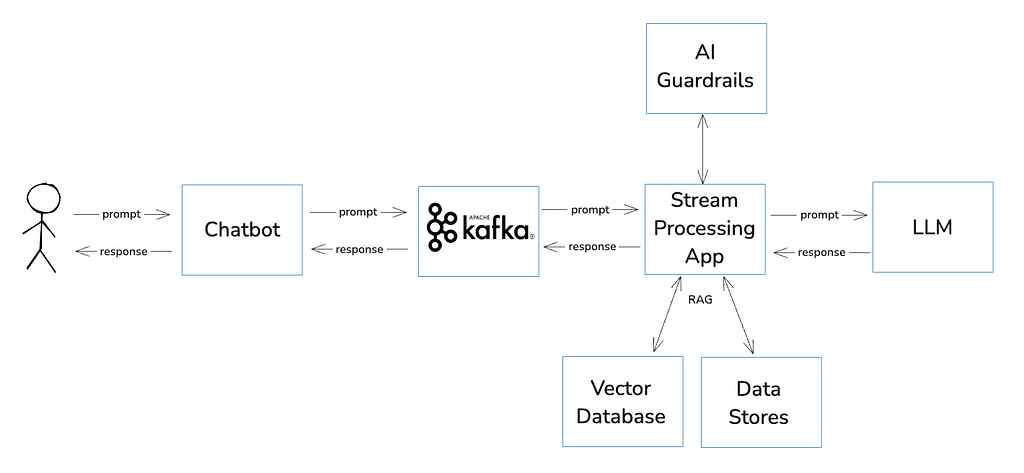

Guess what is frequently paired with RAG? Kafka. What better solution to fetch real-time information and seamlessly integrate it with an LLM? Kafka continuously streams fresh data, which can be composed with an LLM through a simple HTTP API in front. One critical aspect is to ensure the quality of the data being streamed in Kafka is under control: no bad data should enter the pipeline (Data Validations) or it will spread throughout your AI processes: inaccurate outputs, biased decisions, security vulnerabilities.

A typical streaming architecture combining Kafka, AI Guardrails, and RAG:

Gartner predicts that by 2025, organizations leveraging AI and automation will cut operational costs by up to 30%. Faster, smarter.

Should we care about AI Sovereignty? Yes.

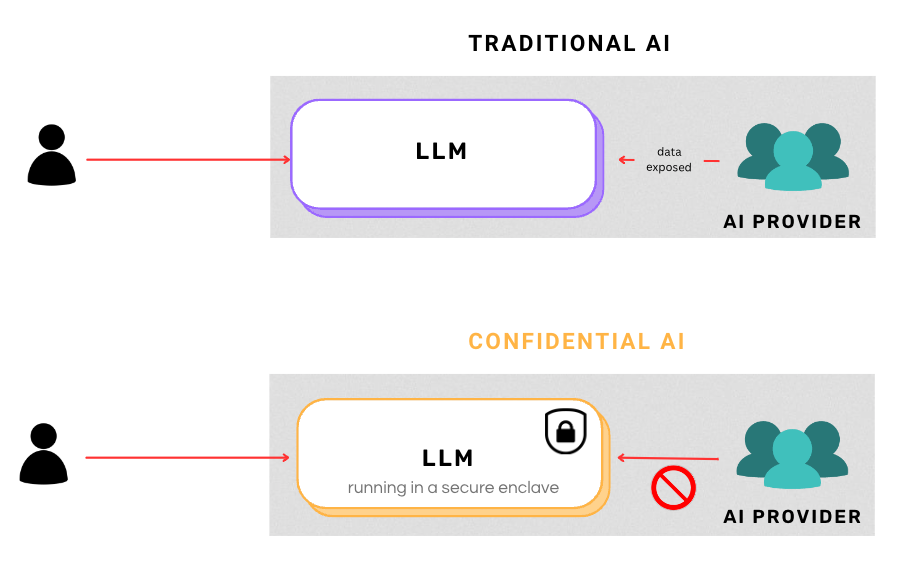

AI sovereignty is about ensuring that you fully control where your AI runs, how data is ingested, processed, and who has access to it. It’s not just about the software, it’s about the hardware as well, and the physical place things are happening.

Sovereignty is about the virtual, physical infrastructure and geopolitical boundaries where your data resides. We live in a physical world, and while AI might seem intangible, it’s bound by real-world regulations.

For instance, depending on where your AI infrastructure is hosted, different jurisdictions may demand access to your data (e.g. the States!), even if it’s processed by an AI model. That’s why ensuring sovereignty means controlling not just the code, but the physical hardware and the environment where the processing happens.

Technologies like Intel SGX (Software Guard Extensions) and AMD SEV (Secure Encrypted Virtualization) offer this kind of protection. They create isolated execution environments that protect sensitive data and code, even from potential threats inside the host system itself. And solutions like Mithril Security are also stepping up, providing Confidential AI where the AI provider cannot even access the data processed by their LLM.

Conclusion

It’s clear that AI guardrails and Kafka streaming are the foundation to make use-cases relying on AI successful. Without Kafka, AI models operate on stale data, making them unreliable and not very useful. And without AI guardrails, AI is at risk of making dangerous mistakes — compromising privacy, security, and decision quality.

This formula is what keeps AI on track and in control. The risks of operating without it are simply too high.

How to succeed with AI: Combining Kafka and AI Guardrails was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

How to succeed with AI: Combining Kafka and AI Guardrails

Go Here to Read this Fast! How to succeed with AI: Combining Kafka and AI Guardrails