Create more interpretable models by using concise, highly predictive features, automatically engineered based on arithmetic combinations of numeric features

In this article, we examine a tool called FormulaFeatures. This is intended for use primarily with interpretable models, such as shallow decision trees, where having a small number of concise and highly predictive features can aid greatly with the interpretability and accuracy of the models.

Interpretable Models in Machine Learning

This article continues my series on interpretable machine learning, following articles on ikNN, Additive Decision Trees, Genetic Decision Trees, and PRISM rules.

As indicated in the previous articles (and covered there in more detail), there is often a strong incentive to use interpretable predictive models: each prediction can be well understood, and we can be confident the model will perform sensibly on future, unseen data.

There are a number of models available to provide interpretable ML, although, unfortunately, well less than we would likely wish. There are the models described in the articles linked above, as well as a small number of others, for example, decision trees, decision tables, rule sets and rule lists (created, for example by imodels), Optimal Sparse Decision Trees, GAMs (Generalized Additive Models, such as Explainable Boosted Machines), as well as a few other options.

In general, creating predictive machine learning models that are both accurate and interpretable is challenging. To improve the options available for interpretable ML, four of the main approaches are to:

- Develop additional model types

- Improve the accuracy or interpretability of existing model types. For this, I’m referring to creating variations on existing model types, or the algorithms used to create the models, as opposed to completely novel model types. For example, Optimal Sparse Decision Trees and Genetic Decision Trees seek to create stronger decision trees, but in the end, are still decision trees.

- Provide visualizations of the data, model, and predictions made by the model. This is the approach taken, for example, by ikNN, which works by creating an ensemble of 2D kNN models (that is, ensembles of kNN models that each use only a single pair of features). The 2D spaces may be visualized, which provides a high degree of visibility into how the model works and why it made each prediction as it did.

- Improve the quality of the features that are used by the models, in order that models can be either more accurate or more interpretable.

FormulaFeatures is used to support the last of these approaches. It was developed by myself to address a common issue in decision trees: they can often achieve a high level of accuracy, but only when grown to a large depth, which then precludes any interpretability. Creating new features that capture part of the function linking the original features to the target can allow for much more compact (and therefore interpretable) decision trees.

The underlying idea is: for any labelled dataset, there is some true function, f(x) that maps the records to the target column. This function may take any number of forms, may be simple or complex, and may use any set of features in x. But regardless of the nature of f(x), by creating a model, we hope to approximate f(x) as well as we can given the data available. To create an interpretable model, we also need to do this clearly and concisely.

If the features themselves can capture a significant part of the function, this can be very helpful. For example, we may have a model that predicts client churn and we may have features for each client including: their number of purchases in the last year, and the average value of their purchases in the last year. The true f(x), though, may be based primarily on the product of these (the total value of their purchases in the last year, which is found by multiplying these two features).

In practice, we will generally never know the true f(x), but in this case, let’s assume that whether a client churns in the next year is related strongly to their total purchases in the prior year, and not strongly to their number of purchase or their average size.

We can likely build an accurate model using just the two original features, but a model using just the product feature will be more clear and interpretable. And possibly more accurate.

Example using a decision tree

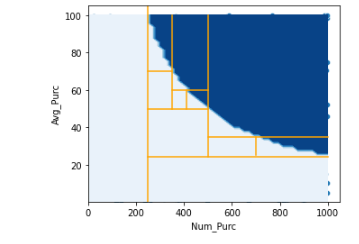

If we have only two features, then we can view them in a 2d plot. In this case, we can look at just num_purc and avg_purc: the number of purchases in the last year per client, and their average dollar value. Assuming the true f(x) is based primarily on their product, the space may look like the plot below, where the light blue area represents client who will churn in the next year, and the dark blue those who will not.

If using a decision tree to model this, we can create a model by dividing the data space recursively. The orange lines on the plot show a plausible set of splits a decision tree may use (for the first set of nodes) to try to predict churn. It may, as shown, first split on num_purc at a value of 250, then avg_purc at 24, and so on. It would continue to make splits in order to fit the curved shape of the true function.

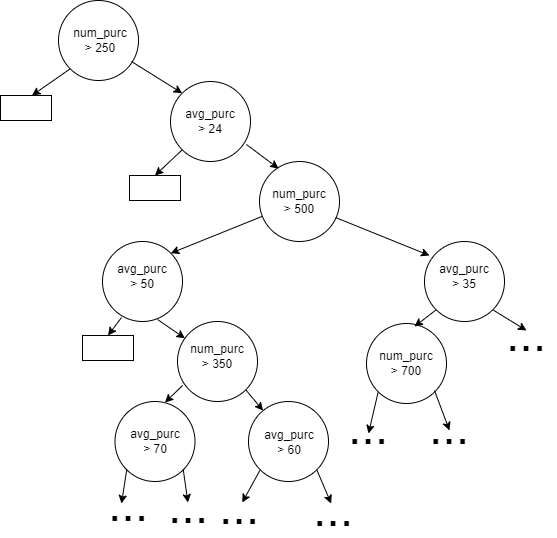

Doing this will create a decision tree that looks something like the tree below, where the circles represent internal nodes, the rectangles represent the leaf nodes, and ellipses the sub-trees that would like need to be grown several more levels deep to achieve decent accuracy. That is, this shows only a fraction of the full tree that would need to be grown to model this using these two features. We can see in the plot above as well: using axis-parallel split, we will need a large number of splits to fit the boundary between the two classes well.

If the tree is grown sufficiently, we can likely get a strong tree in terms of accuracy. But, the tree will be far from interpretable.

It is possible to view the decision space, as in the plot above (and this does make the behaviour of the model clear), but this is only feasible here because the space is limited to two dimensions. Normally this is impossible, and our best means to interpret the decision tree is to examine the tree itself. But, where the tree has many dozens of nodes or more, it becomes impossible to see the patterns it is working to capture.

In this case, if we engineered a feature for num_purc * avg_purc, we could have a very simple decision tree, with just a single internal node, with the split point: num_purc * avg_purc > 25000.

In practice, it’s never possible to produce features that are this close to the true function, and it’s never possible to create a fully accurate decision trees with very few nodes. But it is often quite possible to engineer features that are closer to the true f(x) than the original features.

Whenever there are interactions between features, if we can capture these with engineered features, this will allow for more compact models.

So, with FormulaFeatures, we attempt to create features such as num_purchases * avg_value_of_purchases, and they can quite often be used in models such as decision trees to capture the true function reasonably well.

As well, simply knowing that num_purchases * avg_value_of_purchases is predictive of the target (and that higher values are associated with lower risk of churn) in itself is informative. But the new feature is most useful in the context of seeking to make interpretable models more accurate and more interpretable.

As we’ll describe below, FormulaFeatures also does this in a way that minimizing creating other features, so that only a small set of features, all relevant, are returned.

Interpretable machine learning with decision trees

With tabular data, the top-performing models for prediction problems are typically boosted tree-based ensembles, particularly LGBM, XGBoost, and CatBoost. It will vary from one prediction problem to another, but most of the time, these three models tend to do better than other models (and are considered, at least outside of AutoML approaches, the current state of the art). Other strong model types such as kNNs, neural networks, Bayesian Additive Regression Trees, SVMs, and others will also occasionally perform the best. All of these models types are, though, quite uninterpretable, and are effectively black-boxes.

Unfortunately, interpretable models tend to be weaker than these with respect to accuracy. Sometimes, the drop in accuracy is fairly small (for example, in the 3rd decimal), and it’s worth sacrificing some accuracy for interpretability. In other cases, though, interpretable models may do substantially worse than the black-box alternatives. It’s difficult, for example for a single decision tree to compete with an ensemble of many decision trees.

So, it’s common to be able to create a strong black-box model, but at the same time for it to be challenging (or impossible) to create a strong interpretable model. This is the problem FormulaFeatures was designed to address. It seeks to capture some of logic that black-box models can represent, but in a simple, understandable way.

Much of the research done in interpretable AI focusses on decision trees, and relates to making decision trees more accurate and more interpretable. This is fairly natural, as decision trees are a model type that’s inherently straight-forward to understand (when sufficiently small, they are arguably as interpretable as any other model) and often reasonably accurate (though this is very often not the case).

Other interpretable models types (e.g. logistic regression, rules, GAMs, etc.) are used as well, but much of the research is focused on decision trees, and so this article works, for the most part, with decision trees. Nevertheless, FormulaFeatures is not specific to decision trees, and can be useful for other interpretable models. In fact, it’s fairly easy to see, once we explain FormulaFeatures below, how it may be applied as well to ikNN, Genetic Decision Trees, Additive Decision Trees, rules lists, rule sets, and so on.

To be more precise with respect to decision trees, when using these for interpretable ML, we are looking specifically at shallow decision trees — trees that have relatively small depths, with the deepest nodes being restricted to perhaps 3, 4, or 5 levels. This ensures two things: that shallow decision trees can provide both what are called local explanations and what are called global explanations. These are the two main concerns with interpretable ML. I’ll explain these here.

With local interpretability, we want to ensure that each individual prediction made by the model is understandable. Here, we can examine the decision path taken through the tree by each record for which we generate a decision. If a path includes the feature num_purc * avg_purc, and the path is very short, it can be reasonably clear. On the other hand, a path that includes: num_purc > 250 AND avg_purc > 24 AND num_purc < 500 AND avg_purc_50, and so on (as in the tree generated above without the benefit of the num_purc * avg_pur feature) can become very difficult to interpret.

With global interpretability, we want to ensure that the model as a whole is understandable. This allows us to see the predictions that would be made under any circumstances. Again, using more compact trees, and where the features themselves are informative, can aid with this. It’s much simpler, in this case, to see the big picture of how the decision tree outputs predictions.

We should qualify this, though, by indicating that shallow decision trees (which we focus on for this article) are very difficult to create in a way that’s accurate for regression problems. Each leaf node can predict only a single value, and so a tree with n leaf nodes can only output, at most, n unique predictions. For regression problems, this usually results in high error rates: normally decision trees need to create a large number of leaf nodes in order to cover the full range of values that can be potentially predicted, with each node having reasonable precision.

Consequently, shallow decision trees tend to be practical only for classification problems (if there are only a small number of classes that can be predicted, it is quite possible to create a decision tree with not too many leaf nodes to predict these accurately). FormulaFeatures can be useful for use with other interpretable regression models, but not typically with decision trees.

Supervised and unsupervised feature engineering

Now that we’ve seen some of the motivation behind FormulaFeatures, we’ll take a look at how it works.

FormulaFeatures is a form of supervised feature engineering, which is to say that it considers the target column when producing features, and so can generate features specifically useful for predicting that target. FormulaFeatures supports both regression & classification targets (though as indicated, when using decision trees, it may be that only classification targets are feasible).

Taking advantage of the target column allows it to generate only a small number of engineered features, each as simple or complex as necessary.

Unsupervised methods, on the other hand, do not take the target feature into consideration, and simply generate all possible combinations of the original features using some system for generating features.

An example of this is scikit-learn’s PolynomialFeatures, which will generate all polynomial combinations of the features. If the original features are, say: [a, b, c], then PolynomialFeatures can create (depending on the parameters specified) a set of engineered features such as: [ab, ac, bc, a², b², c²] — that is, it will generate all combinations of pairs of features (using multiplication), as well as all original features raised to the 2nd degree.

Using unsupervised methods, there is very often an explosion in the number of features created. If we have 20 features to start with, returning just the features created by multiplying each pair of features would generate (20 * 19) / 2, or 190 features (that is, 20 choose 2). If allowed to create features based on multiplying sets of three features, there are 20 choose 3, or 1140 of these. Allowing features such as a²bc, a²bc², and so on results in even more massive numbers of features (though with a small set of useful features being, quite possibly, among these).

Supervised feature engineering methods would tend to return only a much smaller (and more relevant) subset of these.

However, even within the context of supervised feature engineering (depending on the specific approach used), an explosion in features may still occur to some extent, resulting in a time consuming feature engineering process, as well as producing more features than can be reasonably used by any downstream tasks, such as prediction, clustering, or outlier detection. FormulaFeatures is optimized to keep both the engineering time, and the number of features returned, tractable, and its algorithm is designed to limit the numbers of features generated.

Algorithm

The tool operates on the numeric features of a dataset. In the first iteration, it examines each pair of original numeric features. For each, it considers four potential new features based on the four basic arithmetic operations (+, -, *, and /). For the sake of performance, and interpretability, we limit the process to these four operations.

If any perform better than both parent features (in terms of their ability to predict the target — described soon), then the strongest of these is added to the set of features. For example, if A + B and A * B are both strong features (both stronger than either A or B), only the stronger of these will be included.

Subsequent iterations then consider combining all features generated in the previous iteration will all other features, again taking the strongest of these, if any outperformed their two parent features. In this way, a practical number of new features are generated, all stronger than the previous features.

Example stepping through the algorithm

Assume we start with a dataset with features A, B, and C, that Y is the target, and that Y is numeric (this is a regression problem).

We start by determining how predictive of the target each feature is on its own. The currently-available version uses R2 for regression problems and F1 (macro) for classification problems. We create a simple model (a classification or regression decision tree) using only a single feature, determine how well it predicts the target column, and measure this with either R2 or F1 scores.

Using a decision tree allows us to capture reasonably well the relationships between the feature and target — even fairly complex, non-monotonic relationships — where they exist.

Future versions will support more metrics. Using strictly R2 and F1, however, is not a significant limitation. While other metrics may be more relevant for your projects, using these metrics internally when engineering features will identify well the features that are strongly associated with the target, even if the strength of the association is not identical as it would be found using other metrics.

In this example, we begin with calculating the R2 for each original feature, training a decision tree using only feature A, then another using only B, and then again using only C. This may give the following R2 scores:

A 0.43

B 0.02

C -1.23

We then consider the combinations of pairs of these, which are: A & B, A & C, and B & C. For each we try the four arithmetic operations: +, *, -, and /.

Where there are feature interactions in f(x), it will often be that a new feature incorporating the relevant original features can represent the interactions well, and so outperform either parent feature.

When examining A & B, assume we get the following R2 scores:

A + B 0.54

A * B 0.44

A - B 0.21

A / B -0.01

Here there are two operations that have a higher R2 score than either parent feature (A or B), which are + and *. We take the highest of these, A + B, and add this to the set of features. We do the same for A & B and B & C. In most cases, no feature will be added, but often one is.

After the first iteration we may have:

A 0.43

B 0.02

C -1.23

A + B 0.54

B / C 0.32

We then, in the next iteration, take the two features just added, and try combining them with all other features, including each other.

After this we may have:

A 0.43

B 0.02

C -1.23

A + B 0.54

B / C 0.32

(A + B) - C 0.56

(A + B) * (B / C) 0.66

This continues until there is no longer improvement, or a limit specified by a hyperparameter, max_iterations, is reached.

Further pruning based on correlations

At the end of each iteration, further pruning of the features is performed, based on correlations. The correlation among the features created during the current iteration is examined, and where two or more features that are highly correlated were created, only the strongest is kept, removing the others. This limits creating near-redundant features, which can become possible, especially as the features become more complex.

For example: (A + B + C) / E and (A + B + D) / E may both be strong, but quite similar, and if so, only the stronger of these will be kept.

One allowance for correlated features is made, though. In general, as the algorithm proceeds, more complex features are created, and these features more accurately capture the true relationship between the features in x and the target. But, the new features created may also be correlated with the features they build upon, which are simpler, and FormulaFeatures also seeks to favour simpler features over more complex, everything else equal.

For example, if (A + B + C) is correlated with (A + B), both would be kept even if (A + B + C) is stronger, in order that the simpler (A + B) may be combined with other features in subsequent iterations, possibly creating features that are stronger still.

How FormulaFeatures limits the features created

In the example above, we have features A, B, and C, and see that part of the true f(x) can be approximated with (A + B) – C.

We initially have only the original features. After the first iteration, we may generate (again, as in the example above) A + B and B / C, so now have five features.

In the next iteration, we may generate (A + B) — C.

This process is, in general, a combination of: 1) combining weak features to make them stronger (and more likely useful in a downstream task); as well as 2) combining strong features to make these even stronger, creating what are most likely the most predictive features.

But, what’s important is that this combining is done only after it’s confirmed that A + B is a predictive feature in itself, more so than either A or B. That is, we do not create (A + B) — C until we confirm that A + B is predictive. This ensures that, for any complex features created, each component within them is useful.

In this way, each iteration creates a more powerful set of features than the previous, and does so in a way that’s reliable and stable. It minimizes the effects of simply trying many complex combinations of features, which can easily overfit.

So, FormulaFeatures, executes in a principled, deliberate manner, creating only a small number of engineered features each step, and typically creates less features each iteration. As such, it, overall, favours creating features with low complexity. And, where complex features are generated, this can be shown to be justified.

With most datasets, in the end, the features engineered are combinations of just two or three original features. That is, it will usually create features more similar to A * B than to, say, (A * B) / (C * D).

In fact, to generate a features such as (A * B) / (C * D), it would need to demonstrate that A * B is more predictive than either A or B, that C * D is more predictive that C or D, and that (A * B) / (C * D) is more predictive than either (A * B) or (C * D). As that’s a lot of conditions, relatively few features as complex as (A * B) / (C * D) will tend to be created, many more like A * B.

Using 1D decision trees internally to evaluate the features

We’ll look here closer at using decision trees internally to evaluate each feature, both the original and the engineered features.

To evaluate the features, other methods are available, such as simple correlation tests. But creating simple, non-parametric models, and specifically decision trees, has a number of advantages:

- 1D models are fast, both to train and to test, which allows the evaluation process to execute very quickly. We can quickly determine which engineered features are predictive of the target, and how predictive they are.

- 1D models are simple and so may reasonably be trained on small samples of the data, further improving efficiency.

- While 1D decision tree models are relatively simple, they can capture non-monotonic relationships between the features and the target, so can detect where features are predictive even where the relationships are complex enough to be missed by simpler tests, such as tests for correlation.

- This ensures all features useful in themselves, so supports the features being a form of interpretability in themselves.

There are also some limitations of using 1D models to evaluate each feature, particularly: using single features precludes identifying effective combinations of features. This may result in missing some useful features (features that are not useful by themselves but are useful in combination with other features), but does allow the process to execute very quickly. It also ensures that all features produced are predictive on their own, which does aid in interpretability.

The goal is that: where features are useful only in combination with other features, a new feature is created to capture this.

Another limitation associated with this form of feature engineering is that almost all engineered features will have global significance, which is often desirable, but it does mean the tool can miss additionally generating features that are useful only in specific sub-spaces. However, given that the features will be used by interpretable models, such as shallow decision trees, the value of features that are predictive in only specific sub-spaces is much lower than where more complex models (such as large decision trees) are used.

Implications for the complexity of decision trees

FormulaFeatures does create features that are inherently more complex than the original features, which does lower the interpretability of the trees (assuming the engineered features are used by the trees one or more times).

At the same time, using these features can allow substantially smaller decision trees, resulting in a model that is, over all, more accurate and more interpretable. That is, even though the features used in a tree may be complex, the tree, may be substantially smaller (or substantially more accurate when keeping the size to a reasonable level), resulting in a net gain in interpretability.

When FormulaFeatures is used with shallow decision trees, the engineered features generated tend to be put at the top of the trees (as these are the most powerful features, best able to maximize information gain). No single feature can ever split the data perfectly at any step, which means further splits are almost always necessary. Other features are used lower in the tree, which tend to be simpler engineered features (based only only two, or sometimes three, original features), or the original features. On the whole, this can produce fairly interpretable decision trees, and tends to limit the use of the more complex engineered features to a useful level.

ArithmeticFeatures

To explain better some of the context for FormulaFeatures, I’ll describe another tool, also developed by myself, called ArithmeticFeatures, which is similar but somewhat simpler. We’ll then look at some of the limitations associated with ArithmeticFeatures that FormulaFeatures was designed to address.

ArithmeticFeatures is a simple tool, but one I’ve found useful in a number of projects. I initially created it, as it was a recurring theme that it was useful to generate a set of simple arithmetic combinations of the numeric features available for various projects I was working on. I then hosted it on github.

Its purpose, and its signature, are similar to scikit-learn’s PolynomialFeatures. It’s also an unsupervised feature engineering tool.

Given a set of numeric features in a dataset, it generates a collection of new features. For each pair of numeric features, it generates four new features: the result of the +, -, * and / operations.

This can generate a set of features that are useful, but also generates a very large set of features, and potentially redundant features, which means feature selection is necessary after using this.

Formula Features was designed to address the issue that, as indicated above, frequently occurs with unsupervised feature engineering tools including ArithmeticFeatures: an explosion in the numbers of features created. With no target to guide the process, they simply combine the numeric features in as many ways are are possible.

To quickly list the differences:

- FormulaFeatures will generate far fewer features, but each that it generates will be known to be useful. ArithmeticFeatures provides no check as to which features are useful. It will generate features for every combination of original features and arithmetic operation.

- FormulaFeatures will only generate features that are more predictive than either parent feature.

- For any given pair of features, FormulaFeatures will include at most one combination, which is the one that is most predictive of the target.

- FormulaFeatures will continue looping for either a specified number of iterations, or so long as it is able to create more powerful features, and so can create more powerful features than ArithmeticFeatures, which is limited to features based on pairs of original features.

ArithmeticFeatures, as it executes only one iteration (in order to manage the number of features produced), is often quite limited in what it can create.

Imagine a case where the dataset describes houses and the target feature is the house price. This may be related to features such as num_bedrooms, num_bathrooms and num_common rooms. Likely it is strongly related to the total number of rooms, which, let’s say, is: num_bedrooms + num_bathrooms + num_common rooms. ArithmeticFeatures, however is only able to produce engineered features based on pairs of original features, so can produce:

- num_bedrooms + num_bathrooms

- num_bedrooms + num_common rooms

- num_bathrooms + num_common rooms

These may be informative, but producing num_bedrooms + num_bathrooms + num_common rooms (as FormulaFeatures is able to do) is both more clear as a feature, and allows more concise trees (and other interpretable models) than using features based on only pairs of original features.

Another popular feature engineering tool based on arithmetic operations is AutoFeat, which works similarly to ArithmeticFeatures, and also executes in an unsupervised manner, so will create a very large number of features. AutoFeat is able it to execute for multiple iterations, creating progressively more complex features each iterations, but with increasing large numbers of them. As well, AutoFeat supports unary operations, such as square, square root, log and so on, which allows for features such as A²/log(B).

So, I’ve gone over the motivations to create, and to use, FormulaFeatures over unsupervised feature engineering, but should also say: unsupervised methods such as PolynomialFeatures, ArithmeticFeatures, and AutoFeat are also often useful, particularly where feature selection will be performed in any case.

FormulaFeatures focuses more on interpretability (and to some extent on memory efficiency, but the primary motivation was interpretability), and so has a different purpose.

Feature selection with feature engineering

Using unsupervised feature engineering tools such as PolynomialFeatures, ArithmeticFeatures, and AutoFeat increases the need for feature selection, but feature selection is generally performed in any case.

That is, even if using a supervised feature engineering method such as FormulaFeatures, it will generally be useful to perform some feature selection after the feature engineering process. In fact, even if the feature engineering process produces no new features, feature selection is likely still useful simply to reduce the number of the original features used in the model.

While FormulaFeatures seeks to minimize the number of features created, it does not perform feature selection per se, so can generate more features than will be necessary for any given task. We assume the engineered features will be used, in most cases, for a prediction task, but the relevant features will still depend on the specific model used, hyperparameters, evaluation metrics, and so on, which FormulaFeatures cannot predict

What can be relevant is that, using FormulaFeatures, as compared to many other feature engineering processes, the feature selection work, if performed, can be a much simpler process, as there will be far few features to consider. Feature selection can become slow and difficult when working with many features. For example, wrapper methods to select features become intractable.

API Signature

The tool uses the fit-transform pattern, the same as that used by scikit-learn’s PolynomialFeatures and many other feature engineering tools (including ArithmeticFeatures). As such, it’s easy to substitute this tool for others to determine which is the most useful for any given project.

Simple code example

In this example, we load the iris data set (a toy dataset provided by scikit-learn), split the data into train and test sets, use FormulaFeatures to engineer a set of additional features, and fit a Decision Tree using these.

This is fairly typical example. Using FormulaFeatures requires only creating a FormulaFeatures object, fitting it, and transforming the available data. This produces a new dataframe that can be used for any subsequent tasks, in this case to train a classification model.

import pandas as pd

from sklearn.datasets import load_iris

from formula_features import FormulaFeatures

# Load the data

iris = load_iris()

x, y = iris.data, iris.target

x = pd.DataFrame(x, columns=iris.feature_names)

# Split the data into train and test

x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=42)

# Engineer new features

ff = FormulaFeatures()

ff.fit(x_train, y_train)

x_train_extended = ff.transform(x_train)

x_test_extended = ff.transform(x_test)

# Train a decision tree and make predictions

dt = DecisionTreeClassifier(max_depth=4, random_state=0)

dt.fit(x_train_extended, y_train)

y_pred = dt.predict(x_test_extended)

Setting the tool to execute with verbose=1 or verbose=2 allows viewing the process in greater detail.

The github page also provides a file called demo.py, which provides some examples using FormulaFeatures, though the signature is quite simple.

Example getting feature scores

Getting the feature scores, which we show in this example, may be useful for understanding the features generated and for feature selection.

In this example, we use the gas-drift dataset from openml (https://www.openml.org/search?type=data&sort=runs&id=1476&status=active, licensed under Creative Commons).

It largely works the same as the previous example, but also makes a call to the display_features() API, which provides information about the features engineered.

data = fetch_openml('gas-drift')

x = pd.DataFrame(data.data, columns=data.feature_names)

y = data.target

# Drop all non-numeric columns. This is not necessary, but is done here

# for simplicity.

x = x.select_dtypes(include=np.number)

# Divide the data into train and test splits. For a more reliable measure

# of accuracy, cross validation may also be used. This is done here for

# simplicity.

x_train, x_test, y_train, y_test = train_test_split(

x, y, test_size=0.33, random_state=42)

ff = FormulaFeatures(

max_iterations=2,

max_original_features=10,

target_type='classification',

verbose=1)

ff.fit(x_train, y_train)

x_train_extended = ff.transform(x_train)

x_test_extended = ff.transform(x_test)

display_df = x_test_extended.copy()

display_df['Y'] = y_test.values

print(display_df.head())

# Test using the extended features

extended_score = test_f1(x_train_extended, x_test_extended, y_train, y_test)

print(f"F1 (macro) score on extended features: {extended_score}")

# Get a summary of the features engineered and their scores based

# on 1D models

ff.display_features()

This will produce the following report, listing each feature index, F1 macro score, and feature name:

0: 0.438, V9

1: 0.417, V65

2: 0.412, V67

3: 0.412, V68

4: 0.412, V69

5: 0.404, V70

6: 0.409, V73

7: 0.409, V75

8: 0.409, V76

9: 0.414, V78

10: 0.447, ('V65', 'divide', 'V9')

11: 0.465, ('V67', 'divide', 'V9')

12: 0.422, ('V67', 'subtract', 'V65')

13: 0.424, ('V68', 'multiply', 'V65')

14: 0.489, ('V70', 'divide', 'V9')

15: 0.477, ('V73', 'subtract', 'V65')

16: 0.456, ('V75', 'divide', 'V9')

17: 0.45, ('V75', 'divide', 'V67')

18: 0.487, ('V78', 'divide', 'V9')

19: 0.422, ('V78', 'divide', 'V65')

20: 0.512, (('V67', 'divide', 'V9'), 'multiply', ('V65', 'divide', 'V9'))

21: 0.449, (('V67', 'subtract', 'V65'), 'divide', 'V9')

22: 0.45, (('V68', 'multiply', 'V65'), 'subtract', 'V9')

23: 0.435, (('V68', 'multiply', 'V65'), 'multiply', ('V67', 'subtract', 'V65'))

24: 0.535, (('V73', 'subtract', 'V65'), 'multiply', 'V9')

25: 0.545, (('V73', 'subtract', 'V65'), 'multiply', 'V78')

26: 0.466, (('V75', 'divide', 'V9'), 'subtract', ('V67', 'divide', 'V9'))

27: 0.525, (('V75', 'divide', 'V67'), 'divide', ('V73', 'subtract', 'V65'))

28: 0.519, (('V78', 'divide', 'V9'), 'multiply', ('V65', 'divide', 'V9'))

29: 0.518, (('V78', 'divide', 'V9'), 'divide', ('V75', 'divide', 'V67'))

30: 0.495, (('V78', 'divide', 'V65'), 'subtract', ('V70', 'divide', 'V9'))

31: 0.463, (('V78', 'divide', 'V65'), 'add', ('V75', 'divide', 'V9'))

This includes the original features (features 0 through 9) for context. In this example, there is a steady increase in the predictive power of the features engineered.

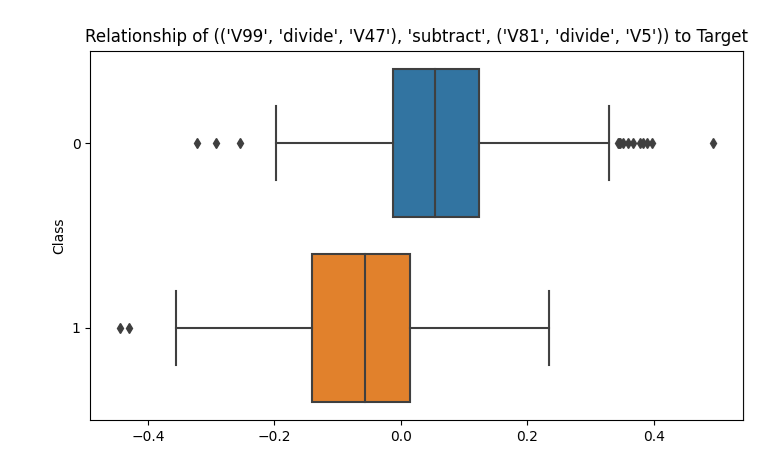

Plotting is also provided. In the case of regression targets, the tool presents a scatter plot mapping each feature to the target. In the case of classification targets, the tool presents a boxplot, giving the distribution of a feature broken down by class label. It is often the case that the original features show little difference in distributions per class, while engineered features can show a distinct difference. For example, one feature generated, (V99 / V47) — (V81 / V5) shows a strong separation:

The separation isn’t perfect, but is cleaner than with any of the original features.

This is typical of the features engineered; while each has an imperfect separation, each is strong, often much more so than for the original features.

Test Results

Testing was performed on synthetic and real data. The tool performed very well on the synthetic data, though this provides more debugging and testing than meaningful evaluation. For real data, a set of 80 random classification datasets from OpenML were selected, though only those having at least two numeric features could be included, leaving 69 files. Testing consisted of performing a single train-test split on the data, then training and evaluating a model on the numeric feature both before and after engineering additional features.

Macro F1 was used as the evaluation metric, evaluating a scikit-learn DecisionTreeClassifer with and without the engineered features, setting setting max_leaf_nodes = 10 (corresponding to 10 induced rules) to ensure an interpretable model.

In many cases, the tool provided no improvement, or only slight improvements, in the accuracy of the shallow decision trees, as is expected. No feature engineering technique will work in all cases. More important is that the tool led to significant increases inaccuracy an impressive number of times. This is without tuning or feature selection, which can further improve the utility of the tool.

Using other interpretable models will give different results, possibly stronger or weaker than was found with shallow decision trees, which did have show quite strong results.

In these tests we found better results limiting max_iterations to 2 compared to 3. This is a hyperparameter, and must be tuned for different datasets. For most datasets, using 2 or 3 works well, while with others, setting higher, even much higher (setting it to None allows the process to continue so long as it can produce more effective features), can work well.

In most cases, the time engineering the new features was just seconds, and in all cases was under two minutes, even with many of the test files having hundreds of columns and many thousands of rows.

The results were:

Dataset Score Score

Original Extended Improvement

isolet 0.248 0.256 0.0074

bioresponse 0.750 0.752 0.0013

micro-mass 0.750 0.775 0.0250

mfeat-karhunen 0.665 0.765 0.0991

abalone 0.127 0.122 -0.0059

cnae-9 0.718 0.746 0.0276

semeion 0.517 0.554 0.0368

vehicle 0.674 0.726 0.0526

satimage 0.754 0.699 -0.0546

analcatdata_authorship 0.906 0.896 -0.0103

breast-w 0.946 0.939 -0.0063

SpeedDating 0.601 0.608 0.0070

eucalyptus 0.525 0.560 0.0349

vowel 0.431 0.461 0.0296

wall-robot-navigation 0.975 0.975 0.0000

credit-approval 0.748 0.710 -0.0377

artificial-characters 0.289 0.322 0.0328

har 0.870 0.870 -0.0000

cmc 0.492 0.402 -0.0897

segment 0.917 0.934 0.0174

JapaneseVowels 0.573 0.686 0.1128

jm1 0.534 0.544 0.0103

gas-drift 0.741 0.833 0.0918

irish 0.659 0.610 -0.0486

profb 0.558 0.544 -0.0140

adult 0.588 0.588 0.0000

anneal 0.609 0.619 0.0104

credit-g 0.528 0.488 -0.0396

blood-transfusion-service-center 0.639 0.621 -0.0177

qsar-biodeg 0.778 0.804 0.0259

wdbc 0.936 0.947 0.0116

phoneme 0.756 0.743 -0.0134

diabetes 0.716 0.661 -0.0552

ozone-level-8hr 0.575 0.591 0.0159

hill-valley 0.527 0.743 0.2160

kc2 0.683 0.683 0.0000

eeg-eye-state 0.664 0.713 0.0484

climate-model-simulation-crashes 0.470 0.643 0.1731

spambase 0.891 0.912 0.0217

ilpd 0.566 0.607 0.0414

one-hundred-plants-margin 0.058 0.055 -0.0026

banknote-authentication 0.952 0.995 0.0430

mozilla4 0.925 0.924 -0.0009

electricity 0.778 0.787 0.0087

madelon 0.712 0.760 0.0480

scene 0.669 0.710 0.0411

musk 0.810 0.842 0.0326

nomao 0.905 0.911 0.0062

bank-marketing 0.658 0.645 -0.0134

MagicTelescope 0.780 0.807 0.0261

Click_prediction_small 0.494 0.494 -0.0001

page-blocks 0.669 0.816 0.1469

hypothyroid 0.924 0.907 -0.0161

yeast 0.445 0.487 0.0419

CreditCardSubset 0.785 0.803 0.0184

shuttle 0.651 0.514 -0.1368

Satellite 0.886 0.902 0.0168

baseball 0.627 0.701 0.0738

mc1 0.705 0.665 -0.0404

pc1 0.473 0.550 0.0770

cardiotocography 1.000 0.991 -0.0084

kr-vs-k 0.097 0.116 0.0187

volcanoes-a1 0.366 0.327 -0.0385

wine-quality-white 0.252 0.251 -0.0011

allbp 0.555 0.553 -0.0028

allrep 0.279 0.288 0.0087

dis 0.696 0.563 -0.1330

steel-plates-fault 1.000 1.000 0.0000

The model performed better with, than without, Formula Features feature engineering 49 out of 69 cases. Some noteworthy examples are:

- Japanese Vowels improved from .57 to .68

- gas-drift improved from .74 to .83

- hill-valley improved from .52 to .74

- climate-model-simulation-crashes improved from .47 to .64

- banknote-authentication improved from .95 to .99

- page-blocks improved from .66 to .81

Using Engineered Features with strong predictive models

We’ve looked so far primarily at shallow decision trees in this article, and have indicated that FormulaFeatures can also generate features useful for other interpretable models. But, this leaves the question of their utility with more powerful predictive models. On the whole, FormulaFeatures is not useful in combination with these tools.

For the most part, strong predictive models such as boosted tree models (e.g., CatBoost, LGBM, XGBoost), will be able to infer the patterns that FormulaFeatures captures in any case. Though they will capture these patterns in the form of large numbers of decision trees, combined in an ensemble, as opposed to single features, the effect will be the same, and may often be stronger, as the trees are not limited to simple, interpretable operators (+, -, *, and /).

So, there may not be an appreciable gain in accuracy using engineered features with strong models, even where they match the true f(x) closely. It can be worth trying FormulaFeatures in this case, and I’ve found it helpful with some projects, but most often the gain is minimal.

It’s really with smaller (interpretable) models where tools such as FormulaFeatures become most useful.

Working with very large numbers of original features

One limitation of feature engineering based on arithmetic operations is that it can be slow where there are a very large number of original features, and it’s relatively common in data science to encounter tables with hundreds of features, or more. This affects unsupervised feature engineering methods much more severely, but supervised methods can also be significantly slowed down.

In these cases, creating even pairwise engineered features can also invite overfitting, as an enormous number of features can be produced, with some performing very well simply by chance.

To address this, FormulaFeatures limits the number of original columns considered when the input data has many columns. So, where datasets have large numbers of columns, only the most predictive are considered after the first iteration. The subsequent iterations perform as normal; there is simply some pruning of the original features used during this first iteration.

Unary Functions

By default, Formula Features does not incorporate unary functions, such as square, square root, or log (though it can do so if the relevant parameters are specified). As indicated above, some tools, such as AutoFeat also optionally support these operations, and they can be valuable at times.

In some cases, it may be that a feature such as A² / B predicts the target better than the equivalent form without the square operator: A / B. However, including unary operators can lead to misleading features if not substantially correct, and may not significantly increase the accuracy of any models using them.

When working with decision trees, so long as there is a monotonic relationship between the features with and without the unary functions, there will not be any change in the final accuracy of the model. And, most unary functions maintain a rank order of values (with exceptions such as sin and cos, which may reasonably be used where cyclical patterns are strongly suspected). For example, the values in A will have the same rank values as A² (assuming all values in A are positive), so squaring will not add any predictive power — decision trees will treat the features equivalently.

As well, in terms of explanatory power, simpler functions can often capture nearly as much of the pattern as can more complex functions: simpler function such as A / B are generally more comprehensible than formulas such as A² / B, but still convey the same idea, that it’s the ratio of the two features that’s relevant.

Limiting the set of operators used by default also allows the process to execute faster and in a more regularized manner.

Coefficients

A similar argument may be made for including coefficients in engineered features. A feature such as 5.3A + 1.4B may capture the relationship A and B have with Y better than the simpler A + B, but the coefficients are often unnecessary, prone to be calculated incorrectly, and inscrutable even where approximately correct.

And, in the case of multiplication and division operations, the coefficients are most likely irrelevant (at least when used with decision trees). For example, 5.3A * 1.4B will be functionally equivalent to A * B for most purposes, as the difference is a constant which can be divided out. Again, there is a monotonic relationship with and without the coefficients, and thus the features are equivalent when used with models, such as decision trees, that are concerned only with the ordering of feature values, not their specific values.

Scaling

Scaling the features generated by FormulaFeatures is not necessary if used with decision trees (or similar model types such as Additive Decision Trees, rules, or decision tables). But, for some model types, such as SVM, kNN, ikNN, logistic regression, and others (including any that work based on distance calculations between points), the features engineered by Formula Features may be on quite different scales than the original features, and will need to be scaled. This is straightforward to do, and is simply a point to remember.

Explainable Machine Learning

In this article, we looked at interpretable models, but should indicate, at least quickly, FormulaFeatures can also be useful for what are called explainable models and it may be that this is actually a more important application.

To explain the idea of explainability: where it is difficult or impossible to create interpretable models with sufficient accuracy, we often instead develop black-box models (e.g. boosted models or neural networks), and then create post-hoc explanations of the model. Doing this is referred to as explainable AI (or XAI). These explanations try to make the black-boxes more understandable. Technique for this include: feature importances, ALE plots, proxy models, and counterfactuals.

These can be important tools in many contexts, but they are limited, in that they can provide only an approximate understanding of the model. As well, they may not be permissible in all environments: in some situations (for example, for safety, or for regulatory compliance), it can be necessary to strictly use interpretable models: that is, to use models where there are no questions about how the model behaves.

And, even where not strictly required, it’s quite often preferable to use an interpretable model where possible: it’s often very useful to have a good understanding of the model and of the predictions made by the model.

Having said that, using black-box models and post-hoc explanations is very often the most suitable choice for prediction problems. As FormulaFeatures produces valuable features, it can support XAI, potentially making feature importances, plots, proxy models, or counter-factuals more interpretable.

For example, it may not be feasible to use a shallow decision tree as the actual model, but it may be used as a proxy model: a simple, interpretable model that approximates the actual model. In these cases, as much as with interpretable models, having a good set of engineered features can make the proxy models more interpretable and more able to capture the behaviour of the actual model.

Installation

The tool uses a single .py file, which may be simply downloaded and used. It has no dependencies other than numpy, pandas, matplotlib, and seaborn (used to plot the features generated).

Conclusions

FormulaFeatures is a tool to engineer features based on arithmetic relationships between numeric features. The features can be informative in themselves, but are particularly useful when used with interpretable ML models.

While this tends to not improve the accuracy for all models, it does quite often improve the accuracy of interpretable models such as shallow decision trees.

Consequently, it can be a useful tool to make it more feasible to use interpretable models for prediction problems — it may allow the use of interpretable models for problems that would otherwise be limited to black box models. And where interpretable models are used, it may allow these to be more accurate or interpretable. For example, with a classification decision tree, we may be able to achieve similar accuracy using fewer nodes, or may be able to achieve higher accuracy using the same number of nodes.

FormulaFeatures can very often support interpretable ML well, but there are some limitations. It does not work with categorical or other non-numeric features. And, even with numeric features, some interactions may be difficult to capture using arithmetic functions. Where there is a more complex relationship between pairs of features and the target column, it may be more appropriate to use ikNN. This works based on nearest neighbors, so can capture relationships of arbitrary complexity between features and the target.

We focused on standard decision trees in this article, but for the most effective interpretable ML, it can be useful to try other interpretable models. It’s straightforward to see, for example, how the ideas here will apply directly to Genetic Decision Trees, which are similar to standard decision trees, simply created using bootstrapping and a genetic algorithm. Similarly for most other interpretable models.

All images are by the author

FormulaFeatures: A Tool to Generate Highly Predictive Features for Interpretable Models was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

FormulaFeatures: A Tool to Generate Highly Predictive Features for Interpretable Models