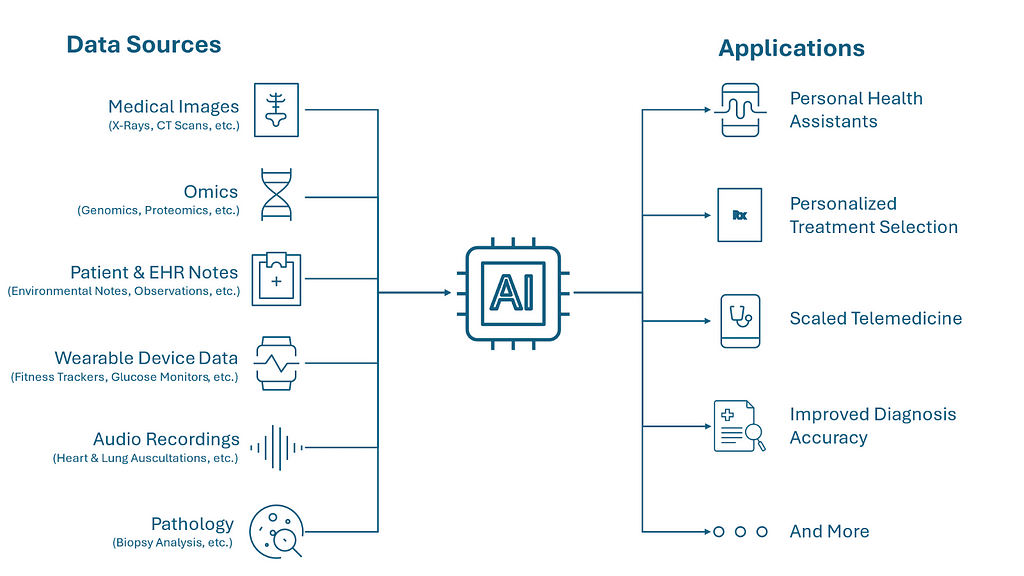

Integrating multimodal data enables a new generation of medical AI systems to better capture doctors’ reasoning and decision-making processes

A multimodal AI model draws on data in several formats, such as text, images, and audio, to give users a more comprehensive understanding of a medical situation. These models are proliferating because they can process and integrate multiple data types, painting a more holistic picture of health than any single data type can. With the rise of transformer architectures and large language models (LLMs), which generalize well across data modalities, developers are gaining new tools to synthesize these formats. Google’s multimodal Gemini models and other cutting-edge generative AI models can understand and synthesize text, video, images, audio, and code (genetic or computational). While there have been exciting developments in medical AI over the past several years, adoption has been slow, and existing applications often target very specific, narrow use cases. The future of medical AI lies in multimodal applications because they mirror the clinical process of doctors, who must weigh many factors and data sources when making evaluations. Developers and companies who can execute in this space of immense potential will occupy a vital role in the future of AI-assisted medicine.

Benefits of working with multimodal data

Medical data is inherently multimodal, and AI systems should reflect this reality. When evaluating patients, doctors draw on many data sources, such as patient notes, medical images, audio recordings, and genetic sequences. Traditionally, AI applications have been designed to handle specific, narrowly defined tasks within individual data types. For instance, an AI system might excel at identifying lung nodules on a CT scan yet be unable to integrate that finding with a patient’s reported symptoms, family history, and genetic information to help a doctor diagnose lung cancer. By contrast, multimodal AI applications can integrate diverse data types, combining the flexibility of LLMs with the expertise of specialist AI systems. Such systems can also outperform single-modal systems on traditional AI tasks, with studies reporting accuracy improvements of 6–33% for multimodal systems.

Multimodal AI models also help break down silos between medical specialties. The evolution of medicine, driven by increasing specialization and a proliferation of research and data, has created a fragmented landscape in which fields such as radiology, internal medicine, and oncology can operate in silos. Caring for patients with complex diseases often requires collaboration across a large team of specialists, and critical insights can be lost to poor communication. Multimodal AI models can bridge these gaps by capturing knowledge from across specialties, helping ensure that patients benefit from the latest advances in every relevant field.

Overview of different medical data modalities

Medical data comprise over 30% of all data produced worldwide and come in many forms. Some of the most prominent forms are listed below (non-exhaustive):

Medical Images

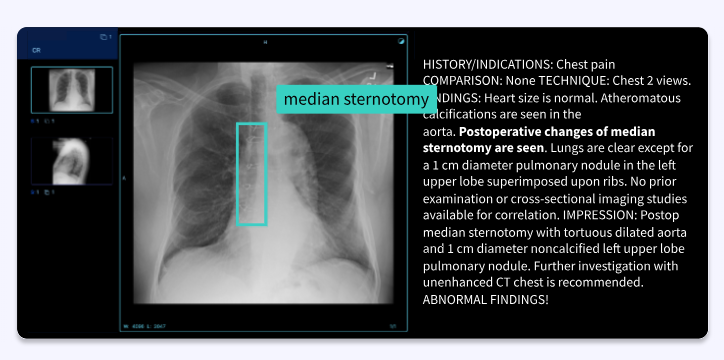

Medical imaging plays such a critical role in diagnosis and treatment planning that it has an entire specialty devoted to it (radiology). CT scans and X-rays are commonly used to visualize bone structures and detect fractures or tumors, while ultrasound is essential for monitoring fetal development and examining soft tissues. Doctors use pathology slide images to analyze tissue samples for diseases like cancer. AI algorithms such as convolutional neural networks (CNNs) learn to identify patterns and anomalies in these images by training on large volumes of labeled images. Such tools help radiologists and other doctors make faster and more accurate interpretations.
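To make the CNN idea concrete, here is a minimal, pure-Python sketch of the operation a convolutional layer repeats many times: sliding a small filter over an image and summing the element-wise products at each position. It is an illustration under stated assumptions: the 5x5 toy "scan" and the hand-set vertical-edge kernel are made up for the example, whereas a real CNN learns many kernels from labeled images and stacks them with nonlinearities and pooling.

```python
# Minimal sketch of the core CNN operation (illustrative, not a trained model).
# Like most deep learning libraries, this computes cross-correlation
# (the kernel is not flipped), with no padding and a stride of 1.

def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and one image patch.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map: list[list[float]]) -> list[list[float]]:
    # Keep only positive responses, as a CNN activation layer would.
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 5x5 "scan" (illustrative assumption): a bright vertical band in
# columns 2-3 on a dark background.
image = [[0, 0, 1, 1, 0] for _ in range(5)]

# Hand-set kernel (illustrative assumption) that responds to dark-to-bright
# transitions from left to right. A trained CNN would learn such weights.
kernel = [[-1, 0, 1] for _ in range(3)]

feature_map = relu(conv2d(image, kernel))
for row in feature_map:
    print(row)  # [3.0, 3.0, 0.0]
```

The positive responses mark where the band's left edge sits; the right edge produces a negative response that ReLU discards, so a separate kernel would be needed to detect it.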

Omics

Omics data, including genomics, transcriptomics, and proteomics, have exploded in recent years thanks to falling sequencing costs, and they have revolutionized personalized medicine by revealing the molecular underpinnings of disease. In a multimodal medical AI system, omics data can help estimate a patient’s susceptibility to certain diseases and likely response to treatment options. For example, certain mutations in the BRCA1 and BRCA2 genes make a patient significantly more likely to develop certain cancers, notably breast and ovarian cancer.

Patient & EHR Notes

Traditionally, patient notes (clinical observations, treatment plans, etc.) have been challenging to analyze because they lack structure. LLMs, however, can extract insights from these notes, identify patterns, and support large-scale analyses that were previously impractical. For example, LLMs can read through notes on prospective clinical trial participants and identify those who meet eligibility requirements, a previously labor-intensive task.
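One way to structure the eligibility-screening example is to have the LLM turn each free-text note into a fixed set of fields and then apply the trial's criteria as ordinary code, which keeps the inclusion logic auditable. The sketch below assumes that design; the prompt wording, the JSON schema, and names such as `TrialCriteria` and `parse_extraction` are hypothetical, and the model call itself is left out, so the usage example feeds in replies an LLM might plausibly return.

```python
import json
from dataclasses import dataclass

# Hypothetical extraction prompt; the wording and JSON schema are assumptions.
PROMPT_TEMPLATE = (
    "Read the clinical note and return only JSON with the keys "
    '"age" (integer), "diagnoses" (list of strings) and '
    '"prior_treatments" (list of strings).\n\nNote:\n{note}'
)


@dataclass(frozen=True)
class TrialCriteria:
    # Hypothetical eligibility rules; strings are compared in lower case.
    min_age: int
    max_age: int
    required_diagnosis: str
    excluded_treatments: frozenset[str]


def build_prompt(note: str) -> str:
    return PROMPT_TEMPLATE.format(note=note)


def parse_extraction(raw: str) -> dict:
    """Validate the model's JSON reply; reject malformed output instead of guessing."""
    data = json.loads(raw)
    if not isinstance(data.get("age"), int):
        raise ValueError("reply is missing an integer 'age'")
    data["diagnoses"] = [d.lower() for d in data.get("diagnoses", [])]
    data["prior_treatments"] = [t.lower() for t in data.get("prior_treatments", [])]
    return data


def is_eligible(record: dict, criteria: TrialCriteria) -> bool:
    if not criteria.min_age <= record["age"] <= criteria.max_age:
        return False
    if criteria.required_diagnosis not in record["diagnoses"]:
        return False
    # Exclude anyone who has already received a disallowed treatment.
    return not criteria.excluded_treatments.intersection(record["prior_treatments"])


criteria = TrialCriteria(18, 75, "non-small cell lung cancer", frozenset({"immunotherapy"}))

# Replies a (hypothetical) LLM might return for two different notes.
reply_a = '{"age": 62, "diagnoses": ["Non-small cell lung cancer"], "prior_treatments": ["chemotherapy"]}'
reply_b = '{"age": 58, "diagnoses": ["non-small cell lung cancer"], "prior_treatments": ["Immunotherapy"]}'

print(is_eligible(parse_extraction(reply_a), criteria))  # True
print(is_eligible(parse_extraction(reply_b), criteria))  # False
```

Separating extraction from rule checking also means a malformed or incomplete reply fails loudly in `parse_extraction` rather than silently admitting or excluding a patient.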

Wearable Device Data

Health monitoring sensors, such as wearable fitness trackers and continuous glucose monitors, measure physiological signals like heart rate, blood pressure, sleep patterns, and glucose levels over time. AI applications can analyze these time series to detect trends and predict health events. Such applications can help patients by offering personalized health recommendations and help doctors monitor patients’ conditions outside the hospital.
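A simple way to picture trend detection on wearable data is to compare each new reading with a rolling baseline of the preceding days and flag large deviations. The sketch below does this with a trailing-window z-score; the window length, threshold, minimum standard deviation, and the 14 days of resting heart-rate values are illustrative assumptions, and deployed systems typically rely on more robust, clinically validated methods.

```python
from statistics import mean, stdev


def flag_anomalies(
    readings: list[float],
    window: int = 7,
    threshold: float = 3.0,
    min_std: float = 1.0,
) -> list[int]:
    """Return indices of readings that deviate from the trailing-window mean
    by more than `threshold` standard deviations.

    `min_std` floors the standard deviation so that an unusually flat
    baseline does not turn tiny fluctuations into alerts.
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = max(stdev(baseline), min_std)
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Synthetic daily resting heart rate (beats per minute) over 14 days.
resting_hr = [62, 61, 63, 62, 60, 61, 62, 63, 61, 62, 60, 74, 75, 76]

print(flag_anomalies(resting_hr))  # [11]
```

Only day 11, where the sustained rise begins, is flagged. Once elevated values enter the baseline they inflate its mean and standard deviation, which is one reason real systems often freeze the baseline or estimate it robustly.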

Audio Recordings

Audio recordings, such as heart and lung auscultations, are commonly used to diagnose certain diseases. Doctors use heart auscultation to characterize heart murmurs, including their timing and intensity, while lung auscultation can help identify conditions such as pneumonia. AI systems can analyze these recordings to detect abnormalities and support faster, cheaper diagnosis.

Pathology

Pathology data, derived from tissue samples and microscopic images, play a critical role in diagnosing diseases such as cancer. AI algorithms can analyze these data sources to identify abnormal cell structures, classify tissue types, and detect patterns indicative of disease. By processing vast amounts of pathology data, AI can assist pathologists in making more accurate diagnoses, flagging potential areas of concern, and even predicting disease progression. In fact, a team of researchers at Harvard Medical School and MIT recently launched a multimodal generative AI copilot for human pathology to assist pathologists with common medical tasks.

Applications of multimodal AI models

Multimodal algorithms have the potential to unlock a new paradigm in AI-powered medical applications. One promising application of multimodal AI is personalized medicine, where a system draws on data such as a patient’s condition, medical history, lifestyle, and genome to predict the most effective treatments for that particular patient. Consider an application designed to identify the most effective treatment options for a lung cancer patient. This application could consider the patient’s genetic profile, pathology (tissue sample) images and notes, radiology images (lung CT scans) and notes, and clinical notes on medical history (to capture factors like smoking history and environmental exposures). Using all these data sources, the application could recommend the treatment option with the highest expected efficacy for the patient’s unique profile. Such an approach has already shown promising results in a study by Huang et al., in which researchers predicted patients’ responses to standard-of-care chemotherapeutic drugs from their gene expression profiles with >80% accuracy. This approach could help maximize treatment effectiveness and reduce the trial and error often involved in finding the right medication or intervention.
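How such a system combines modalities is a design choice the article leaves open. One simple option, named here as an assumption rather than as the method used in the cited study, is late fusion: separate models each estimate the probability that a treatment will work, and a weighted average combines them, with the weights renormalized when a modality is missing. The modality weights, the per-modality scores, and the function names below are hypothetical.

```python
from typing import Optional

# Hypothetical trust weights per modality (e.g., tuned on validation data).
WEIGHTS = {"genomics": 0.4, "pathology": 0.3, "radiology": 0.2, "clinical_notes": 0.1}


def late_fusion(scores: dict[str, Optional[float]], weights: dict[str, float]) -> float:
    """Weighted average of per-modality probabilities.

    Modalities with no data (None) are skipped and the remaining weights
    renormalized, so a patient without, say, genomic testing still gets a score.
    """
    available = {m: s for m, s in scores.items() if s is not None}
    if not available:
        raise ValueError("no modality has data for this patient")
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * s for m, s in available.items()) / total_weight


def rank_treatments(
    options: dict[str, dict[str, Optional[float]]], weights: dict[str, float]
) -> list[tuple[str, float]]:
    """Score each treatment option and sort from most to least promising."""
    fused = {name: late_fusion(scores, weights) for name, scores in options.items()}
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


# Hypothetical per-modality predicted response probabilities for one patient.
options = {
    "chemotherapy": {"genomics": 0.55, "pathology": 0.60, "radiology": 0.50, "clinical_notes": 0.65},
    "targeted_therapy": {"genomics": 0.85, "pathology": 0.55, "radiology": 0.50, "clinical_notes": 0.60},
}

for name, score in rank_treatments(options, WEIGHTS):
    print(f"{name}: {score:.3f}")
```

With these made-up numbers, targeted therapy ranks first (about 0.665 versus 0.565). Setting both genomics scores to `None` renormalizes the remaining weights and flips the ranking, a small illustration of how much a missing modality can change a recommendation.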

Another critical use case is improving the speed and accuracy of diagnosis and prognosis. By integrating data sources such as medical imaging, lab results, and patient notes, multimodal medical AI systems can give doctors holistic insights. For example, Tempus Next uses waveform data from echocardiograms and ECGs, EHR text data, and abdominal radiological images (CT scans, ultrasounds) to help cardiologists diagnose and predict patient risk for cardiovascular conditions like abdominal aortic aneurysms and atrial fibrillation. Optellum’s Virtual Nodule Clinic takes a similar approach to assist in diagnosing lung cancer using CT scans and clinical notes. Applications like these can both improve diagnostic accuracy and save doctors time, helping to address the ongoing physician shortage and drive down healthcare costs.

Multimodal AI will also enable great advances in remote patient monitoring and telemedicine by integrating data from wearable devices, home monitoring systems, and patient self-reported notes to provide continuous, real-time insights into a patient’s health status. This capability is particularly valuable for managing chronic conditions, where ongoing monitoring can detect early signs of deterioration and prompt timely interventions. For example, an AI system might monitor a patient’s sleep data from an Eight Sleep Pod and blood glucose data from Levels (continuous glucose monitoring) to identify deterioration in a patient with pre-diabetes. Doctors can use this early warning to make proactive recommendations to help patients avoid further declines. This technology will help reduce hospital readmissions and improve the overall management of chronic diseases, making healthcare more accessible and reducing the overall load on the healthcare system.

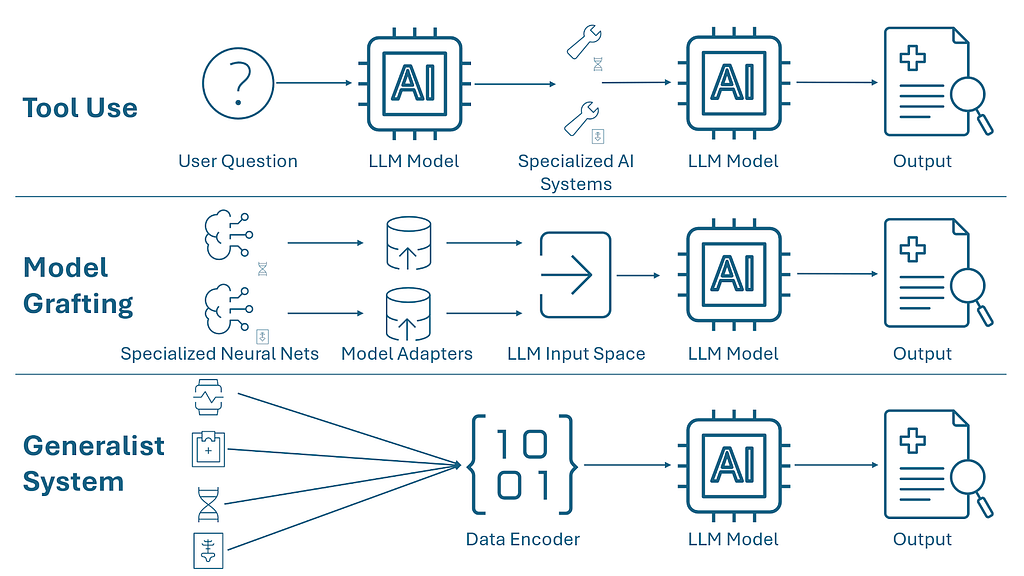

Approaches to building multimodal AI models

Researchers are still experimenting with different approaches to building multimodal medical AI systems, and the research remains at an early stage. Teams at Google have explored three primary approaches:

- Tool Use — In this approach, a master LLM outsources the analysis of different data sources to specialized software subsystems trained on that data form. For example, an LLM might forward a chest X-ray to a radiology AI system and ECG analysis to a specialized waveform analysis system and then integrate the responses with patient notes to evaluate heart health. This method allows for flexibility and independence between subsystems, enabling the use of best-in-class tools for each specific task.

- Model Grafting — This method involves adapting specialized neural networks for each relevant domain and integrating them directly into the LLM. For instance, a neural network trained to interpret medical images can be grafted onto an LLM by mapping its output directly to the LLM’s input space. This approach leverages existing optimized models and allows for modular development, although it requires creating adapters for each specific model and domain.

- Generalist Systems — The most ambitious approach involves building a single, integrated system capable of processing all data modalities natively. This method uses a unified model, such as Med-PaLM M, which combines a language model with a vision encoder to handle diverse data types. While this approach maximizes flexibility and information transfer, it also comes with higher computational costs and potential challenges in domain specialization and system debuggability.
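The tool-use pattern can be sketched as a registry of specialist subsystems plus a step that executes whatever tool calls the controller LLM requests and packages the results for its final reasoning step. Everything here is a hypothetical stand-in: the tool names, the call format, and the stubbed model outputs are assumptions, and the LLM calls themselves are omitted.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    tool: str
    summary: str


# Hypothetical specialist subsystems; real ones would wrap trained models.
def chest_xray_model(image_id: str) -> Finding:
    return Finding("chest_xray", f"{image_id}: mild cardiomegaly (stub output)")


def ecg_model(recording_id: str) -> Finding:
    return Finding("ecg", f"{recording_id}: possible atrial fibrillation (stub output)")


TOOL_REGISTRY: dict[str, Callable[[str], Finding]] = {
    "chest_xray": chest_xray_model,
    "ecg": ecg_model,
}


def execute_tool_calls(tool_calls: list[dict[str, str]]) -> list[Finding]:
    """Run the calls the controller LLM requested; unknown tools are surfaced, not dropped."""
    findings = []
    for call in tool_calls:
        tool = TOOL_REGISTRY.get(call["tool"])
        if tool is None:
            findings.append(Finding(call["tool"], "no such tool; needs human review"))
        else:
            findings.append(tool(call["input"]))
    return findings


def build_synthesis_prompt(notes: str, findings: list[Finding]) -> str:
    """Combine patient notes and specialist outputs for the controller LLM's final step."""
    report = "\n".join(f"- [{f.tool}] {f.summary}" for f in findings)
    return f"Patient notes:\n{notes}\n\nSpecialist findings:\n{report}\n\nAssess heart health."


# Tool calls a controller LLM might emit (the call format is an assumption).
calls = [
    {"tool": "chest_xray", "input": "cxr-001"},
    {"tool": "ecg", "input": "ecg-001"},
]
prompt = build_synthesis_prompt(
    "68-year-old with palpitations and a history of hypertension.",
    execute_tool_calls(calls),
)
print(prompt)
```

Keeping each specialist behind a uniform interface is what gives the approach its flexibility: swapping in a better ECG model changes only one registry entry.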

Challenges to implementing multimodal AI models

While building multimodal AI models holds great promise, there are multiple challenges to implementing working systems. Some challenges include:

- Data Annotation — To enable supervised learning, machine learning algorithms require data annotated by expert human labelers with the correct features identified. It can be challenging to identify experts across domains to label different sorts of data modalities. Model builders should consider partnering with dedicated data annotation providers with expertise across modalities, such as Centaur Labs.

- Avoiding Bias — One of the most significant risks of deploying AI systems in medical contexts is their potential to exacerbate existing biases and inequities in healthcare. Multimodal systems may further ingrain bias because underrepresented populations are more likely to be missing data in one or more of the modalities a system relies on. To mitigate this, model builders should audit performance separately across demographic subgroups and handle missing modalities explicitly rather than simply excluding patients with incomplete records.

- Regulation — Data privacy regulations like HIPAA impose strict controls on the sharing and use of patient data, making it challenging for developers to integrate and associate data across different modalities. This necessitates additional development efforts to ensure compliance.

- Adoption and Trust — For many traditional AI systems, the greatest hurdle to impact has been driving adoption and trust among medical users. Doctors are concerned about the accuracy and consistency of AI outputs and do not want to endanger patients by trusting these systems before they have been shown to be reliable. Multimodal AI models will face similar hurdles. Developers must work closely with the end users of such systems to build trust and ensure that the systems fit into existing clinical workflows.

- Lack of Data Format Sharing Standardization — For many data formats (e.g., tissue images), there are no widely adopted standard protocols for sharing data between providers. This lack of interoperability can hinder the integration of data sources needed to develop robust AI models. To speed the development and adoption of AI systems that operate in (currently) unstandardized medical data domains, the research and development community should develop universal standards and frameworks for data sharing and ensure compliance across institutions.
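For the bias risk in the list above, one concrete practice is to break evaluation results down by subgroup and to track, for each group, how often records are missing a modality. The sketch below assumes each evaluation record carries a group label, a prediction, a ground-truth label, and its per-modality inputs; the field names, the 0.05 gap tolerance, and the eight synthetic records are illustrative assumptions.

```python
from collections import defaultdict


def audit_by_group(records: list[dict], modalities: list[str]) -> dict[str, dict[str, float]]:
    """Per-subgroup accuracy and share of records missing at least one modality."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "incomplete": 0})
    for r in records:
        c = counts[r["group"]]
        c["n"] += 1
        c["correct"] += int(r["prediction"] == r["label"])
        c["incomplete"] += int(any(r["inputs"].get(m) is None for m in modalities))
    return {
        group: {"accuracy": c["correct"] / c["n"], "missing_rate": c["incomplete"] / c["n"]}
        for group, c in counts.items()
    }


def flag_gaps(report: dict[str, dict[str, float]], max_gap: float = 0.05) -> list[str]:
    """Groups whose accuracy trails the best-performing group by more than max_gap."""
    best = max(stats["accuracy"] for stats in report.values())
    return sorted(g for g, stats in report.items() if best - stats["accuracy"] > max_gap)


# Synthetic evaluation results: (group, prediction, label, genomics input or None).
rows = [
    ("A", 1, 1, "seq"), ("A", 0, 0, "seq"), ("A", 1, 1, "seq"), ("A", 0, 1, "seq"),
    ("B", 1, 1, None), ("B", 0, 1, None), ("B", 1, 0, "seq"), ("B", 0, 0, "seq"),
]
records = [
    {"group": g, "prediction": p, "label": y, "inputs": {"imaging": "ct", "genomics": gen}}
    for g, p, y, gen in rows
]

report = audit_by_group(records, ["imaging", "genomics"])
print(report)
print(flag_gaps(report))  # ['B']
```

In this toy data, the lower-accuracy group is also the one missing genomic data half the time, which is the pattern the bias discussion warns about. A real audit would add confidence intervals, since small subgroups produce noisy estimates.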

Conclusion

Multimodal AI represents the future of medical applications, offering the potential to revolutionize healthcare by expanding applications’ flexibility, accuracy, and capabilities through integrated and holistic data use. If these applications are effectively developed and deployed, they promise to cut medical costs, expand accessibility, and deliver higher-quality patient care and outcomes.

The greatest advances in knowledge and technology often come from synthesizing insights from different fields. Consider Leonardo da Vinci, who used his knowledge of drawing and fluid dynamics to inform his studies of the heart and physiology. Medical AI is no different. By bringing discoveries from computer science into medicine, developers unleashed an initial wave of breakthroughs. Now, integrating multiple data modalities promises to drive a second wave of innovation fueled by ever-smarter AI systems.

Doctors Leverage Multimodal Data; Medical AI Should Too was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
