Using Gemini + Text to Speech + MoviePy to create a video, and what this says about what GenAI is becoming rapidly useful for

Like most everyone, I was flabbergasted by NotebookLM and its ability to generate a podcast from a set of documents. And then, I got to thinking: “how do they do that, and where can I get some of that magic?” How easy would it be to replicate?

Goal: Create a video talk from an article

I don’t want to create a podcast, but I’ve often wished I could generate slides and a video talk from my blog posts —some people prefer paging through slides, and others prefer to watch videos, and this would be a good way to meet them where they are. In this article, I’ll show you how to do this.

The full code for this article is on GitHub — in case you want to follow along with me. And the goal is to create this video from this article:

1. Initialize the LLM

I am going to use Google Gemini Flash because (a) it is the least expensive frontier LLM today, (b) it’s multimodal in that it can read and understand images also, and (c) it supports controlled generation, meaning that we can make sure the output of the LLM matches a desired structure.

import pdfkit

import os

import google.generativeai as genai

from dotenv import load_dotenv

load_dotenv("../genai_agents/keys.env")

genai.configure(api_key=os.environ["GOOGLE_API_KEY"])

Note that I’m using Google Generative AI and not Google Cloud Vertex AI. The two packages are different. The Google one supports Pydantic objects for controlled generation; the Vertex AI one only supports JSON for now.

2. Get a PDF of the article

I used Python to download the article as a PDF, and upload it to a temporary storage location that Gemini can read:

ARTICLE_URL = "https://lakshmanok.medium...."

pdfkit.from_url(ARTICLE_URL, "article.pdf")

pdf_file = genai.upload_file("article.pdf")

Unfortunately, something about medium prevents pdfkit from getting the images in the article (perhaps because they are webm and not png …). So, my slides are going to be based on just the text of the article and not the images.

3. Create lecture notes in JSON

Here, the data format I want is a set of slides each of which has a title, key points, and a set of lecture notes. The lecture as a whole has a title and an attribution also.

class Slide(BaseModel):

title: str

key_points: List[str]

lecture_notes: str

class Lecture(BaseModel):

slides: List[Slide]

lecture_title: str

based_on_article_by: str

Let’s tell Gemini what we want it to do:

lecture_prompt = """

You are a university professor who needs to create a lecture to

a class of undergraduate students.

* Create a 10-slide lecture based on the following article.

* Each slide should contain the following information:

- title: a single sentence that summarizes the main point

- key_points: a list of between 2 and 5 bullet points. Use phrases, not full sentences.

- lecture_notes: 3-10 sentences explaining the key points in easy-to-understand language. Expand on the points using other information from the article.

* Also, create a title for the lecture and attribute the original article's author.

"""

The prompt is pretty straightforward — ask Gemini to read the article, extract key points and create lecture notes.

Now, invoke the model, passing in the PDF file and asking it to populate the desired structure:

model = genai.GenerativeModel(

"gemini-1.5-flash-001",

system_instruction=[lecture_prompt]

)

generation_config={

"temperature": 0.7,

"response_mime_type": "application/json",

"response_schema": Lecture

}

response = model.generate_content(

[pdf_file],

generation_config=generation_config,

stream=False

)

A few things to note about the code above:

- We pass in the prompt as the system prompt, so that we don’t need to keep sending in the prompt with new inputs.

- We specify the desired response type as JSON, and the schema to be a Pydantic object

- We send the PDF file to the model and tell it generate a response. We’ll wait for it to complete (no need to stream)

The result is JSON, so extract it into a Python object:

lecture = json.loads(response.text)

For example, this is what the 3rd slide looks like:

{'key_points': [

'Silver layer cleans, structures, and prepares data for self-service analytics.',

'Data is denormalized and organized for easier use.',

'Type 2 slowly changing dimensions are handled in this layer.',

'Governance responsibility lies with the source team.'

],

'lecture_notes': 'The silver layer takes data from the bronze layer and transforms it into a usable format for self-service analytics. This involves cleaning, structuring, and organizing the data. Type 2 slowly changing dimensions, which track changes over time, are also handled in this layer. The governance of the silver layer rests with the source team, which is typically the data engineering team responsible for the source system.',

'title': 'The Silver Layer: Data Transformation and Preparation'

}

4. Convert to PowerPoint

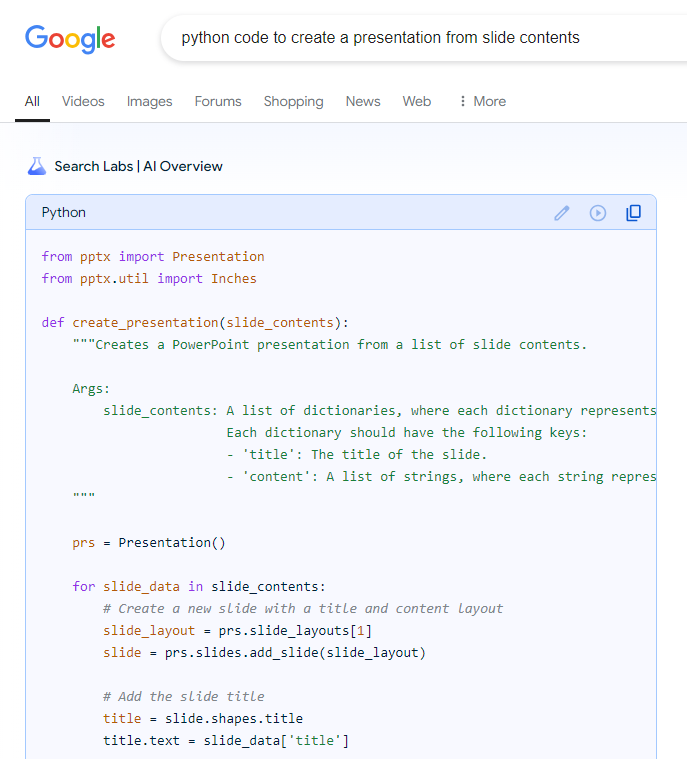

We can use the Python package pptx to create a Presentation with notes and bullet points. The code to create a slide looks like this:

for slidejson in lecture['slides']:

slide = presentation.slides.add_slide(presentation.slide_layouts[1])

title = slide.shapes.title

title.text = slidejson['title']

# bullets

textframe = slide.placeholders[1].text_frame

for key_point in slidejson['key_points']:

p = textframe.add_paragraph()

p.text = key_point

p.level = 1

# notes

notes_frame = slide.notes_slide.notes_text_frame

notes_frame.text = slidejson['lecture_notes']

The result is a PowerPoint presentation that looks like this:

Not very fancy, but definitely a great starting point for editing if you are going to give a talk.

5. Read the notes aloud and save audio

Well, we were inspired by a podcast, so let’s see how to create just an audio of someone summarizing the article.

We already have the lecture notes, so let’s create audio files of each of the slides.

Here’s the code to take some text, and have an AI voice read it out. We save the resulting audio into an mp3 file:

from google.cloud import texttospeech

def convert_text_audio(text, audio_mp3file):

"""Synthesizes speech from the input string of text."""

tts_client = texttospeech.TextToSpeechClient()

input_text = texttospeech.SynthesisInput(text=text)

voice = texttospeech.VoiceSelectionParams(

language_code="en-US",

name="en-US-Standard-C",

ssml_gender=texttospeech.SsmlVoiceGender.FEMALE,

)

audio_config = texttospeech.AudioConfig(

audio_encoding=texttospeech.AudioEncoding.MP3

)

response = tts_client.synthesize_speech(

request={"input": input_text, "voice": voice, "audio_config": audio_config}

)

# The response's audio_content is binary.

with open(audio_mp3file, "wb") as out:

out.write(response.audio_content)

print(f"{audio_mp3file} written.")

What’s happening in the code above?

- We are using Google Cloud’s text to speech API

- Asking it to use a standard US accent female voice. If you were doing a podcast, you’d pass in a “speaker map” here, one voice for each speaker.

- We then give it in the input text, ask it generate audio

- Save the audio as an mp3 file. Note that this has to match the audio encoding.

Now, create audio by iterating through the slides, and passing in the lecture notes:

for slideno, slide in enumerate(lecture['slides']):

text = f"On to {slide['title']} n"

text += slide['lecture_notes'] + "nn"

filename = os.path.join(outdir, f"audio_{slideno+1:02}.mp3")

convert_text_audio(text, filename)

filenames.append(filename)

The result is a bunch of audio files. You can concatenate them if you wish using pydub:

combined = pydub.AudioSegment.empty()

for audio_file in audio_files:

audio = pydub.AudioSegment.from_file(audio_file)

combined += audio

# pause for 4 seconds

silence = pydub.AudioSegment.silent(duration=4000)

combined += silence

combined.export("lecture.wav", format="wav")

But it turned out that I didn’t need to. The individual audio files, one for each slide, were what I needed to create a video. For a podcast, of course, you’d want a single mp3 or wav file.

6. Create images of the slides

Rather annoyingly, there’s no easy way to render PowerPoint slides as images using Python. You need a machine with Office software installed to do that — not the kind of thing that’s easily automatable. Maybe I should have used Google Slides … Anyway, a simple way to render images is to use the Python Image Library (PIL):

def text_to_image(output_path, title, keypoints):

image = Image.new("RGB", (1000, 750), "black")

draw = ImageDraw.Draw(image)

title_font = ImageFont.truetype("Coval-Black.ttf", size=42)

draw.multiline_text((10, 25), wrap(title, 50), font=title_font)

text_font = ImageFont.truetype("Coval-Light.ttf", size=36)

for ptno, keypoint in enumerate(keypoints):

draw.multiline_text((10, (ptno+2)*100), wrap(keypoint, 60), font=text_font)

image.save(output_path)

The resulting image is not great, but it is serviceable (you can tell no one pays me to write production code anymore):

7. Create a Video

Now that we have a set of audio files and a set of image files, we can use a Python package moviepy to create a video clip:

clips = []

for slide, audio in zip(slide_files, audio_files):

audio_clip = AudioFileClip(f"article_audio/{audio}")

slide_clip = ImageClip(f"article_slides/{slide}").set_duration(audio_clip.duration)

slide_clip = slide_clip.set_audio(audio_clip)

clips.append(slide_clip)

full_video = concatenate_videoclips(clips)

And we can now write it out:

full_video.write_videofile("lecture.mp4", fps=24, codec="mpeg4",

temp_audiofile='temp-audio.mp4', remove_temp=True)

End result? We have four artifacts, all created automatically from the article.pdf:

lecture.json lecture.mp4 lecture.pptx lecture.wav

There’s:

- a JSON file with keypoints, lecture notes, etc.

- A PowerPoint file that you can modify. The slides have the key points, and the notes section of the slides has the “lecture notes”

- An audio file consisting of an AI voice reading out the lecture notes

- A mp4 movie (that I uploaded to YouTube) of the audio + images. This is the video talk that I set out to create.

Pretty cool, eh?

8. What this says about the future of software

We are all, as a community, probing around to find what this really cool technology (generative AI) can be used for. Obviously, you can use it to create content, but the content that it creates is good for brainstorming, but not to use as-is. Three years of improvements in the tech have not solved the problem that GenAI generates blah content, and not-ready-to-use code.

That brings us to some of the ancillary capabilities that GenAI has opened up. And these turn out to be extremely useful. There are four capabilities of GenAI that this post illustrates.

(1) Translating unstructured data to structured data

The Attention paper was written to solve the translation problem, and it turns out transformer-based models are really good at translation. We keep discovering use cases of this. But not just Japanese to English, but also Java 11 to Java 17, of text to SQL, of text to speech, between database dialects, …, and now of articles to audio-scripts. This, it turns out is the stepping point of using GenAI to create podcasts, lectures, videos, etc.

All I had to do was to prompt the LLM to construct a series of slide contents (keypoints, title, etc.) from the article, and it did. It even returned the data to me in structured format, conducive to using it from a computer program. Specifically, GenAI is really good at translating unstructured data to structured data.

(2) Code search and coding assistance are now dramatically better

The other thing that GenAI turns out to be really good at is at adapting code samples dynamically. I don’t write code to create presentations or text-to-speech or moviepy everyday. Two years ago, I’d have been using Google search and getting Stack Overflow pages and adapting the code by hand. Now, Google search is giving me ready-to-incorporate code:

Of course, had I been using a Python IDE (rather than a Jupyter notebook), I could have avoided the search step completely — I could have written a comment and gotten the code generated for me. This is hugely helpful, and speeds up development using general purpose APIs.

(3) GenAI web services are robust and easy-to-consume

Let’s not lose track of the fact that I used the Google Cloud Text-to-Speech service to turn my audio script into actual audio files. Text-to-speech is itself a generative AI model (and another example of the translation superpower). The Google TTS service which was introduced in 2018 (and presumably improved since then) was one of the first generative AI services in production and made available through an API.

In this article, I used two generative AI models — TTS and Gemini — that are made available as web services. All I had to do was to call their APIs.

(4) It’s easier than ever to provide end-user customizability

I didn’t do this, but you can squint a little and see where things are headed. If I’d wrapped up the presentation creation, audio creation, and movie creation code in services, I could have had a prompt create the function call to invoke these services as well. And put a request-handling agent that would allow you to use text to change the look-and-feel of the slides or the voice of the person reading the video.

It becomes extremely easy to add open-ended customizability to the software you build.

Summary

Inspired by the NotebookLM podcast feature, I set out to build an application that would convert my articles to video talks. The key step is to prompt an LLM to produce slide contents from the article, another GenAI model to convert the audio script into audio files, and use existing Python APIs to put them together into a video.

This article illustrates four capabilities that GenAI is unlocking: translation of all kinds, coding assistance, robust web services, and end-user customizability.

I loved being able to easily and quickly create video lectures from my articles. But I’m even more excited about the potential that we keep discovering in this new tool we have in our hands.

Further Reading

- Full code for this article: https://github.com/lakshmanok/lakblogs/blob/main/genai_seminar/create_lecture.ipynb

- The source article that I converted to a video: https://lakshmanok.medium.com/what-goes-into-bronze-silver-and-gold-layers-of-a-medallion-data-architecture-4b6fdfb405fc

- The resulting video: https://youtu.be/jKzmj8-1Y9Q

- Turns out Sascha Heyer wrote up how to use GenAI to generate a podcast, which is the exact Notebook LM usecase. His approach is somewhat similar to mine, except that there is no video, just audio. In a cool twist, he uses his own voice as one of the podcast speakers!

- Of course, here’s the video talk of this article created using the technique shown in this video. Ideally, we are pulling out code snippets and images from the article, but this is a start …

Using Generative AI to Automatically Create a Video Talk from an Article was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Using Generative AI to Automatically Create a Video Talk from an Article

Go Here to Read this Fast! Using Generative AI to Automatically Create a Video Talk from an Article