Yet another LangGraph tutorial

A Task-Oriented Dialogue system (ToD) is a system that assists users in achieving a particular task, such as booking a restaurant, planning a travel itinerary or ordering delivery food.

We know that we instruct LLMs using prompts, but how can we implement these ToD systems so that the conversation always revolves around the task we want the users to achieve? One way of doing that is by using prompts, memory and tool calling. Fortunately, LangChain and LangGraph can help us tie all these things together.

In this article, you’ll learn how to build a Task-Oriented Dialogue system that helps users create high-quality User Stories. The system is based on LangGraph’s Prompt Generation from User Requirements tutorial [2].

Why do we need to use LangGraph?

In this tutorial we assume you already know how to use LangChain. A User Story has some components like objective, success criteria, plan of execution and deliverables. The user should provide each of them, and we need to “hold their hand” into providing them one by one. Doing that using only LangChain would require a lot of ifs and elses.

With LangGraph we can use a graph abstraction to create cycles to control the dialogue. It also has built-in persistence, so we don’t need to worry about actively tracking the interactions that happen within the graph.

The main LangGraph abstraction is the StateGraph, which is used to create graph workflows. Each graph needs to be initialized with a state_schema: a schema class that each node of the graph uses to read and write information.

The flow of our system will consist of rounds of LLM and user messages. The main loop will contain these steps:

- User says something

- LLM reads the messages of the state and decides if it’s ready to create the User Story or if the user should respond again

Our system is simple so the schema consists only of the messages that were exchanged in the dialogue.

from typing import Annotated, TypedDict

from langgraph.graph.message import add_messages

class StateSchema(TypedDict):
    messages: Annotated[list, add_messages]

The add_messages function is a reducer: it merges the output messages from each node into the existing list of messages in the graph’s state.
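To make the idea of merging node output into the state concrete, here is a minimal sketch in plain Python. It is not the library's implementation: merge_messages and the dict-based messages are hypothetical stand-ins, and the sketch only assumes that new messages are appended while a message with an already-known id replaces the old one.

```python
from typing import Any


def merge_messages(
    existing: list[dict[str, Any]], new: list[dict[str, Any]]
) -> list[dict[str, Any]]:
    """Simplified stand-in for a message reducer: append new messages,
    but overwrite an existing message when the ids match."""
    merged = list(existing)  # copy so the previous state is not mutated
    position_by_id = {m["id"]: i for i, m in enumerate(merged)}
    for message in new:
        if message["id"] in position_by_id:
            merged[position_by_id[message["id"]]] = message
        else:
            position_by_id[message["id"]] = len(merged)
            merged.append(message)
    return merged


state = [{"id": "1", "role": "human", "content": "I need a user story"}]
update = [{"id": "2", "role": "ai", "content": "Sure! What is the objective?"}]
state = merge_messages(state, update)
print([m["content"] for m in state])
```

Because the reducer does the merging, a node only has to return its new messages and never the full history.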

Speaking of nodes, the other two main LangGraph concepts are Nodes and Edges. Each node of the graph runs a function and each edge controls the flow from one node to another. We also have the START and END virtual nodes to tell the graph where to start the execution and where the execution should end.
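If the node and edge vocabulary feels abstract, the toy runner below may help. It is a sketch in plain Python, not LangGraph code: the START and END sentinels, the nodes and edges dictionaries and the run function are all hypothetical names made up for this illustration.

```python
START, END = "__start__", "__end__"  # hypothetical sentinels for this sketch


def greet(state: dict) -> dict:
    # a node is just a function that reads the state and returns an update
    return {"log": state["log"] + ["greet"]}


def answer(state: dict) -> dict:
    return {"log": state["log"] + ["answer"]}


nodes = {"greet": greet, "answer": answer}
edges = {START: "greet", "greet": "answer", "answer": END}


def run(state: dict) -> dict:
    """Follow the edges from START to END, merging each node's update."""
    current = edges[START]
    while current != END:
        state = {**state, **nodes[current](state)}
        current = edges[current]
    return state


final_state = run({"log": []})
print(final_state["log"])
```

START and END never run any function; they only mark where the traversal begins and where it stops.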

To run the system we’ll use the .stream() method. After we build the graph and compile it, each round of interaction goes from the START to the END of the graph, and the path it takes (which nodes run and which don’t) is controlled by our workflow combined with the state of the graph. The following code has the main flow of our system:

config = {"configurable": {"thread_id": str(uuid.uuid4())}}

while True:
    user = input("User (q/Q to quit): ")
    if user in {"q", "Q"}:
        print("AI: Byebye")
        break
    output = None
    for output in graph.stream(
        {"messages": [HumanMessage(content=user)]}, config=config, stream_mode="updates"
    ):
        last_message = next(iter(output.values()))["messages"][-1]
        last_message.pretty_print()

    # "create_user_story" is the name of the last node of the graph we build later
    if output and "create_user_story" in output:
        print("User story created!")

At each interaction (if the user didn’t type “q” or “Q” to quit) we run graph.stream(), passing the user’s message and using the “updates” stream_mode, which streams the updates of the state after each step of the graph (https://langchain-ai.github.io/langgraph/concepts/low_level/#stream-and-astream). We then take the last message of each update and print it.
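The expression next(iter(output.values()))["messages"][-1] is easier to read once you see the shape of a streamed update. The sketch below uses hand-written chunks instead of a real graph: the strings are placeholders for message objects, and the node names are the ones used for the graph built later in this article.

```python
# Hypothetical chunks, shaped like what stream_mode="updates" yields:
# one dict per step, keyed by the name of the node that just ran.
chunks = [
    {"talk_to_user": {"messages": ["(AI message with a tool call)"]}},
    {"finalize_dialogue": {"messages": ["(tool message)"]}},
    {"create_user_story": {"messages": ["(AI message with the user story)"]}},
]

output = None
printed = []
for output in chunks:
    node_update = next(iter(output.values()))  # the single node's update
    last_message = node_update["messages"][-1]
    printed.append(last_message)
    print(last_message)

# After the loop, `output` still holds the last chunk, so we can check
# which node ran last.
story_created = bool(output) and "create_user_story" in output
print(story_created)
```

Each chunk has a single key here, which is why taking the first value is enough to reach the node's messages.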

In this tutorial we’ll still learn how to create the nodes and edges of the graph, but first let’s talk more about the architecture of ToD systems in general and learn how to implement one with LLMs, prompts and tool calling.

The Architecture of ToD systems

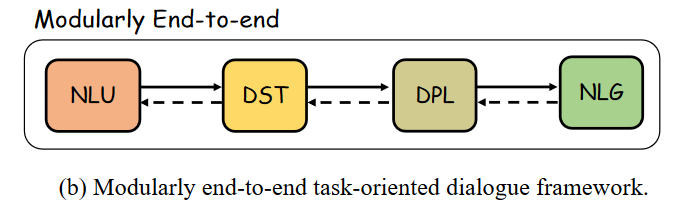

The main components of a framework to build End-to-End Task-Oriented Dialogue systems are [1]:

- Natural Language Understanding (NLU) for extracting the intent and key slots of users

- Dialogue State Tracking (DST) for tracing users’ belief state given dialogue

- Dialogue Policy Learning (DPL) to determine the next step to take

- Natural Language Generation (NLG) for generating dialogue system response

By using LLMs, we can combine some of these components into one. The NLU and the NLG components are easy peasy to implement using LLMs, since understanding and generating dialogue responses are their specialty.

We can implement the Dialogue State Tracking (DST) and the Dialogue Policy Learning (DPL) by using LangChain’s SystemMessage to prime the AI behavior, passing this message every time we interact with the LLM. The state of the dialogue (the message history) should also be passed to the LLM at every interaction. This way we make sure the dialogue stays centered on the task we want the user to complete, because we always tell the LLM what the goal of the dialogue is and how it should behave. We’ll do that first by using a prompt:

prompt_system_task = """Your job is to gather information from the user about the User Story they need to create.

You should obtain the following information from them:

- Objective: the goal of the user story. should be concrete enough to be developed in 2 weeks.

- Success criteria: the success criteria of the user story

- Plan_of_execution: the plan of execution of the initiative

- Deliverables: the deliverables of the initiative

If you are not able to discern this info, ask them to clarify! Do not attempt to wildly guess.

Whenever the user responds to one of the criteria, evaluate if it is detailed enough to be a criterion of a User Story. If not, ask questions to help the user better detail the criterion.

Do not overwhelm the user with too many questions at once; ask for the information you need in a way that they do not have to write much in each response.

Always remind them that if they do not know how to answer something, you can help them.

After you are able to discern all the information, call the relevant tool."""

We then prepend this prompt every time we send a message to the LLM:

def domain_state_tracker(messages):
    return [SystemMessage(content=prompt_system_task)] + messages
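A detail worth noticing is that the system prompt is added at call time and never stored in the dialogue history. The sketch below illustrates this with plain tuples instead of LangChain message objects; SYSTEM_PROMPT and with_task_prompt are hypothetical names, and the prompt text is shortened.

```python
SYSTEM_PROMPT = "Your job is to gather information about the User Story."  # shortened stand-in


def with_task_prompt(history: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Build the input for one LLM call: task prompt first, then the dialogue."""
    return [("system", SYSTEM_PROMPT)] + history


history = [("human", "I want to build a login page")]
first_call = with_task_prompt(history)

# The stored history grows with the dialogue, but never contains the system prompt.
history = history + [
    ("ai", "Great! What is the objective?"),
    ("human", "Let users sign in with email"),
]
second_call = with_task_prompt(history)

print(len(first_call), len(second_call))
```

Since the prompt is rebuilt on every call, the LLM sees exactly one copy of the task instructions no matter how long the conversation gets.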

Another important concept of our ToD system LLM implementation is tool calling. If you read the last sentence of the prompt_system_task again it says “After you are able to discern all the information, call the relevant tool”. This way, we are telling the LLM that when it decides that the user provided all the User Story parameters, it should call the tool to create the User Story. Our tool for that will be created using a Pydantic model with the User Story parameters.
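When the model decides to call the tool, it does not run anything itself: it returns a structured request with the tool name, the arguments and an id. The sketch below shows such a request as a plain dict. The values and the id are made up, and missing_fields is a hypothetical helper (not part of LangChain) that only illustrates why structured arguments are convenient to check.

```python
# Hypothetical example of the structured request a chat model emits when it
# decides to call the tool; the field values and the id are made up.
tool_call = {
    "name": "UserStoryCriteria",
    "args": {
        "objective": "Let users reset their password by email",
        "success_criteria": "A reset link arrives within one minute",
        "plan_of_execution": "Add endpoint, send email, build reset form",
    },
    "id": "call_123",
}

REQUIRED_FIELDS = ("objective", "success_criteria", "plan_of_execution")


def missing_fields(args: dict) -> list[str]:
    """Return the required fields that are absent or empty in the arguments."""
    return [name for name in REQUIRED_FIELDS if not args.get(name)]


print(missing_fields(tool_call["args"]))
print(missing_fields({"objective": "Only the goal so far"}))
```

In the real system this check is not needed, because the Pydantic model validates the arguments; the point is only that the conversation ends with structured data instead of free text.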

By using only the prompt and tool calling, we can control our ToD system. Beautiful, right? Actually, we also need to use the state of the graph to make all this work. Let’s do it in the next section, where we’ll finally build the ToD system.

Creating the dialogue system to build User Stories

Alright, time to do some coding. First we’ll specify which LLM we’ll use, then set the prompt and bind the tool to generate the User Story:

import os
from typing import List, Literal, Annotated

from dotenv import load_dotenv, find_dotenv
from langchain_openai import AzureChatOpenAI
# on recent langchain-core releases, import BaseModel from pydantic directly instead
from langchain_core.pydantic_v1 import BaseModel

_ = load_dotenv(find_dotenv())  # read local .env file

llm = AzureChatOpenAI(
    azure_deployment=os.environ.get("AZURE_OPENAI_CHAT_DEPLOYMENT_NAME"),
    openai_api_version="2023-09-01-preview",
    openai_api_type="azure",
    openai_api_key=os.environ.get("AZURE_OPENAI_API_KEY"),
    azure_endpoint=os.environ.get("AZURE_OPENAI_ENDPOINT"),
    temperature=0,
)

prompt_system_task = """Your job is to gather information from the user about the User Story they need to create.

You should obtain the following information from them:

- Objective: the goal of the user story. should be concrete enough to be developed in 2 weeks.

- Success criteria: the success criteria of the user story

- Plan_of_execution: the plan of execution of the initiative

If you are not able to discern this info, ask them to clarify! Do not attempt to wildly guess.

Whenever the user responds to one of the criteria, evaluate if it is detailed enough to be a criterion of a User Story. If not, ask questions to help the user better detail the criterion.

Do not overwhelm the user with too many questions at once; ask for the information you need in a way that they do not have to write much in each response.

Always remind them that if they do not know how to answer something, you can help them.

After you are able to discern all the information, call the relevant tool."""

class UserStoryCriteria(BaseModel):
    """Criteria needed to write the User Story."""

    objective: str
    success_criteria: str
    plan_of_execution: str

# the model can now "call" UserStoryCriteria once it has gathered all the fields
llm_with_tool = llm.bind_tools([UserStoryCriteria])

As discussed earlier, the state of our graph holds the messages exchanged. Here we also add a flag to know whether the User Story was created or not. Let’s create the graph using StateGraph and this schema:

from typing import TypedDict

from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages

class StateSchema(TypedDict):
    messages: Annotated[list, add_messages]
    created_user_story: bool

workflow = StateGraph(StateSchema)

The next image shows the structure of the final graph:

At the top we have a talk_to_user node. This node can either:

- Finalize the dialogue (go to the finalize_dialogue node)

- Decide that it’s time to wait for the user input (go to the END node)

Since the main loop runs forever (while True), every time the graph reaches the END node, it waits for the user input again. This will become more clear when we create the loop.

Let’s create the nodes of the graph, starting with the talk_to_user node. This node needs to keep track of the task (maintaining the main prompt throughout the conversation) and also keep the messages exchanged, because that’s where the state of the dialogue is stored. The messages also tell us which parameters of the User Story have already been filled. So this node should add the SystemMessage every time and append the new message from the LLM:

from langchain_core.messages import AIMessage, HumanMessage, SystemMessage, ToolMessage

def domain_state_tracker(messages):
    # prepend the task prompt so the LLM always knows the goal of the dialogue
    return [SystemMessage(content=prompt_system_task)] + messages

def call_llm(state: StateSchema):
    """
    talk_to_user node function, adds the prompt_system_task to the messages,
    calls the LLM and returns the response
    """
    messages = domain_state_tracker(state["messages"])
    response = llm_with_tool.invoke(messages)
    return {"messages": [response]}

Now we can add the talk_to_user node to this graph. We’ll do that by giving it a name and then passing the function we’ve created:

workflow.add_node("talk_to_user", call_llm)

This node should be the first node to run in the graph, so let’s specify that with an edge:

workflow.add_edge(START, "talk_to_user")

So far the graph looks like this:

To control the flow of the graph, we’ll also use the message classes from LangChain. We have four types of messages:

- SystemMessage: message for priming AI behavior

- HumanMessage: message from a human

- AIMessage: the message returned from a chat model as a response to a prompt

- ToolMessage: message containing the result of a tool invocation, used for passing the result of executing a tool back to a model

We’ll use the type of the last message of the graph state to control the flow on the talk_to_user node. If the last message is an AIMessage and its tool_calls attribute is not empty, then we’ll go to the finalize_dialogue node because it’s time to create the User Story. Otherwise, we go to the END node: the graph run finishes and the main loop waits for the user to answer.

The finalize_dialogue node should build the ToolMessage to pass the result to the model. The tool_call_id field is used to associate the tool call request with the tool call response. Let’s create this node and add it to the graph:

def finalize_dialogue(state: StateSchema):
    """
    Add a tool message to the history so the graph can see that it's time to create the user story
    """
    return {
        "messages": [
            ToolMessage(
                content="Prompt generated!",
                tool_call_id=state["messages"][-1].tool_calls[0]["id"],
            )
        ]
    }

workflow.add_node("finalize_dialogue", finalize_dialogue)

Now let’s create the last node, the create_user_story one. This node will call the LLM using the prompt to create the User Story and the information that was gathered during the conversation. If the model decided that it was time to call the tool then the values of the key tool_calls should have all the info to create the User Story.

prompt_generate_user_story = """Based on the following requirements, write a good user story:

{reqs}"""

def build_prompt_to_generate_user_story(messages: list):
    tool_call = None
    other_msgs = []
    for m in messages:
        if isinstance(m, AIMessage) and m.tool_calls:  # tool_calls is from the OpenAI API
            tool_call = m.tool_calls[0]["args"]
        elif isinstance(m, ToolMessage):
            continue
        elif tool_call is not None:
            # keep only the messages that came after the tool call
            other_msgs.append(m)
    return [SystemMessage(content=prompt_generate_user_story.format(reqs=tool_call))] + other_msgs

def call_model_to_generate_user_story(state):
    messages = build_prompt_to_generate_user_story(state["messages"])
    response = llm.invoke(messages)
    return {"messages": [response]}

workflow.add_node("create_user_story", call_model_to_generate_user_story)
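It can help to see what the final system prompt looks like once the tool arguments are formatted into it. In the sketch below the arguments are made up, and the second, bullet-style rendering is only an optional variation for readability, not something the code in this article does.

```python
prompt_template = """Based on the following requirements, write a good user story:

{reqs}"""

# Hypothetical arguments, as they could arrive in tool_calls[0]["args"]
args = {
    "objective": "Let users reset their password by email",
    "success_criteria": "A reset link arrives within one minute",
    "plan_of_execution": "Add endpoint, send email, build reset form",
}

# Formatting the dict directly embeds its Python representation in the prompt
raw_prompt = prompt_template.format(reqs=args)

# Optional variation: render one requirement per line
readable_reqs = "\n".join(f"- {name}: {value}" for name, value in args.items())
readable_prompt = prompt_template.format(reqs=readable_reqs)

print(raw_prompt)
print(readable_prompt)
```

Both versions carry the same information; LLMs usually cope fine with the dict representation, so the variation is a matter of taste.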

With all the nodes created, it’s time to add the edges. We’ll add a conditional edge to the talk_to_user node. Remember that this node can either:

- Finalize the dialogue if it’s time to call the tool (go to the finalize_dialogue node)

- Decide that we need to gather user input (go to the END node)

This means that we only need to check whether the last message is an AIMessage with a non-empty tool_calls attribute; if it isn’t, we go to the END node. Let’s create a function to check this and add it as an edge:

def define_next_action(state) -> Literal["finalize_dialogue", END]:
    messages = state["messages"]
    if isinstance(messages[-1], AIMessage) and messages[-1].tool_calls:
        return "finalize_dialogue"
    else:
        return END

workflow.add_conditional_edges("talk_to_user", define_next_action)
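To check the routing rule without calling an LLM, the same logic can be exercised with stand-in message classes. Everything in this sketch is hypothetical scaffolding: FakeAIMessage and FakeHumanMessage only mimic the attributes the rule looks at, and END is a local constant standing in for the one imported from langgraph.graph.

```python
from dataclasses import dataclass, field

END = "__end__"  # stand-in for langgraph.graph.END in this sketch


@dataclass
class FakeAIMessage:
    content: str
    tool_calls: list = field(default_factory=list)


@dataclass
class FakeHumanMessage:
    content: str


def next_action(state: dict) -> str:
    """Same rule as define_next_action, applied to the stand-in classes."""
    last = state["messages"][-1]
    if isinstance(last, FakeAIMessage) and last.tool_calls:
        return "finalize_dialogue"
    return END


question = FakeAIMessage(content="What is the objective?")
ready = FakeAIMessage(content="", tool_calls=[{"name": "UserStoryCriteria", "args": {}, "id": "call_1"}])

print(next_action({"messages": [FakeHumanMessage("hi"), question]}))
print(next_action({"messages": [FakeHumanMessage("hi"), question, ready]}))
```

An AI message that only asks a question ends the run so the user can answer, while an AI message carrying a tool call moves the graph forward.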

Now let’s add the other edges:

workflow.add_edge("finalize_dialogue", "create_user_story")

workflow.add_edge("create_user_story", END)

With that the graph workflow is done. Time to compile the graph and create the loop to run it:

import uuid

from langgraph.checkpoint.memory import MemorySaver

memory = MemorySaver()
graph = workflow.compile(checkpointer=memory)

# the thread_id identifies this conversation in the checkpointer
config = {"configurable": {"thread_id": str(uuid.uuid4())}}

while True:
    user = input("User (q/Q to quit): ")
    if user in {"q", "Q"}:
        print("AI: Byebye")
        break
    output = None
    for output in graph.stream(
        {"messages": [HumanMessage(content=user)]}, config=config, stream_mode="updates"
    ):
        last_message = next(iter(output.values()))["messages"][-1]
        last_message.pretty_print()

    if output and "create_user_story" in output:
        print("User story created!")
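Notice that each pass through the loop sends only the newest user message, yet the LLM sees the whole conversation. That works because the checkpointer stores the state per thread_id between runs. The toy store below sketches the idea in plain Python; checkpoints and run_turn are hypothetical names, and the real checkpointer saves the full graph state rather than a list of strings.

```python
import uuid

checkpoints: dict[str, list[str]] = {}  # hypothetical in-memory store


def run_turn(thread_id: str, user_message: str) -> list[str]:
    """Load the saved history for the thread, add one exchange, save it back."""
    history = checkpoints.get(thread_id, [])
    history = history + [f"human: {user_message}", "ai: (reply)"]
    checkpoints[thread_id] = history
    return history


thread_a = str(uuid.uuid4())
thread_b = str(uuid.uuid4())

run_turn(thread_a, "I need a user story")
turn_two = run_turn(thread_a, "The objective is a login page")
other = run_turn(thread_b, "Hello")

print(len(turn_two), len(other))
```

Generating a new thread_id starts a fresh conversation, while reusing one continues where the dialogue left off.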

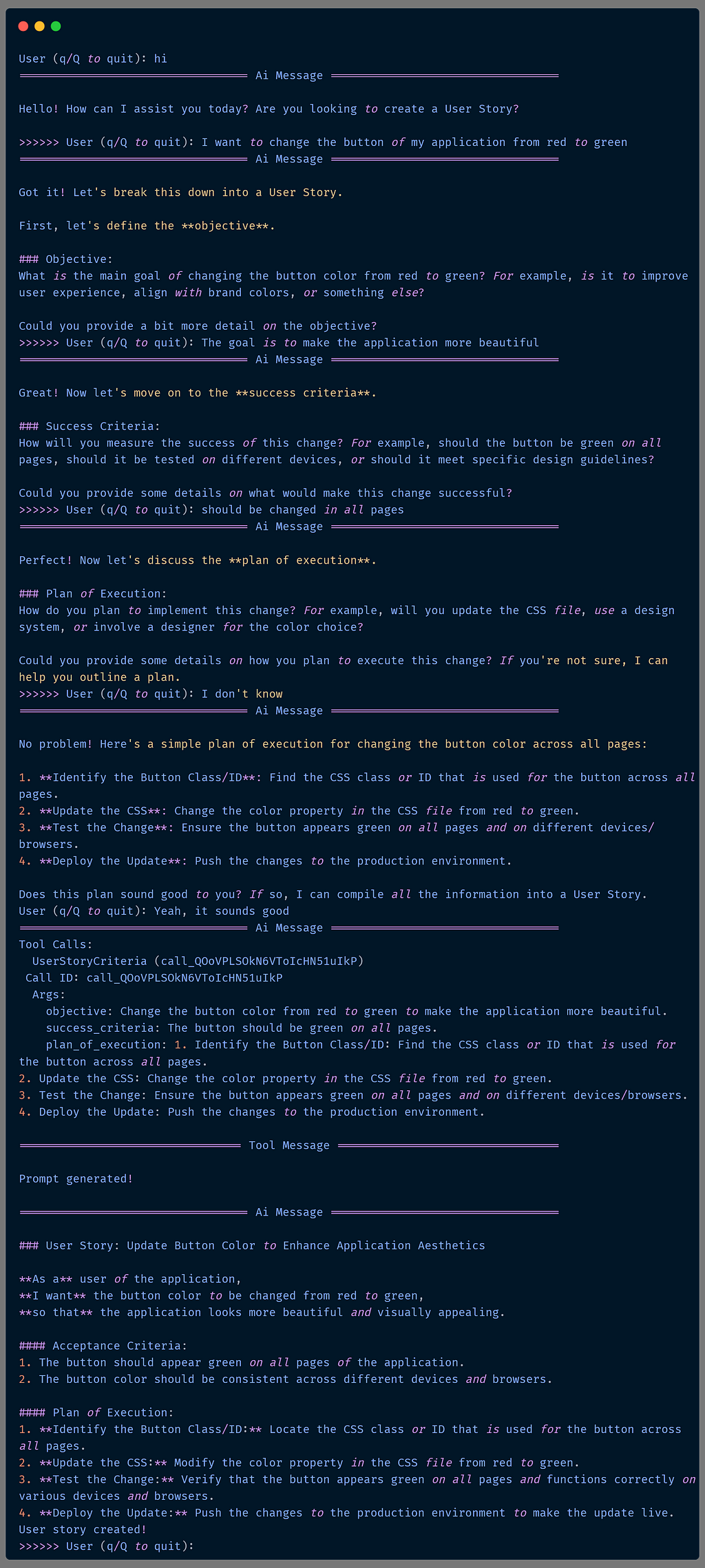

Let’s finally test the system:

Final Thoughts

With LangGraph and LangChain we can build systems that guide users through structured interactions, and we reduce the complexity of building them by letting the LLM help us control the conditional logic.

With the combination of prompts, memory management, and tool calling we can create intuitive and effective dialogue systems, opening new possibilities for user interaction and task automation.

I hope this tutorial helps you better understand how to use LangGraph (I spent a couple of days banging my head against the wall to understand how all the pieces of the library work together).

All the code of this tutorial can be found here: dmesquita/task_oriented_dialogue_system_langgraph (github.com)

Thanks for reading!

References

[1] Qin, Libo, et al. “End-to-end task-oriented dialogue: A survey of tasks, methods, and future directions.” arXiv preprint arXiv:2311.09008 (2023).

[2] Prompt generation from user requirements. Available at: https://langchain-ai.github.io/langgraph/tutorials/chatbots/information-gather-prompting

Creating Task-Oriented Dialog systems with LangGraph and LangChain was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
