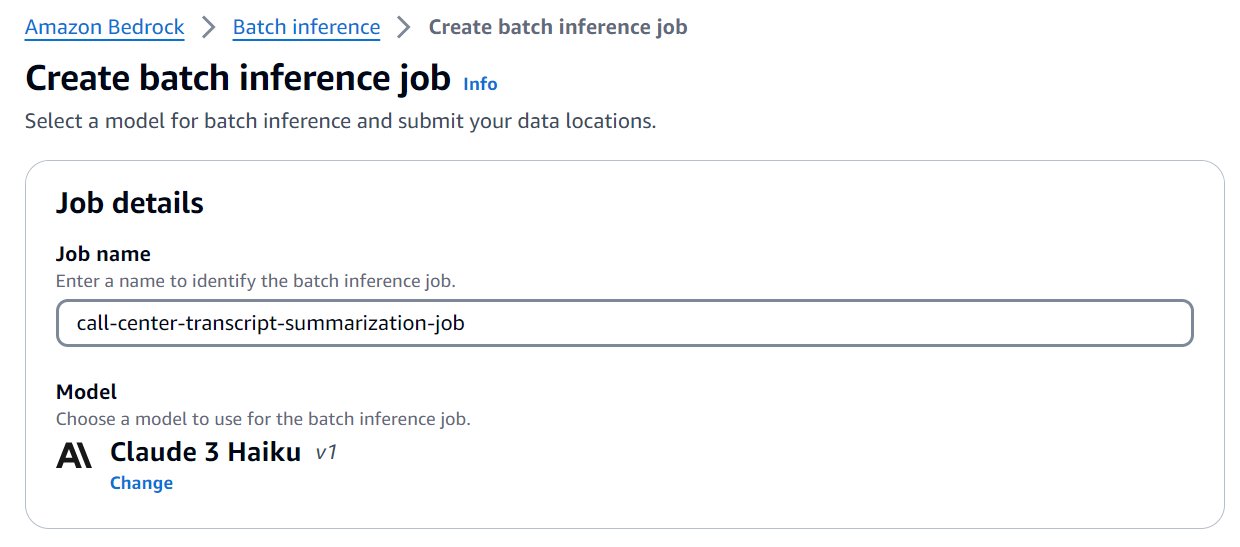

Today, we are excited to announce the general availability of batch inference for Amazon Bedrock. This feature lets organizations process large volumes of data with foundation models (FMs) as a single job, addressing a critical need in industries such as call center operations. In this post, we demonstrate the capabilities of batch inference using call center transcript summarization as an example.
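To make the workflow more concrete, here is a minimal sketch of preparing batch input in Python. Bedrock batch inference reads JSON Lines files in which each line pairs a `recordId` with a `modelInput` request body. The sketch assumes a Claude model on Amazon Bedrock, so each `modelInput` follows the Anthropic Messages request format. The function name `build_batch_records`, the prompt wording, and the sample call IDs are hypothetical.

```python
import json

# Assumption: a Claude model on Amazon Bedrock, so each modelInput uses the
# Anthropic Messages request body.
ANTHROPIC_VERSION = "bedrock-2023-05-31"


def build_batch_records(transcripts: dict[str, str], max_tokens: int = 512) -> str:
    """Turn {call_id: transcript_text} into JSON Lines for a batch job (hypothetical helper)."""
    lines = []
    for call_id, text in transcripts.items():
        # Hypothetical prompt; tune the wording for your own summaries.
        prompt = (
            "Summarize the following call center transcript, including the "
            "customer's issue and how it was resolved.\n\n" + text
        )
        record = {
            "recordId": call_id,  # lets you match each output back to its call
            "modelInput": {
                "anthropic_version": ANTHROPIC_VERSION,
                "max_tokens": max_tokens,
                "messages": [
                    {"role": "user", "content": [{"type": "text", "text": prompt}]}
                ],
            },
        }
        lines.append(json.dumps(record))
    # One JSON object per line, newline-terminated.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = {
        "CALL0001": "Agent: Thanks for calling. Customer: My bill is wrong.",
        "CALL0002": "Agent: Hello. Customer: I need to reset my password.",
    }
    print(build_batch_records(sample), end="")
```

The resulting text can be saved as a `.jsonl` file, uploaded to Amazon S3, and submitted as a batch job (for example, with `create_model_invocation_job` on the boto3 `bedrock` client). Keying each record by call ID makes it straightforward to join every generated summary back to its source transcript.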

Originally appeared here:

Enhance call center efficiency using batch inference for transcript summarization with Amazon Bedrock