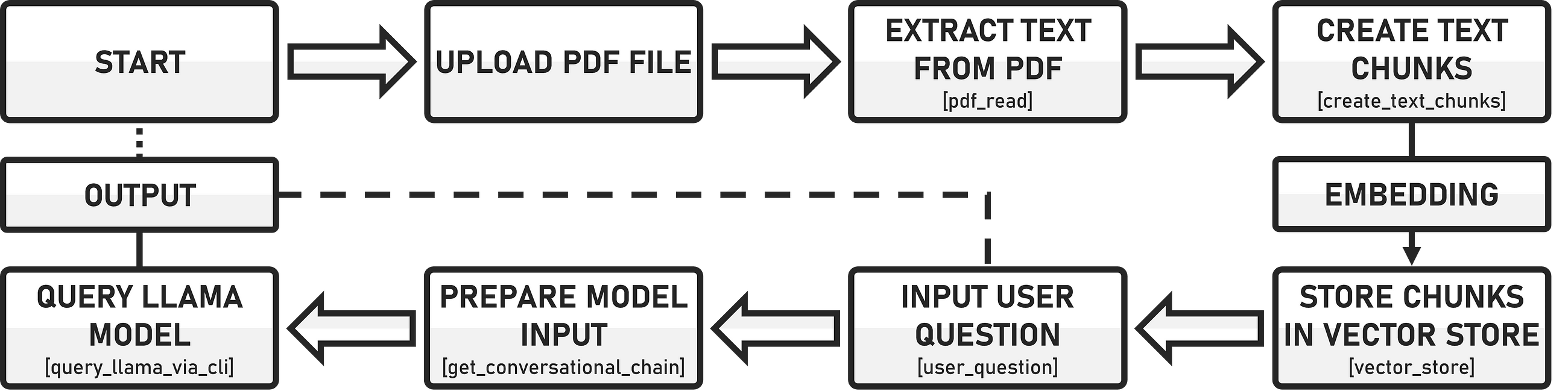

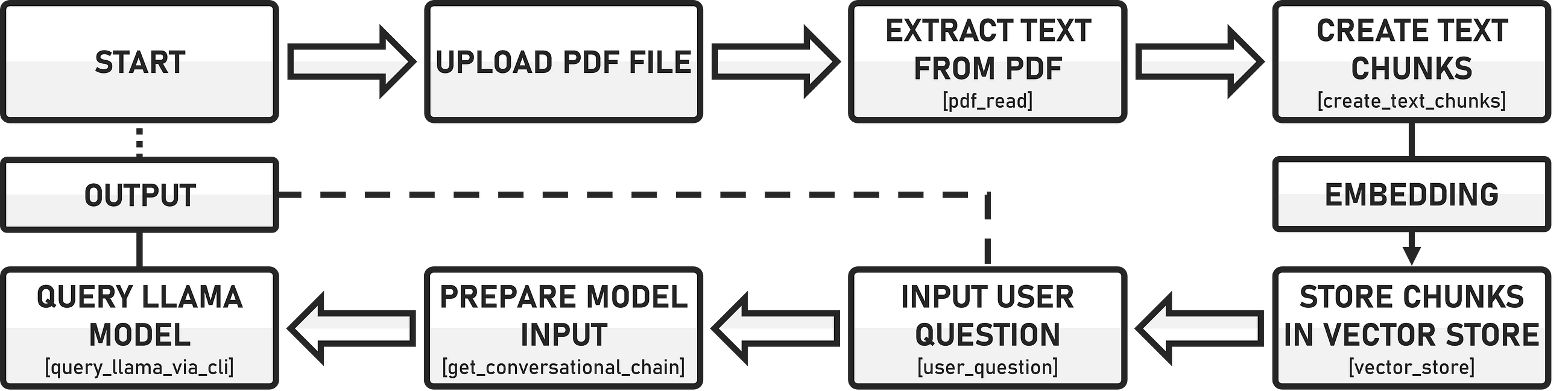

A contribution toward building a free PDF chat app that runs locally, using Streamlit and Meta AI's LLaMA model, without API limitations.

Originally appeared here:

How to Talk to a PDF File Without Using Proprietary Models: CLI + Streamlit + Ollama