Learn how to build a simple data model that validates your data through type hints

Many pandas tutorials online teach you the basics of manipulating and cleaning data, but rarely do they show how to validate whether data is correct. This is where data validation using pandera comes in.

Why Data Validation?

Like anyone, when I first look at data, I complete basic investigations such as looking at the data types, checking for null values, and visualising data distributions to figure out roughly how I should process data.

However, we need data validation to confirm our data follows business logic. For example, for data that contains product information, we need to validate that product price has no negative values, or when a user gives an email address, we need to assert the email address follows a recognised pattern.

Neglecting data validation will have downstream impacts on analytics and modelling as poor data quality can lead to increased bias, noise and inaccuracy.

A recent example of poor data validation is Zillow’s house pricing algorithm overvaluing 2/3 of the properties Zillow purchased, leading to a $500 million fall in Zillow property valuations in Q3 and Q4 of 2021 alone.

This shows that not only do you have to be conscious of whether your data meets validation criteria, but whether it reflects reality which in Zillow’s case it did not.

What is Pandera?

Pandera is a Python package that provides a well-documented and flexible API that integrates with both pandas and polars — the two main Python data libraries.

We can use pandera to validate dataframe data types and properties using business logic and domain expertise.

Outline

This article will cover:

- How to get started with pandera

- How to define a pandera data model

- How to validate data and handle errors

Setup

Install Dependencies

pip install pandas

pip install pandera

Data

The data used for this article is fake football market data generated using Claude.ai.

Define the Validation Model

The package allows you to define either a validation schema or a data validation model which closely resembles another great data validation package called Pydantic.

For this exercise, we will focus on the validation model as it allows for type hint integration with our Python code, and I find it is a bit more readable than the validation schema. However, if you want to leverage the validation schema, the model has a method to transform it into a schema.

You can find information about both validation methods here:

Load Data

data = {

'dob': pd.to_datetime(['1990-05-15', '1988-11-22', '1995-03-10', '1993-07-30', '1992-01-18', '1994-09-05', '1991-12-03', '1989-06-20', '1996-02-14', '1987-08-08']),

'age': [34, 35, 29, 31, 32, 30, 32, 35, 28, 37],

'country': ['England', 'Spain', 'Germany', 'France', 'Italy', 'Brazil', 'Argentina', 'Netherlands', 'Portugal', 'England'],

'current_club': ['Manchester United', 'Chelsea', 'Bayern Munich', 'Paris Saint-Germain', 'Juventus', 'Liverpool', 'Barcelona', 'Ajax', 'Benfica', 'Real Madrid'],

'height': pd.array([185, 178, None, 176, 188, 182, 170, None, 179, 300], dtype='Int16'),

'name': ['John Smith', 'Carlos Rodriguez', 'Hans Mueller', 'Pierre Dubois', 'Marco Rossi', 'Felipe Santos', 'Diego Fernandez', 'Jan de Jong', 'Rui Silva', 'Gavin Harris'],

'position': ['Forward', 'Midfielder', 'Defender', 'Goalkeeper', 'Defender', 'Forward', 'Midfielder', 'Defender', 'Forward', 'Midfielder'],

'value_euro_m': [75.5, 90.2, 55.8, 40.0, 62.3, 88.7, 70.1, 35.5, 45.9, 95.0],

'joined_date': pd.to_datetime(['2018-07-01', '2015-08-15', None, '2017-06-30', '2016-09-01', None, '2021-07-15', '2014-08-01', '2022-01-05', '2019-06-01']),

'number': [9, 10, 4, 1, 3, 11, 8, 5, 7, 17],

'signed_from': ['Everton', 'Atletico Madrid', 'Borussia Dortmund', None, 'AC Milan', 'Santos', None, 'PSV Eindhoven', 'Sporting CP', 'Newcastle United'],

'signing_fee_euro_m': [65.0, 80.5, 45.0, None, 55.0, 75.2, 60.8, None, 40.5, 85.0],

'foot': ['right', 'left', 'right', 'both', 'right', 'left', 'left', 'right', 'both', 'right'],

}

df = pd.DataFrame(data)

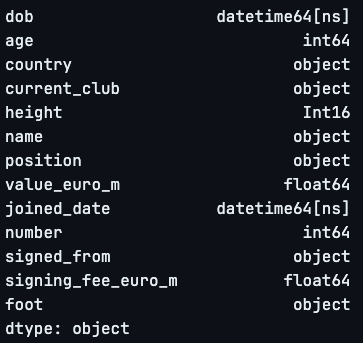

Check the Data Types

data.types

We can use the data types to help define our data model.

Create Data Model

class PlayerSchema(pa.DataFrameModel):

dob: Series[pd.Timestamp] = pa.Field(nullable=False, ge=pd.Timestamp('1975-01-01'))

age: Series[pa.Int64] = pa.Field(ge=0, le=50, nullable=False)

country: Series[pa.String] = pa.Field(nullable=False)

current_club: Series[pa.String] = pa.Field(nullable=False)

height: Series[pa.Int16] = pa.Field(ge=120, le=210, nullable=True)

name: Series[pa.String] = pa.Field(nullable=False)

position: Series[pa.String] = pa.Field(nullable=False)

value_euro_m: Series[pa.Float64] = pa.Field(ge=0, le=200)

joined_date: Series[pd.Timestamp] = pa.Field(nullable=True, ge=pd.Timestamp('2000-01-01'))

number: Series[pa.Int64] = pa.Field(ge=0, le=99)

signed_from: Series[pa.String] = pa.Field(nullable=True)

signing_fee_euro_m: Series[pa.Float64] = pa.Field(ge=0, le=300, nullable=True)

foot: Series[pa.String] = pa.Field(nullable=False, isin=['right', 'left', 'both', 'unknown'])

Above, we define a schema by sub-classing pa.DataFrameModel which is done in the same way you sub-class BaseModel in Pydantic. We then populated the schema with the corresponding columns in our dataset, providing the data type expected in each column, and defining boundaries using the pa.Field method.

Pandera has great integration with pandas meaning you can use pandas data types (e.g. pd.Timestamp) as well as pandera data types (e.g. pa.Int64) to define each column.

Reuse Fields

To avoid repeating fields, we can reuse fields by using partial from the built-in Python library functools.

from functools import partial

NullableField = partial(pa.Field, nullable=True)

NotNullableField = partial(pa.Field, nullable=False)

The partial class creates a new function that applies a specified subset of parameters from the original function.

Above, we created two reusable fields for model variables that either contain null values or do not contain null values.

Our updated data model looks like this

class PlayerSchema(pa.DataFrameModel):

dob: Series[pd.Timestamp] = pa.Field(nullable=False, ge=pd.Timestamp('1975-01-01'))

age: Series[pa.Int64] = pa.Field(ge=0, le=50, nullable=False)

country: Series[pa.String] = NotNullableField()

current_club: Series[pa.String] = NotNullableField()

height: Series[pa.Int16] = pa.Field(ge=120, le=250, nullable=True)

name: Series[pa.String] = NotNullableField()

position: Series[pa.String] = NotNullableField()

value_euro_m: Series[pa.Float64] = pa.Field(ge=0, le=200)

joined_date: Series[pd.Timestamp] = pa.Field(nullable=True, ge=pd.Timestamp('2000-01-01'))

number: Series[pa.Int64] = pa.Field(ge=0, le=99)

signed_from: Series[pa.String] = NullableField()

signing_fee_euro_m: Series[pa.Float64] = pa.Field(ge=0, le=300, nullable=True)

foot: Series[pa.String] = pa.Field(nullable=False, isin=['right', 'left', 'both', 'unknown'])

As partial creates a new function, we have to use brackets to call our new fields.

Validate Data

Now that the data model has been defined, we can use it to validate our data.

@pa.check_types

def load_data() -> DataFrame[PlayerSchema]:

return pd.read_parquet('../data/player_info_cleaned.parquet')

To validate the data, we use a combination of type hints and decorators. The @pa.check_types decorator denotes the data should be validated in line with the schema defined in the return type hint DataFrame[PlayerSchema].

@pa.check_types

def validate_data(df: DataFrame) -> DataFrame[PlayerSchema]:

try:

return df

except pa.errors.SchemaError as e:

print(e)

validate_data(df)

# error in check_types decorator of function 'load_data': Column 'height' failed element-wise validator number 1: less_than_or_equal_to(210) failure cases: 300

Using a try-except block, we can catch any errors thrown when the data is loaded and validated. The result shows that the ‘height’ column fails the less than or equal to test, with one height labelled as 300cm which isn’t correct.

Clean data

There are many strategies to clean data, some of which I have detailed in my previous article.

Master Pandas to Build Modular and Reusable Data Pipelines

For the sake of simplicity, I will impute any values above 210cm with the median height for the population.

def clean_height(df: DataFrame) -> DataFrame[PlayerSchema]:

data = df.copy()

data.loc[data[PlayerSchema.height] > 210, PlayerSchema.height] = round(data[PlayerSchema.height].median())

return data

df = clean_height(df)

Another useful property of pandera is the schema can be used to specify column names you would like to analyse in pandas. For example, instead of explicitly labelling ‘height’ we can use PlayerSchema.heightto improve readability and encourage consistency in our code.

Re-validation

As our data has already been loaded in, we can adapt the previous function to take in a dataframe as an input, run the dataframe through the try-except block and evaluate using the decorator and type hint.

validate_data_2(df)

# if there are no errors, the dataframe is the output.

This time there are no errors meaning the dataframe is returned as the function output.

Conclusion

This article has outlined why it is important to validate data to check it is consistent with business logic and reflects the real world, with the example of Zillow used to illustrate how poor data validation can have drastic consequences.

Pandera was used to show how data validation can be easily integrated with pandas to quickly validate a schema that closely resembles Pydantic using decorators and type hints. It was also shown how pa.Field can be used to set boundaries for your data and when used with partialreusable fields can be created to increase code readability.

I hope you found this article useful and thanks for reading. Reach out to me on LinkedIn if you have any questions!

How to Easily Validate Your Data with Pandera was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

How to Easily Validate Your Data with Pandera

Go Here to Read this Fast! How to Easily Validate Your Data with Pandera