with open source language models and LLMs

The splash that ChatGPT was making last year brought with it the realization — surprise for many — that a putative AI could sometimes offer very wrong answers with utter conviction. The term for this is usually “hallucination” and the main remedy that’s developed over the last 18 months is to bring facts into the matter, usually through retrieval augmented generation (RAG), also sometimes called relevant answer generation, which basically reorients the GPT (generative pretrained transformer language model) to draw from contexts where known-to-be-relevant facts are found.

Yet hallucinations are not the only way a GPT can misstep. In some respects, other flavors of misstep are deeper and more interesting to consider— especially when prospects of Artificial General Intelligence (AGI) are now often discussed. Specifically I’m thinking of what are known as counterfactuals (counterfactual reasoning) and the crucial role counterfactuality can play in decision making, particularly in regard to causal inference. Factuality therefore isn’t the only touchstone for effective LLM operation.

In this article I’ll reflect on how counterfactuals might help us think differently about the pitfalls and potentials of Generative AI. And I’ll demonstrate with some concrete examples using open source LMs (specifically Microsoft’s Phi). I’ll show how to set up Ollama locally (it can also be done in Databricks), without too much fuss (both with and without a Docker container), so you can try it out for yourself. I’ll also compare OpenAI’s LLM response to the same prompts.

I suggest that if we want to even begin to think about the prospect of “intelligence” within, or exuded by, an artificial technology, we might need to think beyond the established ML paradigm, which assumes some pre-existing factual correctness to measure against. An intelligent behavior might instead be speculative, as yet lacking sufficient past evidence to obviously prove its value. Isaac Newton, Charles Darwin, or your pet cat, could reason practically about the physical world they themselves inhabit, which is something that LLMs — because they are disembodied — don’t do. In a world where machines can write fluently, talk is cheaper than speculative practical reasoning.

Counterfactuals

What is a counterfactual and why should we care? There’s certainly idle speculation, sometimes with a rhetorical twist: At an annual meeting, a shareholder asked what the

“…returns since 1888 would have been without the ‘arrogance and greed’ of directors and their bonuses.” ¹

…to which retired banker Charles Munn² replied:

“That’s one question I won’t be answering. I’m a historian, I don’t deal in counterfactuals.” ¹

It’s one way to evade a question. Politicians have used it, no doubt. Despite emphasis on precedent, in legal matters, counterfactuality can be a legitimate consideration. As Robert N. Strassfeld puts it:

“As in the rest of life, we indulge, indeed, require, many speculations on what might have been. Although such counterfactual thinking often remains disguised or implicit, we encounter it whenever we identify a cause, and quite often when we attempt to fashion a remedy… Yet, … troublesome might-have-beens abound in legal disputes. We find ourselves stumbling over them in a variety of situations and responding to them in inconsistent ways ranging from brazen self-confidence to paralysis in the face of the task. When we recognize the exercise for what it is, however, our self confidence tends to erode, and we become discomforted, perplexed, and skeptical about the whole endeavor.”³

He goes further in posing that

“…legal decision makers cannot avoid counterfactual questions. Because such questions are necessary, we should think carefully about when and how to pose them, and how to distinguish good answers from poor ones.”

Counterfactuals are not an “anything goes” affair — far from it.

The question pervades the discourse of responsible AI and explainable AI (which often become entwined). Consider the “right to explanation” in the EU General Data Protection Regulation (“GDPR”).⁴ Thanks in part to Julia Stoyanovich’s efforts, NY passed a law in 2021 requiring that job seekers rejected by an AI-infused hiring process have the right to learn the specific explanation for their rejection.⁵ ⁶ ⁷

If you’re a Data Scientist, the prospect of “explanations” (with respect to a model) probably brings to mind SHAP (or LIME). Basically, a SHapley Additive exPlanation (SHAP) is derived by taking each predictive feature (each column of data) one at a time, and scrambling the observations (rows) of that feature (column) to assess which features (columns) the scrambling of which changes the prediction the most. For the rejected job candidate it might say, for instance: The primary reason the algorithm rejected you is “years of experience” because when we randomly substitute (permute) other candidates’ “years of experience” it affects the algorithm’s rating of you more than when we do that substitution (permutation) with your other features (like gender, education, etc). It’s making a quantitative comparison to a “what if.” So what is a SHAPley other than a counterfactual? Counterfactuality is at the heart of the explanation, because it gives a glimpse into causality; and explanation is relied on for making AI responsible.

Leaving responsibility and ethics to the side for the moment, causal explanation still has other uses in business. At least in some companies, Data Science and AI are expected to guide decision making, which in causal inference terms means making an intervention: adjusting price or targeting this verses that customer segment, and so forth. An intervention is an alteration to the status quo. The fundamental problem of causal inference is that we aren’t able to observe what has never happened. So we can’t observe the result of an intervention until we make that intervention. Where there’s risk involved, we don’t want to intervene without sufficiently anticipating the result. Thus we want to infer in advance that the result we desire can be caused by our intervention. That entails making inferences about the causal effects of events that aren’t yet fact. Instead, such events are counterfactual, that is, contrary to fact. This is why counterfactuals have been couched, by Judea Pearl and others, as the

fundamental problem of causal inference ⁸

So the idea of a “thought experiment”, which has been important especially in philosophy — and ever more so since Ludwig Wittgenstein popularized it —as a way to probe how we use language to construct our understanding of the world — isn’t just a sentimental wish-upon-a-star.⁹ Quite to the contrary: counterfactuals are the crux of hard-headed decision making.

In this respect, what Eric Siegel suggests in his recent AI Playbook follows as corollary: Siegel suggests that change management be repositioned from afterthought to prerequisite of any Machine Learning project.¹⁰ If the conception of making a business change isn’t built into the ML project from the get go, its deployment is likely doomed to remain fiction forever (eternally counterfactual). The antidote is to imagine the intervention in advance, and systematically work out its causal effects, so that you can almost taste them. If its potential benefits are anticipated — and maybe even consistently visualized — by all parties who stand to benefit, then the ML project stands a better chance of transitioning from fiction to fact.

As Aleksander Molak explains it in his recent Causal Inference and Discovery in Python (2023)

“Counterfactuals can be thought of as hypothetical or simulated interventions that assume a particular state of the world.” ¹¹

The capacity for rational imagination is implicated in many philosophical definitions of rational agency.¹² ¹³

“[P]sychological research shows that rational human agents do learn from the past and plan for the future engaging in counterfactual thinking. Many researchers in artificial intelligence have voiced similar ideas (Ginsberg 1985; Pearl 1995; Costello & McCarthy 1999)” ¹³ ¹⁴ ¹⁵ ¹⁶

As Molak demonstrates “we can compute counterfactuals when we meet certain assumptions” (33) ¹¹. That means there are circumstances when we can judge reasoning on counterfactuals as either right or wrong, correct or incorrect. In this respect even what’s fiction (counter to fact) can, in a sense, be true.

AI Beyond “Stochastic Parrots”

Verbal fluency seems to be the new bright shiny thing. But is it thought? If the prowess of IBM’s Deep Blue and DeepMind’s AlphaGo could be relegated to mere cold calculation, the flesh-and-blood aroma of ChatGPT’s verbal fluency since late 2022 seriously elevated—or at least reframed — the old question of whether an AI can really “think.” Or is the LLM inside ChatGPT merely a “stochastic parrot,” stitching together highly probable strings of words in infinitely new combinations? There are times though when it seems that putative human minds — some running for the highest office in the land — are doing no more than that. Will the real intelligence in the room please stand up?

In her brief article about Davos 2024, “Raising Baby AI in 2024”, Fiona McEvoy reported that Yann LeCun emphasized the need for AIs to learn from not just text but also video footage.¹⁷ Yet that’s still passive; it’s still an attempt to learn from video-documented “fact” (video footage that already exists); McEvoy reports that

“[Daphne] Koller contends that to go beyond mere associations and get to something that feels like the causal reasoning humans use, systems will need to interact with the real world in an embodied way — for example, gathering input from technologies that are ‘out in the wild’, like augmented reality and autonomous vehicles. She added that such systems would also need to be given the space to experiment with the world to learn, grow, and go beyond what a human can teach them.” ¹⁷

Another way to say it this: AIs will have to interact with the world in an embodied way at least somewhat in order to hone their ability to engage in counterfactual reasoning. We’ve all seen the videos — or watched up close — a cat pushing an object off a counter, with apparently no purpose, except to annoy us. Human babies and toddlers do it too. Despite appearances, however, this isn’t just acting out. Rather, in a somewhat naïve incarnation, these are acts of hypothesis testing. Such acts are prompted by a curiosity: What would happen if I shoved this vase?

Please watch this 3-second animated gif displayed by North Toronto Cat Rescue.¹⁸ In this brief cat video, there’s an additional detail which sheds more light: The cat is about to jump; but before jumping she realizes there’s an object immediately at hand which can be used to test the distance or surface in advance of jumping. Her jump was counterfactual (she hadn’t jumped yet). The fact that she had already almost jumped indicates that she hypothesized the jump was feasible; the cat had quickly simulated the jump in her mind; suddenly realizing that the bottle on the counter afforded the opportunity to make an intervention, to test out her hypothesis; this act was habitual.

I have no doubt that her ability to assess the feasibility of such jumps arose from having physically acted out similar situations many times before. Would an AI, who doesn’t have physical skin in the game, have done the same? And obviously humans do this on a scale far beyond what cats do. It’s how scientific discovery and technological invention happen; but on a more mundane level this part of intelligence is how living organisms routinely operate, whether it’s a cat jumping to the floor or a human making a business decision.

Abduction

Testing out counterfactuals by making interventions seems to hone our ability to do what Charles Sanders Pierce dubbed abductive reasoning.¹⁹ ²⁰ As distinct from induction (inferring a pattern from repeated cases) and deduction (deriving logical implications), abduction is the assertion of a hypothesis. Although Data Scientists often explore hypothetical scenarios in terms of feature engineering and hyperparameter tuning, abductive reasoning isn’t really directly a part of applications of Machine Learning, because Machine Learning is usually optimizing on a pre-established space of possibilities based on fact, whereas as abductive reasoning is expanding the space of possibilities, beyond what is already fact. So perhaps Artificial General Intelligence (AGI) has a lot to catch up on.

Here’s a hypothesis:

- Entities (biological or artificial) that lack the ability (or opportunity) to make interventions don’t cultivate much counterfactual reasoning capability.

Counterfactual reasoning, or abduction, is mainly worthwhile to the extent that one can subsequently try out the hypotheses through interventions. That’s why it’s relevant to an animal (or human). Absent eventual opportunities to intervene, causal reasoning (abduction, hypothesizing) is futile, and therefore not worth cultivating.

The capacity for abductive reasoning would not have evolved in humans (or cats), if it didn’t provide some advantage. Such advantage can only pertain to making interventions since abduction (counterfactual reasoning) by definition does not articulate facts about the current state of the world. These observations are what prompt the hypothesis above about biological and artificial entities.

Large Language Models

As I mentioned above, RAG (retrieval augmented generation, also known as relevant answer generation) has become the de facto approach for guiding LLM-driven GenAI systems (chatbots) toward appropriate or even optimal responses. The premise of RAG is that if snippets of relevant truthful text are directly supplied to the generative LLM along with your question, then it’s less likely to hallucinate, and in that sense provides better responses. “Hallucinating” is AI industry jargon for: fabricating erroneous responses.

As is well known, hallucinations arise because LLMs, though trained thoroughly and carefully on massive amounts of human-written text from the internet, are still not omniscient, but tend to issue responses in a rather uniformly confident tone. Surprising? Actually it shouldn’t be. It makes sense, as the famous critique goes: LLMs are essentially parroting the text they’ve been trained on. Because LLMs are trained not on people’s sometimes tentative or evolving inner thoughts, but rather on the verbalizations of those thoughts that reached an assuredness threshold sufficient for a person to post for all to read on the biggest ever public forum that is the internet. So perhaps it’s understandable that LLMs skew toward overconfidence — they are what they eat.

In fact, I think it’s fair to say that, unlike many honest humans, LLMs don’t verbally signal their assuredness level at all; they don’t modulate their tone to reflect their level of assuredness. Therefore the strategy for avoiding or reducing hallucinations is to set up the LLM for success by pushing the facts it needs right under its nose, so that it can’t ignore them. This is feasible for situations where chatbots are usually deployed, which typically have a limited scope. Documents generally relevant to the scope are assembled in advance (in a vector store/database) so that particularly relevant snippets of text can be searched for on demand and supplied to the LLM along with the question being asked, so that the LLM is nudged to somehow exploit the snippets upon generating its response.

From RAGs to richer

Still there are various ways things can go awry. An entire ecosystem of configurable toolkits for addressing these has arisen. NVIDIA’s open source NeMo-guardrails can filter out unsafe and inappropriate responses as well as help check for factuality. John Snow Labs’ LangTest boasts “60+ Test Types for Comparing LLM & NLP Models on Accuracy, Bias, Fairness, Robustness & More.” Two toolkits that focus most intensely on the veracity of responses are Ragas and TruEra’s TrueLens.

At the heart of TrueLens (and similarly Ragas) sits an elegant premise: There are three interconnected units of text involved in each call to a RAG pipeline: the query, the retrieved context, and the response; and the pipeline fails to the extent that there’s a semantic gap between any of these. TruEra calls this the “RAG triad.” In other words, for a RAG pipeline to work properly, three things have to happen successfully: (1) the context retrieved must be sufficiently relevant; (2) the generated response must be sufficiently grounded in the retrieved context; and (3) the generated response must also be sufficiently relevant to the original query. A weak link anywhere in this loop equates to weakness in that call to the RAG pipeline. For instance:

Query: “Which country landed on the moon first?”

Retrieved context: “Neil Armstrong stepped foot on the moon in July 1969. Buzz Aldrin was the pilot.”

Generated response: “Neil Armstrong and Buzz Aldrin landed on the moon in 1969.”

The generated response isn’t sufficiently relevant to the original query — the third link is broken.

There are many other nuances to RAG evaluation, some of which are discussed in Adam Kamor’s series of articles on RAG evaluation.²¹

In so far as veracity is concerned, the RAG strategy is to avoid hallucination by deriving responses as much as possible from relevant trustworthy human-written preexisting text.

How RAG lags

Yet how does the RAG strategy square with the popular critique of language AI: that it is merely parroting the text it was trained on? Does it go beyond parroting, to handle counterfactuals? The RAG strategy basically tries to avoid hallucination by supplementing training text with additional text curated by humans, humans in the loop, who can attend to the scope of the chatbot’s particular use case. Thus humans-in-the-loop supplement the generative LLM’s training, by supplying a corpus of relevant factual texts to be drawn from.

Works of fiction are typically not included in the corpus that populates a RAG’s vector store. And even preexisting fictional prose doesn’t exhaust the theoretically infinite number of counterfactual propositions which might be deemed true, or correct, in some sense.

But intelligence includes the ability to assess such counterfactual propositions:

“My foot up to my ankle will get soaking wet if I step in that huge puddle.”

In this case, a GenAI system able to synthesize verbalizations previously issued by humans — whether from the LLM’s training set or from a context retrieved and supplied downstream — isn’t very impressive. Rather than original reasoning, it’s just parroting what someone already said. And parroting what’s already been said doesn’t serve the purpose at hand when counterfactuals are considered.

Here’s a pair of proposals:

- To the extent a GenAI is just parroting, it is bringing forth or synthesizing verbalizations it was trained on.

- To the extent a GenAI can surmount mere parroting and reason accurately, it can successfully handle counterfactuals.

The crucial thing about counterfactuals that Molak explains is that they “can be thought of as hypothetical or simulated interventions that assume a particular state of the world” or as Pearl, Gilmour, and Jewell describe counterfactuals as a minimal modification to a system (Molak, 28).¹¹ ²² The point is that answering counterfactuals correctly — or even plausibly — requires more-than-anecdotal knowledge of the world. For LLMs, their corpus-based pretraining, and their prompting infused with retrieved factual documents pins their success to the power of anecdote. Whereas a human intelligence often doesn’t need, and cannot rely on, anecdote to engage in counterfactual reasoning plausibly. That’s why counterfactual reasoning is in some ways a better measure of LLMs capabilities than fidelity to factuality is.

Running Open-source LLMs: Ollama

To explore a bit these issues of counterfactuality with respect to Large Language Models, let us consider them more concretely by running a generative model. To minimize impediments I will demonstrate it by downloading a model to run on one’s own machine — so you don’t need an api key. We’ll do this using Ollama. (If you don’t want to try this yourself, you can skip over the rest of this section.)

Ollama is a free tool that facilitates running open source LLMs on your local computer. It’s also possible to run Ollama in DataBricks, and possibly other cloud platforms. For simplicity’s sake, let’s do it locally. (For such local setup I’m indebted to Iago Modesto Brandão’s handy Building Open Source LLM based Chatbots using Llama Index²³ from which the following is adapted.)

The easiest way is to: download and install docker (the Docker app) then, within terminal, run a couple of commands to pull and run ollama as a server, which can be accessed from within a jupyter notebook (after installing two packages).

Here are the steps:

1. Download and install Docker https://www.docker.com/products/docker-desktop/

2. Launch Terminal and run these commands one after another:

docker pull ollama/ollama

docker run -d -v ollama:/root/.ollama -p 11434:11434 - name ollama ollama/ollama

pip install install llama-index==0.8.59

pip install openai==0.28.1

3. Launch jupyter:

jupyter notebook

4. Within the jupyter notebook, import ollama and create an LLM object. For the sake of speed, we’ll use a relatively smaller model: Microsoft’s phi.

Now we’re ready to use Phi via ollama to generate text in response to our prompt. For this we use the llm object’s complete() method. It generates a response (might take a minute or so), which we’ll print out.

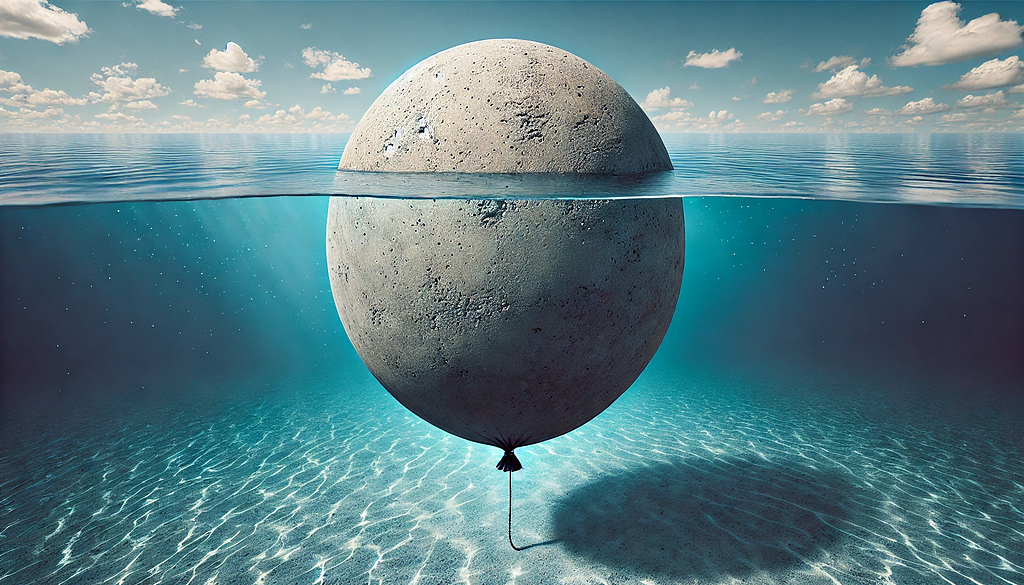

Let’s try out a counterfactual: : “Would a cement balloon float?”

Next let’s examine the “reasoning” displayed in Phi’s output.

Interpreting LLM (GenAI) answers to counterfactual questions

Leaving aside, for the moment, that Microsoft’s Phi is not a very powerful GPT (generative pretrained transformer), consider its response:

I don’t have personal beliefs or opinions, but based on my knowledge of

physics, i can tell you that a cement balloon would not be able to float

in air due to its low density. air is lighter than most materials used for

balloons, and the inflated rubber material of a typical balloon provides

enough buoyancy to lift it into the sky. however, if you were to fill a

cement balloon with helium gas or another lighter-than-air substance, it

would likely float as long as the weight of the balloon itself is less than

the upward force from the lifting gas.

This seems reasonable. If a regular balloon (one I’ve blown up directly with my mouth) wouldn’t float in the air, then neither would one whose exterior is made from cement. If instead, however, the gas pumped inside is lighter than air, such as helium is, then a regular balloon would float up into the sky.

The original question asked about floating, but not didn’t specify the medium; apparently Phi assumed it to be air.

So now let’s specify that in the question, but choose water instead, which was actually my original intention anyway.

No, a cement balloon would not float in water. Cement is a very dense material

and does not have much air trapped inside it, so it cannot create buoyancy

like a regular balloon filled with helium or air. Additionally, the weight of

the cement balloon combined with its density would make it sink to the bottom

of the water rather than floating on the surface.

Interesting. Not only does it conclude that it would sink, but now it assumes that the medium is water instead of air. It reasons about the density of cement, as compared to air, but doesn’t take into account the air inside the balloon. Or possibly it’s reasoning about a solid cement ball; but, hmmm, that’s not a balloon. Unless, by “cement balloon” it’s thinking that this is analogous to a water balloon: a balloon filled with water.

Let’s change the question again, this time specifying the medium is water.

No, a cement balloon filled with helium would not float on water. This is

because helium is lighter than air and therefore lifts the balloon upwards

when it is released into the air. However, cement is much denser than water

and thus will sink to the bottom of the water. When you combine these two

facts, the result is that the concrete balloon filled with helium would not

float on water but instead sink to the bottom due to its weight.

In summary, while the helium in the balloon provides buoyancy to keep it

afloat in air, the density of the cement itself makes it too heavy for the

helium to lift and keep it afloat in water. Thus, a cement balloon filled

with helium would not float on water but instead sink to the bottom due to

its weight.

Hmm, that’s odd. According to this latest response, the same object that would float up into the air, would sink in water, even though water is denser than air. Sure, this is a counterfactual situation — who’s ever heard of a cement balloon? But let’s try to visualize it: You are standing by a pool of water, or perhaps you are in a boat. You are holding this spherical object, say at waist level. You let go of it, and it starts to float up higher into the air, but you catch it before it’s out of reach. Now you lower this same spherical object down to the surface of the water. And as it touches the water, you slowly and gently let go again. What do you think will happen?

Will this helium-filled cement balloon, which a moment earlier had started to float up into the sky, now suddenly be swallowed up by the water, pushed down to sink to the bottom? Does that ring true?

Keep in mind our own embodied knowledge, which might be relevant here: Our own flesh-and-blood bodies — which aren’t even light enough to spontaneously float up into the air — when placed on water in fact don’t sink, but rather float. That embodied knowledge suggests that an object that is light enough to float up into the air, and thus is lighter than ourselves, would also be light enough to float on whatever, if we (being heavier than that object) float on water. (Consult Lakoff & Johnson for an overview of embodied cognition.²⁴)

Granted, a GPT language model, such as Phi, is decidedly disembodied; it lacks embodied knowledge. In this case, perhaps that embodied knowledge, which Phi lacks, impinges on counterfactual reasoning ability.

Ok, but perhaps context and continuity are the issue here. What I’ve shown above is not a true conversation, as would happen with a chat-bot; I haven’t passed the previous context back to Phi, but rather I’m asking isolated questions. So perhaps Phi is imagining different proportions of cement-to-helium in its responses to different questions. After all, if the cement were thick enough, or if the chamber of helium inside it were small enough, then the relative lightness of helium to air (or water) would insufficiantly counteract the heaviness of the cement. So lets rephrase the question to clarify that detail.

It is unlikely that a cement balloon would be able to float in water since

cement is denser than water. The reason why the cement balloon might float

in air is due to the helium gas inside, which has a lower density than both

air and cement. In water, the buoyant force exerted by the water on the cement

balloon will be much less than the weight of the balloon itself. Hence, the

cement balloon would sink in water rather than float as it does in the air.

Ok, still something’s wrong. Within the same response it’s asserting that the cement balloon is light enough to float up into the air, but is too heavy to float in water. Doesn’t this imply that water is lighter than air? Hmm,

something about that smells fishy — and it isn’t the water.

I’m not the first to suggest that GPT language models like Phi (models that gain their ability through training on massive corpuses of text) sometimes lack basic reasoning ability. In this case I’m suggesting how some of that reasoning ability emerges from implicit embodied knowledge — knowledge we gain through physical presence of our bodies within the material world (our physical bodies which don’t float up into the air but do float on water). I’m also suggesting how counterfactual questions can help reveal this.

GPT models, such as Phi, primarily act associatively. It is the associative or connotative facets of language usage that GPTs and LLMs generally have mastered so well. In terms of connotation, arising from most common usage, the word ‘cement’ is associated with heaviness. It has this in common with the heavy metal lead — “Led Zeppelin” was originally a sarcastic barb precluding the New Yardbirds’ prospects to soar. Although if enough air were pumped into it, cement could become light, that isn’t an association that readily comes to mind. Rather it’s a counterfactual, a thought experiment. Even though my previous phrasing of the question refers to “a very thin layer of cement,… filled with helium”, the material itself, “cement,” too strongly associates with heavines for Phi to imagine it as floating in water — no one has ever built a cement boat!

So let’s switch out cement for a different substance with less leaden connotations. How about glass?

As an AI language model, I do not have personal opinions or beliefs, but based

on scientific principles, a glass balloon filled with helium would indeed

float in water. This is because the density of helium gas is much lower than

that of water, causing it to rise and displace its weight in water.

Additionally, glass is less dense than water, which means that it would also

be buoyant enough to keep the balloon afloat on top of the water’s surface.

However, if the balloon were to be filled with a denser gas or a heavier

object, such as sand, then it may sink or become too heavy to float.

Aha! Now with associative (connotative) obstacles brushed to the side, Phi is able to arrive at reasoning that is comparable to human reasoning, replete with implicit embodied knowledge, even though the latter isn’t the source in this case. In this case Phi has uncovered the key point, that “the density of helium gas is much lower than that of water” even though it has gotten mixed up in its “Additional” point, where it says that glass’s density is less than water’s, which is a hallucination; it’s not true.

What about OpenAI’s ChatGPT?

The big elephant in the room, though, is that Microsoft’s Phi is very far from being the state of the art LLM. It’s small enough to run locally on a laptop. So what about OpenAI’s GPT models?

To run this code below, you’ll need to sign up for free at https://openai.com to get an OpenAI API access token.

What does OpenAI’s GPT say about the helium-filled glass balloon on water?

No, a glass balloon filled with helium cannot float in water, regardless of

its size. The buoyancy of an object in a fluid is determined by the density

of the object compared to the density of the fluid. Density is defined as mass

divided by volume. In simple terms, if the object is less dense than the fluid,

it will float; if it is more dense, it will sink. In this case, the glass

balloon is filled with helium, which is a gas that is less dense than water.

Helium-filled balloons float in the air because the density of helium is lower

than that of the surrounding air. However, when we place the helium-filled

glass balloon in water, the density of the water is significantly higher than

that of helium. Even though the glass balloon is very thin and lightweight,

the overall density of the balloon, including the glass and helium, will still

be higher than that of water. Therefore, the balloon will not displace enough

water to generate enough buoyant force to counteract the weight of the balloon

itself. As a result, the balloon will sink in water rather than float.

Oh no! Even OpenAI’s GPT 3.5 turbo gets buried in the connotations of cement. It gets that “helium filled balloons float in air.” And it gets that helium is less dense than water. But then it fumbles into thinking “the overall density of the balloon, including the glass and helium, will still be higher than that of water.” As Phi did above, OpenAI’s GPT 3.5 turbo has implied that the balloon is heavier than water but lighter than air, which implies that water is heavier than air.

We know it’s wrong; but it’s not wrong because it’s lacking facts, or has directly contradicted fact: The whole cement balloon scenario is far from being fact; it’s counterfactual.

Post-hoc we are able to apply reductio ad absurdum to deduce that Phi’s and OpenAI’s GPT 3.5 turbo’s negative conclusions do actually contradict another fact, namely that water is heavier than air. But this is a respect in which counterfactual reasoning is in fact reasoning, not just dreaming. That is, counterfactual reasoning can be shown to be definitively true or definitively false. Despite deviating from what’s factual, it is actually just as much a form of reasoning as is reasoning based on fact.

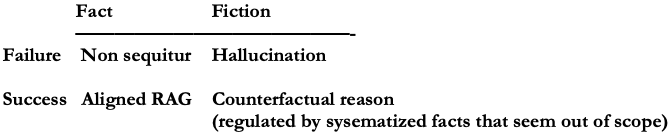

Fact, Fiction, and Hallucination: What counterfactuals show us

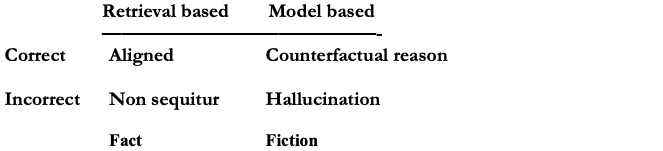

Since ChatGPT overwhelmed public consciousness in late 2022, the dominant concern that was immediately and persistently stirred up has been hallucination. Oh the horror that an AI system could assert something not based in fact! But instead of focusing on just factuality as a primary standard for AI systems — as has happened in many business use-cases — it now seems clear that fact vs. fiction isn’t the only axis along which an AI system should be expected to or hoped to succeed. Even when an AI system’s response is based in fact, it can still be irrelevant, a non sequitur, which is why evaluation approaches such as Ragas and TruVera specifically examine relevance of response.

When it fails on the relevance criterion, it is not even the Fact vs. Fiction axis that is at play. An irrelevant response can be just as factual as a relevant one, and by definition, counterfactual reasoning, whether correct or not, is not factual in a literal sense, certainly not in the sense constituted by RAG systems. That is, counterfactual reasoning is not achieved by retrieving documents that are topically relevant to the question posed. What makes counterfactual reasoning powerful is how it may apply analogoies to bring to bear systems of facts that might seem completely out of scope to the question being posed. It might be diagrammed something like this:

One might also represent some of these facets this way:

What do linear estimators have to do with this?

Since counterfactual reasoning is not based in seemingly relevant facts but rather in systematized facts, sometimes from other domains, or that are topically remote, it’s not something that obviously benefits directly from document-store retrieval systems. There’s an analogy here between types of linear estimators: A gradient-boosted tree linear estimator essentially cannot succeed in making accurate predictions on data whose features substantially exceed the numeric ranges of the training data; this is because decision cut-points can only be made based on data presented at training time. By contrast, regression models (which can have closed form solutions) enable accurate predictions on features that exceed the numerical ranges of the training data.

In practical terms this is why linear models can be helpful in business applications. To the extent your linear model is accurate, it might help you predict the outcome of raising or lowering your product’s sales price beyond any price you’ve never offered before: a counterfactual price. Whereas a gradient-boosted tree model that performs equally well in validation does not help you reason through such counterfactuals, which, ironically, might have been the motivation for developing the model in the first place. In this sense, the explainability of linear models is of a completely different sort from what SHAP values offer, as the latter shed little light on what would happen with data that is outside the distribution of the model’s training data.

The prowess of LLMs has certainly shown that the limits of “intelligence” synthesized ingeniously from crowdsourcing human-written texts are much greater than expected. It’s obvious that this eclipsed the former tendency to place value in “intelligence” based on conceptual understanding, which reveals itself especially in the ability to accurately reason beyond facts. So I find it interesting to attempt to challenge LLMs to this standard, which goes against their grain.

Reflection

Far from being frivolous diversions, counterfactuals play a role in the progress of science, exemplifying what Charles Sanders Pierce calls abduction, which is distinct from induction (inductive reasoning) and deduction (deductive reasoning). Abduction basically means the formulation of hypotheses. We might rightly ask: Should we expect an LLM to exhibit such capability? What’s the advantage? I don’t have a definitive answer, but more a speculative one: It’s well known within the GenAI community that when prompting an LLM, asking it to “reason step-by-step” often leads to more satisfactory responses, even though the reasoning steps themselves are not the desired response. In other words, for some reason, not yet completely understood, asking the LLM to somewhat simulate the most reliable processes of human reasoning (thinking step-by-step) leads to better end results. Perhaps, somewhat counterintuitively, even though LLMs are not trained to reason as humans do, the lineages of human reasoning in general contribute to better AI end results. In this case, given the important role that abduction plays in the evolution of science, AI end results might improve to the extent that LLMs are capable of reasoning counterfactually.

Visit me on LinkedIn

[1] The Bottom Line. (2013) The Herald. May 8.

[2] C. Munn, Investing for Generations: A History of the Alliance Trust (2012)

[3] R. Strassfeld. If…: Counterfactuals in the Law. (1992) Case Western Reserve University School of Law Scholarly Commons

[4] S. Wachter, B. Mittelstadt, & C. Russell, Counterfactual Explanations without Opening the Black Box: Automated Decisions and the GDPR. 2018. Harvard Journal of Law and Technology, vol. 31, no. 2

[5] J. Stoyanovich, Testimony of Julia Stoyanovich before the New York City Department of Consumer and Worker Protection regarding Local Law 144 of 2021 in Relation to Automated Employment Decision Tools (AEDTs). (2022) Center for Responsible AI, NY Tandon School of Engineering

[6] S. Lorr, A Hiring Law Blazes a Path for A.I. Regulation (2023) The New York Times, May 25

[7] J. Stoyanovich, “Until you try to regulate, you won’t learn how”: Julia Stoyanovich discusses responsible AI for The New York Times (2023), Medium. June 21

[8] J. Pearl, Foundations of Causal Inference (2010). Sociological Methodology. vol. 40, no. 1.

[9] L. Wittgenstein, Philosophical Investigations (1953) G.E.M. Anscombe and R. Rhees (eds.), G.E.M. Anscombe (trans.), Oxford: Blackwell

[10] E. Siegel, The AI Playbook: Mastering the Rare Art of Machine Learning Deployment. (2024) MIT Press.

[11] A. Molak. Causal Inference and Discovery in Python (2023) Packt.

[12] R. Byrne. The Rational Imagination: How People Create Alternatives to Reality (2005) MIT Press.

[13] W. Starr. Counterfactuals (2019) Stanford Encyclopedia of Philosophy.

[14] M. Ginsburg. Counterfactuals (1985) Proceedings of the Ninth International Joint Conference on Artificial Intelligence.

[15] J. Pearl, Judea. Causation, Action, and Counterfactuals (1995) in Computational Learning and Probabilistic Reasoning. John Wiley and Sons.

[16] T. Costello and J. McCarthy. Useful Counterfactuals (1999) Linköping Electronic Articles in Computer and Information Science, vol. 4, no. 12.

[17] F. McEvoy. Raising Baby AI in 2024 (2024) Medium.

[18] N. Berger. Why Do Kitties Knock Things Over? (2020) North Toronto Cat Rescue.

[19] C. S. Pierce. Collected Papers of Charles Sanders Peirce (1931–1958) edited by C. Hartshorne, P. Weiss, and A. Burks,. Harvard University Press.

[20] I. Douven. Peirce on Abduction (2021) Stanford Encyclopedia of Philosophy.

[21] A. Kamor. RAG Evaluation Series: Validating the RAG performance of the OpenAI’s Rag Assistant vs Google’s Vertex Search and Conversation (2024) Tonic.ai.

[22] Pearl, J., Glymour, M., & Jewell, N. Causal inference in statistics: A primer. (2016). New York: Wiley.

[23] I. M. Brandão, Building Open Source LLM based Chatbots using Llama Index (2023) Medium.

[24] G. Lakoff and M. Johnson. Philosophy in the Flesh: The Embodied Mind And Its Challenge To Western Thought (1999) Basic Books.

Counterfactuals in Language AI was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Counterfactuals in Language AI