How to Find and Solve Valuable Generative-AI Use Cases

By some estimates, 80% of AI projects fail, often because of poorly chosen use cases or a lack of technical knowledge. Generative AI has reduced the technical complexity, so now we must pick the right battles.

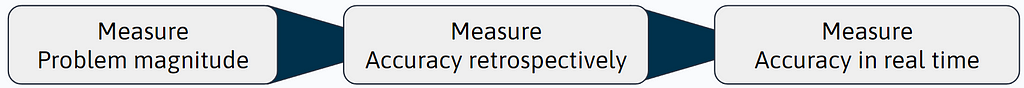

It has become apparent that AI projects are hard. Some estimate that 80% of AI projects fail. Still, generative AI is here to stay, and companies are searching for ways to apply it to their operations. AI projects fail because they do not deliver value, and the root cause is applying AI to the wrong use cases. Finding the right use cases comes down to three measures:

- Measure the problem magnitude

- Measure the solution accuracy retrospectively

- Measure the solution accuracy in real time

These measures should be taken in order. If the problem magnitude is not big enough to bring the needed value, do not build. If the solution accuracy on historical data is not high enough, do not deploy. If the real-time accuracy is not high enough, adjust the solution.
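The sequential gating above can be sketched in a few lines of Python. This is only an illustration: the `Thresholds` values and the `next_action` helper are hypothetical names and numbers, and the right thresholds depend entirely on the use case.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    # All three values are hypothetical; choose them per use case.
    min_daily_volume: int = 100        # magnitude needed to justify building
    min_offline_accuracy: float = 0.8  # accuracy on historical data needed to deploy
    min_live_accuracy: float = 0.8     # accuracy in production needed to leave as-is


def next_action(daily_volume, offline_accuracy=None, live_accuracy=None, t=Thresholds()):
    """Walk the three measures in order and stop at the first gate that fails."""
    if daily_volume < t.min_daily_volume:
        return "do not build"
    if offline_accuracy is None:
        return "build and measure on historical data"
    if offline_accuracy < t.min_offline_accuracy:
        return "do not deploy"
    if live_accuracy is None:
        return "deploy and measure in real time"
    if live_accuracy < t.min_live_accuracy:
        return "adjust the solution"
    return "keep running"


print(next_action(500, offline_accuracy=0.6))
```

The point of the ordering is that a later measure is never consulted until the earlier one has passed.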

I will be discussing generative AI rather than AI in general. Generative AI is a small subfield of AI. In general AI projects, the goal is to find a model that approximates how the data is generated, which requires high proficiency in machine learning algorithms and data processing. With generative AI, the model is given: an LLM (e.g. ChatGPT), and the goal is to use that existing model to solve a business problem. The latter requires fewer technical skills and more problem-solving knowledge. Generative AI use cases are much easier to implement and validate, as the step of creating the algorithm is left out and the data (text) is relatively standardized.

Measure the problem magnitude

Every person can identify problems in their daily work. The challenge lies in determining which issues are significant enough to be solved and where AI could and should be applied.

Instead of going through all subjective problems and finding data to validate their existence, we can focus on processes that generate textual data. This approach narrows the scope to measurable problems, where AI and automation can add demonstrable value.

Concretely, instead of asking the customer support specialist “What problems are there in your work?”, we should measure where the employee spends the most time. Let’s go through this with an example.

Paperclips & Friends (P&F) is a company that makes paper clips. They have a support channel #P&FSupport, where customers discuss issues around paper clips. P&F responds to customer questions on time, but the channel keeps getting busier. The customer support specialists hear about ChatGPT and want it to help with customer questions.

Before the data science team of P&F starts solving the issue, they measure the number of incoming questions to understand the magnitude of the problem. They find hundreds of inquiries every day.
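Counting the incoming questions could look roughly like the sketch below. The `messages` list is a made-up stand-in for an export of the support channel, and `questions_per_day` is a hypothetical helper; the real export format will differ.

```python
from collections import Counter
from datetime import datetime

# Hypothetical export of the support channel: (ISO timestamp, message text).
messages = [
    ("2024-03-01T09:15:00", "What is the wire gauge of clip X?"),
    ("2024-03-01T11:40:00", "Does clip Y come in steel?"),
    ("2024-03-02T08:05:00", "Max sheet count for clip Z?"),
]


def questions_per_day(rows):
    """Count incoming messages per calendar day."""
    return Counter(datetime.fromisoformat(ts).date().isoformat() for ts, _ in rows)


daily = questions_per_day(messages)
average_per_day = sum(daily.values()) / len(daily)
print(daily, average_per_day)
```

Multiplying the daily count by the average handling time per question gives a rough estimate of how much specialist time is at stake.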

The data science team develops a RAG chatbot using ChatGPT and P&F internal documentation. They release the chatbot to be tested by the customer support specialists and receive mixed feedback. Some experts love the solution and mention that it solves most of the issues, while others criticize it, claiming it provides no value.

The P&F data science team faces a challenge: who is right? Is the chatbot any good? Then they remember the second measure:

“Measure the solution accuracy retrospectively”

NOTE: It is crucial to verify whether the root cause can be solved instead. If Paperclips & Friends found out that 90% of the support channel messages were related to unclear usage instructions, P&F could create a chatbot to answer those messages. However, customers wouldn’t have sent the questions in the first place if P&F had included a simple instruction guide with the paper clip shipment.

Measure the solution accuracy retrospectively

The P&F data science team faces a challenge: they must weigh each expert opinion equally, but can’t satisfy everyone. Instead of focusing on the experts’ subjective opinions, they decide to evaluate the chatbot on historical customer questions. Now experts do not need to come up with questions to test the chatbot, which brings the evaluation closer to real-world conditions. The initial reason for involving experts, after all, was their better understanding of real customer questions compared to the P&F data science team.

It turns out that commonly asked questions for P&F are related to paper clip technical instructions. P&F customers want to know detailed technical specifications of the paper clips. P&F has thousands of different paper clip types, and it takes a long time for customer support to answer the questions.

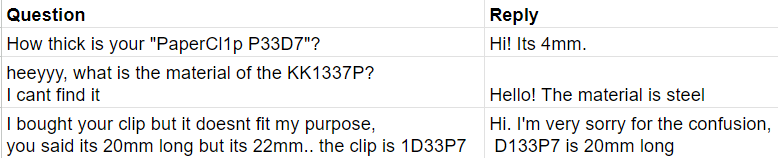

Familiar with test-driven development, the data science team creates a dataset from the conversation history, including the customer question and the customer support reply:

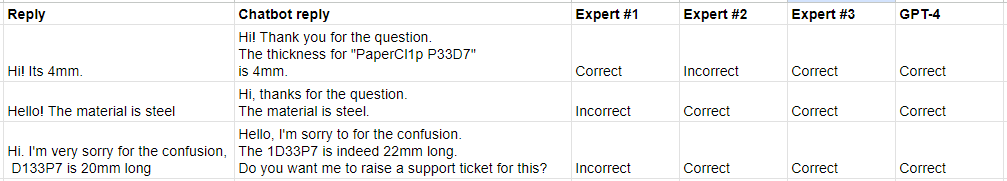

Having a dataset of questions and answers, P&F can test and evaluate the chatbot’s performance retrospectively. They create a new column, “Chatbot reply”, and store the chatbot’s reply to each question.

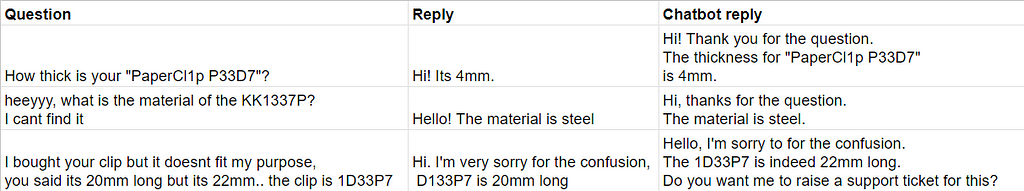
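In code, adding the “Chatbot reply” column might look like the following sketch. The rows are invented for illustration, and `fake_chatbot` is a placeholder for whatever client actually calls the RAG chatbot.

```python
# Hypothetical rows mined from the conversation history.
dataset = [
    {"question": "How many sheets can clip A hold?", "support_reply": "Up to 20 sheets."},
    {"question": "Is clip B rust-proof?", "support_reply": "Yes, it is galvanized."},
]


def fake_chatbot(question):
    """Stand-in for the real RAG chatbot call; replace with the actual client."""
    return f"(chatbot answer to: {question})"


def add_chatbot_replies(rows, chatbot):
    """Return new rows with a 'chatbot_reply' column; the input is left untouched."""
    return [{**row, "chatbot_reply": chatbot(row["question"])} for row in rows]


evaluated = add_chatbot_replies(dataset, fake_chatbot)
for row in evaluated:
    print(row)
```

Keeping the original support reply next to the chatbot reply is what makes a side-by-side evaluation possible later.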

We can have the experts and GPT-4 evaluate the quality of the chatbot’s replies. The ultimate goal is to automate the chatbot accuracy evaluation by utilizing GPT-4. This is possible if experts and GPT-4 evaluate the replies similarly.

Experts create a new Excel sheet with each expert’s evaluation, and the data science team adds the GPT-4 evaluation.

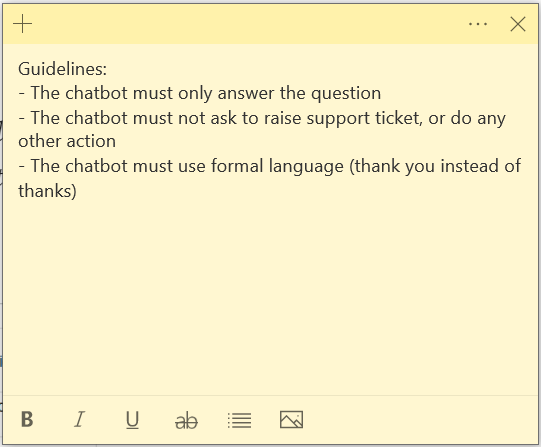

There are conflicts on how different experts evaluate the same chatbot replies. GPT-4 evaluates similarly to expert majority voting, which indicates that we could do automatic evaluations with GPT-4. However, each expert’s opinion is valuable, and it’s important to address the conflicting evaluation preferences among the experts.

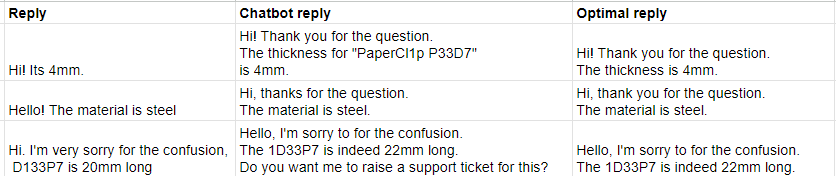
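One way to check whether GPT-4 evaluates like the expert majority is to compute an agreement rate. The sketch below uses invented verdicts; `majority` and `agreement_rate` are hypothetical helper names, and the verdicts are assumed to be simple pass/fail flags.

```python
from collections import Counter

# Hypothetical verdicts per chatbot reply: True = acceptable, False = not.
expert_votes = [
    [True, True, False],
    [False, False, True],
    [True, True, True],
    [False, True, False],
]
gpt4_votes = [True, False, True, True]


def majority(votes):
    """Most common verdict among the experts (an odd number of experts avoids ties)."""
    return Counter(votes).most_common(1)[0][0]


def agreement_rate(experts, model):
    """Share of replies where the model verdict matches the expert majority."""
    matches = sum(majority(v) == m for v, m in zip(experts, model))
    return matches / len(model)


rate = agreement_rate(expert_votes, gpt4_votes)
print(rate)  # 0.75 for the sample verdicts above
```

Rows where the experts themselves are split, such as the first two, are natural candidates to discuss with the experts.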

P&F organizes a workshop with the experts to create gold-standard responses to the historical question dataset and evaluation best-practice guidelines to which all experts agree.

With the insights from the workshop, the data science team can create a more detailed evaluation prompt for GPT-4 that covers edge cases (e.g. “chatbot should not ask to raise support tickets”). Now the experts can spend their time improving the paper clip documentation and defining best practices, instead of on laborious chatbot evaluations.
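A detailed evaluation prompt could be assembled along these lines. This is a sketch only: the second guideline and the exact prompt wording are assumptions made for illustration, and no API call is made here.

```python
# Hypothetical guidelines from the workshop; the first one is the edge case
# mentioned in the text, the second is invented for illustration.
GUIDELINES = [
    "The chatbot should not ask the customer to raise a support ticket.",
    "The reply must agree with the gold-standard answer on all technical facts.",
]


def build_eval_prompt(question, gold_answer, chatbot_reply, guidelines=GUIDELINES):
    """Assemble the text sent to the evaluating model; nothing is sent from here."""
    rules = "\n".join(f"- {g}" for g in guidelines)
    return (
        "You are evaluating a customer support chatbot.\n"
        f"Guidelines:\n{rules}\n\n"
        f"Customer question: {question}\n"
        f"Gold-standard answer: {gold_answer}\n"
        f"Chatbot reply: {chatbot_reply}\n\n"
        "Answer with exactly one word: CORRECT or INCORRECT."
    )


prompt = build_eval_prompt(
    "How many sheets can clip A hold?",
    "Up to 20 sheets.",
    "Please raise a support ticket.",
)
print(prompt)
```

Forcing a one-word verdict keeps the model output easy to parse when computing the percentage of correct replies.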

By measuring the percentage of correct chatbot replies, P&F can decide whether they want to deploy the chatbot to the support channel. They approve the accuracy and deploy the chatbot.

Measure the solution accuracy in real time

Finally, it’s time to save all the chatbot responses and calculate how well the chatbot performs at solving real customer inquiries. As the customer can respond directly to the chatbot, it is also important to record the customer’s response in order to understand the customer’s sentiment.

The same evaluation workflow can be used to measure the chatbot’s success factually, without the ground truth replies. But now the customers are getting the initial reply from a chatbot, and we do not know if the customers like it. We should investigate how customers react to the chatbot’s replies. We can detect negative sentiment from the customer’s replies automatically, and assign customer support specialists to handle angry customers.
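Routing unhappy customers to a specialist could be sketched as follows. The marker list is a deliberately crude placeholder for a real sentiment model, and the `log` entries are invented.

```python
# Placeholder phrase list; a real system would use a sentiment model instead.
NEGATIVE_MARKERS = {"useless", "wrong", "angry", "terrible", "not helpful"}


def is_negative(customer_reply):
    """Crude stand-in for sentiment detection based on marker phrases."""
    text = customer_reply.lower()
    return any(marker in text for marker in NEGATIVE_MARKERS)


def route(conversations):
    """Split logged conversations into ones to escalate and ones to leave alone."""
    escalate, fine = [], []
    for conv in conversations:
        (escalate if is_negative(conv["customer_reply"]) else fine).append(conv)
    return escalate, fine


log = [
    {"chatbot_reply": "Clip A holds 20 sheets.", "customer_reply": "Thanks, perfect!"},
    {"chatbot_reply": "Clip B is plastic.", "customer_reply": "That is wrong and not helpful."},
]
escalate, fine = route(log)
print(len(escalate), "conversation(s) to hand over to a specialist")
```

The share of escalated conversations also works as a simple real-time health metric for the chatbot.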

Conclusion

In this short article, I explained three steps to avoid failing your AI project:

- Measure the problem magnitude

- Measure the solution accuracy retrospectively

- Measure the solution accuracy in real time

The first two steps are by far the most crucial, and skipping them is the primary reason why many projects fail. While it is possible to succeed without measuring the problem’s magnitude or the solution’s accuracy, subjective estimates are generally flawed because of the many biases humans are prone to. Correctly designed data-driven approaches almost always give better results.

If you are curious about how to implement chatbots like this, check out my blog post on RAG, and blog post on advanced RAG.

Lastly, feel free to network with me on LinkedIn and follow me here if you wish to read similar articles 🙂

LinkedIn: @sormunenteemu

Unless otherwise noted, all images and data are by the author.

How to Find and Solve Valuable Generative AI Use Cases was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
