Improving retrieval beyond semantic similarity

Vector databases have revolutionized the way we search and retrieve information by allowing us to embed data and quickly search over it using the same embedding model, with only the query being embedded at inference time. However, despite their impressive capabilities, vector databases have a fundamental flaw: they treat queries and documents in the same way. This can lead to suboptimal results, especially when dealing with complex tasks like matchmaking, where queries and documents are inherently different.

The challenge of Task-aware RAG (Retriever-augmented Generation) lies in its requirement to retrieve documents based not only on their semantic similarity but also on additional contextual instructions. This adds a layer of complexity to the retrieval process, as it must consider multiple dimensions of relevance.

Here are some examples of Task-Aware RAG problems:

1. Matching Company Problem Statements to Job Candidates

- Query: “Find candidates with experience in scalable system design and a proven track record in optimizing large-scale databases, suitable for addressing our current challenge of enhancing data retrieval speeds by 30% within the existing infrastructure.”

- Context: This query aims to directly connect the specific technical challenge of a company with potential job candidates who have relevant skills and experience.

2. Matching Pseudo-Domains to Startup Descriptions

- Query: “Match a pseudo-domain for a startup that specializes in AI-driven, personalized learning platforms for high school students, emphasizing interactive and adaptive learning technologies.”

- Context: Designed to find an appropriate, catchy pseudo-domain name that reflects the innovative and educational focus of the startup. A pseudo-domain name is a domain name based on a pseudo-word, which is a word that sound real but isn’t.

3. Investor-Startup Matchmaking

- Query: “Identify investors interested in early-stage biotech startups, with a focus on personalized medicine and a history of supporting seed rounds in the healthcare sector.”

- Context: This query seeks to match startups in the biotech field, particularly those working on personalized medicine, with investors who are not only interested in biotech but have also previously invested in similar stages and sectors.

4. Retrieving Specific Kinds of Documents

- Query: “Retrieve recent research papers and case studies that discuss the application of blockchain technology in securing digital voting systems, with a focus on solutions tested in the U.S. or European elections.”

- Context: Specifies the need for academic and practical insights on a particular use of blockchain, highlighting the importance of geographical relevance and recent applications

The Challenge

Let’s consider a scenario where a company is facing various problems, and we want to match these problems with the most relevant job candidates who have the skills and experience to address them. Here are some example problems:

- “High employee turnover is prompting a reassessment of core values and strategic objectives.”

2. “Perceptions of opaque decision-making are affecting trust levels within the company.”

3. “Lack of engagement in remote training sessions signals a need for more dynamic content delivery.”

We can generate true positive and hard negative candidates for each problem using an LLM. For example:

problem_candidates = {

"High employee turnover is prompting a reassessment of core values and strategic objectives.": {

"True Positive": "Initiated a company-wide cultural revitalization project that focuses on autonomy and purpose to enhance employee retention.",

"Hard Negative": "Skilled in rapid recruitment to quickly fill vacancies and manage turnover rates."

},

# … (more problem-candidate pairs)

}

Even though the hard negatives may appear similar on the surface and could be closer in the embedding space to the query, the true positives are clearly better fits for addressing the specific problems.

The Solution: Instruction-Tuned Embeddings, Reranking, and LLMs

To tackle this challenge, we propose a multi-step approach that combines instruction-tuned embeddings, reranking, and LLMs:

1. Instruction-Tuned Embeddings

Instruction-Tuned embeddings function like a bi-encoder, where both the query and document embeddings are processed separately and then their embeddings are compared. By providing additional instructions to each embedding, we can bring them to a new embedding space where they can be more effectively compared.

The key advantage of instruction-tuned embeddings is that they allow us to encode specific instructions or context into the embeddings themselves. This is particularly useful when dealing with complex tasks like job description-resume matchmaking, where the queries (job descriptions) and documents (resumes) have different structures and content.

By prepending task-specific instructions to the queries and documents before embedding them, we can theoretically guide the embedding model to focus on the relevant aspects and capture the desired semantic relationships. For example:

documents_with_instructions = [

"Represent an achievement of a job candidate achievement for retrieval: " + document

if document in true_positives

else document

for document in documents

]

This instruction prompts the embedding model to represent the documents as job candidate achievements, making them more suitable for retrieval based on the given job description.

Still, RAG systems are difficult to interpret without evals, so let’s write some code to check the accuracy of three different approaches:

1. Naive Voyage AI instruction-tuned embeddings with no additional instructions.

2. Voyage AI instruction-tuned embeddings with additional context to the query and document.

3. Voyage AI non-instruction-tuned embeddings.

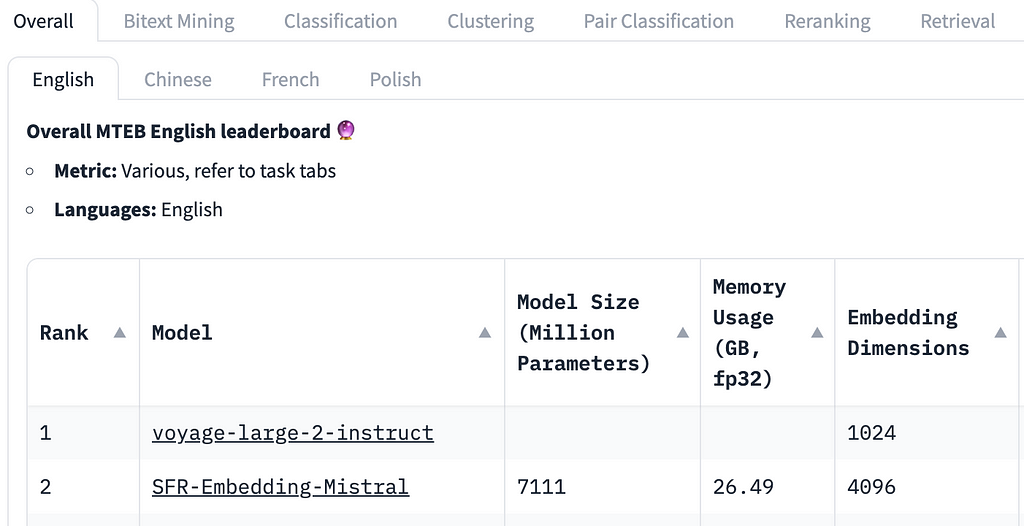

We use Voyage AI embeddings because they are currently best-in-class, and at the time of this writing comfortably sitting at the top of the MTEB leaderboard. We are also able to use three different strategies with vectors of the same size, which will make comparing them easier. 1024 dimensions also happens to be much smaller than any embedding modals that come even close to performing as well.

In theory, we should see instruction-tuned embeddings perform better at this task than non-instruction-tuned embeddings, even if just because they are higher on the leaderboard. To check, we will first embed our data.

When we do this, we try prepending the string: “Represent the most relevant experience of a job candidate for retrieval: “ to our documents, which gives our embeddings a bit more context about our documents.

If you want to follow along, check out this colab link.

import voyageai

vo = voyageai.Client(api_key="VOYAGE_API_KEY")

problems = []

true_positives = []

hard_negatives = []

for problem, candidates in problem_candidates.items():

problems.append(problem)

true_positives.append(candidates["True Positive"])

hard_negatives.append(candidates["Hard Negative"])

documents = true_positives + hard_negatives

documents_with_instructions = ["Represent the most relevant experience of a job candidate for retrieval: " + document for document in documents]

batch_size = 50

resume_embeddings_naive = []

resume_embeddings_task_based = []

resume_embeddings_non_instruct = []

for i in range(0, len(documents), batch_size):

resume_embeddings_naive += vo.embed(

documents[i:i + batch_size], model="voyage-large-2-instruct", input_type='document'

).embeddings

for i in range(0, len(documents), batch_size):

resume_embeddings_task_based += vo.embed(

documents_with_instructions[i:i + batch_size], model="voyage-large-2-instruct", input_type=None

).embeddings

for i in range(0, len(documents), batch_size):

resume_embeddings_non_instruct += vo.embed(

documents[i:i + batch_size], model="voyage-2", input_type='document' # we are using a non-instruct model to see how well it works

).embeddings

We then insert our vectors into a vector database. We don’t strictly need one for this demo, but a vector database with metadata filtering capabilities will allow for cleaner code, and for eventually scaling this test up. We will be using KDB.AI, where I’m a Developer Advocate. However, any vector database with metadata filtering capabilities will work just fine.

To get started with KDB.AI, go to cloud.kdb.ai to fetch your endpoint and api key.

Then, let’s instantiate the client and import some libraries.

!pip install kdbai_client

import os

from getpass import getpass

import kdbai_client as kdbai

import time

Connect to our session with our endpoint and api key.

KDBAI_ENDPOINT = (

os.environ["KDBAI_ENDPOINT"]

if "KDBAI_ENDPOINT" in os.environ

else input("KDB.AI endpoint: ")

)

KDBAI_API_KEY = (

os.environ["KDBAI_API_KEY"]

if "KDBAI_API_KEY" in os.environ

else getpass("KDB.AI API key: ")

)

session = kdbai.Session(api_key=KDBAI_API_KEY, endpoint=KDBAI_ENDPOINT)

Create our table:

schema = {

"columns": [

{"name": "id", "pytype": "str"},

{"name": "embedding_type", "pytype": "str"},

{"name": "vectors", "vectorIndex": {"dims": 1024, "metric": "CS", "type": "flat"}},

]

}

table = session.create_table("data", schema)

Insert the candidate achievements into our index, with an “embedding_type” metadata filter to separate our embeddings:

import pandas as pd

embeddings_df = pd.DataFrame(

{

"id": documents + documents + documents,

"embedding_type": ["naive"] * len(documents) + ["task"] * len(documents) + ["non_instruct"] * len(documents),

"vectors": resume_embeddings_naive + resume_embeddings_task_based + resume_embeddings_non_instruct,

}

)

table.insert(embeddings_df)

And finally, evaluate the three methods above:

import numpy as np

# Function to embed problems and calculate similarity

def get_embeddings_and_results(problems, true_positives, model_type, tag, input_prefix=None):

if input_prefix:

problems = [input_prefix + problem for problem in problems]

embeddings = vo.embed(problems, model=model_type, input_type="query" if input_prefix else None).embeddings

# Retrieve most similar items

results = []

most_similar_items = table.search(vectors=embeddings, n=1, filter=[("=", "embedding_type", tag)])

most_similar_items = np.array(most_similar_items)

for i, item in enumerate(most_similar_items):

most_similar = item[0][0] # the fist item

results.append((problems[i], most_similar == true_positives[i]))

return results

# Function to calculate and print results

def print_results(results, model_name):

true_positive_count = sum([result[1] for result in results])

percent_true_positives = true_positive_count / len(results) * 100

print(f"n{model_name} Model Results:")

for problem, is_true_positive in results:

print(f"Problem: {problem}, True Positive Found: {is_true_positive}")

print("nPercent of True Positives Found:", percent_true_positives, "%")

# Embedding, result computation, and tag for each model

models = [

("voyage-large-2-instruct", None, 'naive'),

("voyage-large-2-instruct", "Represent the problem to be solved used for suitable job candidate retrieval: ", 'task'),

("voyage-2", None, 'non_instruct'),

]

for model_type, prefix, tag in models:

results = get_embeddings_and_results(problems, true_positives, model_type, tag, input_prefix=prefix)

print_results(results, tag)

Here are the results:

naive Model Results:

Problem: High employee turnover is prompting a reassessment of core values and strategic objectives., True Positive Found: True

Problem: Perceptions of opaque decision-making are affecting trust levels within the company., True Positive Found: True

...

Percent of True Positives Found: 27.906976744186046 %

task Model Results:

...

Percent of True Positives Found: 27.906976744186046 %

non_instruct Model Results:

...

Percent of True Positives Found: 39.53488372093023 %

The instruct model performed worse on this task!

Our dataset is small enough that this isn’t a significantly large difference (under 35 high quality examples.)

Still, this shows that

a) instruct models alone are not enough to deal with this challenging task.

b) while instruct models can lead to good performance on similar tasks, it’s important to always run evals, because in this case I suspected they would do better, which wasn’t true

c) there are tasks for which instruct models perform worse

2. Reranking

While instruct/regular embedding models can narrow down our candidates somewhat, we clearly need something more powerful that has a better understanding of the relationship between our documents.

After retrieving the initial results using instruction-tuned embeddings, we employ a cross-encoder (reranker) to further refine the rankings. The reranker considers the specific context and instructions, allowing for more accurate comparisons between the query and the retrieved documents.

Reranking is crucial because it allows us to assess the relevance of the retrieved documents in a more nuanced way. Unlike the initial retrieval step, which relies solely on the similarity between the query and document embeddings, reranking takes into account the actual content of the query and documents.

By jointly processing the query and each retrieved document, the reranker can capture fine-grained semantic relationships and determine the relevance scores more accurately. This is particularly important in scenarios where the initial retrieval may return documents that are similar on a surface level but not truly relevant to the specific query.

Here’s an example of how we can perform reranking using the Cohere AI reranker (Voyage AI also has an excellent reranker, but when I wrote this article Cohere’s outperformed it. Since then they have come out with a new reranker that according to their internal benchmarks performs just as well or better.)

First, let’s define our reranking function. We can also use Cohere’s Python client, but I chose to use the REST API because it seemed to run faster.

import requests

import json

COHERE_API_KEY = 'COHERE_API_KEY'

def rerank_documents(query, documents, top_n=3):

# Prepare the headers

headers = {

'accept': 'application/json',

'content-type': 'application/json',

'Authorization': f'Bearer {COHERE_API_KEY}'

}

# Prepare the data payload

data = {

"model": "rerank-english-v3.0",

"query": query,

"top_n": top_n,

"documents": documents,

"return_documents": True

}

# URL for the Cohere rerank API

url = 'https://api.cohere.ai/v1/rerank'

# Send the POST request

response = requests.post(url, headers=headers, data=json.dumps(data))

# Check the response and return the JSON payload if successful

if response.status_code == 200:

return response.json() # Return the JSON response from the server

else:

# Raise an exception if the API call failed

response.raise_for_status()

Now, let’s evaluate our reranker. Let’s also see if adding additional context about our task improves performance.

import cohere

co = cohere.Client('COHERE_API_KEY')

def perform_reranking_evaluation(problem_candidates, use_prefix):

results = []

for problem, candidates in problem_candidates.items():

if use_prefix:

prefix = "Relevant experience of a job candidate we are considering to solve the problem: "

query = "Here is the problem we want to solve: " + problem

documents = [prefix + candidates["True Positive"]] + [prefix + candidate for candidate in candidates["Hard Negative"]]

else:

query = problem

documents = [candidates["True Positive"]]+ [candidate for candidate in candidates["Hard Negative"]]

reranking_response = rerank_documents(query, documents)

top_document = reranking_response['results'][0]['document']['text']

if use_prefix:

top_document = top_document.split(prefix)[1]

# Check if the top ranked document is the True Positive

is_correct = (top_document.strip() == candidates["True Positive"].strip())

results.append((problem, is_correct))

# print(f"Problem: {problem}, Use Prefix: {use_prefix}")

# print(f"Top Document is True Positive: {is_correct}n")

# Evaluate overall accuracy

correct_answers = sum([result[1] for result in results])

accuracy = correct_answers / len(results) * 100

print(f"Overall Accuracy with{'out' if not use_prefix else ''} prefix: {accuracy:.2f}%")

# Perform reranking with and without prefixes

perform_reranking_evaluation(problem_candidates, use_prefix=True)

perform_reranking_evaluation(problem_candidates, use_prefix=False)

Now, here are our results:

Overall Accuracy with prefix: 48.84%

Overall Accuracy without prefixes: 44.19%

By adding additional context about our task, it might be possible to improve reranking performance. We also see that our reranker performed better than all embedding models, even without additional context, so it should definitely be added to the pipeline. Still, our performance is lacking at under 50% accuracy (we retrieved the top result first for less than 50% of queries), there must be a way to do much better!

The best part of rerankers are that they work out of the box, but we can use our golden dataset (our examples with hard negatives) to fine-tune our reranker to make it much more accurate. This might improve our reranking performance by a lot, but it might not generalize to different kinds of queries, and fine-tuning a reranker every time our inputs change can be frustrating.

3. LLMs

In cases where ambiguity persists even after reranking, LLMs can be leveraged to analyze the retrieved results and provide additional context or generate targeted summaries.

LLMs, such as GPT-4, have the ability to understand and generate human-like text based on the given context. By feeding the retrieved documents and the query to an LLM, we can obtain more nuanced insights and generate tailored responses.

For example, we can use an LLM to summarize the most relevant aspects of the retrieved documents in relation to the query, highlight the key qualifications or experiences of the job candidates, or even generate personalized feedback or recommendations based on the matchmaking results.

This is great because it can be done after the results are passed to the user, but what if we want to rerank dozens or hundreds of results? Our LLM’s context will be exceeded, and it will take too long to get our output. This doesn’t mean you shouldn’t use an LLM to evaluate the results and pass additional context to the user, but it does mean we need a better final-step reranking option.

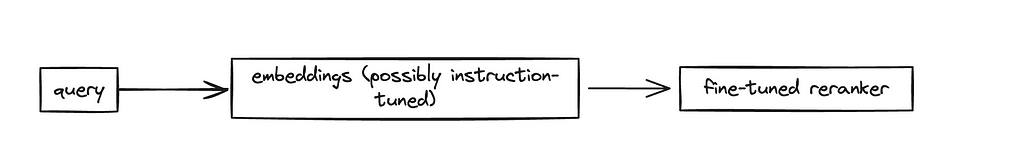

Let’s imagine we have a pipeline that looks like this:

This pipeline can narrow down millions of possible documents to just a few dozen. But the last few dozen is extremely important, we might be passing only three or four documents to an LLM! If we are displaying a job candidate to a user, it’s very important that the first candidate shown is a much better fit than the fifth.

We know that LLMs are excellent rerankers, and there are a few reasons for that:

- LLMs are list aware. This means they can see other candidates and compare them, which is additional information that can be used. Imagine you (a human) were asked to rate a candidate from 1–10. Would showing you all other candidates help? Of course!

- LLMs are really smart. LLMs understand the task they are given, and based on this can very effectively understand whether a candidate is a good fit, regardless of simple semantic similarity.

We can exploit the second reason with a perplexity based classifier. Perplexity is a metric which estimates how much an LLM is ‘confused’ by a particular output. In other words, we can as an LLM to classify our candidate into ‘a very good fit’ or ‘not a very good fit’. Based on the certainty with which it places our candidate into ‘a very good fit’ (the perplexity of this categorization,) we can effectively rank our candidates.

There are all kinds of optimizations that can be made, but on a good GPU (which is highly recommended for this part) we can rerank 50 candidates in about the same time that cohere can rerank 1 thousand. However, we can parallelize this calculation on multiple GPUs to speed this up and scale to reranking thousands of candidates.

First, let’s install and import lmppl, a library that let’s us evaluate the perplexity of certain LLM completions. We will also create a scorer, which is a large T5 model (anything larger runs too slowly, and smaller performs much worse.) If you can achieve similar results with a decoder model, please let me know, as that would make additional performance gains much easier (decoders are getting better and cheaper much more quickly than encoder-decoder models.)

!pip install lmppl

import lmppl

# Initialize the scorer for a encoder-decoder model, such as flan-t5. Use small, large, or xl depending on your needs. (xl will run much slower unless you have a GPU and a lot of memory) I recommend large for most tasks.

scorer = lmppl.EncoderDecoderLM('google/flan-t5-large')

Now, let’s create our evaluation function. This can be turned into a general function for any reranking task, or you can change the classes to see if that improves performance. This example seems to work well. We cache responses so that running the same values is faster, but this isn’t too necessary on a GPU.

cache = {}

def evaluate_candidates(query, documents, personality, additional_command=""):

"""

Evaluate the relevance of documents to a given query using a specified scorer,

caching individual document scores to avoid redundant computations.

Args:

- query (str): The query indicating the type of document to evaluate.

- documents (list of str): List of document descriptions or profiles.

- personality (str): Personality descriptor or model configuration for the evaluation.

- additional_command (str, optional): Additional command to include in the evaluation prompt.

Returns:

- sorted_candidates_by_score (list of tuples): List of tuples containing the document description and its score, sorted by score in descending order.

"""

try:

uncached_docs = []

cached_scores = []

# Identify cached and uncached documents

for document in documents:

key = (query, document, personality, additional_command)

if key in cache:

cached_scores.append((document, cache[key]))

else:

uncached_docs.append(document)

# Process uncached documents

if uncached_docs:

input_prompts_good_fit = [

f"{personality} Here is a problem statement: '{query}'. Here is a job description we are determining if it is a very good fit for the problem: '{doc}'. Is this job description a very good fit? Expected response: 'a great fit.', 'almost a great fit', or 'not a great fit.' This document is: "

for doc in uncached_docs

]

print(input_prompts_good_fit)

# Mocked scorer interaction; replace with actual API call or logic

outputs_good_fit = ['a very good fit.'] * len(uncached_docs)

# Calculate perplexities for combined prompts

perplexities = scorer.get_perplexity(input_texts=input_prompts_good_fit, output_texts=outputs_good_fit)

# Store scores in cache and collect them for sorting

for doc, good_ppl in zip(uncached_docs, perplexities):

score = (good_ppl)

cache[(query, doc, personality, additional_command)] = score

cached_scores.append((doc, score))

# Combine cached and newly computed scores

sorted_candidates_by_score = sorted(cached_scores, key=lambda x: x[1], reverse=False)

print(f"Sorted candidates by score: {sorted_candidates_by_score}")

print(query, ": ", sorted_candidates_by_score[0])

return sorted_candidates_by_score

except Exception as e:

print(f"Error in evaluating candidates: {e}")

return None

Now, let’s rerank and evaluate:

def perform_reranking_evaluation_neural(problem_candidates):

results = []

for problem, candidates in problem_candidates.items():

personality = "You are an extremely intelligent classifier (200IQ), that effectively classifies a candidate into 'a great fit', 'almost a great fit' or 'not a great fit' based on a query (and the inferred intent of the user behind it)."

additional_command = "Is this candidate a great fit based on this experience?"

reranking_response = evaluate_candidates(problem, [candidates["True Positive"]]+ [candidate for candidate in candidates["Hard Negative"]], personality)

top_document = reranking_response[0][0]

# Check if the top ranked document is the True Positive

is_correct = (top_document == candidates["True Positive"])

results.append((problem, is_correct))

print(f"Problem: {problem}:")

print(f"Top Document is True Positive: {is_correct}n")

# Evaluate overall accuracy

correct_answers = sum([result[1] for result in results])

accuracy = correct_answers / len(results) * 100

print(f"Overall Accuracy Neural: {accuracy:.2f}%")

perform_reranking_evaluation_neural(problem_candidates)

And our result:

Overall Accuracy Neural: 72.09%

This is much better than our rerankers, and required no fine-tuning! Not only that, but this is much more flexible towards any task, and easier to get performance gains just by modifying classes and prompt engineering. The drawback is that this architecture is unoptimized, it’s difficult to deploy (I recommend modal.com for serverless deployment on multiple GPUs, or to deploy a GPU on a VPS.)

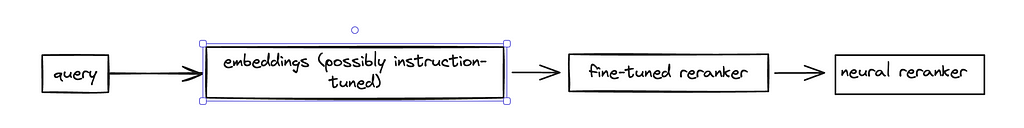

With this neural task aware reranker in our toolbox, we can create a more robust reranking pipeline:

Conclusion

Enhancing document retrieval for complex matchmaking tasks requires a multi-faceted approach that leverages the strengths of different AI techniques:

1. Instruction-tuned embeddings provide a foundation by encoding task-specific instructions to guide the model in capturing relevant aspects of queries and documents. However, evaluations are crucial to validate their performance.

2. Reranking refines the retrieved results by deeply analyzing content relevance. It can benefit from additional context about the task at hand.

3. LLM-based classifiers serve as a powerful final step, enabling nuanced reranking of the top candidates to surface the most pertinent results in an order optimized for the end user.

By thoughtfully orchestrating instruction-tuned embeddings, rerankers, and LLMs, we can construct robust AI pipelines that excel at challenges like matching job candidates to role requirements. Meticulous prompt engineering, top-performing models, and the inherent capabilities of LLMs allow for better Task-Aware RAG pipelines — in this case delivering outstanding outcomes in aligning people with ideal opportunities. Embracing this multi-pronged methodology empowers us to build retrieval systems that just retrieving semantically similar documents, but truly intelligent and finding documents that fulfill our unique needs.

Task-Aware RAG Strategies for When Sentence Similarity Fails was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Task-Aware RAG Strategies for When Sentence Similarity Fails

Go Here to Read this Fast! Task-Aware RAG Strategies for When Sentence Similarity Fails