Routing in RAG-Driven Applications

Directing the application flow based on query intent

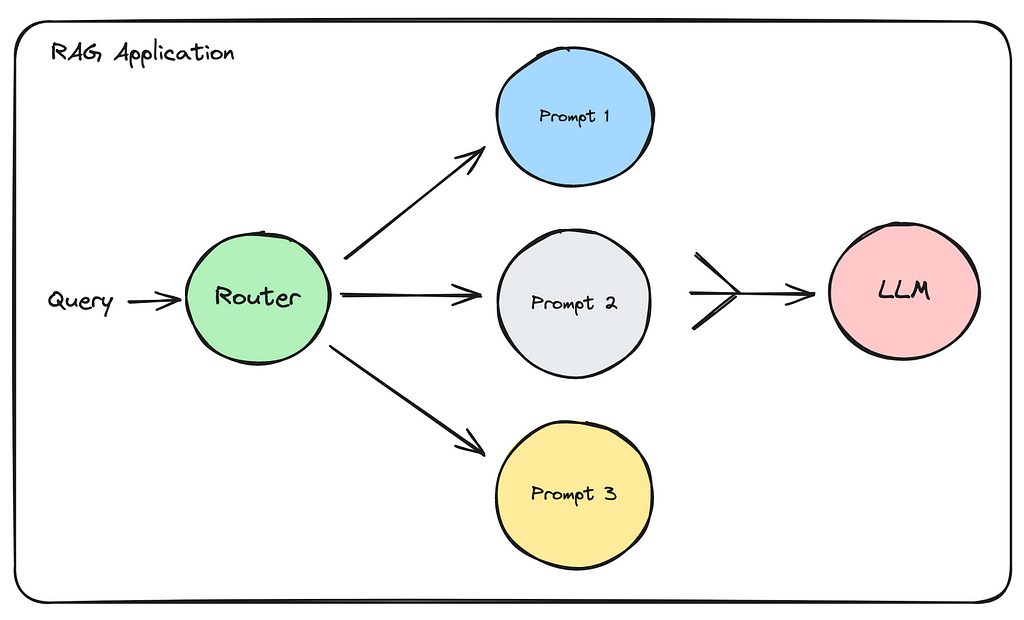

Routing the control flow inside a Retrieval Augmented Generation (RAG) application based on the intent of the user’s query can help us create more useful and powerful RAG-based applications.

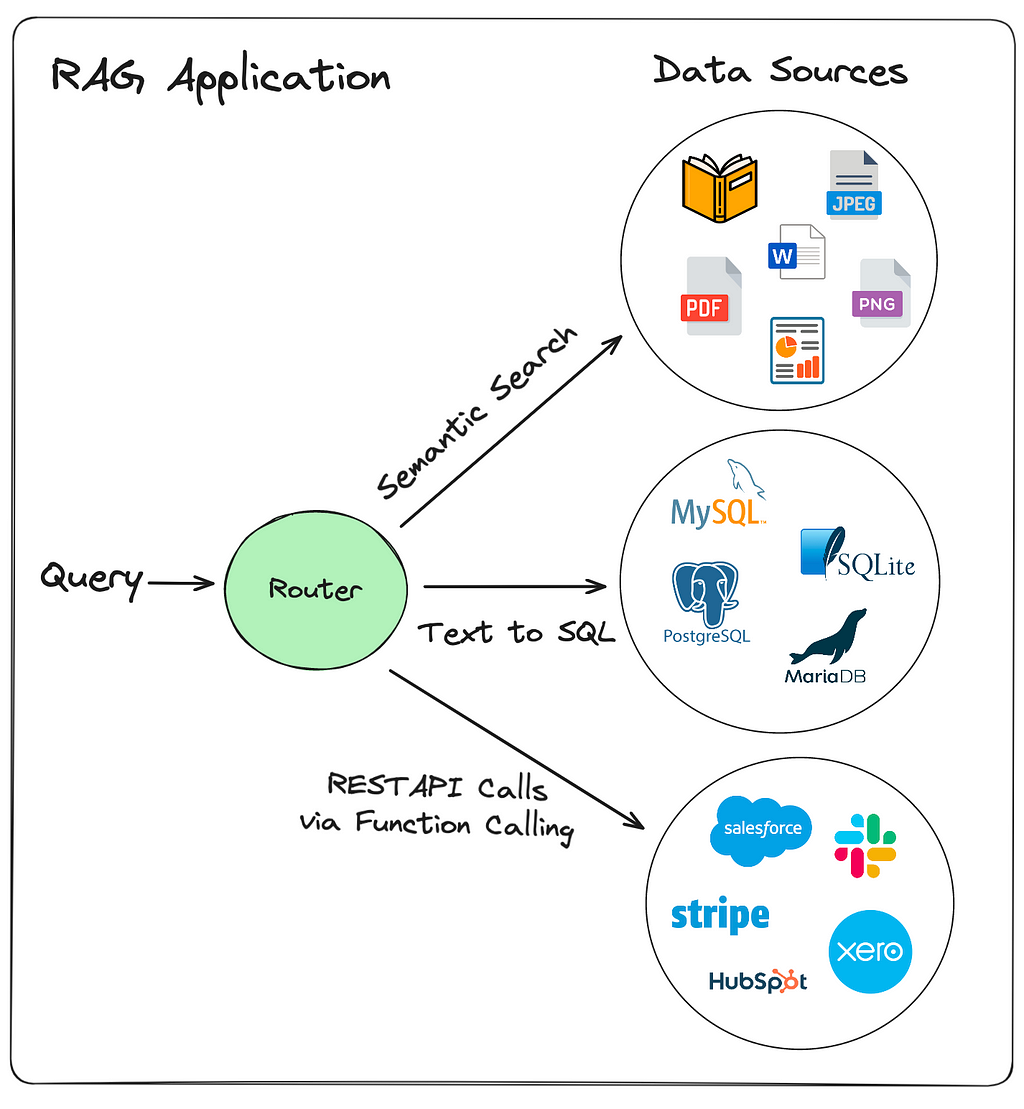

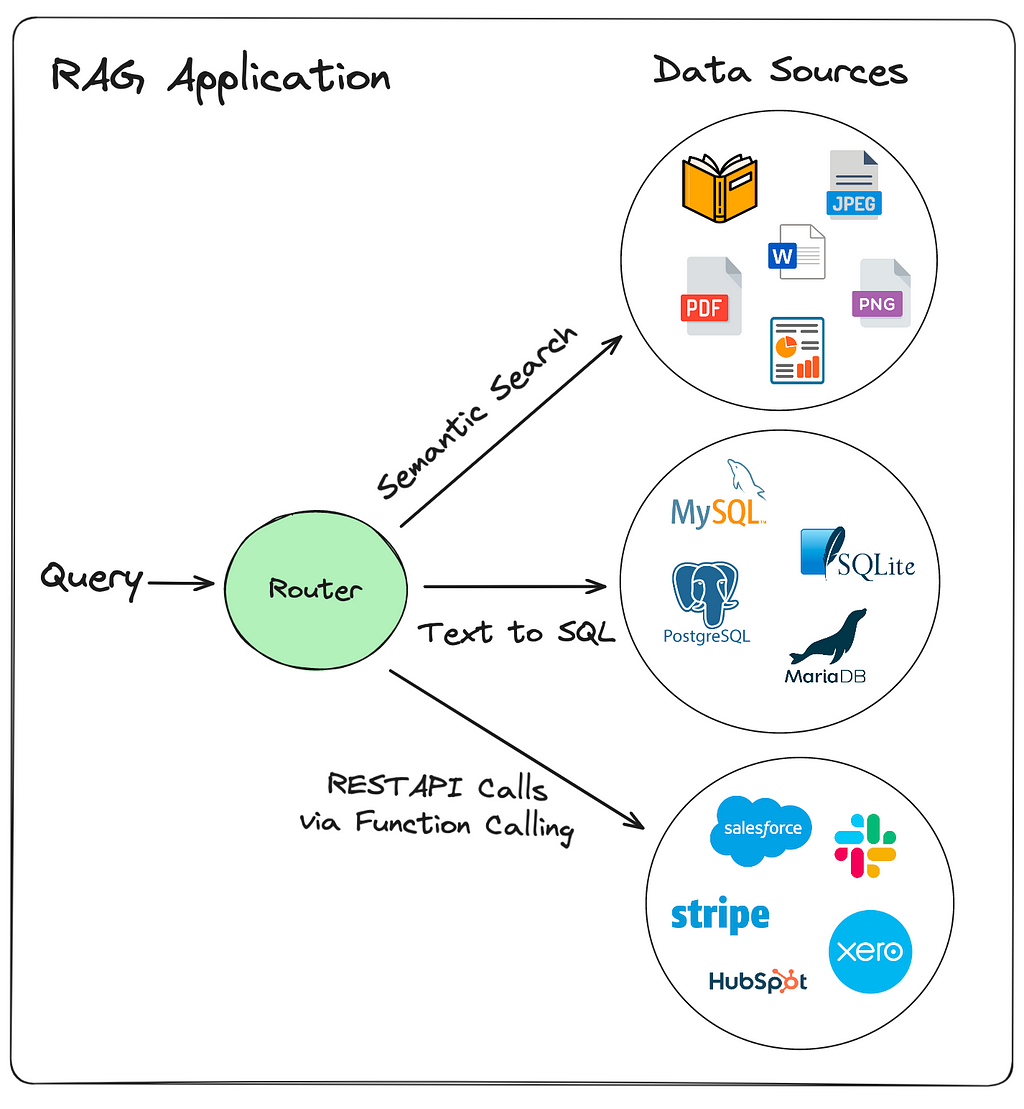

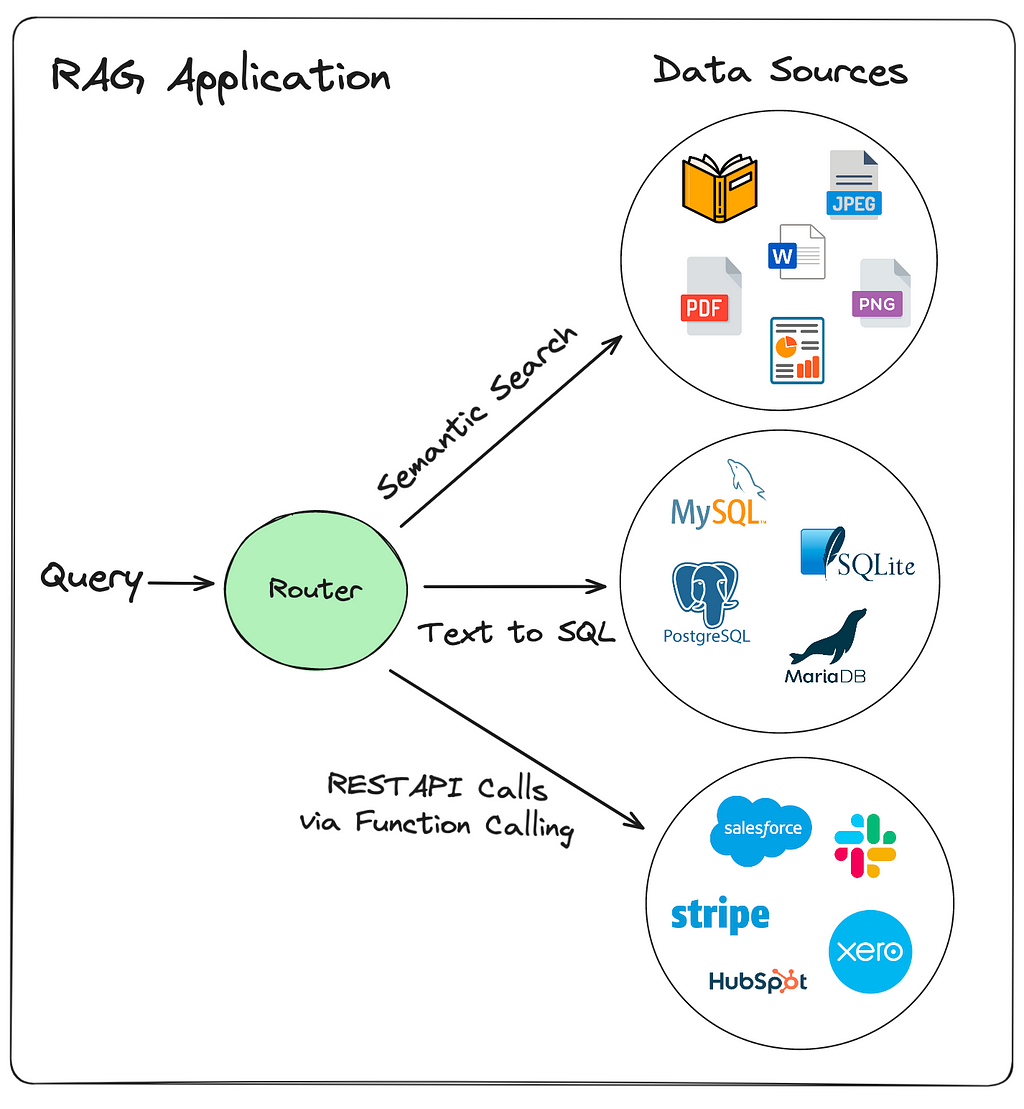

The data we want to let the user interact with may well come from a diverse range of sources, such as reports, documents, images, databases, and third-party systems. For business-based RAG applications, we may also want to let the user interact with information from several areas of the business, such as the sales, ordering and accounting systems.

Because of this diverse range of data sources, the way the information is stored, and the way we want to interact with it, is likely to be diverse too. Some data may be stored in vector stores, some in SQL databases, and some we may need to access through API calls because it sits in third-party systems.

There could also be different vector stores set up for the same set of data, each optimised for a different query type. For example, one vector store could be set up for answering summary-type questions, and another for answering specific, directed questions.

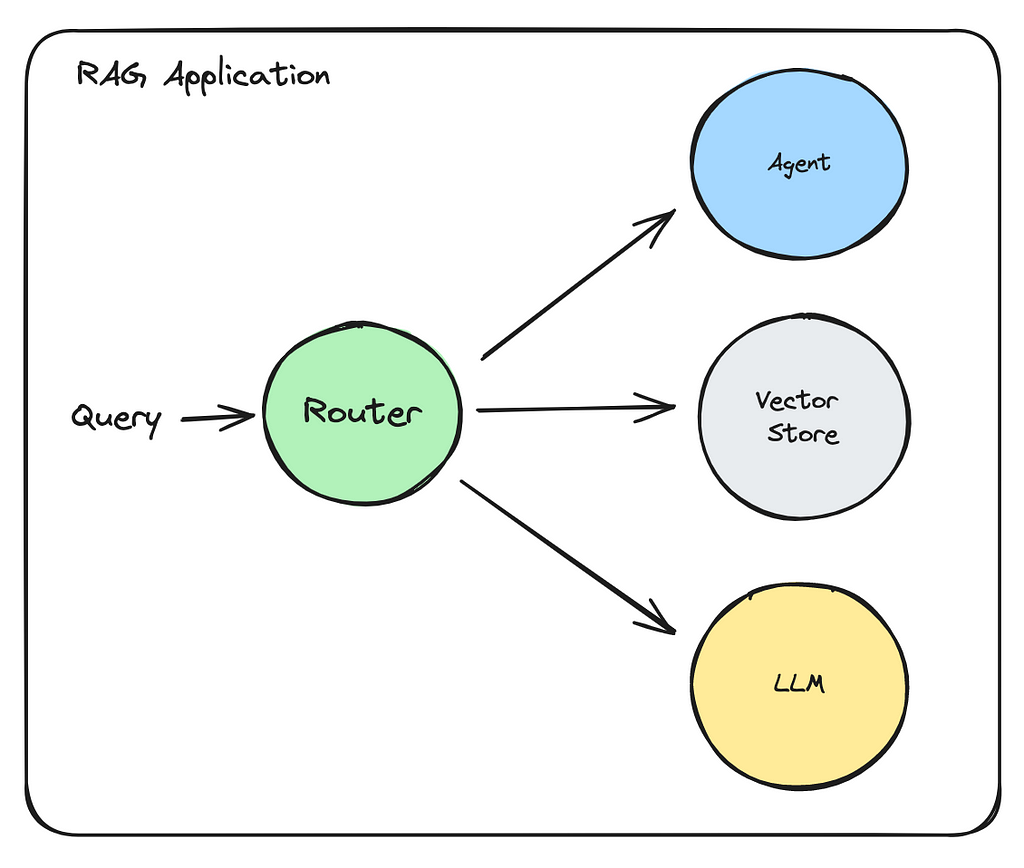

We may also want to route to different component types based on the question. For example, we may want to pass the query to an Agent, a VectorStore, or directly to an LLM for processing, all depending on the nature of the question.

We may even want to customise the prompt templates depending on the question being asked.

All in all, there are numerous reasons we would want to change and direct the flow of the user’s query through the application. The more use cases our application is trying to fulfil, the more likely we are to have routing requirements throughout the application.

Routers are essentially just If/Else statements we can use to direct the control flow of the query.

What is interesting about them, though, is that they need to make their decisions based on natural language input. So we are looking for a discrete output based on a natural language description.

And since much of the routing logic relies on LLMs or machine learning algorithms, which are probabilistic in nature, we cannot guarantee that a router will always make the right choice. On top of that, we are unlikely to be able to predict every query variation that reaches a router. However, by following best practices and doing some testing, we should be able to use Routers to help create more powerful RAG applications.

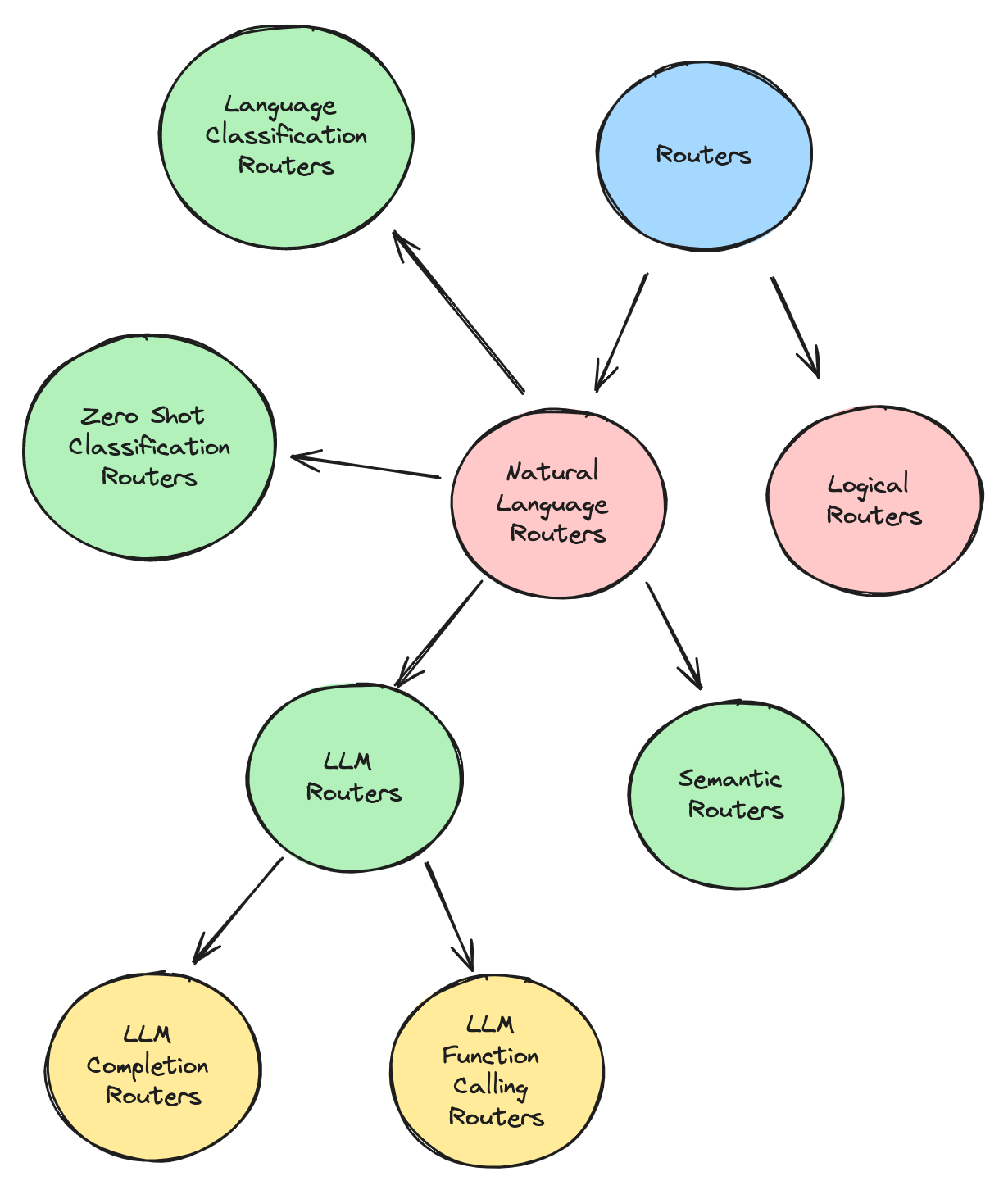

Natural Language Routers

Here we will explore a few of the natural language routers I have found implemented in various RAG and LLM frameworks and libraries.

- LLM Completion Routers

- LLM Function Calling Routers

- Semantic Routers

- Zero Shot Classification Routers

- Language Classification Routers

The diagram below gives a description of these routers, along with the frameworks/packages where they can be found.

The diagram also includes Logical Routers, which I am defining as routers that work based on discrete logic, such as conditions on string length, file names, integer values, etc. In other words, they are not based on having to understand the intent of a natural language query.

Let’s explore each of these routers in a little more detail.

LLM Routers

These leverage the decision-making abilities of LLMs to select a route based on the user’s query.

LLM Completion Router

These use an LLM completion call, asking the LLM to return the single word that best describes the query, chosen from a list of word options you pass in to its prompt. This word can then be used in an If/Else condition to control the application flow.

This is how the LLM Selector router from LlamaIndex works, and it is also the example given for a router in the LangChain docs.

Let’s look at a code sample, based on the one provided in the LangChain docs, to make this a bit clearer. As you can see, coding one of these up yourself in LangChain is pretty straightforward.

```python
from langchain_anthropic import ChatAnthropic
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate

# Set up the LLM chain to return a single word based on the query,
# and based on a list of words we provide to it in the prompt template.
# ChatAnthropic reads the ANTHROPIC_API_KEY environment variable.
llm_completion_select_route_chain = (
    PromptTemplate.from_template("""
Given the user question below, classify it as either
being about `LangChain`, `Anthropic`, or `Other`.

Do not respond with more than one word.

<question>
{question}
</question>

Classification:"""
    )
    | ChatAnthropic(model_name="claude-3-haiku-20240307")
    | StrOutputParser()
)


# We set up an If/Else condition to route the query to the correct chain
# based on the LLM completion call above
# (anthropic_chain, langchain_chain and general_chain are defined elsewhere)
def route_to_chain(route_name):
    # Normalise the reply, since the LLM may add whitespace or change casing
    route = route_name.strip().lower()
    if "anthropic" in route:
        return anthropic_chain
    elif "langchain" in route:
        return langchain_chain
    else:
        return general_chain

...

# Later on in the application, we can use the response from the LLM
# completion chain to control (i.e. route) the flow of the application
# to the correct chain via the route_to_chain function we created
route_name = llm_completion_select_route_chain.invoke({"question": user_query})
chain = route_to_chain(route_name)
chain.invoke(user_query)
```
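Because the LLM’s reply is free text, it can come back with stray whitespace, punctuation or different casing (for example "Anthropic." rather than "anthropic"), or with something that matches none of the options. The following is a minimal sketch, assuming the same three labels as the prompt above, of normalising the raw reply and falling back to a default route. `normalize_route` is a hypothetical helper, not part of LangChain:

```python
import string

# Labels the classification prompt asks the LLM to choose from
ROUTE_OPTIONS = ("langchain", "anthropic", "other")


def normalize_route(raw_reply: str, options=ROUTE_OPTIONS, default: str = "other") -> str:
    """Map a free-text LLM reply onto one of the allowed route labels.

    Hypothetical helper: strips surrounding whitespace and punctuation
    (including backticks and full stops), lowercases, and falls back to
    `default` when the reply cannot be matched to a single label.
    """
    cleaned = raw_reply.strip().strip(string.punctuation + string.whitespace).lower()
    if cleaned in options:
        return cleaned
    # Accept a longer reply only if exactly one label appears inside it
    matches = [option for option in options if option in cleaned]
    if len(matches) == 1:
        return matches[0]
    return default


print(normalize_route("Anthropic."))       # anthropic
print(normalize_route("  `LangChain`\n"))  # langchain
print(normalize_route("I am not sure"))    # other
```

The normalised label can then be passed to `route_to_chain` in place of the raw reply. Defaulting to the general route keeps an unexpected reply from breaking the application, at the cost of occasionally sending a query down the least specialised path.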

LLM Function Calling Router

This leverages the function-calling ability of LLMs to pick a route to traverse. The different routes are set up as functions, each with an appropriate description, in the LLM function call. Then, based on the query passed to it, the LLM is able to return the correct function (i.e., route) for us to take.

This is how the Pydantic Router works inside LlamaIndex. It is also how most Agents select the correct tool: they leverage the function-calling abilities of LLMs to pick the right tool for the job based on the user’s query.
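The following is a minimal sketch of the idea, assuming an OpenAI-style tool schema (a `name`, a `description` and JSON-schema `parameters` per function). The LLM call itself is left out: `fake_tool_call` stands in for the tool call a function-calling model might return, and the route and handler names are hypothetical:

```python
import json

# Each route is described as a "function" the LLM may choose to call
# (OpenAI-style tool schema). The descriptions are what the model reads
# when deciding which route fits the query.
ROUTE_TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "query_sales_database",
            "description": "Answer questions about sales figures, orders and revenue.",
            "parameters": {
                "type": "object",
                "properties": {"question": {"type": "string"}},
                "required": ["question"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "search_documents",
            "description": "Answer questions using internal reports and documents.",
            "parameters": {
                "type": "object",
                "properties": {"question": {"type": "string"}},
                "required": ["question"],
            },
        },
    },
]


# Hypothetical handlers standing in for a SQL chain and a vector store chain
def query_sales_database(question: str) -> str:
    return f"[sales DB] {question}"


def search_documents(question: str) -> str:
    return f"[vector store] {question}"


HANDLERS = {
    "query_sales_database": query_sales_database,
    "search_documents": search_documents,
}
# Every described route must have a handler, and vice versa
assert {tool["function"]["name"] for tool in ROUTE_TOOLS} == set(HANDLERS)


def dispatch(tool_call: dict) -> str:
    """Run the handler named in the model's tool call (i.e. take that route)."""
    name = tool_call["name"]
    if name not in HANDLERS:
        raise ValueError(f"Model selected an unknown route: {name}")
    # OpenAI's API, for example, returns the arguments as a JSON string
    arguments = json.loads(tool_call["arguments"])
    return HANDLERS[name](**arguments)


# Stand-in for the tool call a model might return for this query
fake_tool_call = {
    "name": "query_sales_database",
    "arguments": json.dumps({"question": "What were last month's sales?"}),
}
print(dispatch(fake_tool_call))  # [sales DB] What were last month's sales?
```

In a real application, `ROUTE_TOOLS` would be sent to the model’s tool-calling API together with the user’s query, and the tool call it returns would replace `fake_tool_call`. The route descriptions do most of the work here, so they deserve the same care as a prompt.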

Semantic Router

This router type leverages embeddings and similarity searches to select the best route to traverse.

Each route has a set of example queries associated with it, which are embedded and stored as vectors. The incoming query is embedded too, and a similarity search is run against the router’s example queries. The route that owns the closest-matching example query is selected.
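To make the mechanism concrete, here is a minimal from-scratch sketch. It is not the implementation of any particular library: a toy bag-of-words vector stands in for a real embedding model so the example runs without an API key, and the route names, example queries and the 0.3 similarity threshold are illustrative assumptions. Each example query is embedded once, the incoming query is compared with every example by cosine similarity, and the route of the best match wins, unless no match clears the threshold:

```python
import math
import re
from collections import Counter

# Example queries per route (illustrative only)
ROUTES = {
    "politics": ["tell me your political opinions", "do you love the president"],
    "chitchat": ["how is the weather today", "how are things going"],
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Embed every example query once, up front, remembering which route it belongs to
INDEX = [(route, embed(example)) for route, examples in ROUTES.items() for example in examples]


def route_query(query: str, threshold: float = 0.3):
    """Return the route of the most similar example query, or None if nothing is close enough."""
    query_vec = embed(query)
    best_route, best_score = None, 0.0
    for route, vec in INDEX:
        score = cosine(query_vec, vec)
        if score > best_score:
            best_route, best_score = route, score
    return best_route if best_score >= threshold else None


print(route_query("what are your political opinions?"))  # politics
print(route_query("how's the weather?"))                 # chitchat
print(route_query("recommend a pasta recipe"))           # None
```

Returning `None` below the threshold gives the application a clean "no specific route" signal it can send to a default chain. With a real embedding model, the threshold needs tuning, since typical similarity values depend on the model.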

In fact, there is a Python package called semantic-router that does just this. Let’s look at some implementation details to get a better idea of how the whole thing works. These examples come straight from that library’s GitHub page.

Let’s set up two routes, one for questions about politics, and another for general chitchat type questions. To each route, we assign a list of questions that might typically be asked in order to trigger that route. These example queries are referred to as utterances. These utterances will be embedded, so that we can use them for similarity searches against the user’s query.

```python
from semantic_router import Route

# we could use this as a guide for our chatbot to avoid political
# conversations
politics = Route(
    name="politics",
    utterances=[
        "isn't politics the best thing ever",
        "why don't you tell me about your political opinions",
        "don't you just love the president",
        "they're going to destroy this country!",
        "they will save the country!",
    ],
)

# this could be used as an indicator to our chatbot to switch to a more
# conversational prompt
chitchat = Route(
    name="chitchat",
    utterances=[
        "how's the weather today?",
        "how are things going?",
        "lovely weather today",
        "the weather is horrendous",
        "let's go to the chippy",
    ],
)

# we place both of our decisions together into a single list
routes = [politics, chitchat]
```

We use OpenAI as the encoder, though semantic-router supports other embedding providers too. Next, we create our route layer using the routes and the encoder.

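```python
from semantic_router.encoders import OpenAIEncoder
from semantic_router.layer import RouteLayer

# OpenAIEncoder expects an OpenAI API key, e.g. via the OPENAI_API_KEY environment variable
encoder = OpenAIEncoder()
route_layer = RouteLayer(encoder=encoder, routes=routes)
```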

Then, when we apply our query to the route layer, it returns the route that should be used for the query:

```python
route_layer("don't you love politics?").name
# -> 'politics'
```

So, to summarise, this semantic router uses embeddings and a similarity search over the user’s query to select the optimal route to traverse. It should also be faster than the LLM-based routers, since it only requires embedding the query and running a similarity search, as opposed to the other types, which require a call to an LLM.

Zero Shot Classification Router

“Zero-shot text classification is a task in natural language processing where a model is trained on a set of labeled examples but is then able to classify new examples from previously unseen classes”. These routers leverage a Zero-Shot Classification model to assign a label to a piece of text, from a predefined set of labels you pass in to the router.

Example: the ZeroShotTextRouter in Haystack, which leverages a zero-shot classification model from Hugging Face. Its source code in the Haystack repository shows where the magic happens.
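The following is a minimal sketch of the routing step, written against the Hugging Face `transformers` zero-shot classification pipeline rather than Haystack’s component. The classifier is passed in as a parameter so the routing logic can run offline with a stub. The labels, the 0.5 confidence threshold and the stub’s scoring rule are assumptions for illustration:

```python
from typing import Callable, Optional, Sequence

# Candidate labels double as route names (illustrative)
LABELS = ["billing", "technical support", "general question"]


def zero_shot_route(
    query: str,
    classifier: Callable,
    labels: Sequence[str] = LABELS,
    min_score: float = 0.5,
) -> Optional[str]:
    """Return the top-scoring label, or None if the model is not confident enough.

    `classifier` should behave like a transformers "zero-shot-classification"
    pipeline: called with the text and `candidate_labels`, it returns a dict
    whose "labels" and "scores" lists are sorted from most to least likely.
    """
    result = classifier(query, candidate_labels=list(labels))
    top_label, top_score = result["labels"][0], result["scores"][0]
    return top_label if top_score >= min_score else None


def stub_classifier(text, candidate_labels):
    """Offline stand-in for the real model: favours labels whose first word appears in the text."""
    scores = {
        label: 0.8 if label.split()[0] in text.lower() else 0.1
        for label in candidate_labels
    }
    ranked = sorted(candidate_labels, key=scores.get, reverse=True)
    return {"sequence": text, "labels": ranked, "scores": [scores[label] for label in ranked]}


print(zero_shot_route("I have a billing problem", stub_classifier))  # billing
print(zero_shot_route("Hello there", stub_classifier))               # None
```

With the real model, `classifier` could be `pipeline("zero-shot-classification", model="facebook/bart-large-mnli")` from `transformers`. By default that pipeline’s scores sum to 1 across the candidate labels, so a sensible threshold depends on how many labels you pass in.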

Language Classification Router

This type of router identifies the language the query is written in and routes it accordingly. This is useful if your application needs some form of multilingual handling.

Example: the TextLanguageRouter from Haystack. It uses the langdetect Python library to detect the language of the text, and langdetect itself relies on a Naive Bayes algorithm.
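The following is a minimal sketch of a language-based router. It assumes the langdetect package (`pip install langdetect`), whose `detect` function returns an ISO 639-1 code such as "en" or "de". The detector is injectable, so the routing table can be exercised without langdetect installed. The pipeline names and the "unmatched" fallback are hypothetical:

```python
from typing import Callable, Dict, Optional


def langdetect_detector(text: str) -> str:
    """Detect the language with langdetect, imported lazily so it is only needed if used."""
    from langdetect import DetectorFactory, detect

    DetectorFactory.seed = 0  # langdetect is non-deterministic unless seeded
    return detect(text)


# Hypothetical mapping from ISO 639-1 language code to the pipeline that handles it
LANGUAGE_ROUTES: Dict[str, str] = {"en": "english_pipeline", "de": "german_pipeline"}


def route_by_language(
    text: str,
    detector: Callable[[str], str] = langdetect_detector,
    routes: Optional[Dict[str, str]] = None,
    fallback: str = "unmatched",
) -> str:
    """Return the route for the detected language, or `fallback` if it is unsupported."""
    routes = LANGUAGE_ROUTES if routes is None else routes
    try:
        language = detector(text)
    except Exception:  # e.g. langdetect raises an error for empty or symbol-only text
        return fallback
    return routes.get(language, fallback)
```

With langdetect installed, `route_by_language("Guten Morgen, wie geht es dir?")` would be expected to return "german_pipeline". Passing a stub such as `detector=lambda _: "de"` makes the routing table easy to test in isolation. Very short queries are the weak spot of statistical language detection, so a fallback route is worth keeping.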

Keyword Router

This article from Jerry Liu, co-founder of LlamaIndex, on routing inside RAG applications suggests, among other options, a keyword router that tries to select a route by matching keywords between the query and the list of routes.

This keyword router could also be powered by an LLM to identify keywords, or by some other keyword-matching library. I have not been able to find any packages that implement this router type.
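Since no package implementation exists to point to, here is a minimal sketch of what a non-LLM keyword router could look like. Each route gets a hand-written keyword set, and the route sharing the most keywords with the query wins. The route names and keywords are illustrative assumptions:

```python
import re
from typing import Dict, Optional, Set

# Hand-picked keywords per route (illustrative only)
KEYWORD_ROUTES: Dict[str, Set[str]] = {
    "sales": {"sales", "revenue", "order", "orders", "customer"},
    "accounting": {"invoice", "invoices", "tax", "ledger", "expense"},
    "hr": {"holiday", "leave", "salary", "payroll"},
}


def keyword_route(query: str, routes: Dict[str, Set[str]] = KEYWORD_ROUTES) -> Optional[str]:
    """Return the route whose keywords overlap most with the query, or None if none match."""
    tokens = set(re.findall(r"[a-z]+", query.lower()))
    scores = {name: len(tokens & keywords) for name, keywords in routes.items()}
    # max() keeps the first route listed when two routes tie
    best = max(scores, key=scores.get)
    # No overlap at all means no keyword route applies
    return best if scores[best] > 0 else None


print(keyword_route("How many orders did each customer place?"))  # sales
print(keyword_route("When is the VAT invoice due?"))               # accounting
print(keyword_route("Tell me a joke"))                             # None
```

Exact token matching misses synonyms and inflections (note the separate "order" and "orders" entries), which is where using an LLM to extract keywords, as suggested above, could help.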

Logical Routers

These use logic checks against variables, such as string lengths, file names, and value comparisons to handle how to route a query. They are very similar to typical If/Else conditions used in programming.

In other words, they are not based on having to understand the intent of a natural language query but can make their choice based on existing and discrete variables.

Example: the ConditionalRouter and FileTypeRouter from Haystack.
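Because these are ordinary conditionals, a sketch needs nothing beyond the standard library. This is not Haystack’s implementation. It routes on file extension and on query length, and the route names and the 500-character limit are hypothetical:

```python
from pathlib import Path

# Hypothetical routes for different file types
FILE_ROUTES = {".pdf": "pdf_parser", ".csv": "table_loader", ".txt": "text_splitter"}
# Hypothetical limit above which a query is summarised before retrieval
MAX_QUERY_CHARS = 500


def route_file(path: str) -> str:
    """Route on the file extension alone; unknown types go to a fallback route."""
    return FILE_ROUTES.get(Path(path).suffix.lower(), "unsupported_file")


def route_query_by_length(query: str) -> str:
    """Route long queries to a summarisation step first; short ones go straight to retrieval."""
    return "summarise_then_retrieve" if len(query) > MAX_QUERY_CHARS else "retrieve"


print(route_file("reports/Q1.PDF"))           # pdf_parser
print(route_file("notes.md"))                 # unsupported_file
print(route_query_by_length("What is RAG?"))  # retrieve
```

Unlike the natural language routers, these decisions are fully deterministic, so they can be unit-tested exhaustively.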

Agents vs. Routers

At first sight, there are indeed a lot of similarities between routers and agents, and it might be difficult to see how they differ.

The similarities exist because Agents do in fact perform routing as part of their flow. They use a routing mechanism in order to select the correct tool to use for the job. They often leverage function calling in order to select the correct tool, just like the LLM Function Calling Routers described above.

Routers are much simpler components than Agents, though, often with the “simple” job of just routing a task to the correct place, as opposed to carrying out any of the logic or processing related to that task.

Agents on the other hand are often responsible for processing logic, including managing work done by the tools they have access to.

Conclusion

We covered here a few of the different natural language routers currently found inside different RAG and LLM frameworks and packages.

The concepts, packages and libraries around routing are sure to grow as time goes on. When building a RAG application, you will find that at some point, not too far in, routing capabilities become necessary to build an application that is useful for the user.

Routers are the basic building blocks that let you send natural language requests to the right place in your application, so that the user’s queries can be fulfilled as well as possible.

Hope that was useful! Do subscribe to get a notification whenever a new article of mine comes out.

Unless otherwise noted, all images are by the author. Icons used in the first image are by SyafriStudio, Dimitry Miroliubov, and Free Icons PNG.

Routing in RAG Driven Applications was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
