Applying machine learning methodology to prompt building

LLMs are grounded in data science, but our approach to prompt engineering might strike us as unscientific:

- Manual prompt engineering does not generalize well: LLMs are highly sensitive to how they are prompted for each task, so we need to handcraft long strings of instructions and demonstrations. Not only is this prompt writing time-consuming, but a given string prompt might not generalize to different pipelines, LMs, data domains, or even inputs. To deal with a new problem we often need to handcraft a new prompt.

- Lack of a framework for testing: Typical data science applications use a train-test regime to pick the model that maximizes a metric such as AUC. With LLMs we instead arrive at the best prompt via trial and error, often without an objective metric to say how well our model is performing. Thus no matter how we try to improve the prompt, we can’t confidently say how reliable our application is.

To address these issues, Stanford NLP has published a paper introducing a new approach to prompt writing: instead of manipulating free-form strings, we generate prompts via modularized programming. The associated library, called DSPy, can be found here.

This article aims to show how this “prompt programming” is done and to explain in more depth what happens behind the optimization process. The code can also be found here.

(Speaking of which, you might also find coaxing LLMs into outputting properly formatted JSON very unscientific. I have also written an article about how to address this with Function Calling. Check it out!)

Build Autonomous AI Agents with Function Calling

We will spend some time to go over the environment preparation. Afterwards, this article is divided into 3 sections:

- Basic concept of DSPy: Signature and Module. Basic building blocks in DSPy for describing your task, and the prompt technique used

- Optimizer: Train our prompt as with machine learning. How DSPy optimizes your prompt with bootstrapping

- Full fledged example: Prompt comparison with LLM. Applying the rigour of traditional machine learning for prompt testing and selection

We are now ready to start!

Preparation

- Head over to Github to clone my code. The contents in my article can be found in the dspy_tutorial Notebook.

- Please also create and activate a virtual environment, then pip install -r requirements.txt to install the required packages. If you are on Windows, please also install the Windows C++ build tools, which are required by the Phoenix library that we will use to observe how DSPy works.

- My code uses OpenRouter, which allows us to access the OpenAI API in blocked regions. Please set up your OPENROUTER_API_KEY as an environment variable, and execute the code under the “Preparation” block. Alternatively, you can use the dspy.OpenAI class directly and define an OpenAI API key if that works for you.

Basic concept of DSPy: Signature and Module

They are the building blocks of prompt programming in DSPy. Let’s dive in to see what they are about!

Signatures: Specification of input/output

A signature is the most fundamental building block in DSPy’s prompt programming: a declarative specification of the input/output behavior of a DSPy module. Signatures allow you to tell the LM what it needs to do, rather than specify how we should ask the LM to do it.

Say we want to obtain the sentiment of a sentence. Traditionally we might write a prompt such as:

Given a sentence {the_sentence_itself}, deduce its sentiment.

But in DSPy, we can achieve the same by defining a signature as below. At its most basic form, a signature is as simple as a single string separating the inputs and output with a ->

Note: Code in this section is adapted from DSPy’s documentation of Signatures

# Define signature

signature = 'sentence -> sentiment'

classify = dspy.Predict(signature)

# Run

sentence = "it's a charming and often affecting journey."

classify(sentence=sentence).sentiment

--- Output ---

"I'm sorry, but I am unable to determine the sentiment of the sentence without additional context or information. If you provide me with more details or specific criteria for determining sentiment, I would be happy to assist you further."

The prediction is not a good one, but for instructional purposes let’s inspect the prompt that was issued.

# This is how we inspect the last prompt issued to the LM

lm.inspect_history(n=1)

--- Output ---

Given the fields `sentence`, produce the fields `sentiment`.

---

Follow the following format.

Sentence: ${sentence}

Sentiment: ${sentiment}

---

Sentence: it's a charming and often affecting journey.

Sentiment: I'm sorry, but I am unable to determine the sentiment of the sentence without additional context or information. If you provide me with more details or specific criteria for determining sentiment, I would be happy to assist you further.

We can see the above prompt is assembled from the sentence -> sentiment signature. But how did DSPy come up with the Given the fields… in the prompt?

Inspecting the dspy.Predict() class, we see that when we pass it our signature, the signature is parsed and stored as the signature attribute of the class, and subsequently assembled into a prompt. The instructions are a default hardcoded in the DSPy library.

# Check the variables of the `classify` object,

# which was created by passing the signature to `dspy.Predict()` class

vars(classify)

--- Output ---

{

'signature': StringSignature(sentence -> sentiment

instructions='Given the fields `sentence`, produce the fields `sentiment`.'

sentence = Field(annotation=str required=True json_schema_extra={'__dspy_field_type': 'input', 'prefix': 'Sentence:', 'desc': '${sentence}'})

sentiment = Field(annotation=str required=True json_schema_extra={'__dspy_field_type': 'output', 'prefix': 'Sentiment:', 'desc': '${sentiment}'})

),

'some_other_attributes': 'xxx'}
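To make the parsing step more concrete, here is a minimal sketch of how a string signature could be split into fields and turned into the prompt skeleton seen above. This is not DSPy's actual implementation: parse_signature and build_prompt are hypothetical helpers, and the template simply mimics the layout of the inspected prompt.

```python
def parse_signature(signature: str) -> tuple[list[str], list[str]]:
    """Split 'a, b -> c' into input and output field names."""
    inputs, outputs = signature.split("->")
    return ([f.strip() for f in inputs.split(",")],
            [f.strip() for f in outputs.split(",")])


def build_prompt(signature: str, **values: str) -> str:
    """Assemble a prompt that mimics the layout shown by inspect_history (assumed template)."""
    inputs, outputs = parse_signature(signature)
    in_names = ", ".join(f"`{f}`" for f in inputs)
    out_names = ", ".join(f"`{f}`" for f in outputs)
    # Default instruction derived purely from the field names
    instructions = f"Given the fields {in_names}, produce the fields {out_names}."
    # Format section: one "Prefix: ${field}" line per field
    template = "\n\n".join(f"{f.capitalize()}: ${{{f}}}" for f in inputs + outputs)
    # Query section: inputs are filled in, the first output is left open for the LM
    filled = "\n\n".join(f"{f.capitalize()}: {values[f]}" for f in inputs)
    query = f"{filled}\n\n{outputs[0].capitalize()}:"
    return "\n\n---\n\n".join(
        [instructions, "Follow the following format.\n\n" + template, query]
    )


prompt = build_prompt(
    "sentence -> sentiment",
    sentence="it's a charming and often affecting journey.",
)
print(prompt)
```

The point of the sketch is that nothing in the prompt except the field names comes from us. That is why the first prediction was so vague, and why adding a task description and field descriptions helps.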

What if we want to provide a more detailed description of our objective to the LLM, beyond the basic sentence -> sentiment signature? To do so we need to provide a more verbose signature in the form of class-based DSPy Signatures.

Notice we provide no explicit instruction as to how the LLM should obtain the sentiment. We are just describing the task at hand, and also the expected output.

# Define signature in Class-based form

class Emotion(dspy.Signature):
    # Describe the task
    """Classify emotions in a sentence."""

    sentence = dspy.InputField()

    # Adding description to the output field
    sentiment = dspy.OutputField(desc="Possible choices: sadness, joy, love, anger, fear, surprise.")

classify_class_based = dspy.Predict(Emotion)

# Issue prediction

classify_class_based(sentence=sentence).sentiment

--- Output ---

Sentence: It's a charming and often affecting journey.

Sentiment: joy

It is now outputting a much better prediction! Again we see the descriptions we made when defining the class-based DSPy signatures are assembled into a prompt.

Classify emotions in a sentence.

---

Follow the following format.

Sentence: ${sentence}

Sentiment: Possible choices: sadness, joy, love, anger, fear, surprise.

---

Sentence: it's a charming and often affecting journey.

Sentiment: Sentence: It's a charming and often affecting journey.

Sentiment: joy

This might do for simple tasks, but advanced applications might require sophisticated prompting techniques like Chain of Thought or ReAct. In DSPy these are implemented as Modules.

Modules: Abstracting prompting techniques

We may be used to applying “prompting techniques” by hardcoding phrases like let’s think step by step in our prompt. In DSPy these prompting techniques are abstracted as Modules. Below is an example of applying our class-based signature to the dspy.ChainOfThought module.

# Apply the class-based signature to Chain of Thought

classify_cot = dspy.ChainOfThought(Emotion)

# Run

classify_cot(sentence=sentence).sentiment

# Inspect prompt

lm.inspect_history(n=1)

--- Output ---

Classify emotions in a sentence.

---

Follow the following format.

Sentence: ${sentence}

Reasoning: Let's think step by step in order to ${produce the sentiment}. We ...

Sentiment: Possible choices: sadness, joy, love, anger, fear, surprise.

---

Sentence: it's a charming and often affecting journey.

Reasoning: Let's think step by step in order to Sentence: It's a charming and often affecting journey.

Reasoning: Let's think step by step in order to determine the sentiment. The use of the words "charming" and "affecting" suggests positive emotions associated with enjoyment and emotional impact. We can infer that the overall tone is positive and heartwarming, evoking feelings of joy and possibly love.

Sentiment: Joy, love

Notice how the “Reasoning: Let’s think step by step…” phrase is added to our prompt, and the quality of our prediction is even better now.
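One way to picture what a module does is as a transformation of the signature's fields. The sketch below is a simplification under my own assumptions, not DSPy's internals: Field, with_reasoning and format_block are hypothetical names. It inserts a reasoning field before the first output, which reproduces the format section of the two prompts we inspected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    name: str
    desc: str
    is_output: bool = False


def with_reasoning(fields: list[Field]) -> list[Field]:
    """Return a copy of the fields with a reasoning step before the first output."""
    first_output = next(i for i, f in enumerate(fields) if f.is_output)
    target = fields[first_output].name
    reasoning = Field(
        name="reasoning",
        desc=f"Let's think step by step in order to ${{produce the {target}}}. We ...",
        is_output=True,
    )
    return fields[:first_output] + [reasoning] + fields[first_output:]


def format_block(fields: list[Field]) -> str:
    """Render the 'Follow the following format.' section of a prompt."""
    return "\n\n".join(f"{f.name.capitalize()}: {f.desc}" for f in fields)


emotion = [
    Field("sentence", "${sentence}"),
    Field("sentiment", "Possible choices: sadness, joy, love, anger, fear, surprise.", is_output=True),
]

print(format_block(emotion))                  # Predict-style layout
print("---")
print(format_block(with_reasoning(emotion)))  # Chain-of-Thought-style layout
```

Because the task description (the signature) is left untouched, swapping the prompting technique is a one-line change.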

According to DSPy’s documentation, at the time of writing DSPy provides the following prompting techniques in the form of Modules. Notice that the dspy.Predict we used in the initial example is also a Module, representing no prompting technique!

- dspy.Predict: Basic predictor. Does not modify the signature. Handles the key forms of learning (i.e., storing the instructions and demonstrations and updates to the LM).

- dspy.ChainOfThought: Teaches the LM to think step-by-step before committing to the signature’s response.

- dspy.ProgramOfThought: Teaches the LM to output code, whose execution results will dictate the response.

- dspy.ReAct: An agent that can use tools to implement the given signature.

- dspy.MultiChainComparison: Can compare multiple outputs from ChainOfThought to produce a final prediction.

It also has some function-style modules:

- dspy.majority: Can do basic voting to return the most popular response from a set of predictions.

You can check out further examples in each module’s respective guide.
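To illustrate the idea behind voting over several predictions, here is a small standalone sketch. It does not call dspy.majority; majority_vote is a hypothetical helper, and the lowercase/strip normalization is my own assumption.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Return the most common answer after light normalization.

    Ties are resolved in favour of the answer seen first.
    """
    normalized = [a.strip().lower() for a in answers]
    winner, _count = Counter(normalized).most_common(1)[0]
    return winner


# Pretend these came from sampling the same Chain of Thought module five times
completions = ["Joy", "joy ", "love", "joy", "surprise"]
print(majority_vote(completions))
```

Sampling several completions and voting trades extra LM calls for a more stable answer, which can be worthwhile when single predictions are noisy.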

Chaining the modules

On the other hand, what about RAG? We can chain the modules together to deal with bigger problems!

First we define a retriever. For our example we use a ColBERT retriever that gets information from Wikipedia Abstracts 2017.

# Configure retriever

rm = dspy.ColBERTv2(url='http://20.102.90.50:2017/wiki17_abstracts')

dspy.settings.configure(rm = rm)

Then we define the RAG class inherited from dspy.Module. It needs two methods:

- The __init__ method will simply declare the sub-modules it needs: dspy.Retrieve and dspy.ChainOfThought. The latter is defined to implement our context, question -> answer signature.

- The forward method will describe the control flow of answering the question using the modules we have.

Note: Code in this section is borrowed from DSPy’s introduction notebook

# Define a class-based signature

class GenerateAnswer(dspy.Signature):
    """Answer questions with short factoid answers."""

    context = dspy.InputField(desc="may contain relevant facts")
    question = dspy.InputField()
    answer = dspy.OutputField(desc="often between 1 and 5 words")

# Chain different modules together to retrieve information from Wikipedia Abstracts 2017, then pass it as context for Chain of Thought to generate an answer

class RAG(dspy.Module):
    def __init__(self, num_passages=3):
        super().__init__()
        self.retrieve = dspy.Retrieve(k=num_passages)
        self.generate_answer = dspy.ChainOfThought(GenerateAnswer)

    def forward(self, question):
        context = self.retrieve(question).passages
        answer = self.generate_answer(context=context, question=question)
        return answer

Then we make use of the class to perform RAG.

# Initialize our RAG class

rag = RAG()

# Define a question and pass it into the RAG class

my_question = "When was the first FIFA World Cup held?"

rag(question=my_question).answer

--- Output ---

'1930'

Inspecting the prompt, we see that the 3 passages retrieved from Wikipedia Abstracts 2017 are inserted as context for Chain of Thought generation.

Answer questions with short factoid answers.

---

Follow the following format.

Context: may contain relevant facts

Question: ${question}

Reasoning: Let's think step by step in order to ${produce the answer}. We ...

Answer: often between 1 and 5 words

---

Context:

[1] «History of the FIFA World Cup | The FIFA World Cup was first held in 1930, when FIFA president Jules Rimet decided to stage an international football tournament. The inaugural edition, held in 1930, was contested as a final tournament of only thirteen teams invited by the organization. Since then, the World Cup has experienced successive expansions and format remodeling to its current 32-team final tournament preceded by a two-year qualifying process, involving over 200 teams from around the world.»

[2] «1950 FIFA World Cup | The 1950 FIFA World Cup, held in Brazil from 24 June to 16 July 1950, was the fourth FIFA World Cup. It was the first World Cup since 1938, the planned 1942 and 1946 competitions having been cancelled owing to World War II. It was won by Uruguay, who had won the inaugural competition in 1930, clinching the cup by beating the hosts Brazil 2–1 in the deciding match of the four-team final group (this was the only tournament not decided by a one-match final). It was also the first tournament where the trophy was referred to as the Jules Rimet Cup, to mark the 25th anniversary of Jules Rimet's presidency of FIFA.»

[3] «1970 FIFA World Cup | The 1970 FIFA World Cup was the ninth FIFA World Cup, the quadrennial international football championship for men's national teams. Held from 31 May to 21 June in Mexico, it was the first World Cup tournament staged in North America, and the first held outside Europe and South America. Teams representing 75 nations from all six populated continents entered the competition, and its qualification rounds began in May 1968. Fourteen teams qualified from this process to join host nation Mexico and defending champions England in the sixteen-team final tournament. El Salvador, Israel, and Morocco made their first appearances at the final stage, and Peru their first since 1930.»

Question: When was the first FIFA World Cup held?

Reasoning: Let's think step by step in order to Answer: 1930

Answer: 1930
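The retrieve-then-format step can be mimicked without any external service. In the sketch below, retrieve is a toy word-overlap ranker standing in for the ColBERT retriever, and format_context is a hypothetical helper that reproduces the numbered «…» layout of the Context field; neither is part of DSPy.

```python
def retrieve(question: str, corpus: list[str], k: int = 3) -> list[str]:
    """Toy retriever: rank passages by the number of words shared with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        corpus,
        key=lambda p: len(q_words & set(p.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def format_context(passages: list[str]) -> str:
    """Number each passage and wrap it in guillemets, as in the prompt above."""
    return "\n\n".join(f"[{i}] «{p}»" for i, p in enumerate(passages, start=1))


corpus = [
    "Kosciusko, Mississippi | Kosciusko is a city in Attala County.",
    "1950 FIFA World Cup | The 1950 FIFA World Cup was held in Brazil.",
    "History of the FIFA World Cup | The FIFA World Cup was first held in 1930.",
]
question = "When was the first FIFA World Cup held?"

top = retrieve(question, corpus, k=2)
context = format_context(top)
print(f"Context:\n\n{context}\n\nQuestion: {question}")
```

In the real pipeline the forward method plays this role: it decides which sub-module runs first and what is passed on to the next one.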

The above examples might not seem like much. In its most basic application, DSPy seems to do nothing that can’t be done with an f-string, but it actually presents a paradigm shift for prompt writing, as it brings modularity to prompt composition!

First we describe our objective with Signature, then we apply different prompting techniques with Modules. To test different prompt techniques for a given problem, we can simply switch the modules used and compare their results, rather than hardcoding the “let’s think step by step…” (for Chain of Thought) or “you will interleave Thought, Action, and Observation steps” (for ReAct) phrases. The benefit of modularity will be demonstrated later in this article with a full-fledged example.

The power of DSPy is not limited to modularity: it can also optimize our prompt based on training samples, and test it systematically. We will explore this in the next section!

Optimizer: Train our prompt as with machine learning

In this section we try to optimize our prompt for a RAG application with DSPy.

Taking Chain of Thought as an example, beyond just adding the “let’s think step by step” phrase, we can boost its performance with a few tweaks:

- Adding suitable examples (aka few-shot learning).

- Furthermore, we can bootstrap demonstrations of reasoning to teach the LMs to apply proper reasoning to deal with the task at hand.

Doing this manually would be highly time-consuming and wouldn’t generalize to different problems, but with DSPy it can be done automatically. Let’s dive in!

Preparation

#1: Loading test data: As in machine learning, to train our prompt we need to prepare our training and test datasets. The first time it runs, this cell will take around 20 minutes.

from dspy.datasets.hotpotqa import HotPotQA

# For demonstration purpose we will use a small subset of the HotPotQA dataset, 20 for training and testing each

dataset = HotPotQA(train_seed=1, train_size=20, eval_seed=2023, dev_size=20, test_size=0)

trainset = [x.with_inputs('question') for x in dataset.train]

testset = [x.with_inputs('question') for x in dataset.dev]

len(trainset), len(testset)

Inspecting our dataset, we see it is basically a set of question-and-answer pairs:

Example({'question': 'At My Window was released by which American singer-songwriter?', 'answer': 'John Townes Van Zandt'}) (input_keys={'question'})

#2 Set up Phoenix for observability: To facilitate understanding of the optimization process, we launch Phoenix to observe our DSPy application, which is a great tool for LLM observability in general! I will skip pasting the code here, but you can execute it in the notebook.

Note: If you are on Windows, please also install Windows C++ Build Tools here, which are necessary for Phoenix

Prompt Optimization

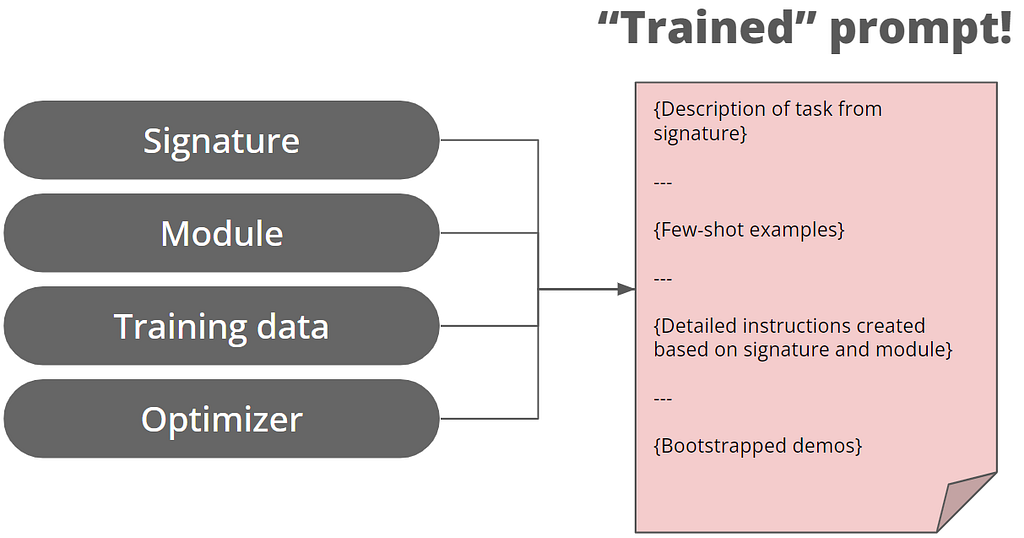

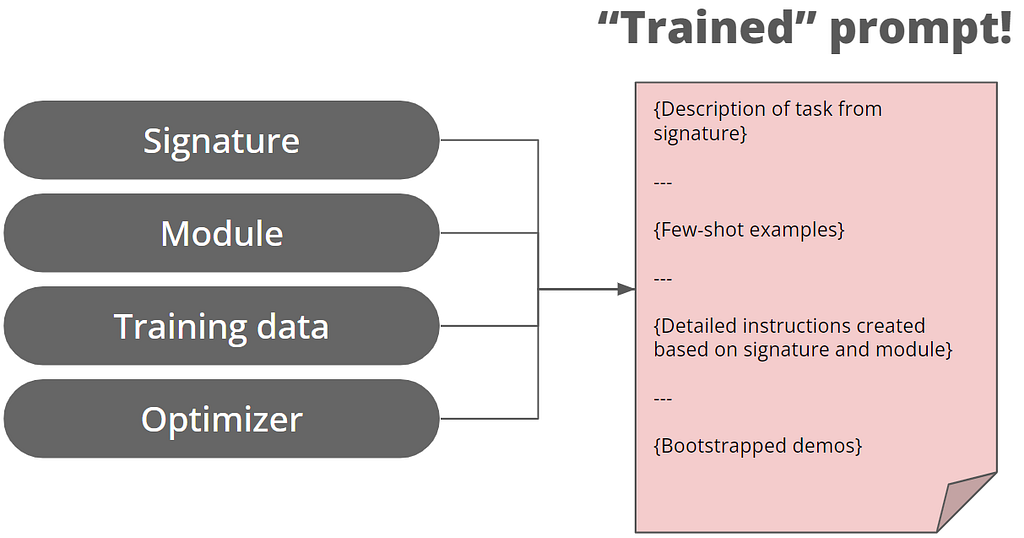

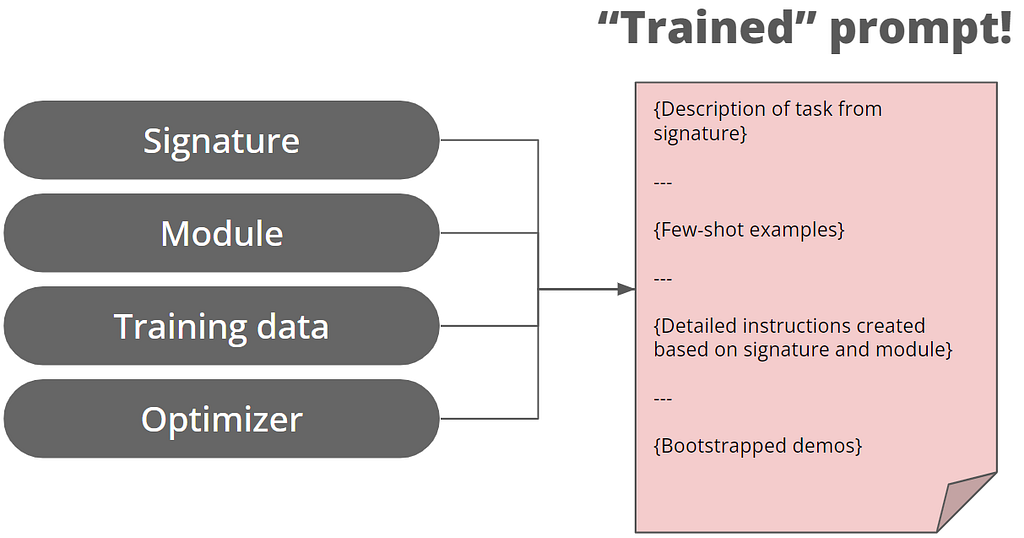

Then we are ready to see what this optimization is about! To “train” our prompt, we need 3 things:

- A training set. We’ll just use our 20 question–answer examples from trainset.

- A metric for validation. Here we use the native dspy.evaluate.answer_exact_match, which checks whether the predicted answer exactly matches the right answer (a questionable criterion, but sufficient for demonstration). For real-life applications you can define your own evaluation criteria.

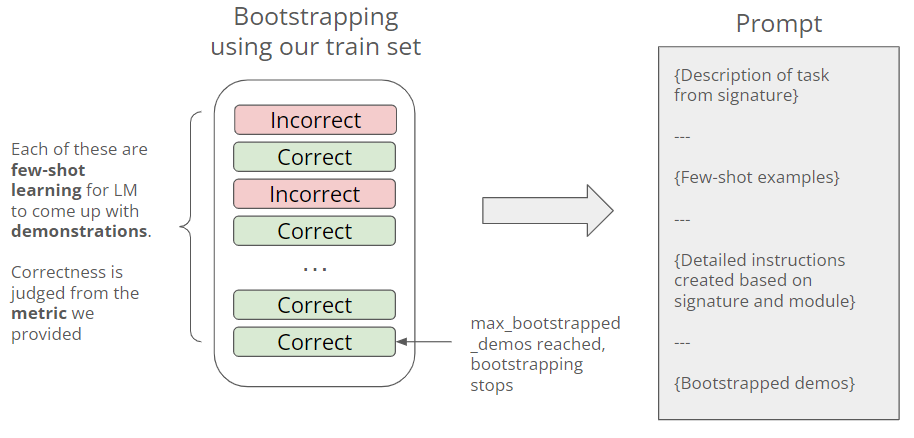

- A specific Optimizer (formerly teleprompter). The DSPy library includes a number of optimization strategies and you can check them out here. For our example we use BootstrapFewShot. Instead of describing it at length here, I will demonstrate it with code.
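As an illustration of the "define your own evaluation criteria" point in the list above, here is a sketch of a more lenient metric. It follows the (example, pred, trace=None) calling convention used for DSPy metrics, but lenient_match and normalize are hypothetical names and the normalization rules are my own assumptions. SimpleNamespace objects stand in for DSPy examples and predictions so the snippet runs on its own.

```python
import re
import string
from types import SimpleNamespace


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def lenient_match(example, pred, trace=None) -> bool:
    """True if the normalized gold answer appears as whole words in the prediction."""
    gold, guess = normalize(example.answer), normalize(pred.answer)
    if not gold:
        return False
    return f" {gold} " in f" {guess} "


gold = SimpleNamespace(answer="1930")
print(lenient_match(gold, SimpleNamespace(answer="1930")))
print(lenient_match(gold, SimpleNamespace(answer="It was first held in 1930.")))
print(lenient_match(gold, SimpleNamespace(answer="1950")))
```

Whether such leniency is appropriate depends on the application: it rewards verbose but correct answers that a strict comparison would reject, at the risk of accepting answers that merely mention the right string.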

Now we train our prompt.

from dspy.teleprompt import BootstrapFewShot

# Simple optimizer example. I am explicitly stating the default value of max_bootstrapped_demos for demonstration purposes

optimizer = BootstrapFewShot(metric=dspy.evaluate.answer_exact_match, max_bootstrapped_demos=4)

# Compile!

compiled_rag = optimizer.compile(RAG(), trainset=trainset)

--- Successful execution should show this output ---

Bootstrapped 4 full traces after n examples in round 0

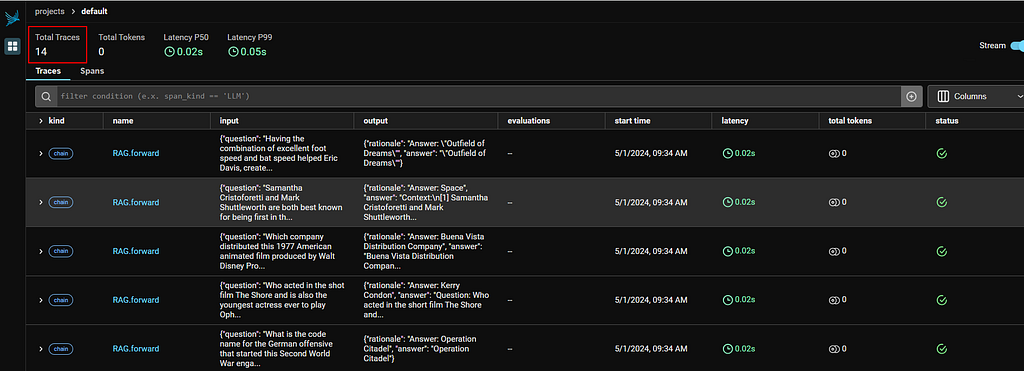

Before using the compiled_rag to answer a question, let’s see what went on behind the scenes during the training process (aka compile). We launch the Phoenix console by visiting http://localhost:6006/ in a browser.

In my run, 14 calls were made using the RAG class; in each of those calls we post a question to the LM to obtain a prediction.

As the result summary table in my notebook shows, 4 of these 14 samples were answered correctly, thus reaching our max_bootstrapped_demos parameter and stopping the calls.

But what are the prompts DSPy issued to obtain the bootstrapped demos? Here’s the prompt for question #14. We can see that as DSPy tries to generate one bootstrapped demo, it randomly adds samples from our trainset for few-shot learning.

Answer questions with short factoid answers.

---

{Pairs of question-and-answer as samples}

---

Follow the following format.

Context: may contain relevant facts

Question: ${question}

Reasoning: Let's think step by step in order to ${produce the answer}. We ...

Answer: often between 1 and 5 words

---

Context:

[1] «Eric Davis (baseball) | Eric Keith Davis (born May 29, 1962) is a former center fielder for several Major League Baseball teams. Davis was 21 years old when he broke into the big leagues on May 19, 1984 with the Cincinnati Reds, the team for which he is most remembered. Blessed with a rare combination of excellent foot speed and bat speed, Davis became the first major league player to hit at least 30 home runs and steal at least 50 bases in the same season in 1987.»

[2] «Willie Davis (baseball) | William Henry Davis, Jr. (April 15, 1940 – March 9, 2010) was a center fielder in Major League Baseball who played most of his career for the Los Angeles Dodgers. At the end of his career he ranked seventh in major league history in putouts (5449) and total chances (5719) in the outfield, and third in games in center field (2237). He was ninth in National League history in total outfield games (2274), and won Gold Glove Awards from 1971 to 1973. He had 13 seasons of 20 or more stolen bases, led the NL in triples twice, and retired with the fourth most triples (138) by any major leaguer since 1945. He holds Los Angeles club records (1958–present) for career hits (2091), runs (1004), triples (110), at bats (7495), total bases (3094) and extra base hits (585). His 31-game hitting streak in 1969 remains the longest by a Dodger. At one point during the streak, when the team was playing at home, the big message board at Dodger Stadium quoted a message from a telegram sent to Davis and the team from Zack Wheat, the team's former record holder, at his home in Missouri.»

[3] «1992 Los Angeles Dodgers season | The 1992 Los Angeles Dodgers season was a poor one for the team as it finished last in the Western Division of the National League with a record of 63 wins and 99 losses. Despite boasting what was nicknamed the "Outfield of Dreams", being manned by Eric Davis, Brett Butler, and Darryl Strawberry, injuries to key players and slumps from others contributed to the franchise's worst season since moving to Los Angeles. Additionally, the Dodgers cancelled four home games during the season due to the L.A. Riots. Despite the poor finish, the Dodgers had some hope for the future as first baseman Eric Karros won the National League Rookie of the Year Award, the first of five consecutive Dodger players to do so. The 1992 season also saw the Dodgers drop television station KTTV Ch.11 as their chief broadcaster of Dodger baseball, ending a 34 year-35 consecutive season association with that station. Additionally, it was the first time the Dodgers lost 90 games in a season since 1944.»

Question: Having the combination of excellent foot speed and bat speed helped Eric Davis, create what kind of outfield for the Los Angeles Dodgers?

Reasoning: Let's think step by step in order to Answer: "Outfield of Dreams"

Answer: "Outfield of Dreams"

Time to put the compiled_rag to the test! Here we raise a question which was answered wrongly in our summary table, and see if we can get the right answer this time.

compiled_rag(question="Which of these publications was most recently published, Who Put the Bomp or Self?")

--- Output ---

Prediction(

rationale='Answer: Self',

answer='Self'

)

We now get the right answer!

Again let’s inspect the prompt issued. Notice how the compiled prompt is different from the ones that were used during bootstrapping. Apart from the few-shot examples, bootstrapped Context-Question-Reasoning-Answer demonstrations from correct predictions are added to the prompt, improving the LM’s capability.

Answer questions with short factoid answers.

---

{Pairs of question-and-answer as samples}

---

Follow the following format.

Context: may contain relevant facts

Question: ${question}

Reasoning: Let's think step by step in order to ${produce the answer}. We ...

Answer: often between 1 and 5 words

---

{4 sets of Context-Question-Reasoning-Answer demonstrations}

---

Context:

[1] «Who Put the Bomp | Who Put The Bomp was a rock music fanzine edited and published by Greg Shaw from 1970 to 1979. Its name came from the hit 1961 doo-wop song by Barry Mann, "Who Put the Bomp". Later, the name was shortened to "Bomp!"»

[2] «Bompiani | Bompiani is an Italian publishing house based in Milan, Italy. It was founded in 1929 by Valentino Bompiani.»

[3] «What Color is Your Parachute? | What Color is Your Parachute? by Richard Nelson Bolles is a book for job-seekers that has been in print since 1970 and has been revised every year since 1975, sometimes substantially. Bolles initially self-published the book (December 1, 1970), but it has been commercially published since November 1972 by Ten Speed Press in Berkeley, California. As of September 28, 2010, the book is available in 22 languages, it is used in 26 countries around the world, and over ten million copies have been sold worldwide. It is one of the most highly regarded career advice books in print. In the latest edition of the book, the author writes about how to adapt one's job search to the Web 2.0 age.»

Question: Which of these publications was most recently published, Who Put the Bomp or Self?

Reasoning: Let's think step by step in order to Answer: Self

Answer: Self

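To summarize what goes on behind the scenes with BootstrapFewShot during compilation: DSPy runs the module on training questions with randomly sampled labeled examples in the prompt, keeps the traces whose prediction passes the metric, and stops once max_bootstrapped_demos traces have been collected. Those traces then become the demonstrations in the compiled prompt.

The above example still falls short of what we typically do with machine learning: even though bootstrapping may be useful, we have not yet shown that it improves the quality of the responses.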
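The bootstrapping loop observed in this section can be sketched in a few lines of plain Python. This is a simplified illustration under my own assumptions, not the library's code: bootstrap_demos, toy_program and exact_match are hypothetical, the number of sampled few-shot examples (2) is arbitrary, and the toy program replaces the LM-backed RAG module so the snippet is deterministic.

```python
import random
from types import SimpleNamespace


def bootstrap_demos(program, trainset, metric, max_bootstrapped_demos=4, seed=0):
    """Collect traces of training examples that the program answers correctly."""
    rng = random.Random(seed)
    demos, calls = [], 0
    for example in trainset:
        if len(demos) >= max_bootstrapped_demos:
            break  # enough demonstrations collected, stop calling the program
        # Show the program a few *other* labeled examples as plain few-shot samples
        others = [x for x in trainset if x is not example]
        shots = rng.sample(others, k=min(2, len(others)))
        pred = program(example.question, shots)
        calls += 1
        if metric(example, pred):
            # Keep the full trace (question, reasoning, answer) as a demonstration
            demos.append(SimpleNamespace(
                question=example.question,
                rationale=pred.rationale,
                answer=pred.answer,
            ))
    return demos, calls


def exact_match(example, pred, trace=None):
    return example.answer.strip().lower() == pred.answer.strip().lower()


def toy_program(question, shots):
    """Stand-in for an LM call: it only 'knows' how to double even numbers."""
    n = int(question.split()[-1])
    answer = str(n * 2) if n % 2 == 0 else "unknown"
    return SimpleNamespace(rationale=f"double {n}", answer=answer)


trainset = [SimpleNamespace(question=f"double {i}", answer=str(i * 2)) for i in range(1, 21)]
demos, calls = bootstrap_demos(toy_program, trainset, exact_match)
print(len(demos), "demos collected after", calls, "calls")
```

As in my run, where 14 calls were needed to obtain 4 correct answers, the loop stops early: the remaining training examples are never sent to the program.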

Ideally, like in traditional machine learning we should define a couple of candidate models, see how they perform against the test set, and select the one achieving the highest performance score. This is what we will do next!

Full fledged example: Prompt comparison with LLM

The aim of this example

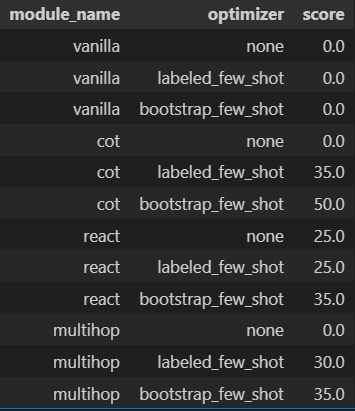

In this section, we want to evaluate which is the “best prompt” (expressed in terms of module and optimizer combination) to perform RAG against the HotpotQA dataset (distributed under a CC BY-SA 4.0 License), given the LM we use (GPT 3.5 Turbo).

The Modules under evaluation are:

- Vanilla: Single-hop RAG to answer a question based on the retrieved context, without key phrases like “let’s think step by step”

- COT: Single-hop RAG with Chain of Thought

- ReAct: Single-hop RAG with ReAct prompting

- BasicMultiHop: 2-hop RAG with Chain of Thought

And the Optimizer candidates are:

- None: No additional instructions apart from the signature

- Labeled few-shot: Simply constructs few-shot examples from provided labeled Q/A pairs

- Bootstrap few-shot: As we demonstrated, self-generate complete demonstrations for every stage of our module. Will simply use the generated demonstrations (if they pass the metric) without any further optimization. For Vanilla it is just equal to “Labeled few-shot”

As the evaluation metric, we again use exact match (dspy.evaluate.metrics.answer_exact_match) against the test set.

Comparison

Let’s begin! First, we define our modules

# Vanilla

class Vanilla(dspy.Module):
    def __init__(self, num_passages=3):
        super().__init__()
        self.retrieve = dspy.Retrieve(k=num_passages)
        self.generate_answer = dspy.Predict("context, question -> answer")

    def forward(self, question):
        context = self.retrieve(question).passages
        answer = self.generate_answer(context=context, question=question)
        return answer

vanilla = Vanilla()

# COT

class COT(dspy.Module):
    def __init__(self, num_passages=3):
        super().__init__()
        self.retrieve = dspy.Retrieve(k=num_passages)
        self.generate_answer = dspy.ChainOfThought("context, question -> answer")

    def forward(self, question):
        context = self.retrieve(question).passages
        answer = self.generate_answer(context=context, question=question)
        return answer

cot = COT()

# ReAct

react = dspy.ReAct("question-> answer", tools=[dspy.Retrieve(k=3)], max_iters=5)

# BasicMultiHop

class BasicMultiHop(dspy.Module):
    def __init__(self, passages_per_hop=3):
        super().__init__()
        self.retrieve = dspy.Retrieve(k=passages_per_hop)
        self.generate_query = dspy.ChainOfThought("context, question-> search_query")
        self.generate_answer = dspy.ChainOfThought("context, question-> answer")

    def forward(self, question):
        context = []
        # Two hops: each hop generates a search query from the context gathered so far
        for hop in range(2):
            query = self.generate_query(context=context, question=question).search_query
            context += self.retrieve(query).passages
        return self.generate_answer(context=context, question=question)

multihop = BasicMultiHop(passages_per_hop=3)

Then define permutations for our model candidates

from dspy.teleprompt import LabeledFewShot, BootstrapFewShot

metric = dspy.evaluate.metrics.answer_exact_match

modules = {

'vanilla': vanilla,

'cot': cot,

'react': react,

'multihop': multihop,

}

optimizers = {

'none': None,

'labeled_few_shot': LabeledFewShot(),

'bootstrap_few_shot': BootstrapFewShot(metric=metric, max_errors=20),

}

Then I defined a helper class to facilitate the evaluation. The code is a tad long so I am not pasting it here, but it can be found in my notebook. What it does is apply each of the optimizers to each of the modules, compile the prompt, then perform evaluation against the test set.

We are now ready to start the evaluation. It takes around 20 minutes to complete.
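To give an idea of what such a helper does, here is a stripped-down sketch of a module-by-optimizer grid evaluation. It is not the ModelSelection class from my notebook: evaluate_grid, evaluate, echo and learn_suffix are hypothetical toy stand-ins, with plain functions playing the roles of modules and optimizers so the example runs without an LM.

```python
from types import SimpleNamespace


def evaluate(program, testset, metric) -> float:
    """Percentage of test examples whose prediction passes the metric."""
    hits = sum(bool(metric(ex, program(ex.question))) for ex in testset)
    return 100.0 * hits / len(testset)


def evaluate_grid(modules, optimizers, metric, trainset, testset):
    """Compile every module with every optimizer and score it on the test set."""
    scores = {}
    for m_name, module in modules.items():
        for o_name, optimizer in optimizers.items():
            # An optimizer of None means: use the module as is, without compilation
            program = module if optimizer is None else optimizer(module, trainset)
            scores[(m_name, o_name)] = evaluate(program, testset, metric)
    return scores


def exact_match(example, pred, trace=None):
    return example.answer == pred.answer


def echo(question):
    """Toy module: repeats the question as its answer."""
    return SimpleNamespace(answer=question)


def learn_suffix(module, trainset):
    """Toy optimizer: learn from one labeled example what to append to the raw output."""
    first = trainset[0]
    raw = module(first.question).answer
    suffix = first.answer[len(raw):] if first.answer.startswith(raw) else ""
    return lambda q: SimpleNamespace(answer=module(q).answer + suffix)


data = [SimpleNamespace(question=f"q{i}", answer=f"q{i}!") for i in range(4)]
scores = evaluate_grid(
    modules={"echo": echo},
    optimizers={"none": None, "learn_suffix": learn_suffix},
    metric=exact_match,
    trainset=data[:2],
    testset=data[2:],
)
best = max(scores, key=scores.get)
print(scores)
print("best:", best)
```

The important design choice is that training and test examples are kept disjoint, so the score reflects how well a compiled prompt generalizes rather than how well it memorized its demonstrations.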

# Compile the models

ms = ModelSelection(modules=modules, optimizers=optimizers, metric=metric, trainset=trainset)

# Evaluate them

ms.evaluate(testset=testset)

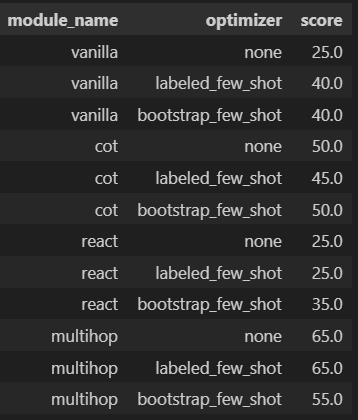

Here’s the evaluation result. We can see the COT module with BootstrapFewShot optimizer has the best performance. The scores represent the percentage of correct answers (judged by exact match) made for the test set.

But before we conclude the exercise, it might be useful to inspect the result more deeply: Multihop with BootstrapFewShot, which is supposedly equipped with more relevant context than COT with BootstrapFewShot, performs worse. That is strange!

Debug and fine-tune our prompt

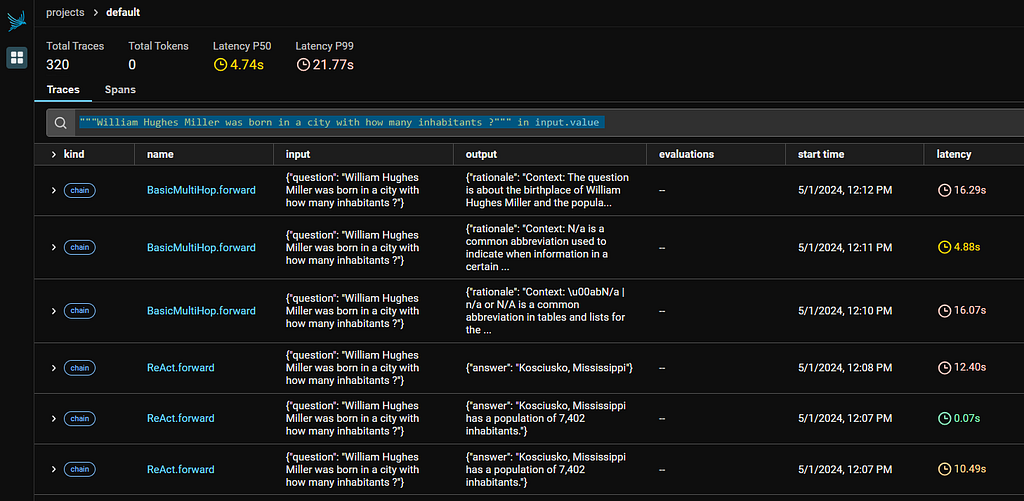

Now head to the Phoenix Console to see what’s going on. We pick a random question, William Hughes Miller was born in a city with how many inhabitants ?, and inspect how COT, ReAct, and BasicMultiHop with the BootstrapFewShot optimizer came up with their answers. You can type this in the search bar to filter: """William Hughes Miller was born in a city with how many inhabitants ?""" in input.value

These are the answers provided by the 3 models during my run:

- Multihop with BootstrapFewShot: The answer will vary based on the specific city of William Hughes Miller’s birthplace.

- ReAct with BootstrapFewShot: Kosciusko, Mississippi

- COT with BootstrapFewShot: The city of Kosciusko, Mississippi, has a population of approximately 7,402 inhabitants.

The correct answer is 7,402 at the 2010 census. Both ReAct with BootstrapFewShot and COT with BootstrapFewShot provided relevant answers, but Multihop with BootstrapFewShot simply failed to provide one.

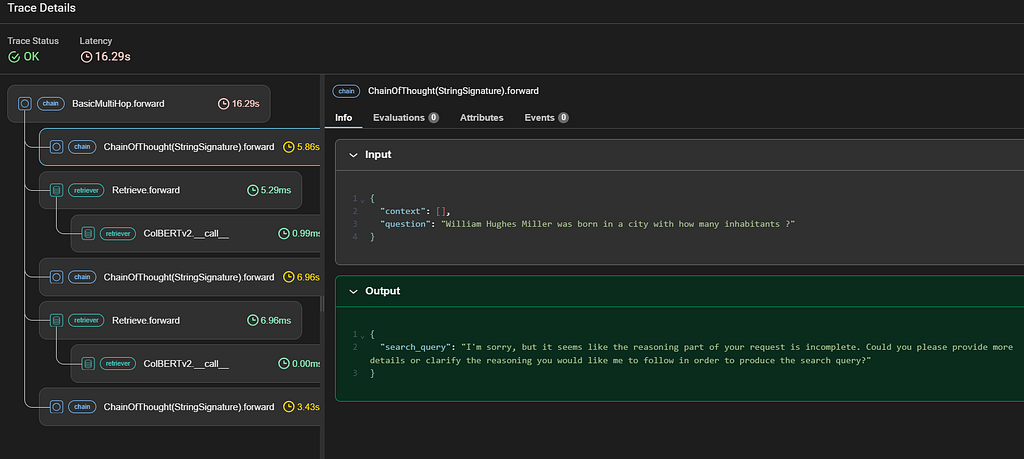

Checking the execution trace in Phoenix for Multihop with BootstrapFewShot, it looks like the LM fails to understand what is expected for the search_query specified in the signature.

So we revise the signature, and re-run the evaluation with the code below.

# Define a class-based signature

class GenerateAnswer(dspy.Signature):
    """Answer questions with short factoid answers."""

    context = dspy.InputField(desc="may contain relevant facts")
    question = dspy.InputField()
    answer = dspy.OutputField(desc="often between 1 and 5 words")

class BasicQA(dspy.Signature):
    """Answer questions with short factoid answers."""

    question = dspy.InputField()
    answer = dspy.OutputField(desc="often between 1 and 5 words")

class FollowupQuery(dspy.Signature):
    """Generate a query which is conducive to answering the question"""

    context = dspy.InputField(desc="may contain relevant facts")
    question = dspy.InputField()
    search_query = dspy.OutputField(desc="Judge if the context is adequate to answer the question, if not adequate or if it is blank, generate a search query that would help you answer the question.")

# Revise the modules with the class-based signatures. You can find the relevant code in my notebook

# To keep the article concise I am not pasting it here.

# Then run the below command to re-compile and evaluate

ms_revised = ModelSelection(modules=modules_revised, optimizers=optimizers, metric=metric, trainset=trainset)

ms_revised.evaluate(testset=testset)

ms_revised.evaluation_matrix

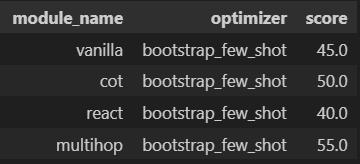

We now see the score improved across all models, and Multihop with LabeledFewShot and Multihop with no examples now have the best performance! This indicates despite DSPy tries to optimize the prompt, there is still some prompt engineering involved by articulating your objective in signature.

The best model now produces an exact match for our question!

# The correct answer is 7,402

question = """`William Hughes Miller was born in a city with how many inhabitants ?"""

ms_revised.question_for_model('multihop','labeled_few_shot',question)

--- Output ---

Prediction(
    rationale='Answer: 7,402',
    answer='7,402'
)
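For readers wondering what "exact match" means in practice, here is a minimal sketch of one common way to define it: normalize both strings, then compare. This is an illustration only. The `metric` used in this article is defined earlier and may differ, and the `normalize` and `exact_match` helpers below are hypothetical names.

```python
import re
import string


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> bool:
    """True when prediction and gold answer agree after normalization."""
    return normalize(prediction) == normalize(gold)


print(exact_match("7,402", "7,402"))        # identical strings
print(exact_match(" 7402 ", "7,402"))       # differs only in punctuation and spacing
print(exact_match("about 7,402", "7,402"))  # extra word, so no match
```

A strict metric like this rewards short factoid answers, which is consistent with the "often between 1 and 5 words" hint in the signature.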

Since the best prompt is Multihop with LabeledFewShot, the prompt does not contain bootstrapped Context-Question-Reasoning-Answer demonstrations. So bootstrapping does not necessarily lead to better performance; we need to verify empirically which prompt is best.

Answer questions with short factoid answers.

---

{Pairs of question-and-answer as samples}

---

Follow the following format.

Context: may contain relevant facts

Question: ${question}

Reasoning: Let's think step by step in order to ${produce the answer}. We ...

Answer: often between 1 and 5 words

---

Context:

[1] «William Hughes Miller | William Hughes Miller (born March 16, 1941, Kosciusko, Mississippi) is a professor at the University of California, Berkeley and a leading researcher in the field of theoretical chemistry.»

[2] «William Herbert Miller, Jr. | William Hubert Miller, Jr. (September 1932 – November 4, 1988), of New York City, was an aerophilatelist who published philatelic literature on the subject.»

[3] «William Green Miller | William Green Miller (born August 15, 1931 in New York City, New York), served as the United States Ambassador to Ukraine under Bill Clinton, from 1993 to 1998.»

[4] «Kosciusko, Mississippi | Kosciusko is a city in Attala County, Mississippi, United States. The population was 7,402 at the 2010 census. It is the county seat of Attala County.»

[5] «Attala County, Mississippi | Attala County is a county located in the U.S. state of Mississippi. As of the 2010 census, the population was 19,564. Its county seat is Kosciusko. Attala County is named for Atala, a fictional Native American heroine from an early-19th-century novel of the same name by François-René de Chateaubriand.»

[6] «Kosciusko Island | Kosciusko Island is an island in the Alexander Archipelago of southeastern Alaska, United States. It lies near the northwest corner of Prince of Wales Island, just across the El Capitan Passage from the larger island. The island is near Mount Francis, Holbrook Mountain, and Tokeen Peak. Kosciusko Island has a land area of 171.585 sq mi (444.403 km²), making it the 38th largest island in the United States. It had a population of 52 persons as of the 2000 census, mostly in Edna Bay, its largest community.»

Question: `William Hughes Miller was born in a city with how many inhabitants ?

Reasoning: Let's think step by step in order to Answer: 7,402

Answer: 7,402

This does not mean Multihop with BootstrapFewShot performs worse in general, however. It only means that for our task, if we use GPT 3.5 Turbo both to bootstrap demonstrations (which might be of questionable quality) and to output predictions, we might be better off without the bootstrapping, keeping only the few-shot examples.

This leads to the question: is it possible to use a more powerful LM, say GPT 4 Turbo (the teacher), to generate demonstrations, while keeping a cheaper model like GPT 3.5 Turbo (the student) for prediction?

“Teacher” to power-up bootstrapping capability

The answer is YES, as the following cell demonstrates. We will use GPT 4 Turbo as the teacher.

# Define the GPT-4 Turbo model

gpt4_turbo = dspy.Databricks(api_key=OPENROUTER_API_KEY,
                             api_base="https://openrouter.ai/api/v1",
                             model="openai/gpt-4-turbo")

# Define new Optimizer which uses GPT-4 Turbo as a teacher

optimizers_gpt4_teacher = {
    'bootstrap_few_shot': BootstrapFewShot(metric=metric, max_errors=20, teacher_settings=dict(lm=gpt4_turbo)),
}

# Compile the models and evaluate them as before

ms_gpt4_teacher = ModelSelection(modules=modules_revised, optimizers=optimizers_gpt4_teacher, metric=metric, trainset=trainset)

ms_gpt4_teacher.evaluate(testset=testset)

ms_gpt4_teacher.evaluation_matrix
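To make the teacher/student split concrete, here is a minimal sketch of the idea in plain Python. It is a conceptual illustration, not DSPy's implementation: `bootstrap_demos`, `toy_teacher`, and `toy_metric` are hypothetical names, and the "teacher" is a lookup table standing in for a stronger LM. The teacher answers the training questions, only the traces accepted by the metric are kept, and those traces become the demonstrations placed in the student's prompt.

```python
from typing import Callable, Dict, List

Example = Dict[str, str]


def bootstrap_demos(
    teacher: Callable[[str], Dict[str, str]],
    trainset: List[Example],
    metric: Callable[[Example, Dict[str, str]], bool],
    max_demos: int = 4,
) -> List[Dict[str, str]]:
    """Run the teacher on training questions; keep traces the metric accepts."""
    demos: List[Dict[str, str]] = []
    for example in trainset:
        if len(demos) >= max_demos:
            break
        prediction = teacher(example["question"])
        if metric(example, prediction):
            demos.append({"question": example["question"], **prediction})
    return demos


def toy_teacher(question: str) -> Dict[str, str]:
    """Hypothetical teacher: a lookup table standing in for a stronger LM."""
    known = {"2 + 2?": "4", "Capital of France?": "Paris"}
    answer = known.get(question, "unknown")
    return {"rationale": f"looked up '{question}'", "answer": answer}


def toy_metric(example: Example, prediction: Dict[str, str]) -> bool:
    """Accept a trace only if the predicted answer matches the gold answer."""
    return example["answer"].lower() == prediction["answer"].lower()


trainset = [
    {"question": "2 + 2?", "answer": "4"},
    {"question": "Capital of France?", "answer": "Paris"},
    {"question": "Capital of Peru?", "answer": "Lima"},
]

demos = bootstrap_demos(toy_teacher, trainset, toy_metric)
for demo in demos:
    print(demo)
```

The third training question is dropped because the toy teacher gets it wrong. In the same spirit, a stronger teacher mainly changes the quality of the rationales that survive the filter, while the student still produces the final prediction.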

Using GPT-4 Turbo as the teacher does not significantly boost our models’ performance, however. Still, it is worthwhile to see its effect on our prompt. Below is the prompt generated using only GPT 3.5:

Answer questions with short factoid answers.

---

{Pairs of question-and-answer as samples}

---

Follow the following format.

Context: may contain relevant facts

Question: ${question}

Reasoning: Let's think step by step in order to ${produce the answer}. We ...

Answer: often between 1 and 5 words

---

Context:

[1] «Candace Kita | Kita's first role was as a news anchor in the 1991 movie "Stealth Hunters". Kita's first recurring television role was in Fox's "Masked Rider", from 1995 to 1996. She appeared as a series regular lead in all 40 episodes. Kita also portrayed a frantic stewardess in a music video directed by Mark Pellington for the British group, Catherine Wheel, titled, "Waydown" in 1995. In 1996, Kita also appeared in the film "Barb Wire" (1996) and guest starred on "The Wayans Bros.". She also guest starred in "Miriam Teitelbaum: Homicide" with "Saturday Night Live" alumni Nora Dunn, "Wall To Wall Records" with Jordan Bridges, "Even Stevens", "Felicity" with Keri Russell, "V.I.P." with Pamela Anderson, "Girlfriends", "The Sweet Spot" with Bill Murray, and "Movies at Our House". She also had recurring roles on the FX spoof, "Son of the Beach" from 2001 to 2002, ABC-Family's "Dance Fever" and Oxygen Network's "Running with Scissors". Kita also appeared in the films "Little Heroes" (2002) and "Rennie's Landing" (2001).»

[2] «Jilly Kitzinger | Jilly Kitzinger is a fictional character in the science fiction series "Torchwood", portrayed by American actress Lauren Ambrose. The character was promoted as one of five new main characters to join "Torchwood" in its fourth series, "" (2011), as part of a new co-production between "Torchwood"' s British network, BBC One, and its American financiers on US premium television network Starz. Ambrose appears in seven of the ten episodes, and is credited as a "special guest star" throughout. Whilst reaction to the serial was mixed, Ambrose' portrayal was often singled out by critics for particular praise and in 2012 she received a Saturn Award nomination for Best Supporting Actress on Television.»

[3] «Candace Brown | Candace June Brown (born June 15, 1980) is an American actress and comedian best known for her work on shows such as "Grey's Anatomy", "Desperate Housewives", "Head Case", The "Wizards Of Waverly Place". In 2011, she joined the guest cast for "Torchwood"' s fourth series' "", airing on BBC One in the United Kingdom and premium television network Starz.»

[4] «Candace Kita | Kita's first role was as a news anchor in the 1991 movie "Stealth Hunters". Kita's first recurring television role was in Fox's "Masked Rider", from 1995 to 1996. She appeared as a series regular lead in all 40 episodes. Kita also portrayed a frantic stewardess in a music video directed by Mark Pellington for the British group, Catherine Wheel, titled, "Waydown" in 1995. In 1996, Kita also appeared in the film "Barb Wire" (1996) and guest starred on "The Wayans Bros.". She also guest starred in "Miriam Teitelbaum: Homicide" with "Saturday Night Live" alumni Nora Dunn, "Wall To Wall Records" with Jordan Bridges, "Even Stevens", "Felicity" with Keri Russell, "V.I.P." with Pamela Anderson, "Girlfriends", "The Sweet Spot" with Bill Murray, and "Movies at Our House". She also had recurring roles on the FX spoof, "Son of the Beach" from 2001 to 2002, ABC-Family's "Dance Fever" and Oxygen Network's "Running with Scissors". Kita also appeared in the films "Little Heroes" (2002) and "Rennie's Landing" (2001).»

[5] «Kiti Manver | María Isabel Ana Mantecón Vernalte (born 11 May 1953) better known as Kiti Mánver is a Spanish actress. She has appeared in more than 100 films and television shows since 1970. She starred in the 1973 film "Habla, mudita", which was entered into the 23rd Berlin International Film Festival.»

[6] «Amy Steel | Amy Steel (born Alice Amy Steel; May 3, 1960) is an American film and television actress. She is best known for her roles as Ginny Field in "Friday the 13th Part 2" (1981) and Kit Graham in "April Fool's Day" (1986). She has starred in films such as "Exposed" (1983), "Walk Like a Man" (1987), "What Ever Happened to Baby Jane? " (1991), and "Tales of Poe" (2014). Steel has had numerous guest appearances on several television series, such as "Family Ties" (1983), "The A-Team" (1983), "Quantum Leap" (1990), and "China Beach" (1991), as well as a starring role in "The Powers of Matthew Star" (1982–83).»

Question: which American actor was Candace Kita guest starred with

Reasoning: Let's think step by step in order to Answer: Bill Murray

Answer: Bill Murray

---

Context:

[1] «Monthly Magazine | The Monthly Magazine (1796–1843) of London began publication in February 1796. Richard Phillips was the publisher and a contributor on political issues. The editor for the first ten years was the literary jack-of-all-trades, Dr John Aikin. Other contributors included William Blake, Samuel Taylor Coleridge, George Dyer, Henry Neele and Charles Lamb. The magazine also published the earliest fiction of Charles Dickens, the first of what would become "Sketches by Boz".»

[2] «Bodega Magazine | Bodega Magazine is an online literary magazine that releases new issues on the first Monday of every month, featuring stories, poems, essays and interviews from a mix of emerging and established writers. It was founded in early spring of 2012 by creative writing MFA graduates from New York University who had previously worked together on the "Washington Square Review", and continues to be based out of Manhattan and Brooklyn. The inaugural issue was published on September 4, 2012.»

[3] «Who Put the Bomp | Who Put The Bomp was a rock music fanzine edited and published by Greg Shaw from 1970 to 1979. Its name came from the hit 1961 doo-wop song by Barry Mann, "Who Put the Bomp". Later, the name was shortened to "Bomp!"»

[4] «The Most (album) | The Most is the third album released by straight edge hardcore punk band Down to Nothing. It was released on July 17, 2007.»

[5] «The Most Incredible Thing | “The Most Incredible Thing" (Danish: "Det Utroligste" ) is a literary fairy tale by Danish poet and author Hans Christian Andersen (1805–1875). The story is about a contest to find the most incredible thing and the wondrous consequences when the winner is chosen. The tale was first published in an English translation by Horace Scudder, an American correspondent of Andersen's, in the United States in September 1870 before being published in the original Danish in Denmark in October 1870. "The Most Incredible Thing" was the first of Andersen's tales to be published in Denmark during World War II. Andersen considered the tale one of his best.»

[6] «Augusta Triumphans | Augusta Triumphans: or, the Way to Make London the Most Flourishing City in the Universe by Daniel Defoe was first published on 16 March 1728. The fictitious speaker of this pamphlet, Andrew Moreton, is a man in his sixties who offers suggestions for the improvement of London. In particular, he fosters the establishment of a university, an academy of music, a hospital for foundlings and licensed institutions for the treatment of mental diseases. Moreover, he encourages the introduction of measures to prevent moral corruption and street robbery.»

Question: Which of these publications was most recently published, Who Put the Bomp or Self?

Reasoning: Let's think step by step in order to Answer: Self

Answer: Self

---

Context:

[1] «The Victorians | The Victorians - Their Story In Pictures is a 2009 British documentary series which focuses on Victorian art and culture. The four-part series is written and presented by Jeremy Paxman and debuted on BBC One at 9:00pm on Sunday 15 February 2009.»

[2] «What the Victorians Did for Us | What the Victorians Did for Us is a 2001 BBC documentary series that examines the impact of the Victorian era on modern society. It concentrates primarily on the scientific and social advances of the era, which bore the Industrial Revolution and set the standards for polite society today.»

[3] «The Great Victorian Collection | The Great Victorian Collection, published in 1975, is a novel by Northern Irish-Canadian writer Brian Moore. Set in Carmel, California, it tells the story of a man who dreams that the empty parking lot he can see from his hotel window has been transformed by the arrival of a collection of priceless Victoriana on display in a vast open-air market. When he awakes he finds that he can no longer distinguish the dream from reality.»

[4] «Jeremy Paxman | Jeremy Dickson Paxman (born 11 May 1950) is an English broadcaster, journalist, and author. He is the question master of "University Challenge", having succeeded Bamber Gascoigne when the programme was revived in 1994.»

[5] «Jeremy I | Jeremy I was king of the Miskito nation, who came to power following the death of his father, Oldman, in 1686 or 1687. according to an English visitor, W. M., in 1699, he was about 60 years old at that time, making his birth year about 1639.»

[6] «Jeremy Cheeseman | Jeremy Cheeseman (born June 6, 1990 in Manorville, New York) is a former American professional soccer player. Playing two seasons for the Dayton Dutch Lions in the USL Professional Division before retiring due to injury»

Question: The Victorians - Their Story In Pictures is a documentary series written by an author born in what year?

Reasoning: Let's think step by step in order to Answer: 1950

Answer: 1950

---

Context:

[1] «Tae Kwon Do Times | Tae Kwon Do Times is a magazine devoted to the martial art of taekwondo, and is published in the United States of America. While the title suggests that it focuses on taekwondo exclusively, the magazine also covers other Korean martial arts. "Tae Kwon Do Times" has published articles by a wide range of authors, including He-Young Kimm, Thomas Kurz, Scott Shaw, and Mark Van Schuyver.»

[2] «Scott Shaw (artist) | Scott Shaw (often spelled Scott Shaw!) is a United States cartoonist and animator, and historian of comics. Among Scott's comic-book work is Hanna-Barbera's "The Flintstones" (for Marvel Comics and Harvey Comics), "Captain Carrot and His Amazing Zoo Crew" (for DC Comics), and "Simpsons Comics" (for Bongo Comics). He was also the first artist for Archie Comics' "Sonic the Hedgehog" comic book series.»

[3] «Scott Shaw | Scott Shaw (born September 23, 1958) is an American actor, author, film director, film producer, journalist, martial artist, musician, photographer, and professor.»

[4] «Scott Shaw (artist) | Scott Shaw (often spelled Scott Shaw!) is a United States cartoonist and animator, and historian of comics. Among Scott's comic-book work is Hanna-Barbera's "The Flintstones" (for Marvel Comics and Harvey Comics), "Captain Carrot and His Amazing Zoo Crew" (for DC Comics), and "Simpsons Comics" (for Bongo Comics). He was also the first artist for Archie Comics' "Sonic the Hedgehog" comic book series.»

[5] «Scott Shaw | Scott Shaw (born September 23, 1958) is an American actor, author, film director, film producer, journalist, martial artist, musician, photographer, and professor.»

[6] «Arnold Shaw (author) | Arnold Shaw (1909–1989) was a songwriter and music business executive, primarily in the field of music publishing, who is best known for his comprehensive series of books on 20th century American popular music.»

Question: Which magazine has published articles by Scott Shaw, Tae Kwon Do Times or Southwest Art?

Reasoning: Let's think step by step in order to Answer: Tae Kwon Do Times

Answer: Tae Kwon Do Times

---

Context:

[1] «William Hughes Miller | William Hughes Miller (born March 16, 1941, Kosciusko, Mississippi) is a professor at the University of California, Berkeley and a leading researcher in the field of theoretical chemistry.»

[2] «William Herbert Miller, Jr. | William Hubert Miller, Jr. (September 1932 – November 4, 1988), of New York City, was an aerophilatelist who published philatelic literature on the subject.»

[3] «William Rickarby Miller | William Rickarby Miller (May 20, 1818 in Staindrop – July 1893 in New York City) was an American painter, of the Hudson River School.»

[4] «Kosciusko, Mississippi | Kosciusko is a city in Attala County, Mississippi, United States. The population was 7,402 at the 2010 census. It is the county seat of Attala County.»

[5] «Attala County, Mississippi | Attala County is a county located in the U.S. state of Mississippi. As of the 2010 census, the population was 19,564. Its county seat is Kosciusko. Attala County is named for Atala, a fictional Native American heroine from an early-19th-century novel of the same name by François-René de Chateaubriand.»

[6] «Kosciusko Island | Kosciusko Island is an island in the Alexander Archipelago of southeastern Alaska, United States. It lies near the northwest corner of Prince of Wales Island, just across the El Capitan Passage from the larger island. The island is near Mount Francis, Holbrook Mountain, and Tokeen Peak. Kosciusko Island has a land area of 171.585 sq mi (444.403 km²), making it the 38th largest island in the United States. It had a population of 52 persons as of the 2000 census, mostly in Edna Bay, its largest community.»

Question: `William Hughes Miller was born in a city with how many inhabitants ?

Reasoning: Let's think step by step in order to Answer: 7,402

Answer: 7,402

And here’s the prompt generated using GPT-4 Turbo as teacher. Notice how the “Reasoning” is much better articulated here!

Answer questions with short factoid answers.

---

{Pairs of question-and-answer as samples}

---

Follow the following format.

Context: may contain relevant facts

Question: ${question}

Reasoning: Let's think step by step in order to ${produce the answer}. We ...

Answer: often between 1 and 5 words

---

Context:

[1] «Monthly Magazine | The Monthly Magazine (1796–1843) of London began publication in February 1796. Richard Phillips was the publisher and a contributor on political issues. The editor for the first ten years was the literary jack-of-all-trades, Dr John Aikin. Other contributors included William Blake, Samuel Taylor Coleridge, George Dyer, Henry Neele and Charles Lamb. The magazine also published the earliest fiction of Charles Dickens, the first of what would become "Sketches by Boz".»

[2] «Who Put the Bomp | Who Put The Bomp was a rock music fanzine edited and published by Greg Shaw from 1970 to 1979. Its name came from the hit 1961 doo-wop song by Barry Mann, "Who Put the Bomp". Later, the name was shortened to "Bomp!"»

[3] «Desktop Publishing Magazine | Desktop Publishing magazine (ISSN 0884-0873) was founded, edited, and published by Tony Bove and Cheryl Rhodes of TUG/User Publications, Inc., of Redwood City, CA. ) . Its first issue appeared in October, 1985, and was created and produced on a personal computer with desktop publishing software (PageMaker on a Macintosh), preparing output on a prototype PostScript-driven typesetting machine from Mergenthaler Linotype Company. Erik Sandberg-Diment, a columnist at "The New York Times", tried to buy the venture outright when he saw an early edition.»

[4] «Self (magazine) | Self is an American magazine for women that specializes in health, wellness, beauty, and style. Part of Condé Nast, Self had a circulation of 1,515,880 and a total audience of 5,282,000 readers, according to its corporate media kit n 2013. The editor-in-chief is Carolyn Kylstra. "Self" is based in the Condé Nast U.S. headquarters at 1 World Trade Center in New York, NY. In February 2017 the magazine became an online publication.»

[5] «Self-Publishing Review | Self-Publishing Review (or "SPR") is an online book review magazine for indie authors founded in 2008 by American author Henry Baum.»

[6] «Self-publishing | Self-publishing is the publication of any book, album or other media by its author without the involvement of an established publisher. A self-published physical book is said to have been privately printed. The author is in control of the entire process including, for a book, the design of the cover and interior, formats, price, distribution, marketing, and public relations. The authors can do it all themselves or may outsource some or all of the work to companies which offer these services.»

Question: Which of these publications was most recently published, Who Put the Bomp or Self?

Reasoning: Let's think step by step in order to determine which publication was most recently published. According to the context, "Who Put the Bomp" was published from 1970 to 1979. On the other hand, "Self" magazine became an online publication in February 2017 after being a print publication. Therefore, "Self" was most recently published.

Answer: Self

---

Context:

[1] «The Victorians | The Victorians - Their Story In Pictures is a 2009 British documentary series which focuses on Victorian art and culture. The four-part series is written and presented by Jeremy Paxman and debuted on BBC One at 9:00pm on Sunday 15 February 2009.»

[2] «The Great Victorian Collection | The Great Victorian Collection, published in 1975, is a novel by Northern Irish-Canadian writer Brian Moore. Set in Carmel, California, it tells the story of a man who dreams that the empty parking lot he can see from his hotel window has been transformed by the arrival of a collection of priceless Victoriana on display in a vast open-air market. When he awakes he finds that he can no longer distinguish the dream from reality.»

[3] «Victorian (comics) | The Victorian is a 25-issue comic book series published by Penny-Farthing Press and starting in 1999. The brainchild of creator Trainor Houghton, the series included a number of notable script writers and illustrators, including Len Wein, Glen Orbik and Howard Chaykin.»

[4] «Jeremy Paxman | Jeremy Dickson Paxman (born 11 May 1950) is an English broadcaster, journalist, and author. He is the question master of "University Challenge", having succeeded Bamber Gascoigne when the programme was revived in 1994.»

[5] «Jeremy I | Jeremy I was king of the Miskito nation, who came to power following the death of his father, Oldman, in 1686 or 1687. according to an English visitor, W. M., in 1699, he was about 60 years old at that time, making his birth year about 1639.»

[6] «Jeremy Cheeseman | Jeremy Cheeseman (born June 6, 1990 in Manorville, New York) is a former American professional soccer player. Playing two seasons for the Dayton Dutch Lions in the USL Professional Division before retiring due to injury»

Question: The Victorians - Their Story In Pictures is a documentary series written by an author born in what year?

Reasoning: Let's think step by step in order to determine the birth year of the author who wrote "The Victorians - Their Story In Pictures." According to context [4], Jeremy Paxman, an English broadcaster and journalist, wrote and presented this documentary series. His birth year is provided in the same context.

Answer: 1950

---

Context:

[1] «Tae Kwon Do Times | Tae Kwon Do Times is a magazine devoted to the martial art of taekwondo, and is published in the United States of America. While the title suggests that it focuses on taekwondo exclusively, the magazine also covers other Korean martial arts. "Tae Kwon Do Times" has published articles by a wide range of authors, including He-Young Kimm, Thomas Kurz, Scott Shaw, and Mark Van Schuyver.»

[2] «Kwon Tae-man | Kwon Tae-man (born 1941) was an early Korean hapkido practitioner and a pioneer of the art, first in Korea and then in the United States. He formed one of the earliest dojang's for hapkido in the United States in Torrance, California, and has been featured in many magazine articles promoting the art.»

[3] «Scott Shaw (artist) | Scott Shaw (often spelled Scott Shaw!) is a United States cartoonist and animator, and historian of comics. Among Scott's comic-book work is Hanna-Barbera's "The Flintstones" (for Marvel Comics and Harvey Comics), "Captain Carrot and His Amazing Zoo Crew" (for DC Comics), and "Simpsons Comics" (for Bongo Comics). He was also the first artist for Archie Comics' "Sonic the Hedgehog" comic book series.»

[4] «Tae Kwon Do Times | Tae Kwon Do Times is a magazine devoted to the martial art of taekwondo, and is published in the United States of America. While the title suggests that it focuses on taekwondo exclusively, the magazine also covers other Korean martial arts. "Tae Kwon Do Times" has published articles by a wide range of authors, including He-Young Kimm, Thomas Kurz, Scott Shaw, and Mark Van Schuyver.»

[5] «Scott Savitt | Scott Savitt is a former foreign correspondent for The Los Angeles Times and United Press International in Beijing. His articles have been published in The Los Angeles Times, Washington Post http://www.washingtonpost.com/wp-dyn/content/article/2008/04/18/AR2008041802635.html, Wall Street Journal, New York Times, and many other publications.»

[6] «Scott Poulson-Bryant | Scott Poulson-Bryant is an award-winning American journalist and author. One of the co-founding editors of Vibe magazine in 1992 (and the editor who gave the magazine its name), Poulson-Bryant's journalism, profiles, reviews, and essays have appeared in such publications as the "New York Times", "the Village Voice", "Rolling Stone", "Spin", "Essence", "Ebony", and "The Source". He is the author of "HUNG: A Meditation on the Measure of Black Men in America" (published by Doubleday Books in 2006) and a novel called "The VIPs".»

Question: Which magazine has published articles by Scott Shaw, Tae Kwon Do Times or Southwest Art?

Reasoning: Let's think step by step in order to determine which magazine published articles by Scott Shaw. According to the context provided, Scott Shaw has contributed to "Tae Kwon Do Times," which is mentioned in both [1] and [4]. There is no mention of Scott Shaw contributing to "Southwest Art."

Answer: Tae Kwon Do Times

---

Context:

[1] «1972 FA Charity Shield | The 1972 FA Charity Shield was contested between Manchester City and Aston Villa.»

[2] «1968 FA Charity Shield | The 1968 FA Charity Shield was a football match played on 3 August 1968 between Football League champions Manchester City and FA Cup winners West Bromwich Albion. It was the 46th Charity Shield match and was played at City's home ground, Maine Road. Manchester City won 6–1.»

[3] «1973 FA Charity Shield | The 1973 FA Charity Shield was contested between Burnley and Manchester City in a fixture that took place at Maine Road.»

[4] «List of Aston Villa F.C. seasons | This is a list of seasons played by Aston Villa Football Club in English and European football, from 1879 (the year of the club's first FA Cup entry) to the most recent completed season. Aston Villa football club was founded in March, 1874, by members of the Villa Cross Wesleyan Chapel in Aston. Throughout the 1870s Aston Villa played a small amount of games. At least one game, against Aston Brook St Mary's was played with one half under Rugby rules and the other under football rules. In the 1880s the game became more formalised and in 1888, William McGregor formed the Football League with 11 other clubs.»

[5] «List of Aston Villa F.C. records and statistics | Aston Villa Football Club are an English professional association football club based in Aston, Birmingham, who currently play in the EFL Championship. The club was founded in 1874 and have played at their current home ground, Villa Park, since 1897. Aston Villa were founding members of the Football League in 1888 and the Premier League in 1992. They are one of the oldest and most successful football clubs in England, having won the First Division Championship seven times and the FA Cup seven times. In 1982 the club became one of only five English clubs to win the European Cup.»

[6] «Aston Villa F.C. | Aston Villa Football Club ( ; nicknamed Villa, The Villa, The Villans and The Lions) is a professional football club in Aston, Birmingham, that plays in the Championship, the second level of English football. Founded in 1874, they have played at their current home ground, Villa Park, since 1897. Aston Villa were one of the founder members of the Football League in 1888 and of the Premier League in 1992.»

Question: In what year was the club founded that played Manchester City in the 1972 FA Charity Shield

Reasoning: Let's think step by step in order to determine the founding year of the club that played against Manchester City in the 1972 FA Charity Shield. According to context [1], the match was contested between Manchester City and Aston Villa. To find the founding year of Aston Villa, we refer to context [4], which states that Aston Villa Football Club was founded in March, 1874.

Answer: 1874

---

Context:

[1] «William Hughes Miller | William Hughes Miller (born March 16, 1941, Kosciusko, Mississippi) is a professor at the University of California, Berkeley and a leading researcher in the field of theoretical chemistry.»

[2] «William Read Miller | William Read Miller (November 23, 1823November 29, 1887) was the 12th Governor of the State of Arkansas. Born in Batesville, Arkansas; Miller was Arkansas's first native born Governor. Serving two terms in the turbulent period after Reconstruction, Miller's four-year administration marked the beginnings of New Departure Democrats in Arkansas. Running on a platform of economic growth via reconciliation between whites and freedmen, Miller often was opposed by members of his own party during the infancy of the Lost Cause ideology. His plans to pay back a large state debt including the Holford Bonds, valued at $14 million ($ million today), were often interrupted by racial violence, and his support for public schools and universities was often combated by those in his own party.»

[3] «William "Willie" Armstrong | William Armstrong was born c1804 in Painter Heugh (or Hugh), (which was an old lane dating from medieval Newcastle, a lane joining lower part of Dean Street to the higher part of Pilgrim Street), the name possibly derived from the fact that ships tied up here in the tidal parts of the Lort Burn (now filled).»

[4] «Kosciusko, Mississippi | Kosciusko is a city in Attala County, Mississippi, United States. The population was 7,402 at the 2010 census. It is the county seat of Attala County.»

[5] «Attala County, Mississippi | Attala County is a county located in the U.S. state of Mississippi. As of the 2010 census, the population was 19,564. Its county seat is Kosciusko. Attala County is named for Atala, a fictional Native American heroine from an early-19th-century novel of the same name by François-René de Chateaubriand.»

[6] «Kosciusko Island | Kosciusko Island is an island in the Alexander Archipelago of southeastern Alaska, United States. It lies near the northwest corner of Prince of Wales Island, just across the El Capitan Passage from the larger island. The island is near Mount Francis, Holbrook Mountain, and Tokeen Peak. Kosciusko Island has a land area of 171.585 sq mi (444.403 km²), making it the 38th largest island in the United States. It had a population of 52 persons as of the 2000 census, mostly in Edna Bay, its largest community.»

Question: `William Hughes Miller was born in a city with how many inhabitants ?

Reasoning: Let's think step by step in order to Answer: 7,402

Answer: 7,402

Conclusion

Currently we often rely on manual prompt engineering, at best abstracted as f-strings. Also, when comparing LMs we often raise underspecified questions like “how do different LMs compare on a certain problem”, to borrow the Stanford NLP paper’s phrasing.

But as the above examples demonstrate, with DSPy’s modular, composable programs and optimizers, we are now equipped to answer “how do they compare on a certain problem with Module X when compiled with Optimizer Y”, which is a well-defined and reproducible run, thus reducing the role of artful prompt construction in modern AI.
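The "Module X compiled with Optimizer Y" framing boils down to a grid evaluation. The sketch below shows that loop in plain Python under loud assumptions: the modules and optimizers are toy callables, the data is made up purely to exercise the wiring, and none of the names (`evaluation_grid`, `echo_module`, `memorize_optimizer`) come from DSPy or from the ModelSelection class used in this article.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]  # (question, gold answer)
Program = Callable[[str], str]
Optimizer = Callable[[Program, List[Example]], Program]


def evaluate(program: Program, testset: List[Example]) -> float:
    """Fraction of test questions answered exactly right."""
    hits = sum(program(question) == gold for question, gold in testset)
    return hits / len(testset)


def evaluation_grid(
    modules: Dict[str, Program],
    optimizers: Dict[str, Optimizer],
    trainset: List[Example],
    testset: List[Example],
) -> Dict[Tuple[str, str], float]:
    """Compile every module with every optimizer and score each pair."""
    grid = {}
    for module_name, module in modules.items():
        for optimizer_name, optimize in optimizers.items():
            compiled = optimize(module, trainset)
            grid[(module_name, optimizer_name)] = evaluate(compiled, testset)
    return grid


def echo_module(question: str) -> str:
    """Toy module: answers by upper-casing the question."""
    return question.upper()


def no_optimizer(program: Program, trainset: List[Example]) -> Program:
    """Toy optimizer: returns the program unchanged."""
    return program


def memorize_optimizer(program: Program, trainset: List[Example]) -> Program:
    """Toy optimizer: answers seen training questions from memory."""
    memory = dict(trainset)
    return lambda question: memory.get(question, program(question))


# Toy data only; the test set reuses a training question to keep the demo tiny.
trainset = [("a", "1"), ("b", "2")]
testset = [("a", "1"), ("c", "C")]

grid = evaluation_grid(
    {"echo": echo_module},
    {"none": no_optimizer, "memorize": memorize_optimizer},
    trainset,
    testset,
)
best = max(grid, key=grid.get)
print(grid)
print("best:", best)
```

Because every cell of the grid is produced by the same compile-then-evaluate procedure, any pair can be re-run and compared on equal footing.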

That’s it! Hope you enjoy this article.

*Unless otherwise noted, all images are by the author

Prompt Like a Data Scientist: Auto Prompt Optimization and Testing with DSPy was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
