An easy and yet powerful tool for decision making

I suppose most of us have heard the statement “correlation doesn’t imply causation” multiple times. It often becomes a problem for analysts since we frequently can see only correlations but still want to make causal conclusions.

Let’s discuss a couple of examples to understand the difference better. I would like to start with a case from everyday life rather than the digital world.

In 1975, a vast population study was launched in Denmark. It’s called the Copenhagen City Heart Study (CCHS). Researchers gathered information about 20K men and women and have been monitoring these people for decades. The initial goal of this research was to find ways to prevent cardiovascular diseases and strokes. One of the conclusions from this study is that people who reported regularly playing tennis had a 9.7-year higher life expectancy.

Let’s think about how we could interpret this information. Does it mean that if a person starts playing tennis weekly today, they will increase their life expectancy by ten years? Unfortunately, not exactly. Since it’s an observational study, we should be cautious about making causal inferences. There might be some other effects. For example, tennis players are likely to be wealthier, and we know that higher wealth correlates with greater longevity. Or it could be that people who regularly do sports also care more about their health and, because of it, get all their checkups regularly. So, observational research might overestimate the effect of tennis on longevity since it doesn’t control for other factors.

Let’s move on to the examples closer to product analytics and our day-to-day job. The number of Customer Support contacts for a client will likely be positively correlated with the probability of churn. If customers had to contact our support ten times, they would likely be annoyed and stop using our product, while customers who never had problems and are happy with the service might never reach out with any questions.

Does it mean that if we reduce the number of CS contacts, we will increase customer retention? I’m ready to bet that if we hide contact info and significantly reduce the number of CS contacts, we won’t be able to decrease churn because the actual root cause of churn is not CS contact but customers’ dissatisfaction with the product, which leads to both customers contacting us and stopping using our product.
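To make this intuition concrete, here is a minimal simulation sketch. The data-generating process is entirely hypothetical (the `dissatisfaction` variable, the Poisson contacts and the 0.6 churn factor are my assumptions for illustration): dissatisfaction drives both support contacts and churn, so the two are correlated, yet hiding the contact form leaves churn untouched.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

def simulate(hide_contacts):
    # Hypothetical common cause: how unhappy each customer is (0..1).
    dissatisfaction = rng.uniform(0, 1, n)
    # Unhappy customers contact support more often, unless contacts are hidden.
    contacts = np.zeros(n) if hide_contacts else rng.poisson(5 * dissatisfaction)
    # Churn depends only on dissatisfaction, never on contacts directly.
    churned = (rng.uniform(0, 1, n) < 0.6 * dissatisfaction).astype(float)
    return contacts, churned

contacts, churned = simulate(hide_contacts=False)
_, churned_hidden = simulate(hide_contacts=True)

corr_observed = np.corrcoef(contacts, churned)[0, 1]
print(f"correlation(contacts, churn): {corr_observed:.2f}")
print(f"churn rate with contacts: {churned.mean():.3f}")
print(f"churn rate with contacts hidden: {churned_hidden.mean():.3f}")
```

The correlation is clearly positive, while the churn rate stays roughly the same after the intervention, because the intervention never touches the root cause.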

I hope that with these examples, you can gain some intuition about the correlation vs. causation problem.

In this article, I would like to share approaches for drawing causal conclusions from the data. Surprisingly, we will be able to use the most basic tool: just a linear regression.

If we use the same linear regression for causal inference, you might wonder, what is the difference between our usual approach and causal analytics? That’s a good question. Let’s start our causal journey by understanding the differences between approaches.

Predictive vs. causal analytics

Predictive analytics helps to make forecasts and answer questions like “How many customers will we have in a year if nothing changes?” or “What is the probability for this customer to make a purchase within the next seven days?”.

Causal analytics tries to understand the root causes of the process. It might help you to answer “what if” questions like “How many customers will churn if we increase our subscription fee?” or “How many customers would have signed up for our subscription if we didn’t launch this Saint Valentine’s promo?”.

Causal questions seem way more complicated than just predictive ones. However, these two approaches often leverage the same tools, such as linear or logistic regressions. Even though tools are the same, they have absolutely different goals:

- For predictive analytics, we try our best to predict a value in the future based on information we know. So, the main KPI is an error in the prediction.

- Building a regression model for the causal analysis, we focus on the relationships between our target value and other factors. The model’s main output is coefficients rather than forecasts.

Let’s look at a simple example. Suppose we would like to forecast the number of active customers.

- In the predictive approach, we are talking about baseline forecast (given that the situation will stay pretty much the same). We can use ARIMA (Autoregressive Integrated Moving Average) and base our projections on previous values. ARIMA works well for predictions but can’t tell you anything about the factors affecting your KPI and how to improve your product.

- In the case of causal analytics, our goal is to find causal relationships in our data, so we will build a regression and identify factors that can impact our KPI, such as subscription fees, marketing campaigns, seasonality, etc. In that case, we will get not only the BAU (business as usual) forecast but also be able to estimate different “what if” scenarios for the future.

Now, it’s time to dive into causal theory and learn basic terms.

Correlation doesn’t imply causation

Let’s consider the following example for our discussion. Imagine you sent a discount coupon to loyal customers of your product, and now you want to understand how it affected their value (money spent on the product) and retention.

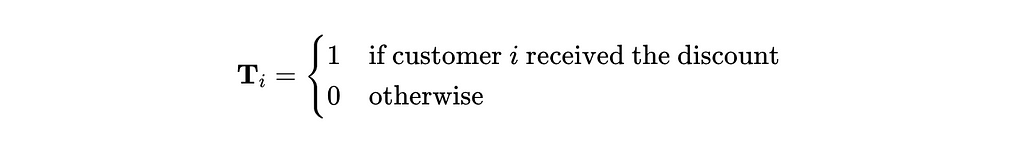

One of the most basic causal terms is treatment. It sounds like something related to medicine, but actually, it’s just an intervention. In our case, it’s a discount. We usually define treatment at the unit level (in our case, customer) in the following way.

The other crucial term is outcome Y, our variable of interest. In our example, it’s the customer’s value.

The fundamental problem of causal inference is that we can’t observe both outcomes for the same customer. So, if a customer received the discount, we will never know what value or retention they would have had without a coupon. It makes causal inference tricky.

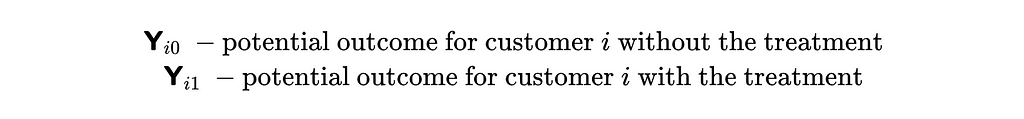

That’s why we need to introduce another concept — potential outcomes. The outcome that happened is usually called factual, and the one that didn’t is counterfactual. We will use the following notation for it.

The main goal of causal analysis is to measure the relationship between treatment and outcome. We can use the following metrics to quantify it:

- ATE — average treatment effect,

- ATT — average treatment effect on treated (customers with the treatment)

They are both equal to expected values of the differences between potential outcomes either for all units (customers in our case) or only for treated ones.
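In a simulation, unlike in real life, we can generate both potential outcomes for every customer and compute these metrics directly. The sketch below is based on made-up assumptions (the `loyalty` trait, the size of the coupon lift and the assignment rule are all hypothetical); it only illustrates that ATE and ATT can differ when treated customers are not representative of everyone.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 50_000

loyalty = rng.uniform(0, 1, n)              # hypothetical customer trait
y0 = 100 * loyalty + rng.normal(0, 5, n)    # potential value without the coupon
y1 = y0 + 10 + 20 * loyalty                 # potential value with the coupon
treated = rng.uniform(0, 1, n) < loyalty    # loyal customers get the coupon more often

ate = np.mean(y1 - y0)               # average effect over all customers
att = np.mean((y1 - y0)[treated])    # average effect over treated customers only

# In real data, only one of the two potential outcomes is visible per customer.
observed = np.where(treated, y1, y0)

print(f"ATE: {ate:.2f}, ATT: {att:.2f}")
```

Here ATT is higher than ATE because the coupon was (by construction) more likely to reach customers who respond to it more strongly.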

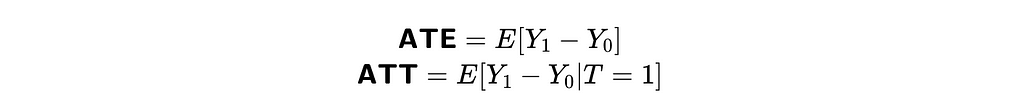

That’s an actual causal effect, and unfortunately, we won’t be able to calculate it. But cheer up; we can still get some estimations. We can observe the difference between values for treated and not treated customers (correlation effect). Let’s try to interpret this value.

Using a couple of simple mathematical transformations (i.e. adding and subtracting the same value), we can conclude that the difference in average values between treated and not treated customers equals the sum of ATT (average treatment effect on treated) and a bias term. The bias equals the difference between the treatment and control groups in the absence of treatment.
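This transformation can be written out step by step. The notation is my assumption: T is the treatment flag, while Y_0 and Y_1 are the potential outcomes without and with treatment.

```latex
\begin{aligned}
E[Y \mid T=1] - E[Y \mid T=0]
  &= E[Y_1 \mid T=1] - E[Y_0 \mid T=0] \\
  &= E[Y_1 \mid T=1] - E[Y_0 \mid T=1] + E[Y_0 \mid T=1] - E[Y_0 \mid T=0] \\
  &= \underbrace{E[Y_1 - Y_0 \mid T=1]}_{ATT}
   + \underbrace{E[Y_0 \mid T=1] - E[Y_0 \mid T=0]}_{bias}
\end{aligned}
```

The term that is added and subtracted is E[Y_0 | T=1], the counterfactual outcome of the treated group.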

If we return to our case, the bias will be equal to the difference between the expected customer value for the treatment group if they hadn’t received the discount (counterfactual outcome) and the expected value for the control group (factual outcome).

In our example, the average value from customers who received a discount will likely be much higher than for those who didn’t. Could we attribute all this effect to our treatment (discount coupon)? Unfortunately not. Since we sent the discount to loyal customers who are already spending a lot of money on our product, they would likely have a higher value than the control group even without the treatment. So, there’s a bias, and we can’t say that the difference in value between the two segments equals ATT.
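A quick numeric check of this reasoning, as a sketch on invented numbers (a 30% share of loyal customers, baseline values of 200 and 80, and a coupon lift of 15 are all assumptions): the naive difference between groups splits exactly into ATT plus bias, and here the bias dwarfs the true effect.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 100_000

loyal = rng.uniform(0, 1, n) < 0.3                        # hypothetical loyal segment
y0 = np.where(loyal, 200.0, 80.0) + rng.normal(0, 10, n)  # value without a coupon
y1 = y0 + 15.0                                            # the coupon adds 15 for everyone
treated = loyal                                           # coupon goes to loyal customers only
observed = np.where(treated, y1, y0)

naive_diff = observed[treated].mean() - observed[~treated].mean()
att = (y1 - y0)[treated].mean()
bias = y0[treated].mean() - y0[~treated].mean()

print(f"naive difference: {naive_diff:.1f}")
print(f"ATT: {att:.1f}, bias: {bias:.1f}, ATT + bias: {att + bias:.1f}")
```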

Let’s think about how to overcome this obstacle. We can do an A/B test: randomly split our loyal customers into two groups and send discount coupons only to half of them. Then, we can estimate the discount’s effect as the average difference between these two groups since we’ve eliminated bias (without treatment, there’s no difference between these groups except for discount).
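The same kind of simulation shows what randomisation buys us. In this sketch (again with hypothetical numbers), all customers are loyal and a coin flip decides who gets the coupon, so the bias term is close to zero and the simple difference in means recovers the true effect of 15.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 100_000

y0 = 200.0 + rng.normal(0, 30, n)       # loyal customers, value without a coupon
y1 = y0 + 15.0                          # assumed true effect of the coupon
treated = rng.uniform(0, 1, n) < 0.5    # random assignment: a coin flip per customer
observed = np.where(treated, y1, y0)

bias = y0[treated].mean() - y0[~treated].mean()
estimate = observed[treated].mean() - observed[~treated].mean()

print(f"bias: {bias:.2f}, estimated effect: {estimate:.2f}")
```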

We’ve covered the basic theory of causal inference and have learned the most crucial concept of bias. So, we are ready to move on to practice. We will start by analysing the A/B test results.

Use case: A/B test

A randomised controlled trial (RCT), often called an A/B test, is a powerful tool for getting causal conclusions from data. This approach assumes that we are assigning treatment randomly, and it helps us eliminate bias (since groups are equal without treatment).

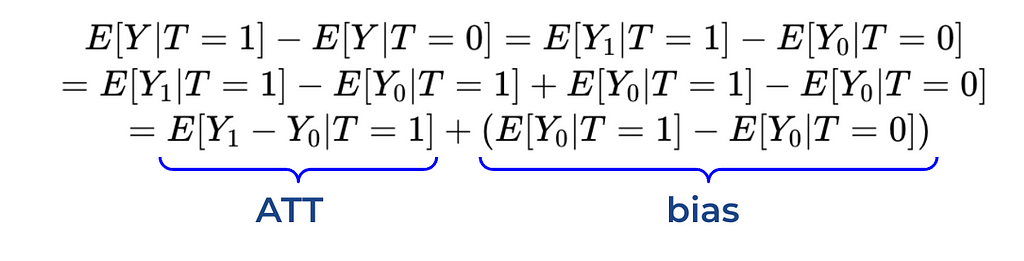

To practice solving such tasks, we will look at the example based on synthetic data. Suppose we’ve built an LLM-based tool that helps customer support agents answer questions more quickly. To measure the effect, we introduced this tool to half of the agents, and we would like to measure how our treatment (LLM-based tool) affects the outcome (time the agent spends answering a customer’s question).

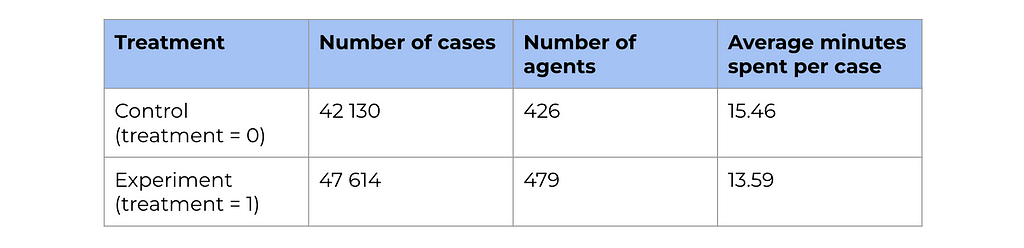

Let’s have a quick look at the data we have.

Here are the descriptions of the parameters we logged:

- case_id — unique ID for the case.

- agent_id — unique ID for the agent.

- treatment equals 1 if the agent was in the experiment group and had a chance to use LLMs, and 0 otherwise.

- time_spent_mins — minutes spent answering the customer’s question.

- cs_center — customer support centre. We are working with several CS centres. We launched this experiment in some of them because it’s easier to implement. Such an approach also helps us to avoid contamination (when agents from experiment and control groups interact and can affect each other).

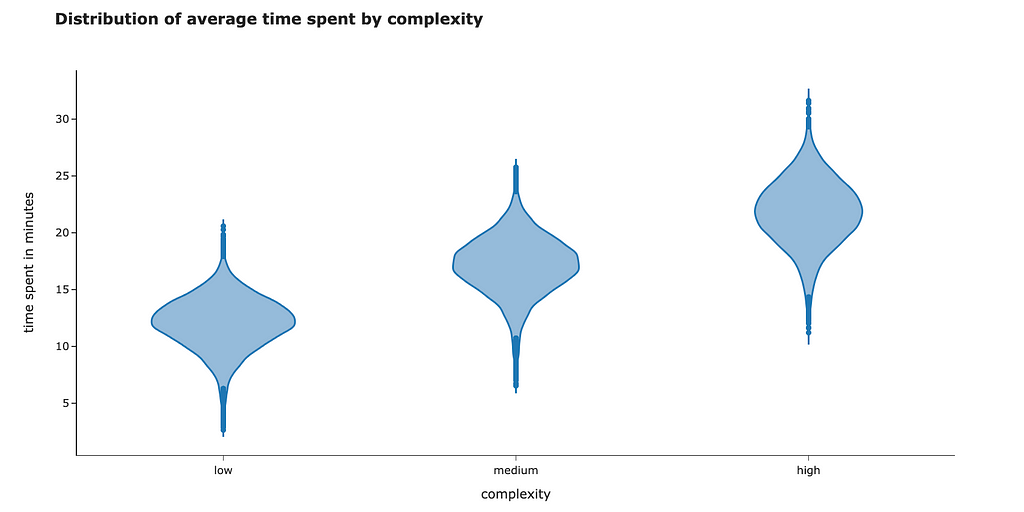

- complexity equals low, medium or high. This feature is based on the category of the customer’s question and defines how much time an agent is supposed to spend solving this case.

- tenure — number of months since the agent started working.

- passed_training — whether the agent passed LLM training. This value can be equal to True only for the treatment group since this training wasn’t offered to the agents from the control group.

- within_sla equals 1 if the agent was able to answer the question within SLA (15 minutes).

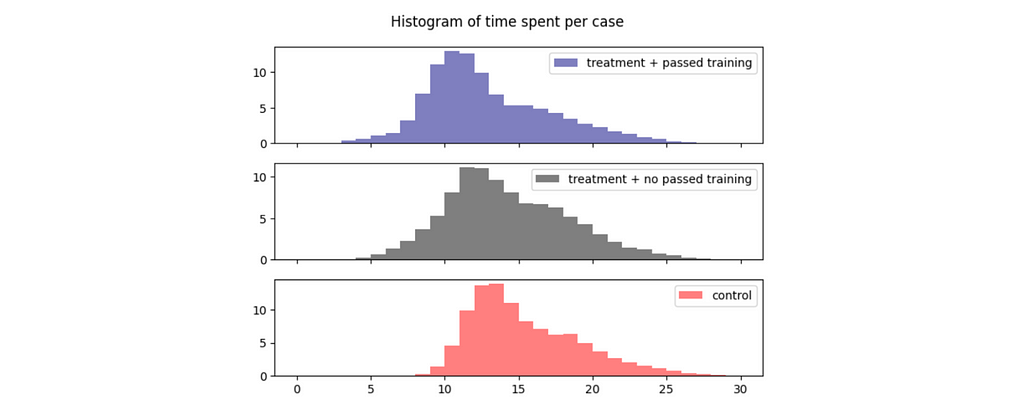

As usual, let’s start with a high-level overview of the data. We have quite a lot of data points, so we will likely be able to get statistically significant results. Also, we can see way lower average response times for the treatment group, so we can hope that the LLM tool really helps.

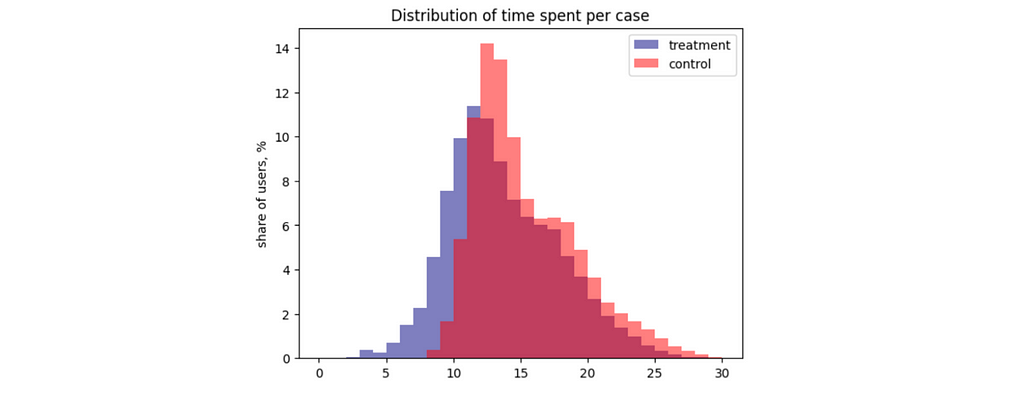

I also usually look at the actual distributions since average statistics might be misleading. In this case, we can see two unimodal distributions without distinctive outliers.

Classic statistical approach

The classic approach to analysing A/B tests is to use statistical formulas. Using the scipy package, we can calculate the confidence interval for the difference between the two means.

# defining samples

control_values = df[df.treatment == 0].time_spent_mins.values

exp_values = df[df.treatment == 1].time_spent_mins.values

# calculating p-values

from scipy.stats import ttest_ind

ttest_ind(exp_values, control_values)

# Output: TtestResult(statistic=-70.2769283935386, pvalue=0.0, df=89742.0)

We got a p-value below 1%. So, we can reject the null hypothesis and conclude that there’s a difference in average time spent per case in the control and test groups. To understand the effect size, we can also calculate the confidence interval.

from scipy import stats

import numpy as np

# Calculate sample statistics

mean1, mean2 = np.mean(exp_values), np.mean(control_values)

std1, std2 = np.std(exp_values, ddof=1), np.std(control_values, ddof=1)

n1, n2 = len(exp_values), len(control_values)

pooled_std = np.sqrt(((n1 - 1) * std1**2 + (n2 - 1) * std2**2) / (n1 + n2 - 2))

degrees_of_freedom = n1 + n2 - 2

confidence_level = 0.95

# Calculate margin of error

margin_of_error = stats.t.ppf((1 + confidence_level) / 2, degrees_of_freedom) * pooled_std * np.sqrt(1 / n1 + 1 / n2)

# Calculate confidence interval

mean_difference = mean1 - mean2

conf_interval = (mean_difference - margin_of_error,

mean_difference + margin_of_error)

print("Confidence Interval:", list(map(lambda x: round(x, 3), conf_interval)))

# Output: Confidence Interval: [-1.918, -1.814]

As expected, since the p-value is below 5%, our confidence interval doesn’t include 0.
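If you prefer not to rely on the formula, a bootstrap is a common cross-check for such an interval. It is a different technique from the t-based interval above, and the sketch below runs on simulated samples (the means, standard deviation and sample sizes are assumptions, not our experiment data); `bootstrap_ci` is a hypothetical helper name.

```python
import numpy as np

rng = np.random.default_rng(4)
control = rng.normal(20.0, 4.0, 5_000)   # hypothetical control times, minutes
exp = rng.normal(18.0, 4.0, 5_000)       # hypothetical treatment times, minutes

def bootstrap_ci(a, b, n_boot=2_000, level=0.95, rng=rng):
    """Percentile bootstrap interval for mean(a) - mean(b)."""
    diffs = np.empty(n_boot)
    for i in range(n_boot):
        # Resample each group with replacement and recompute the difference.
        diffs[i] = rng.choice(a, a.size).mean() - rng.choice(b, b.size).mean()
    lo, hi = np.quantile(diffs, [(1 - level) / 2, (1 + level) / 2])
    return lo, hi

lo, hi = bootstrap_ci(exp, control)
print(f"95% bootstrap CI for the difference: ({lo:.3f}, {hi:.3f})")
```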

The traditional approach works. However, we can get the same results with linear regression, which will also allow us to do more advanced analysis later. So, let’s discuss this method.

Linear regression basics

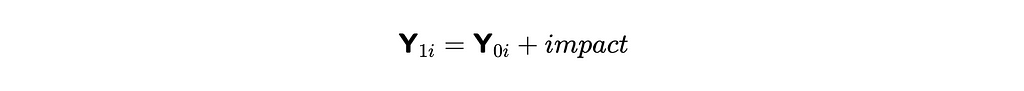

As we already discussed, observing both potential outcomes (with and without treatment) for the same object is impossible. Since we won’t be able to estimate the impact on each object individually, we need a model. Let’s assume the constant treatment effect.

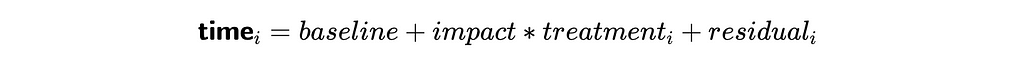

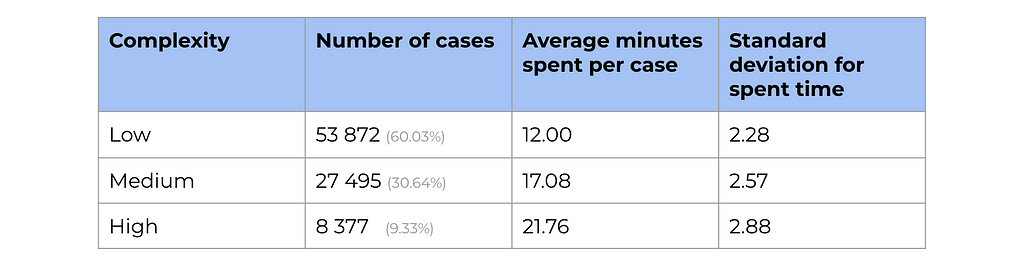

Then, we can write down the relation between outcome (time spent on request) and treatment in the following way, where

- baseline is a constant that shows the basic level of outcome,

- residual represents other potential relationships we don’t care about right now (for example, the agent’s maturity or complexity of the case).
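Written out, the relation described above looks as follows. The symbol names are my reading of the text (baseline, impact, residual), and the index i stands for a single case.

```latex
\text{time\_spent}_i = \text{baseline} + \text{impact} \cdot \text{treatment}_i + \text{residual}_i
```

With a binary treatment flag, impact is the difference in expected time spent between the treated and untreated cases under the constant-effect assumption.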

It’s a linear equation, and we can get the estimation of the impact variable using linear regression. We will use the OLS (Ordinary Least Squares) function from the statsmodels package.

import statsmodels.formula.api as smf

model = smf.ols('time_spent_mins ~ treatment', data=df).fit()

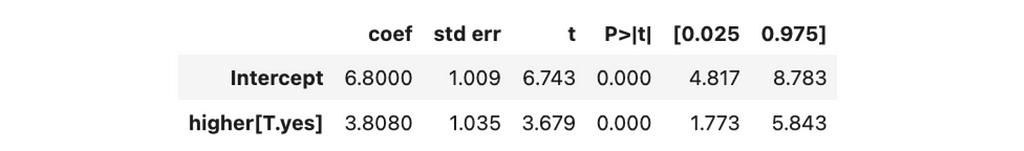

model.summary().tables[1]

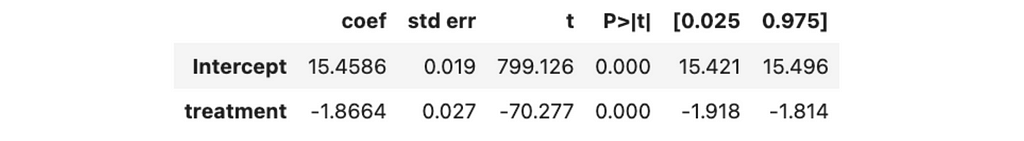

In the result, we got all the needed info: estimation of the effect (coefficient for the treatment variable), its p-value and confidence interval.

Since the p-value is negligible (definitely below 1%), we can consider the effect significant and say that our LLM-based tool helps to reduce the time spent on a case by 1.866 minutes with a 95% confidence interval (1.814, 1.918). You can notice that we got exactly the same result as with statistical formulas before.
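The match is not a coincidence: with a single binary regressor, the OLS slope is exactly the difference between group means, and the intercept is the control mean. Here is a small sketch of that fact on simulated data (the numbers are assumptions), using numpy’s least squares instead of statsmodels to keep it dependency-light.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 10_000
treatment = rng.integers(0, 2, n)
time_spent = 20.0 - 1.9 * treatment + rng.normal(0, 4, n)   # hypothetical outcome

# Design matrix: a column of ones (baseline) and the treatment flag (impact).
X = np.column_stack([np.ones(n), treatment])
coef, *_ = np.linalg.lstsq(X, time_spent, rcond=None)
baseline, impact = coef

diff_in_means = (time_spent[treatment == 1].mean()
                 - time_spent[treatment == 0].mean())

print(f"OLS impact: {impact:.4f}, difference in means: {diff_in_means:.4f}")
```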

Adding more variables

As promised, we can make a more complex analysis with linear regression and take into account more factors, so let’s do it. In our initial approach, we used only one regressor — treatment flag. However, we can add more variables (for example, complexity).

In this case, the impact will show estimation after accounting for all the effects of other variables in the model (in our case — task complexity). Let’s estimate it. Adding more variables into the regression model is straightforward — we just need to add another component to the equation.

import statsmodels.formula.api as smf

model = smf.ols('time_spent_mins ~ treatment + complexity', data=df).fit()

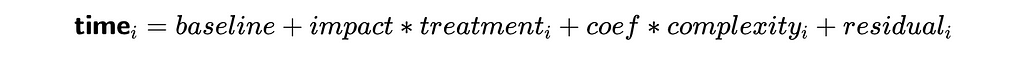

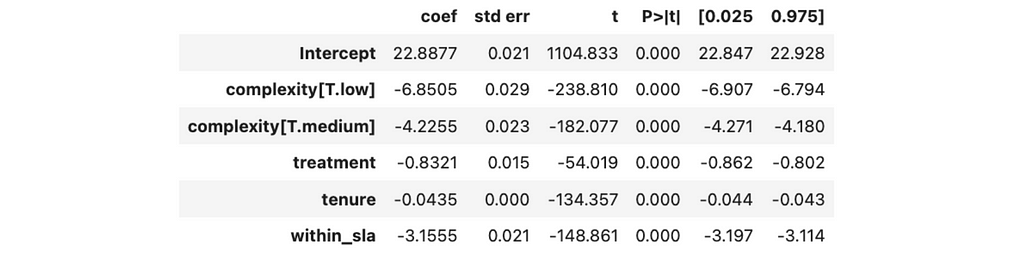

model.summary().tables[1]

Now, we see a bit higher estimation of the effect — 1.91 vs 1.87 minutes. Also, the error has decreased (0.015 vs 0.027), and the confidence interval has narrowed.

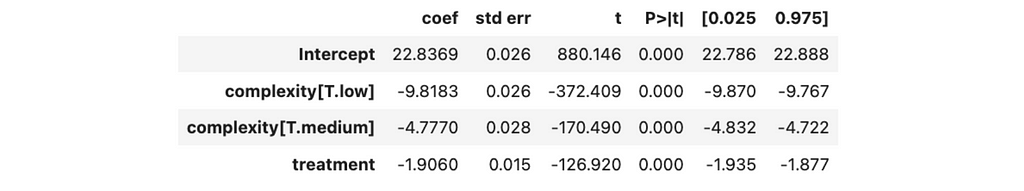

You can also notice that since complexity is a categorical variable, it was automatically converted into a bunch of dummy variables. So, we got estimations of -9.8 minutes for low-complexity tasks and -4.7 minutes for medium ones.

Let’s try to understand why we got a more confident result after adding complexity. Time spent on a customer case significantly depends on the complexity of the tasks. So, complexity is responsible for a significant amount of our variable’s variability.

As I mentioned before, the coefficient for treatment estimates the impact after accounting for all the other factors in the equation. When we added complexity to our linear regression, it reduced the variance of residuals, and that’s why we got a narrower confidence interval for the treatment effect.

Let’s double-check that complexity explains a significant proportion of variance. We can see a considerable decrease: time spent has a variance equal to 16.6, but when we account for complexity, it reduces to just 5.9.

time_model = smf.ols('time_spent_mins ~ complexity', data=df).fit()

print('Initial variance: %.2f' % (df.time_spent_mins.var()))

print('Residual variance after accounting for complexity: %.2f'

% (time_model.resid.var()))

# Output:

# Initial variance: 16.63

# Residual variance after accounting for complexity: 5.94

So, we can see that adding a factor that can predict the outcome variable to a linear regression can improve your effect size estimations. Also, it’s worth noting that the variable is not correlated with treatment assignment (the tasks of each complexity have equal chances to be in the control or test group).
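To see this mechanism in isolation, here is a sketch on simulated data (all coefficients are assumptions) that computes the standard error of the treatment coefficient with and without a complexity-like covariate that is independent of treatment. The `ols` helper is a hypothetical name and uses the textbook formula for OLS standard errors.

```python
import numpy as np

def ols(X, y):
    """Return OLS coefficients and their classical standard errors."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    sigma2 = resid @ resid / (len(y) - X.shape[1])   # residual variance
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    return coef, se

rng = np.random.default_rng(6)
n = 20_000
treatment = rng.integers(0, 2, n)
complexity = rng.integers(0, 3, n)   # 0 = low, 1 = medium, 2 = high
time_spent = 10 + 5 * complexity - 2 * treatment + rng.normal(0, 2, n)

ones = np.ones(n)
dummies = np.column_stack([complexity == 1, complexity == 2]).astype(float)

coef_a, se_a = ols(np.column_stack([ones, treatment]), time_spent)
coef_b, se_b = ols(np.column_stack([ones, treatment, dummies]), time_spent)

print(f"without complexity: {coef_a[1]:.3f} (se {se_a[1]:.3f})")
print(f"with complexity:    {coef_b[1]:.3f} (se {se_b[1]:.3f})")
```

Both models give an estimate close to the assumed effect of -2, but the second one has a noticeably smaller standard error.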

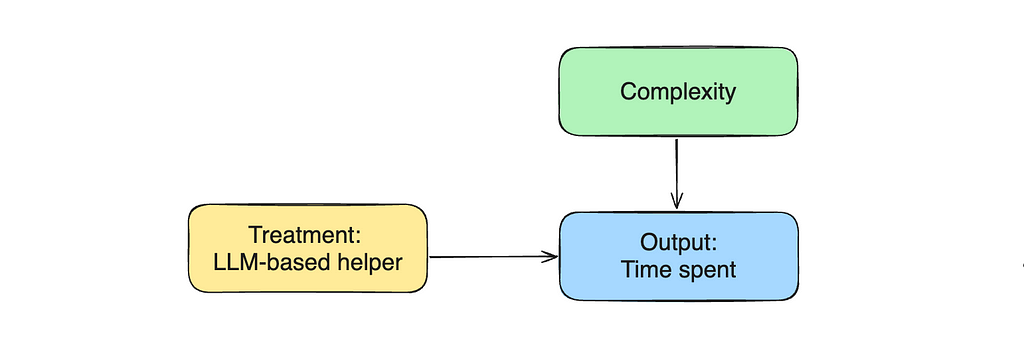

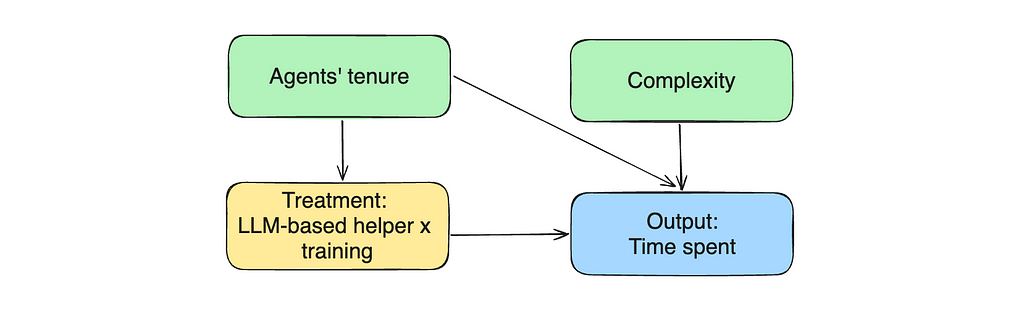

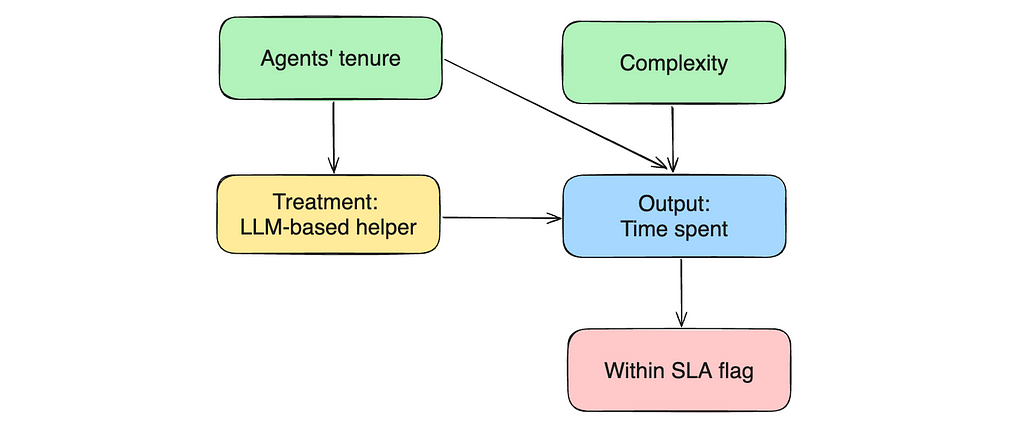

Traditionally, causal graphs are used to show the relationships between the variables. Let’s draw such a graph to represent our current situation.

Non-linear relationships

So far, we’ve looked only at linear relationships, but sometimes, it’s not enough to model our situation.

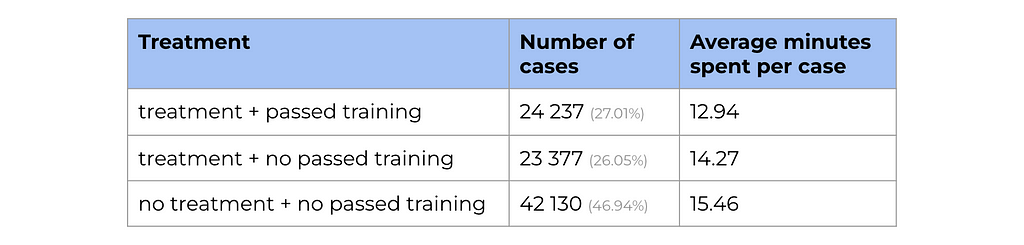

Let’s look at the data on LLM training that agents from the experiment group were supposed to pass. Only half of them have passed the LLM training and learned how to use the new tool effectively.

We can see a significant difference in average time spent for the treatment group who passed training vs. those who didn’t.

So, we should expect different impacts from treatment for these two groups. We can use non-linearity (an interaction term) to express such relationships in formulas and add a treatment * passed_training component to our equation.

model = smf.ols('time_spent_mins ~ treatment * passed_training + complexity',

data=df).fit()

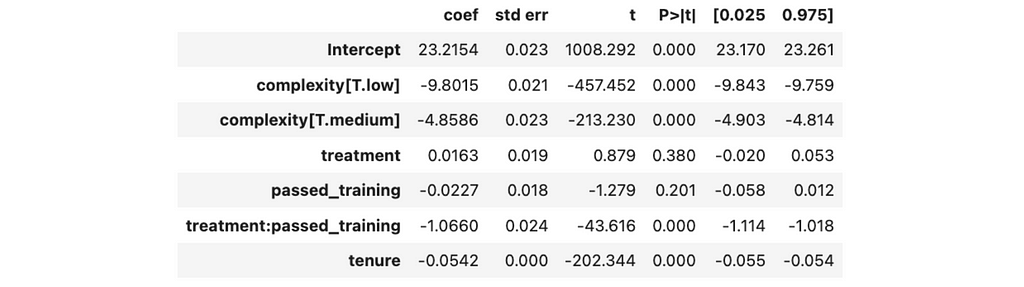

model.summary().tables[1]

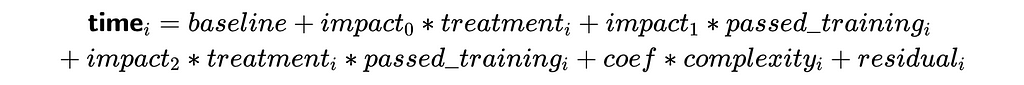

The treatment and passed_training factors will also be automatically added to the regression. So, we will be optimising the following formula.

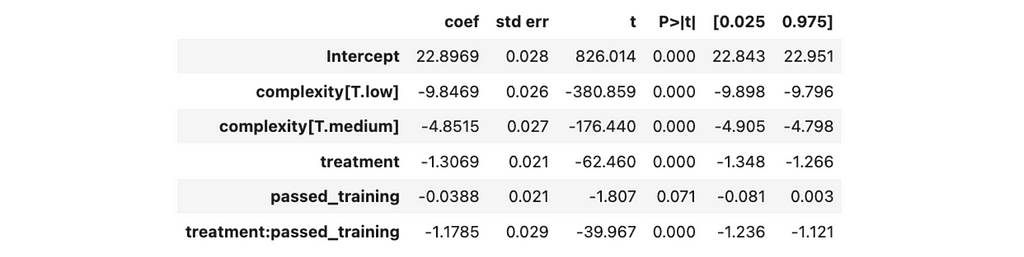

We got the following results from the linear regression.

No statistically significant effect is associated with passed training since the p-value is above 5%, while other coefficients differ from zero.

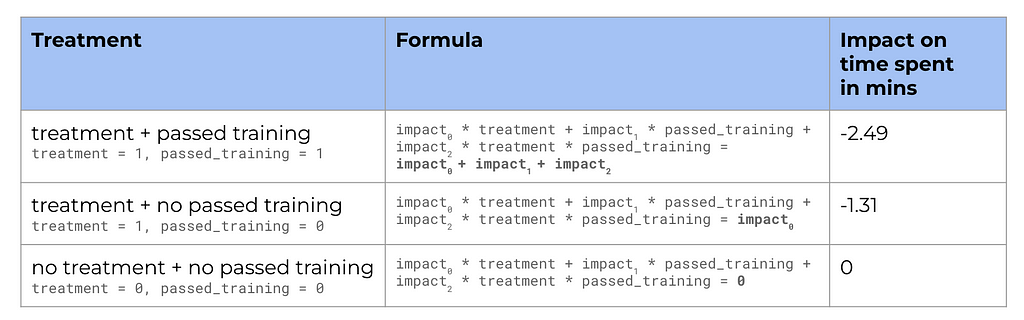

Let’s put down all the different scenarios and estimate the effects using the coefficients we got from the linear regression.

So, we’ve got new treatment estimations: 2.5 minutes improvement per case for the agents who have passed the training and 1.3 minutes — for those who didn’t.
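Here is a sketch of how such scenario effects are assembled from coefficients, on simulated data with assumed effects of 1.0 and 2.5 minutes. One simplification on my side: since training exists only in the treatment group in this simulation, a separate passed_training column would be identical to the interaction column, so the sketch keeps only the treatment flag and the interaction.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 50_000
treatment = rng.integers(0, 2, n)
passed = treatment * rng.integers(0, 2, n)   # training is possible only when treated
time_spent = (20 - 1.0 * treatment - 1.5 * treatment * passed
              + rng.normal(0, 3, n))         # hypothetical outcome

# Columns: baseline, treatment, treatment x passed_training interaction.
X = np.column_stack([np.ones(n), treatment, treatment * passed])
coef, *_ = np.linalg.lstsq(X, time_spent, rcond=None)
baseline, b_treatment, b_interaction = coef

effect_untrained = b_treatment                  # treated, no training vs control
effect_trained = b_treatment + b_interaction    # treated, trained vs control

print(f"effect without training: {effect_untrained:.2f}")
print(f"effect with training:    {effect_trained:.2f}")
```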

Confounders

Before jumping to conclusions, it’s worth double-checking some assumptions we made — for example, random assignment. We’ve discussed that we launched the experiment in some CS centres. Let’s check whether agents in the different centres are similar so that our control and test groups are non-biased.

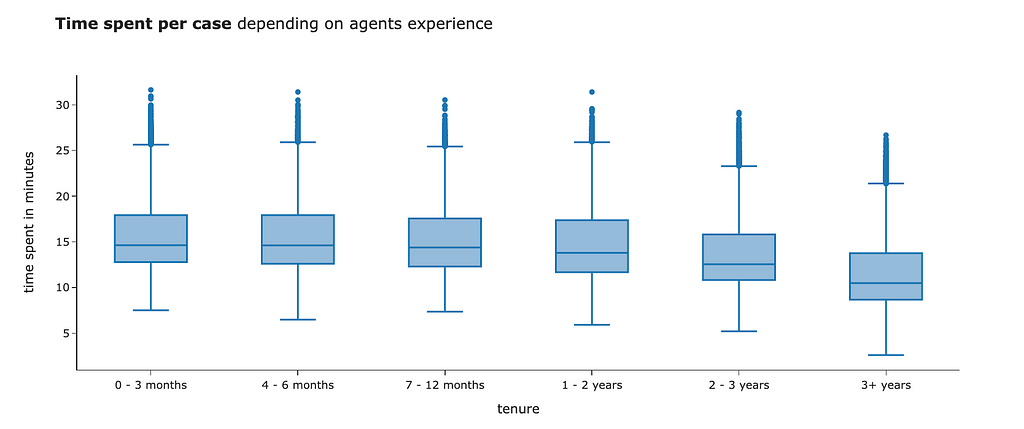

We know that agents differ by experience, which might significantly affect their performance. Our day-to-day intuition tells us that more experienced agents will spend less time on tasks. We can see in the data that it is actually like this.

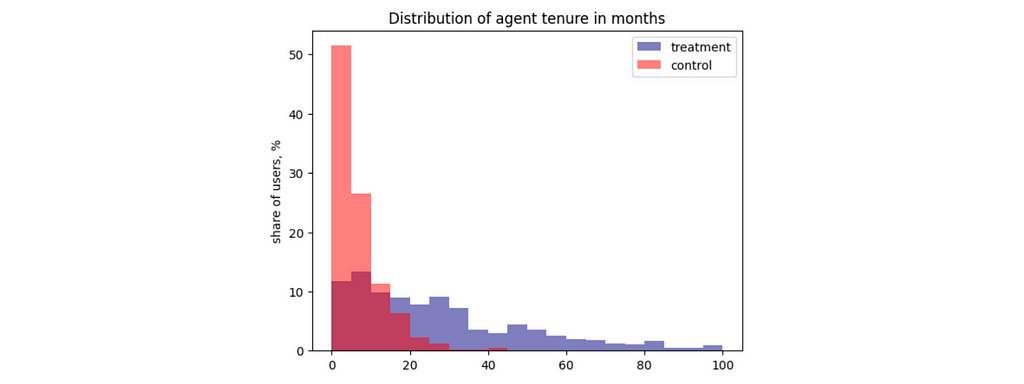

Let’s see whether our experiment and control have the same level of agents’ experience. The easiest way to do it is to look at distributions.

Apparently, agents in the treatment group have much more experience than the ones in the control group. Overall, it makes sense that the product team decided to launch the experiment, starting with the more trained agents. However, it breaks our assumption about random assignment. Since the control and test groups are different even without treatment, we are overestimating the effect of our LLM tool on the agents’ performance.

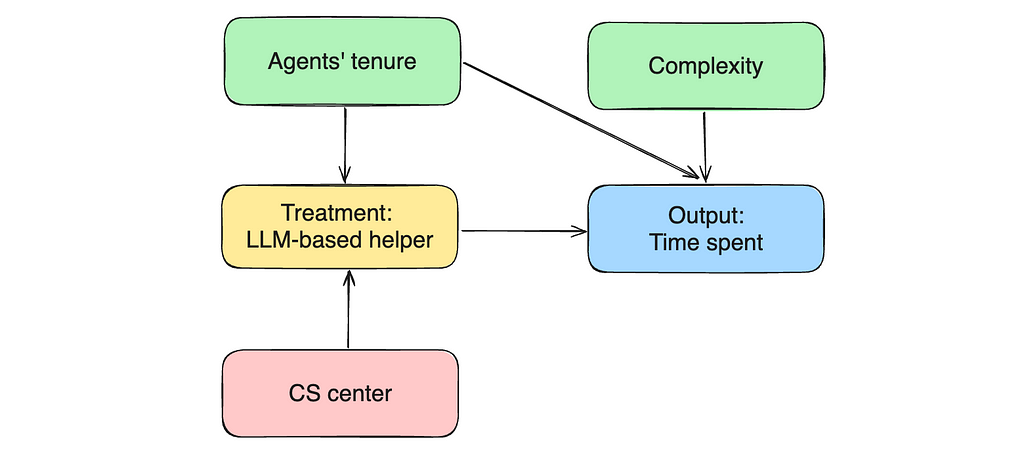

Let’s return to our causal graph. The agent’s experience affects both treatment assignment and output variable (time spent). Such variables are called confounders.

Don’t worry. We can solve this issue effortlessly: we just need to include confounders in our equation to control for them. When we add tenure to the linear regression, we start to estimate the treatment effect with fixed experience, eliminating the bias. Let’s try to do it.

model = smf.ols('time_spent_mins ~ treatment * passed_training + complexity + tenure', data=df).fit()

model.summary().tables[1]

With added tenure, we got the following results:

- There is no statistically significant effect of passed training or treatment alone since the p-value is above 5%. So, we can conclude that an LLM helper does not affect agents’ performance unless they have passed the training. In the previous iteration, we saw a statistically significant effect, but it was due to tenure confounding bias.

- The only statistically significant effect is for the treatment group with passed training. It equals 1.07 minutes with a 95% confidence interval (1.02, 1.11).

- Each month of tenure is associated with 0.05 minutes less time spent on the task.

We are working with synthetic data so we can easily compare our estimations with actual effects. The LLM tool reduces the time spent per task by 1 minute if the agent has passed the training, so our estimations are pretty accurate.
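The confounding mechanism is easy to reproduce in a few lines. This is a sketch with its own assumed data-generating process (it is not the article’s dataset): tenure makes agents both faster and more likely to get the tool, the true effect is set to -1 minute, and we compare the estimate with and without the tenure control.

```python
import numpy as np

rng = np.random.default_rng(8)
n = 50_000
tenure = rng.uniform(0, 36, n)   # months of experience
# Experienced agents are more likely to receive the tool (non-random assignment).
treatment = (rng.uniform(0, 1, n) < tenure / 36).astype(float)
time_spent = 20 - 0.05 * tenure - 1.0 * treatment + rng.normal(0, 2, n)

def fit(X, y):
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

ones = np.ones(n)
naive = fit(np.column_stack([ones, treatment]), time_spent)[1]
adjusted = fit(np.column_stack([ones, treatment, tenure]), time_spent)[1]

print(f"naive estimate:    {naive:.2f}")
print(f"adjusted estimate: {adjusted:.2f}")
```

The naive estimate overstates the benefit because it also absorbs the experience gap between groups, while the adjusted one lands near the assumed -1.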

Bad controls

Machine learning tasks are often straightforward: you gather data with all possible features you can get, try to fit some models, compare their performance and pick the best one. Contrarily, causal inference requires some art and a deep understanding of the process you’re working with. One of the essential questions is what features are worth including in regression and which ones will spoil your results.

Till now, all the additional variables we’ve added to the linear regression have been improving the accuracy. So, you might think adding all your features to regression will be the best strategy. Unfortunately, it’s not that easy for causal inference. In this section, we will look at a couple of cases when additional variables decrease the accuracy of our estimations.

For example, we have a CS centre in data. We’ve assigned treatment based on the CS centre, so including it in the regression might sound reasonable. Let’s try.

model = smf.ols('time_spent_mins ~ treatment + complexity + tenure + cs_center',

data=df[df.treatment == df.passed_training]).fit()

model.summary().tables[1]

For simplicity, I’ve removed non-linearity from our dataset and equation, filtering out cases where the agents from the treatment groups didn’t pass the LLM training.

If we include the CS centre in the linear regression, we will get a ridiculously high estimation of the effect (on the order of billions) without statistical significance. So, this variable is more harmful than helpful.

Let’s update a causal chart and try to understand why it doesn’t work. CS centre is a predictor for our treatment but has no relationship with the output variable (so it’s not a confounder). Adding a treatment predictor leads to multicollinearity (like in our case) or reduces the treatment variance (it’s challenging to estimate the effect of treatment on the output variable since treatment doesn’t change much). So, it’s a bad practice to add such variables to the equation.
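The multicollinearity is visible directly in the design matrix. In this sketch, I assume four hypothetical centres with the tool launched in two of them, so the treatment flag is an exact linear combination of the intercept and the centre dummies, and the matrix loses a rank.

```python
import numpy as np

rng = np.random.default_rng(9)
n = 1_000
cs_center = rng.integers(0, 4, n)                      # hypothetical centres 0..3
treatment = np.isin(cs_center, [0, 1]).astype(float)   # tool launched in centres 0 and 1

ones = np.ones(n)
# Dummy variables for centres 1..3 (centre 0 is the reference level).
center_dummies = np.column_stack([cs_center == k for k in (1, 2, 3)]).astype(float)
X = np.column_stack([ones, treatment, center_dummies])

rank = np.linalg.matrix_rank(X)
print(f"columns: {X.shape[1]}, rank: {rank}")
```

A rank lower than the number of columns means the regression can’t separate the treatment effect from the centre effects, which is why the coefficients blow up.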

Let’s move on to another example. We have a within_sla variable showing whether the agents finished the task within 15 minutes. Can this variable improve the quality of our effect estimations? Let’s see.

model = smf.ols('time_spent_mins ~ treatment + complexity + tenure + within_sla',

data=df[df.treatment == df.passed_training]).fit()

model.summary().tables[1]

The new effect estimation is way lower: 0.8 vs 1.1 minutes. So, it poses a question: which one is more accurate? We’ve added more parameters to this model, so it’s more complex. Should it give more precise results, then? Unfortunately, it’s not always like that. Let’s dig deeper into it.

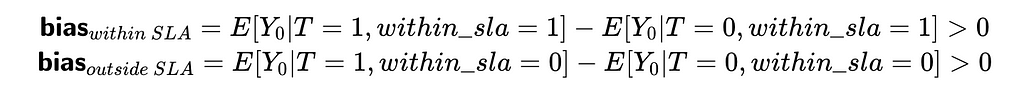

In this case, within_sla flag shows whether the agent solved the problem within 15 minutes or the question took more time. So, if we return to our causal chart, within_sla flag is an outcome of our output variable (time spent on the task).

When we add the within_sla flag into the regression and control for it, we start to estimate the effect of treatment with a fixed value of within_sla. So, we will have two cases: within_sla = 1 and within_sla = 0. Let’s look at the bias for each of them.

In both cases, bias is not equal to 0, which means our estimation is biased. At first glance, it might look a bit counterintuitive. Let me explain the logic behind it a bit.

- In the first equation, we compare cases where agents finished the tasks within 15 minutes with the help of the LLM tool and without. The previous analysis shows that the LLM tool (our treatment) tends to speed up agents’ work. So, if we compare the expected time spent on tasks without treatments (when agents work independently without the LLM tool), we should expect quicker responses from the second group.

- Similarly, for the second equation, we are comparing agents who haven’t completed tasks within 15 minutes, even with the help of the LLM tool, with those who also exceeded 15 minutes while working on their own. Again, we should expect longer response times from the first group without treatment.

It’s an example of selection bias — a case when we control for a variable on the path from treatment to output variable or outcome of the output variable. Controlling for such variables in a linear regression also leads to biased estimations, so don’t do it.
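A simulated sketch of this bad control, under assumed numbers (a true effect of -1 minute, randomised treatment and a 15-minute SLA): the regression without within_sla recovers the effect, while controlling for the flag pulls the estimate towards zero.

```python
import numpy as np

rng = np.random.default_rng(10)
n = 100_000
treatment = rng.integers(0, 2, n).astype(float)    # randomised assignment
time_spent = 16 - 1.0 * treatment + rng.normal(0, 3, n)
within_sla = (time_spent <= 15).astype(float)      # a consequence of the outcome

def fit(X, y):
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

ones = np.ones(n)
good = fit(np.column_stack([ones, treatment]), time_spent)[1]
bad = fit(np.column_stack([ones, treatment, within_sla]), time_spent)[1]

print(f"without within_sla: {good:.2f}")
print(f"with within_sla:    {bad:.2f}")
```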

Grouped data

In some cases, you might not have granular data. In our example, we might not know the time spent on each task individually, but know the averages. It’s easier to track aggregated numbers for agents. For example, “within two hours, an agent closed 15 medium tasks”. We can aggregate our raw data to get such statistics.

agents_df = df.groupby(['agent_id', 'treatment', 'complexity', 'tenure',

'passed_training'], as_index = False).aggregate(

{'case_id': 'nunique', 'time_spent_mins': 'mean'}

)

It’s not a problem for linear regression to deal with agent-level data. We just need to switch to weighted least squares (WLS) and specify weights for each agent (equal to the number of cases).

# keeping only the rows without the non-linearity, as before

grouped_df = agents_df[agents_df.treatment == agents_df.passed_training]

model = smf.wls('time_spent_mins ~ treatment + complexity + tenure',

data = grouped_df,

weights = grouped_df['case_id']).fit()

model.summary().tables[1]

With aggregated data, we have roughly the same results for the effect of treatment. So, there’s no problem if you have only average numbers.
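The reason it works can be checked numerically: when regressors are constant within an agent, least squares on agent averages weighted by the number of cases gives the same coefficients as least squares on the raw cases. Below is a sketch on simulated data (the agent counts and effects are assumptions), with weighting implemented by scaling rows by the square root of the weights.

```python
import numpy as np

rng = np.random.default_rng(11)
n_agents = 200
treatment = rng.integers(0, 2, n_agents).astype(float)
tenure = rng.uniform(0, 36, n_agents)
n_cases = rng.integers(5, 50, n_agents)   # cases handled by each agent

# Case-level data: each agent's features repeated for every case.
agent_idx = np.repeat(np.arange(n_agents), n_cases)
y = (20 - 1.0 * treatment[agent_idx] - 0.05 * tenure[agent_idx]
     + rng.normal(0, 2, agent_idx.size))
X_cases = np.column_stack([np.ones(agent_idx.size),
                           treatment[agent_idx], tenure[agent_idx]])
coef_cases, *_ = np.linalg.lstsq(X_cases, y, rcond=None)

# Agent-level data: mean time per agent, weighted by the number of cases.
y_mean = np.bincount(agent_idx, weights=y) / n_cases
X_agents = np.column_stack([np.ones(n_agents), treatment, tenure])
w = np.sqrt(n_cases)
coef_agents, *_ = np.linalg.lstsq(X_agents * w[:, None], y_mean * w, rcond=None)

print("case-level coefficients: ", np.round(coef_cases, 4))
print("agent-level coefficients:", np.round(coef_agents, 4))
```

Only the point estimates are guaranteed to coincide here; standard errors computed from aggregated data can differ from the case-level ones.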

Use case: observational data

We’ve looked at the A/B test examples for causal inference in detail. However, in many cases, we can’t conduct a proper randomised trial. Here are some examples:

- Some experiments are unethical. For example, you can’t push students to drink alcohol or smoke to see how it affects their performance at university.

- In some cases, you might be unable to conduct an A/B test because of legal limitations. For example, you can’t charge different prices for the same product.

- Sometimes, it’s just impossible. For example, if you are working on an extensive rebranding, you will have to launch it globally one day with a big PR announcement.

In such cases, you have to use just observations to make conclusions. Let’s see how our approach works in such a case. We will use the Student Performance data set from the UC Irvine Machine Learning Repository.

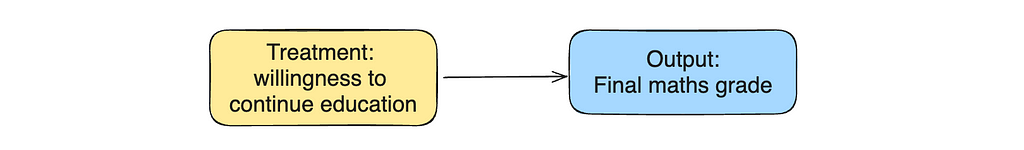

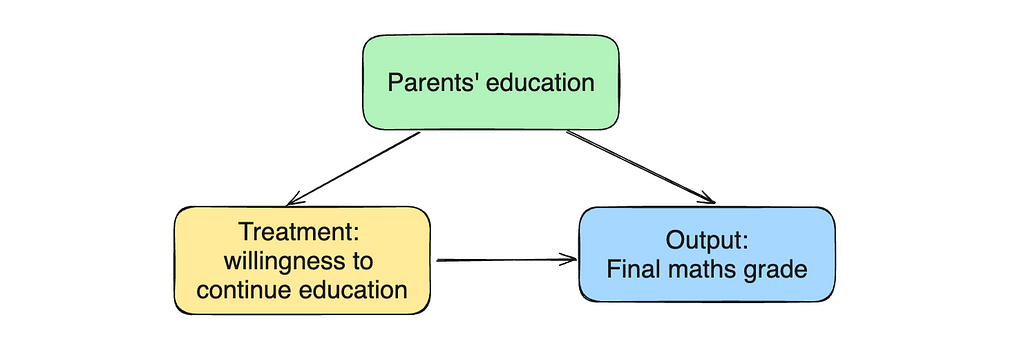

Let’s use this real-life data to investigate how willingness to take higher education affects the math class’s final score. We will start with a trivial model and a causal chart.

import pandas as pd

df = pd.read_csv('student-mat.csv', sep = ';')

model = smf.ols('G3 ~ higher', data=df).fit()

model.summary().tables[1]

We can see that, in this trivial model, willingness to continue education is associated with a statistically significant increase in the final grade for the course of 3.8 points.

However, there might be some confounders that we have to control for. For example, parents’ education can affect both treatments (children are more likely to plan to take higher education if their parents have it) and outcomes (educated parents are more likely to help their children so that they have higher grades). Let’s add the mother and father’s education level to the model.

model = smf.ols('G3 ~ higher + Medu + Fedu', data=df).fit()

model.summary().tables[1]

We can see a statistically significant effect from the mother’s education. We likely improved the accuracy of our estimation.

However, we should treat any causal conclusions based on observational data with a pinch of salt. We can’t be sure that we’ve taken into account all confounders and that the estimation we’ve got is entirely unbiased.

Also, it might be tricky to interpret the direction of the relation. We are sure there’s a correlation between willingness to continue education and final grade. However, we can interpret it in multiple ways:

- Students who want to continue their education are more motivated, so they have higher final grades.

- Students with higher final grades are inspired by their success in studying, and that’s why they want to continue their education.

With observational data, we can only use our common sense to choose one option or the other. There’s no way to infer this conclusion from data.

Despite the limitations, we can still use this tool to try our best to come to some conclusions about the world. As I mentioned, causal inference is based significantly on domain knowledge and common sense, so it’s worth spending time near the whiteboard to think deeply about the process you’re modelling. It will help you to achieve excellent results.

You can find complete code for these examples on GitHub.

Summary

We’ve discussed quite a broad topic of causal inference, so let me recap what we’ve learned:

- The main goal of predictive analytics is to get accurate forecasts. The causal inference is focused on understanding the relationships, so we care more about the coefficients in the model than the actual predictions.

- We can leverage linear regression to get the causal conclusions.

- Understanding what features we should add to the linear regression is an art, but here is some guidance.

— You must include confounders (features that affect both treatment and outcome).

— Adding a feature that predicts the output variable and explains its variability can help you to get more confident estimations.

— Avoid adding features that either affect only treatment or are the outcome of the output variable.

- You can use this approach for both A/B tests and observational data. However, with observations, we should treat our causal conclusions with a pinch of salt because we can never be sure that we accounted for all confounders.

Thank you a lot for reading this article. If you have any follow-up questions or comments, please leave them in the comments section.

Dataset

Cortez, Paulo. (2014). Student Performance. UCI Machine Learning Repository (CC BY 4.0). https://doi.org/10.24432/C5TG7T

Reference

All the images are produced by the author unless otherwise stated.

This article is inspired by the book Causal Inference for the Brave and True that gives a wonderful overview on the causal inference basics.

Linear Regressions for Causal Conclusions was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
