Go here to Read this Fast! Marathon Digital pays Hut 8 $13.5m to run two Bitcoin mining sites

Originally appeared here:

Marathon Digital pays Hut 8 $13.5m to run two Bitcoin mining sites

Despite Ripple’s major legal victories, including a court declaration that XRP is not a security last July, Ripple’s global business expansion, and XRPL’s robust growth over the past year, XRP’s price performance remains a cause for concern.

Originally appeared here:

XRP Army Debates Price ‘Weakness’ Even As Ripple CEO Envisions ‘Big Year’ Amid Key Legal Wins

Imagine a scenario where you just started an A/B test that will be running for the next two weeks. However, after just a day or two, it is becoming increasingly clear that version A is working better for certain types of users, whereas version B is working better for another set of users. You think to yourself: Perhaps I should re-route the traffic such that users are getting more of the version that is benefiting them more, and less of the other version. Is there a principled way to achieve this?

Contextual bandits are a class of one-step reinforcement learning algorithms specifically designed for such treatment personalization problems where we would like to dynamically adjust traffic based on which treatment is working for whom. Despite being incredibly powerful in what they can achieve, they are one of the lesser known methods in Data Science, and I hope that this post will give you a comprehensive introduction to this amazing topic. Without further ado, let’s dive right in!

If you are just getting started with contextual bandits, it can be confusing to understand how contextual bandits are related to other more widely known methods such as A/B testing, and why you might want to use contextual bandits instead of those other methods. Therefore, we start our journey by discussing the similarities and differences between contextual bandits and related methods.

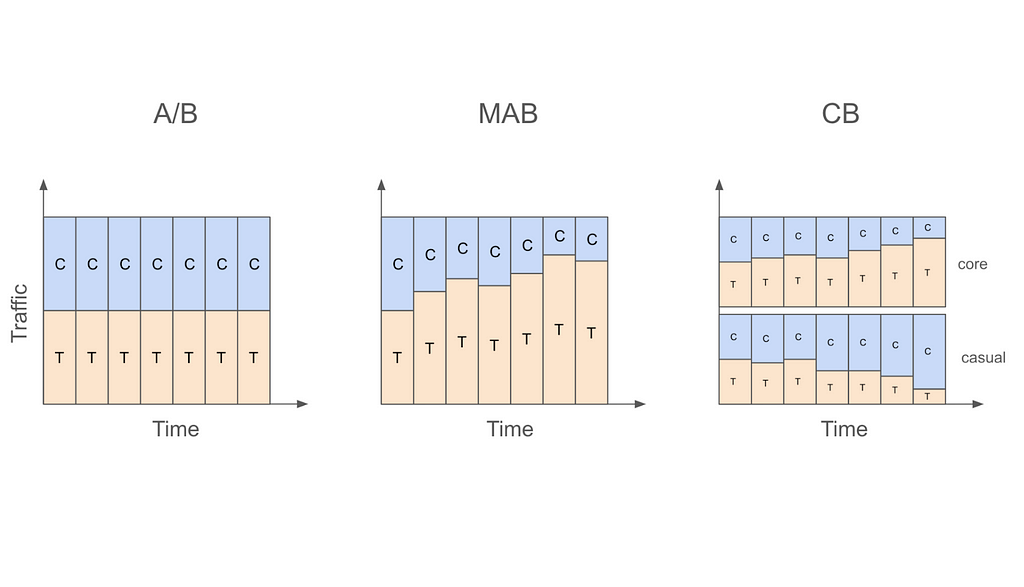

Let us start with the most basic A/B testing setting that allocates traffic into treatment and control in a static fashion. For example, a data scientist might decide to run an A/B test for two weeks with 50% of traffic going to treatment and 50% going to control. What this means is that regardless of whether we are on the first day of the test or the last, we will be assigning users to control or treatment with 50% probability.

On the other hand, if the data scientist were to use a multi-armed bandit (MAB) instead of an A/B test in this case, then traffic will be allocated to treatment and control in a dynamic fashion. In other words, traffic allocations in a MAB will change as days go by. For example, if the algorithm decides that treatment is doing better than control on the first day, the traffic allocation can change from 50% treatment and 50% control to 60% treatment vs 40% control on the second day, and so on.

Despite allocating traffic dynamically, MAB ignores an important fact, which is that not all users are the same. This means that a treatment that is working for one type of user might not work for another. For example, it might be the case that while treatment is working better for core users, control is actually better for casual users. In this case, even if treatment is better overall, we can actually get more value from our application if we assign more core users to treatment and more casual users to control.

This is exactly where contextual bandits (CB) come in. While MAB simply looks at whether treatment or control is doing better overall, CB focuses on whether treatment or control is doing better for a user with a given set of characteristics. The “context” in contextual bandits refers precisely to these user characteristics and is what differentiates CB from MAB. For example, CB might decide to increase treatment allocation to 60% for core users but decrease treatment allocation to 40% for casual users after observing the first day’s data. In other words, CB will dynamically update traffic allocation taking user characteristics (core vs casual in this example) into account.

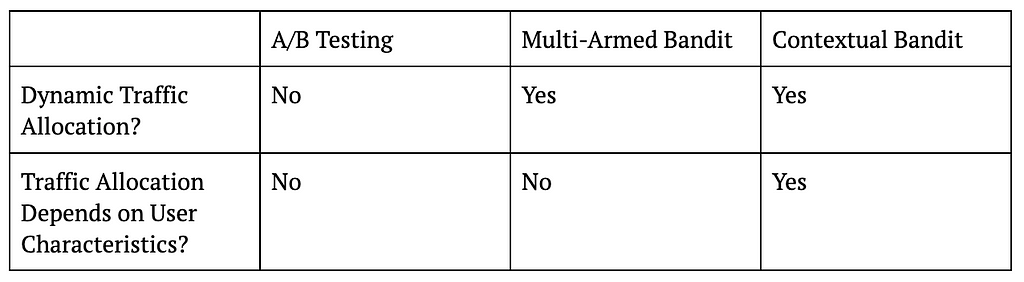

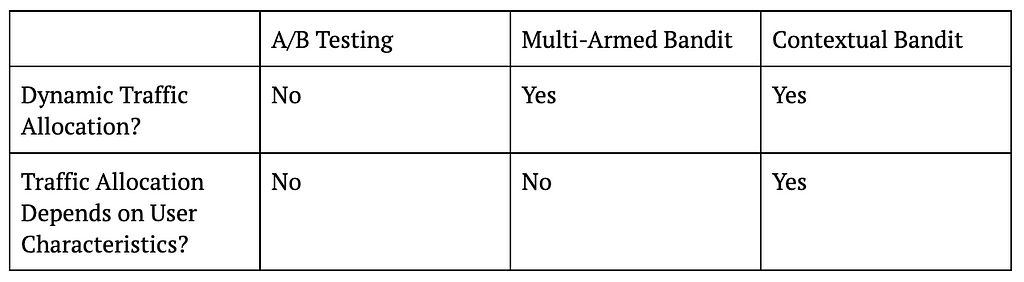

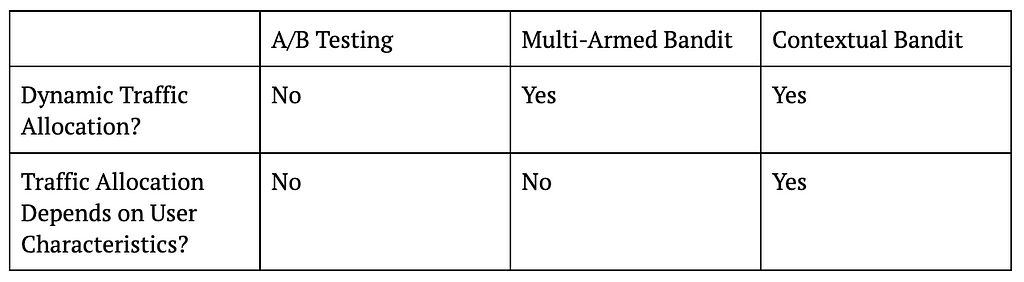

The following table summarizes the key differences between A/B testing, MAB, and CB, and the figure that follows visualizes these ideas.

Table 1: Differences Between A/B Testing, MAB, and CB

Figure 1: Traffic Allocations in A/B Testing, MAB, and CB

At this point, you might be tempted to think that CB is nothing more than a set of multiple MABs running together. In fact, when the context we are interested in is a small one (e.g., we are only interested in whether a user is a core user or a casual user), we can simply run one MAB for core users and another MAB for casual users. However, as the context gets large (core vs casual, age, country, time since last active, etc.) it becomes impractical to run a separate MAB for each unique context value.

The real value of CB emerges in this case through the use of models to describe the relationship of the experimental conditions in different contexts to our outcome of interest (e.g., conversion). As opposed to enumerating through each context value and treating them independently, the use of models allows us to share information from different contexts and makes it possible to handle large context spaces. This idea of a model will be discussed at several different points in this post, so keep on reading to learn more.

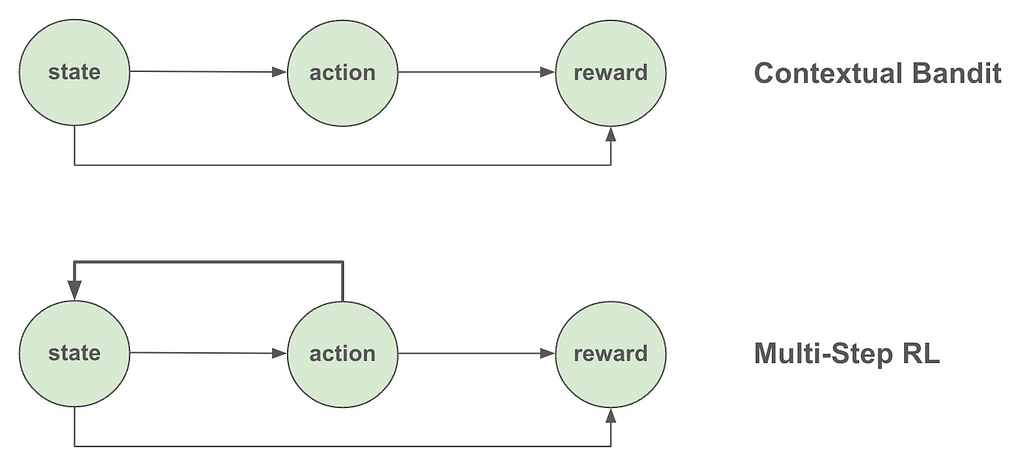

The introduction referred to CB as a class of one-step reinforcement learning (RL) algorithms. So, what exactly is the difference between one-step and multi-step RL? And what makes CB one-step? The fundamental difference between CB and multi-step RL is that in CB we assume the actions the algorithm takes (e.g., serve treatment or control to a specific user) don’t affect the future states of the overall system. In other words, the state (or “context”, as it is more appropriately called in CB) affects what action we take, but the action we take does not in turn change the state. The following figure summarizes this distinction.

Figure 2: Contextual Bandit vs Multi-Step RL

A few examples should make this distinction clearer. Let’s say that we are building a system to decide what ads to show to users based on their age. We would expect that users from different age groups may find different ads more relevant to them, which means that a user’s age should affect what ads we should show them. However, the ad we showed them doesn’t in turn affect their age, so the one-step assumption of CB seems to hold. However, if we move one step further and find out that serving expensive ads depletes our inventory (and limits which ads we can serve in the future) or that the ad we show today affects whether the user will visit our site again, then the one-step assumption is indirectly violated, so we may want to consider developing a full-blown RL system instead.

A note of caution though: While multi-step reinforcement learning is more flexible compared to contextual bandits, it’s also more complicated to implement. So, if the problem at hand can be accurately framed as a one-step problem (even though it looks like a multi-step problem at first glance), contextual bandits could be the more practical approach.

Before moving on to discussing different CB algorithms, I would also like to briefly touch upon the connection between CB and uplift modeling. An uplift model is usually built on top of A/B test data to discover the relationship between the treatment effect (uplift) and user characteristics. The results from such a model can then be used to personalize treatments in the future. For example, if the uplift model discovers that certain users are more likely to benefit from a treatment, then only those types of users might be given the treatment in the future.

Given this description of uplift modeling, it should be clear that both CB and uplift modeling are solutions to the personalization problem. The key difference between them is that CB approaches this problem in a more dynamic way in the sense that personalization happens on-the-fly instead of waiting for results from an A/B test. At a conceptual level, CB can very loosely be thought of as A/B testing and uplift modeling happening concurrently instead of sequentially. Given the focus of this post, I won’t be discussing uplift modeling further, but there are several great resources to learn more about it such as [1].

Above we discussed how CB dynamically allocates traffic depending on whether treatment or control is doing better for a given group of users at a given point in time. This raises an important question: How aggressive do we want to be when we are making these traffic allocation changes? For example, if after just one day of data we decide that treatment is working better for users from the US, should we completely stop serving control to US users?

I’m sure most of you would agree that this would be a bad idea, and you would be correct. The main problem with changing traffic allocations this aggressively is that making inferences based on insufficient amounts of data can lead to erroneous conclusions. For example, it might be that the first day of data we gathered is actually not representative of US users and that in reality control is better for them. If we stop serving control to US users after the first day, we will never be able to learn this correct relationship.

A better approach to dynamically updating traffic allocations is striking the right balance between exploitation (serve the best experimental condition based on the data so far) and exploration (continue to serve other experimental conditions as well). Continuing with the previous example, if data from the first day indicate that treatment is better for US users, we can serve treatment to these users with an increased probability the next day while still allocating a reduced but non-zero fraction to control.

There are numerous exploration strategies used in CB (and MAB) as well as several variations of them that try to strike this right balance between exploration and exploitation. Three popular strategies include ε-greedy, upper confidence bound, and Thompson sampling.

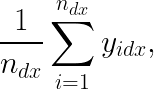

In this strategy, we first decide which experimental condition is doing better for a given group of users at a given point in time. The simplest way to do this is by comparing the average target values (y) for each experimental condition for these users. More formally, we can decide the “winning” condition for a group of users by finding the condition d that has the higher value for

$$\bar{y}_{dx} = \frac{1}{n_{dx}} \sum_{i=1}^{n_{dx}} y_{idx}$$

where n_dx is the number of samples we have so far from users in condition d with context x, and y_idx is the target value for a given sample i in condition d with context x.

After deciding which experimental condition is currently “best” for these users, we serve them that condition with 1-ε probability (where ε is usually a small number such as 0.05) and serve a random experimental condition with probability ε. In reality, we might want to dynamically update our ε such that it is large at the beginning of the experiment (when more exploration is needed) and gradually gets smaller as we collect more and more data.
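To make the ε-greedy rule more concrete, here is a minimal tabular sketch in Python. It is not the code from the notebook: the class name `EpsilonGreedyCB`, its methods, and the toy "core"/"casual" contexts are all assumptions made for illustration, and it keeps one running average of y per condition-context pair rather than using a model.

```python
import random
from collections import defaultdict


class EpsilonGreedyCB:
    """Tabular epsilon-greedy: one running mean of y per (condition, context)."""

    def __init__(self, conditions, epsilon=0.05, seed=0):
        self.conditions = list(conditions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.n = defaultdict(int)        # n_dx: samples seen per (d, x)
        self.total = defaultdict(float)  # running sum of y per (d, x)

    def mean(self, d, x):
        # Average y for condition d in context x (0.0 before any data).
        count = self.n[(d, x)]
        return self.total[(d, x)] / count if count else 0.0

    def best(self, x):
        # Condition with the highest average y so far in context x.
        return max(self.conditions, key=lambda d: self.mean(d, x))

    def choose(self, x):
        # Explore with probability epsilon, otherwise exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.conditions)
        return self.best(x)

    def update(self, d, x, y):
        self.n[(d, x)] += 1
        self.total[(d, x)] += y


# Toy data (hypothetical): treatment wins for core, control for casual.
policy = EpsilonGreedyCB(["control", "treatment"], epsilon=0.1)
policy.update("treatment", "core", 1.0)
policy.update("control", "core", 0.0)
policy.update("control", "casual", 1.0)
policy.update("treatment", "casual", 0.0)
print(policy.best("core"), policy.best("casual"))
```

A decaying ε could be obtained by lowering `policy.epsilon` as more samples arrive; the schedule itself is a design choice this sketch leaves open.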

Additionally, context X might be high-dimensional (country, gender, platform, tenure, etc.) so we might want to use a model to get these y estimates to deal with the curse of dimensionality. Formally, the condition to serve can be decided by finding the condition d that has the higher value for

$$x^T \theta_d$$

where x^T is an m-dimensional row-vector of context values and θ_d is an m-dimensional column-vector of learnable parameters associated with condition d.

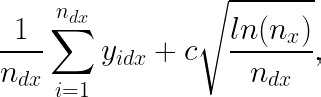

This strategy decides the next condition to serve by looking at not only which condition has a higher y estimate but also our precision of (or confidence in) that estimate. In a simple MAB setting, precision can be thought to be a function of how many times a given condition has already been served so far. In particular, a condition that (i) has a high average y (so it makes sense to exploit) or (ii) has not yet been served many times (so it needs more exploration) is more likely to be served next.

We can generalize this idea to the CB setting by keeping track of how many times different conditions are served in different contexts. Assuming a simple setting with a low-dimensional context X such that CB can be thought of as just multiple MABs running together, we can select the next condition to serve based on which condition d has the higher value for

$$\frac{1}{n_{dx}} \sum_{i=1}^{n_{dx}} y_{idx} + c \sqrt{\frac{\ln n_x}{n_{dx}}}$$

where c is some constant (to be selected based on how much emphasis we want to put on the precision of our estimate when exploring), n_x is the number of times context x is seen so far, and n_dx is the number of times condition d has been served in context x.
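As a small illustration of how such a score trades off exploitation and exploration, the sketch below assumes the common UCB1-style form: the average y for condition d in context x plus a bonus of c·sqrt(ln n_x / n_dx). The function names and the numbers in the example are hypothetical, and other UCB variants use different bonus terms.

```python
import math


def ucb_score(mean_y, n_dx, n_x, c=1.0):
    """Average y plus an exploration bonus that shrinks as n_dx grows."""
    if n_dx == 0:
        return math.inf  # an unserved condition is always tried first
    return mean_y + c * math.sqrt(math.log(n_x) / n_dx)


def choose_ucb(stats, n_x, c=1.0):
    """stats maps condition -> (mean_y, n_dx) for a single context x."""
    return max(stats, key=lambda d: ucb_score(stats[d][0], stats[d][1], n_x, c))


# Hypothetical counts for one context seen 100 times.
stats = {"control": (0.50, 10), "treatment": (0.55, 90)}
print(choose_ucb(stats, n_x=100))         # bonus favors the rarely served arm
print(choose_ucb(stats, n_x=100, c=0.0))  # c = 0 reduces to pure exploitation
```

In this toy case control is picked despite its lower average, because it has been served only 10 times and its estimate is much less certain.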

However, in most cases, the context X will be high-dimensional, which means that just like in the ε-greedy case, we would need to make use of a model. In this setting, a condition d can be served next if it has the higher value for

$$x^T \theta_d + c \cdot SE(x^T \theta_d)$$

where SE(.) is the standard error of our estimate (or more generally a metric that quantifies our current level of confidence in that estimate).

Note that there are several versions of UCB, so you will likely come across different formulas. A popular UCB method is LinUCB that formalizes the problem in a linear model framework (e.g., [2]).

The third and final exploration strategy to be discussed is Thompson sampling, which is a Bayesian approach to solving the exploration-exploitation dilemma. Here, we have a model f(D, X; Θ) that returns predicted y values given experimental condition D, context X, and some set of learnable parameters Θ. This function gives us access to posterior distributions of expected y values for any condition-context pair, thus allowing us to choose the next condition to serve according to the probability that it yields the highest expected y given context. Thompson sampling naturally balances exploration and exploitation as we are sampling from the posterior and updating our model based on the observations. To make these ideas more concrete, here are the steps involved in Thompson sampling:

In practice, instead of having a single function we can also use a different function for each experimental condition (e.g., evaluate both f_c(X; Θ_c) and f_t(X; Θ_t) and then select the condition with the higher value). Furthermore, the update step usually takes place not after each sample but rather after seeing a batch of samples. For more details on Thompson sampling, you can refer to [3] [4].
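To give a feel for the sample-then-update loop, here is a minimal sketch for the simplest possible case: a binary outcome y and a low-dimensional context, with an independent Beta posterior per condition-context pair. This is a simplification of the model-based description above, and the class `BetaThompsonCB` and the toy data are assumptions for illustration only.

```python
import random


class BetaThompsonCB:
    """Thompson sampling with a Beta(1, 1) prior per (condition, context)."""

    def __init__(self, conditions, seed=0):
        self.conditions = list(conditions)
        self.rng = random.Random(seed)
        self.params = {}  # (d, x) -> [alpha, beta]

    def _ab(self, d, x):
        return self.params.setdefault((d, x), [1.0, 1.0])

    def choose(self, x):
        # Draw one plausible success rate per condition, serve the largest.
        draws = {d: self.rng.betavariate(*self._ab(d, x)) for d in self.conditions}
        return max(draws, key=draws.get)

    def update(self, d, x, y):
        # Conjugate update for a binary outcome y in {0, 1}.
        ab = self._ab(d, x)
        ab[0] += y
        ab[1] += 1 - y


ts = BetaThompsonCB(["control", "treatment"])
for _ in range(50):  # hypothetical logged outcomes for core users
    ts.update("treatment", "core", 1)
    ts.update("control", "core", 0)

share = sum(ts.choose("core") == "treatment" for _ in range(200)) / 200
print(share)
```

Because each decision is a draw from the posterior, a condition with little data still gets served occasionally, which is where the exploration comes from.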

The previous section (especially the part on Thompson sampling) should already give you a pretty good sense of the steps involved in a CB algorithm. However, for the sake of completeness, here is a step-by-step description of a standard CB algorithm:

So far we have only discussed how to implement a CB algorithm as new data come in. An equally important topic to cover is how to evaluate a CB algorithm using old (or logged) data. This is called offline evaluation or offline policy evaluation (OPE).

One way to do OPE is using well-known causal inference techniques such as Inverse Propensity Scoring (IPS) or the Doubly Robust (DR) method. Causal inference is appropriate here because we are essentially trying to estimate the counterfactual of what would have happened if a different policy served a different condition to a user. There is already a great Medium article on this topic [5], so here I will only briefly summarize the main idea from that piece and adapt it to our discussion.

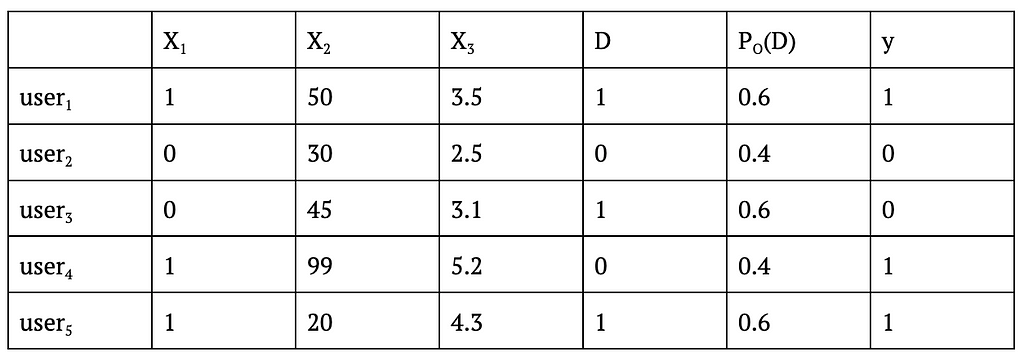

Taking IPS as an example, doing OPE usually requires us to know not only (i) the probability of assigning a given condition to a sample using our new CB algorithm but also (ii) the probability with which a given condition was assigned to a sample in the logged data. Take the following hypothetical logged data with X_1-X_3 being context, D being the experimental condition, P_O(D) being the probability of assigning D to that user, and y being the outcome.

Table 2: Example Logged Data From An A/B Test

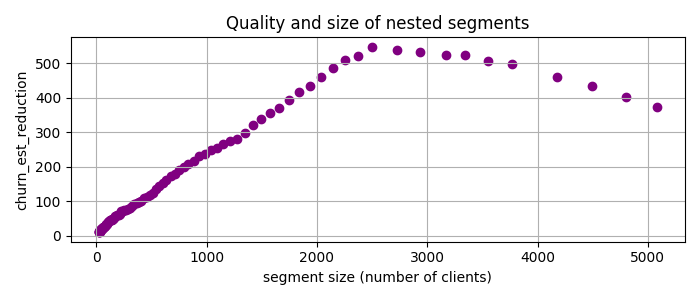

As you can see, in this example P_O(D) is always 0.6 for D=1 and 0.4 for D=0 regardless of the context, so the logged data can be assumed to come from an A/B test that assigns treatment with probability 0.6. Now, if we want to test how a CB algorithm would have performed had we assigned conditions using a CB algorithm rather than a simple A/B test, we can use the following formula to get the IPS estimate of the cumulative y for CB:

$$\hat{Y}_{CB} = \sum_{i=1}^{n} \frac{P_N(D_i)}{P_O(D_i)} \, y_i$$

where n is the number of samples in the logged data (which is 5 here) and P_N(D_i) is the probability of serving the logged D for user_i had we used the new CB algorithm instead (this probability will depend on the specific algorithm being evaluated).

Once we have this estimate, we can compare that to the observed cumulative y from the old A/B test (which is 1+0+0+1+1=3 here) to decide if the CB would have yielded a higher cumulative y.
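As a quick worked example, the sketch below computes an IPS estimate of the form Σ P_N(D_i) / P_O(D_i) · y_i. The outcomes and the logging probabilities (0.6 for D=1, 0.4 for D=0) follow the example above, but the logged conditions `d` and the new-policy probabilities `p_new` are hypothetical values chosen only to make the arithmetic visible.

```python
def ips_cumulative_y(y, p_old, p_new):
    """IPS estimate of cumulative y: reweight each logged outcome by P_N / P_O."""
    return sum(pn / po * yi for yi, po, pn in zip(y, p_old, p_new))


y = [1, 0, 0, 1, 1]                       # logged outcomes (sum is 3)
d = [1, 0, 1, 1, 0]                       # hypothetical logged conditions
p_old = [0.6 if di == 1 else 0.4 for di in d]
p_new = [0.9, 0.2, 0.7, 0.8, 0.5]         # hypothetical P_N(D_i) under the CB

estimate = ips_cumulative_y(y, p_old, p_new)
print(round(estimate, 4))                 # 1.5 + 1.3333 + 1.25 = 4.0833

# Sanity check: if the new policy equals the logging policy, we recover sum(y).
same = ips_cumulative_y(y, p_old, p_old)
```

With these made-up probabilities the estimate exceeds the observed cumulative y of 3, which would suggest the new policy does better on this tiny sample.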

For more information on OPE using causal inference methods, please refer to the article linked at the beginning of the section. The article also links to a nice GitHub repo with lots of OPE implementations.

A side note here is that this section discussed causal inference methods only as a technique used in OPE. However, in reality, one can also apply them while the CB algorithm is being run so as to “debias” the training data that the algorithm collects along the way. The reason why we might want to apply methods such as IPS to our training data is that the CB policy that generates this data is a non-uniform random policy by definition, so estimating causal effects from it to decide what action to take would benefit from using causal inference methods. If you would like to learn more about debiasing, please refer to [6].

Another way to do OPE is through the use of sampling methods. In particular, a very simple replay method [7] can be used to evaluate a CB algorithm (or any other algorithm for that matter) using logged data from a randomized policy such as an A/B test. In its simplest form (where we assume a uniform random logging policy), the method works as follows:

If the logging policy doesn’t assign treatments uniformly at random, then the method needs to be slightly modified. One modification that the authors themselves mention is to use rejection sampling (e.g., [8]) whereby we would accept samples from the majority treatment less often compared to the minority treatment in Step 3. Alternatively, we could consider dividing the observed y by the propensity in Step 3 to similarly “down-weight” the more frequent treatment and “up-weight” the less frequent one.
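The core idea of replay, as I understand it from [7], can be sketched in a few lines: stream through the logged events, ask the policy being evaluated what it would have served, and keep the event only when that matches what was actually logged. The sketch assumes a uniform random logging policy; the function name, the `choose`/`update` interface, and the `AlwaysTreat` stand-in policy are hypothetical.

```python
class AlwaysTreat:
    """Hypothetical stand-in policy: serves treatment (1) to everyone."""

    def choose(self, x):
        return 1

    def update(self, d, x, y):
        pass  # a learning policy would refit its model here


def replay_evaluate(logged, policy):
    """logged: iterable of (context, logged_condition, y) tuples."""
    total_y, matched = 0.0, 0
    for x, d_logged, y in logged:
        if policy.choose(x) == d_logged:
            # The policy agrees with the log: count the reward and learn from it.
            policy.update(d_logged, x, y)
            total_y += y
            matched += 1
        # Otherwise the event is discarded, since its outcome is unobserved.
    return total_y, matched


logged = [("core", 1, 1), ("core", 0, 0), ("casual", 1, 0),
          ("casual", 0, 1), ("core", 1, 1)]
total_y, matched = replay_evaluate(logged, AlwaysTreat())
print(total_y, matched)
```

Only the matched events contribute, so policies are usually compared on reward per matched event or over the same number of matched events.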

In the next section, I employ an even simpler method in my evaluation that uses up- and down-sampling with bootstrap to transform the original non-uniform data into a uniform one and then apply the method as it is.

To demonstrate contextual bandits in action, I put together a notebook that generates a simulated dataset and compares the cumulative y (or “reward”) estimates for new A/B, MAB, and CB policies evaluated on this dataset. Many parts of the code in this notebook are taken from the Contextual Bandits chapter of an amazing book on Reinforcement Learning [9] (highly recommended if you would like to dig deeper into Reinforcement Learning using Python) and two great posts by James LeDoux [10] [11] and adapted to the setting we are discussing here.

The setup is very simple: The original data we have comes from an A/B test that assigned treatment to users with probability 0.75 (so not uniformly at random). Using this randomized logged data, we would like to evaluate and compare the following three policies based on their cumulative y:

I modified the original method described in the Li et al. paper such that instead of directly sampling from the simulated data (which is 75% treatment and only 25% control in my example), I first down-sample treatment cases and up-sample control cases (both with replacement) to get a new dataset that is exactly 50% treatment and 50% control.

The reason why I start with a dataset that is not 50% treatment and 50% control is to show that even if the original data doesn’t come from a policy that assigns treatment and control uniformly at random, we can still work with that data to do offline evaluation after doing up- and/or down-sampling to massage it into a 50/50% dataset. As mentioned in the previous section, the logic behind up- and down-sampling is similar to rejection sampling and the related idea of dividing the observed y by the propensity.
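The resampling step can be sketched as follows. This is not the notebook's code: the function name, the `"d"` key marking treatment (1) versus control (0), and the toy 75/25 dataset are assumptions for illustration.

```python
import random


def balance_by_bootstrap(rows, n_per_arm, seed=0):
    """Resample with replacement so both arms have exactly n_per_arm rows."""
    rng = random.Random(seed)
    treated = [r for r in rows if r["d"] == 1]
    control = [r for r in rows if r["d"] == 0]
    # Down-sample the majority arm, up-sample the minority arm.
    balanced = rng.choices(treated, k=n_per_arm) + rng.choices(control, k=n_per_arm)
    rng.shuffle(balanced)  # avoid all treated rows coming first
    return balanced


# Toy logged data: 75% treatment, 25% control.
rows = [{"id": i, "d": 1} for i in range(75)] + [{"id": i, "d": 0} for i in range(75, 100)]
balanced = balance_by_bootstrap(rows, n_per_arm=50)
n_treated = sum(r["d"] for r in balanced)
print(len(balanced), n_treated)
```

Because control rows are drawn with replacement, some appear more than once, which plays a role similar to up-weighting the less frequent treatment.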

The following figure compares the three policies described above (A/B vs MAB vs CB) in terms of their cumulative y values.

Figure 3: Cumulative Reward Comparison

As can be seen in this figure, cumulative y increases fastest for CB and slowest for A/B with MAB somewhere in between. While this result is based on a simulated dataset, the patterns observed here can still be generalized. The reason why A/B testing isn’t able to get a high cumulative y is because it isn’t changing the 60/40% allocation at all even after seeing sufficient evidence that treatment is better than control overall. On the other hand, while MAB is able to dynamically update this traffic allocation, it is still performing worse than CB because it isn’t personalizing the treatment vs control assignment based on the context X being observed. Finally, CB is both dynamically changing the traffic allocation and also personalizing the treatment, hence the superior performance.

Congratulations on making it to the end of this fairly long post! We covered a lot of ground related to contextual bandits in this post, and I hope that you leave this page with an appreciation of the usefulness of this fascinating method for online experimentation, especially when treatments need to be personalized.

If you are interested in learning more about contextual bandits (or want to go a step further into multi-step reinforcement learning), I highly recommend the book Mastering Reinforcement Learning with Python by E. Bilgin. The Contextual Bandit chapter of this book was what finally gave me the “aha!” moment in understanding this topic, and I kept on reading to learn more about RL in general. As far as offline policy evaluation is concerned, I highly recommend the posts by E. Conti and J. LeDoux, both of which provide great explanations of the techniques involved and provide code examples. Regarding debiasing in contextual bandits, the paper by A. Bietti, A. Agarwal, and J. Langford provides a great overview of the techniques involved. Finally, while this post exclusively focused on using regression models when building contextual bandits, there is an alternative approach called cost-sensitive classification, which you can start learning by checking out these lecture notes by A. Agarwal and S. Kakade [12].

Have fun building contextual bandits!

I would like to thank Colin Dickens for introducing me to contextual bandits as well as providing valuable feedback on this post, Xinyi Zhang for all her helpful feedback throughout the writing, Jiaqi Gu for a fruitful conversation on sampling methods, and Dennis Feehan for encouraging me to take the time to write this piece.

Unless otherwise noted, all images are by the author.

[1] Z. Zhao and T. Harinen, Uplift Modeling for Multiple Treatments with Cost Optimization (2019), DSAA

[2] Y. Narang, Recommender systems using LinUCB: A contextual multi-armed bandit approach (2020), Medium

[3] D. Russo, B. Van Roy, A. Kazerouni, I. Osband, and Z. Wen, A Tutorial on Thompson Sampling (2018), Foundations and Trends in Machine Learning

[4] B. Shahriari, K. Swersky, Z. Wang, R. Adams, and N. de Freitas, Taking the Human Out of the Loop: A Review of Bayesian Optimization (2015), IEEE

[5] E. Conti, Offline Policy Evaluation: Run fewer, better A/B tests (2021), Medium

[6] A. Bietti, A. Agarwal, and J. Langford, A Contextual Bandit Bake-off (2021), ArXiv

[7] L. Li, W. Chu, J. Langford, and X. Wang, Unbiased Offline Evaluation of Contextual-bandit-based News Article Recommendation Algorithms (2011), WSDM

[8] T. Mandel, Y. Liu, E. Brunskill, and Z. Popovic, Offline Evaluation of Online Reinforcement Learning Algorithms (2016), AAAI

[9] E. Bilgin, Mastering Reinforcement Learning with Python (2020), Packt Publishing

[10] J. LeDoux, Offline Evaluation of Multi-Armed Bandit Algorithms in Python using Replay (2020), LeDoux’s personal website

[11] J. LeDoux, Multi-Armed Bandits in Python: Epsilon Greedy, UCB1, Bayesian UCB, and EXP3 (2020), LeDoux’s personal website

[12] A. Agarwal and S. Kakade, Off-policy Evaluation and Learning (2019), University of Washington Computer Science Department

An Overview of Contextual Bandits was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

An Overview of Contextual Bandits

Go Here to Read this Fast! An Overview of Contextual Bandits

Inspired by an in-depth Medium article [1] with a case study on identifying bank customer segments with high churn reduction potential, this story explores a similar challenge through the lens of subgroup discovery methods [2]. Intrigued by the parallels, I applied a subgroup discovery approach to the same dataset and uncovered a segment with a 35% higher churn reduction potential — a significant improvement over what was previously reported. This story will take you through each step of the process, including building the methodology from the ground up. At the end of this journey, you’ll gain:

The complete code for PRIM and the experiment is on GitHub [3].

For the experiment, I’ve chosen my favorite subgroup discovery method: PRIM [4]. Despite its long presence in the field, PRIM has a unique mix of properties that make it very versatile:

In summary, PRIM’s straightforward logic not only makes it easy to implement, but also allows for customization.

PRIM works through two distinct phases: peeling and pasting. Peeling starts from a segment encompassing the entire dataset and gradually shrinks it while optimizing its quality. Pasting works similarly, but in the opposite direction — it tries to expand the selected candidate segment without quality loss. In our previous experiments [5], we observed that the pasting phase typically contributes minimally to the output quality. Therefore, I will focus on the peeling phase. The underlying logic of the peeling phase is as follows:

1. Initialize:
   - Set the peeling parameter (usually 0.05).
   - Set the initial box (segment) to encompass the entire data space.
   - Define the target quality function (e.g., a potential churn reduction).
2. While the stopping criterion is not met:
   - For each dimension of the data space:
     * Identify a small portion (defined by a peeling parameter) of the data to remove that maximizes quality of remaining data.
   - Update the box by removing the identified portion from the current box.
   - Update the dataset by removing the data points that fall outside the new box.
3. End when the stopping criterion is met (e.g., after a certain number of iterations or minimum number of data points remaining).
4. Return the final box and all the preceding boxes as candidate segments.

In this pseudo-code:

Consider simple examples of how PRIM handles numeric and categorical variables:

Numeric variables:

Imagine you have a numeric variable such as age. In each step of the peeling phase, PRIM looks at the range of that variable (say, age from 18 to 80). PRIM then “peels off” a portion of that range from either end, as defined by the peeling parameter. For example, it might remove ages 75 to 80 because doing so improves the target quality function in the remaining data (e.g., increasing the churn reduction potential). The animation below shows PRIM finding an interesting segment (with a high proportion of orange squares) in a 2D numeric dataset.
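A single peeling step on one numeric variable can be sketched as below. This is a simplified illustration rather than the implementation in [3]: it uses the mean of a binary target as the quality function (an assumption; the experiment uses a churn reduction measure), and the function name and toy data are hypothetical.

```python
def peel_numeric(values, targets, alpha=0.05):
    """Drop the lowest or highest alpha share of points, whichever leaves
    the remaining data with the higher mean target. Returns kept indices."""
    n = len(values)
    if n < 2:
        return list(range(n))  # nothing sensible to peel
    order = sorted(range(n), key=lambda i: values[i])
    k = min(max(1, int(alpha * n)), n - 1)  # number of points to peel
    keep_high = order[k:]    # candidate 1: remove the k smallest values
    keep_low = order[:-k]    # candidate 2: remove the k largest values

    def quality(idx):
        return sum(targets[i] for i in idx) / len(idx)

    return sorted(max((keep_high, keep_low), key=quality))


ages = [18, 25, 33, 41, 48, 52, 60, 67, 75, 80]
churn = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]
kept = peel_numeric(ages, churn, alpha=0.1)
print([ages[i] for i in kept])  # age 80 is peeled off in this toy example
```

Repeating this step across all variables, and keeping the cut that improves quality the most, gives the loop described in the pseudo-code.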

Categorical nominal variables:

Now consider a categorical nominal variable such as country, with categories such as Germany, France, and Spain. In the peeling phase, PRIM evaluates each category based on how well it improves the target quality function. It then removes the least promising category. For example, if removing “Germany” results in a subset where the target quality function is improved (such as a higher potential churn reduction), then all data points with “Germany” are “peeled”. Note that the peeling parameter has no effect on the processing of categorical data, which can cause undesired effects in some cases; I discuss this issue and provide a simple remedy in the section “Better segments via enforced ‘patience’”.

Categorical ordinal variables:

For ordinal variables, disjoint intervals in segment descriptions can sometimes be less intuitive. Consider an education variable with levels such as primary, secondary, vocational, bachelor, and graduate. Finding a rule like education in {primary, bachelor} may not fit well with the ordinal nature of the data because it combines non-adjacent categories. For those looking for a more coherent segmentation, such as education > secondary, that respects the natural order of the variable, using an ordinal encoding can be a useful workaround. For more insight into categorical encoding, you may find my earlier post [6] helpful, as it navigates you to the necessary information.

Now everything is ready to start the experiment. Following the Medium article on identifying unique data segments [1], I will apply the PRIM method to the Churn for Bank Customers [7] dataset from Kaggle, available under the CC0: Public Domain license. I will also adopt the target quality function from the article:

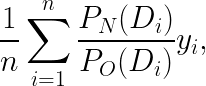

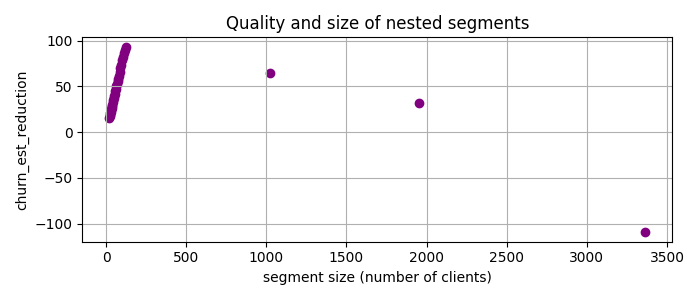

That is, I will look for the segments with many customers where the churn rate is much higher than the baseline, which is the average churn rate in the entire dataset. So I use PRIM, which gives me a set of nested candidate segments, and plot the churn_est_reduction against the number of clients.
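One plausible way to write such a quality function is the number of churned customers in the segment minus the number we would expect at the baseline churn rate. This exact form is my assumption based on the description above, not a transcription of the formula from [1], and the function name and numbers are hypothetical.

```python
def churn_est_reduction(churned_in_segment, segment_size, baseline_rate):
    """Churned customers in the segment beyond what the baseline rate predicts.

    Equivalent to (segment churn rate - baseline rate) * segment size.
    """
    return churned_in_segment - baseline_rate * segment_size


# Hypothetical segment: 1,000 customers, 500 churned, 20% baseline churn.
reduction = churn_est_reduction(500, 1000, 0.2)
print(reduction)
```

A measure of this shape rewards segments that are both large and far above the baseline, which matches the goal of finding many customers with an unusually high churn rate.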

The highest quality, churn_est_reduction = 457, is achieved for the 11th candidate segment with the description num_of_products < 2, is_active_member < 1, age > 37. This is quite an improvement over the previously reported maximum churn_est_reduction = 410 in [1]. Comparing the segment descriptions, I suspect that the main reason for this improvement is PRIM’s ability to handle numeric variables.

Something suspicious is going on in the previous plot. By its nature, PRIM is expected to be “patient”, i.e., to reduce the segment size only a little bit at each iteration. However, the second candidate segment is half the size of the previous one: PRIM has cut off half the data at once. The reason for this is the low cardinality of some features, which is often the case with categorical or indicator variables. For example, is_active_member only takes the values 0 or 1. PRIM can only cut off large chunks of data for such variables, giving them an unfair advantage.

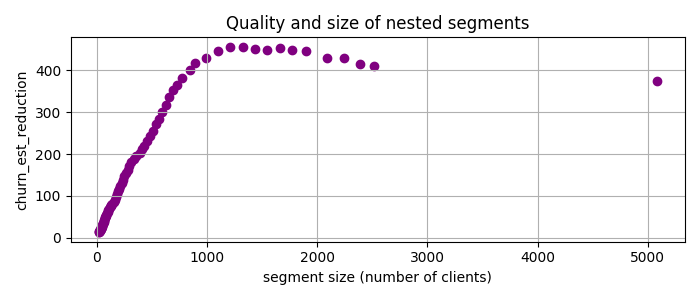

To address this issue, I’ve added an additional parameter called patience to give more weight to smaller cuts. Specifically, for the task at hand, I prioritize cuts by multiplying the churn rate reduction by the segment size raised to the power of patience. This approach helps to fine-tune the selection of segments based on their size, making it more tailored to our analysis needs. Applying PRIM with patience = 2 to the data yields the following candidate segments

Now the best candidate segment is num_of_products < 2, 37 < age < 64 with churn_est_reduction = 548, much better than any previous result!

Let us say we have selected the just discovered segment and asked one of two responsible teams to focus on it. Can PRIM find a job for the other team, i.e., find another group of clients, not in the first segment, with a high potential churn rate reduction? Yes, it can, with the so-called “covering” approach [4]. This means that one simply drops the clients belonging to the previously selected segment(s) from the dataset and applies PRIM once again. So I removed data with num_of_products < 2, 37 < age < 64 and applied PRIM to the rest:
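The covering loop itself is simple and can be sketched independently of PRIM. In the sketch below, `find_segment` stands in for any subgroup discovery routine that returns a membership test for its best segment; `toy_finder`, the `"group"` and `"churn"` keys, and the data are hypothetical and only keep the example self-contained.

```python
def covering(rows, find_segment, n_segments=2):
    """Repeatedly find a segment, then drop its rows before searching again."""
    remaining = list(rows)
    segments = []
    for _ in range(n_segments):
        if not remaining:
            break
        in_segment = find_segment(remaining)  # predicate: row -> bool
        segments.append(in_segment)
        remaining = [r for r in remaining if not in_segment(r)]
    return segments, remaining


def toy_finder(rows):
    """Stand-in for PRIM: pick the group with the highest churn rate."""
    groups = {}
    for r in rows:
        groups.setdefault(r["group"], []).append(r["churn"])
    best = max(groups, key=lambda g: sum(groups[g]) / len(groups[g]))
    return lambda r, g=best: r["group"] == g


rows = [{"group": "A", "churn": 1}, {"group": "A", "churn": 1},
        {"group": "B", "churn": 1}, {"group": "B", "churn": 0},
        {"group": "C", "churn": 0}, {"group": "C", "churn": 0}]
segments, remaining = covering(rows, toy_finder, n_segments=2)
print(len(segments), [r["group"] for r in remaining])
```

Each later segment is found on data that excludes the earlier ones, so the resulting segments never share clients.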

Here the best candidate segment is gender != ‘Male’, num_of_products > 2, balance > 0.0 with churn_est_reduction = 93.

To wrap things up, I illustrated PRIM’s strong performance on a Customer Churn Dataset for a task to find unusual segments. Points to note:

[1] Figuring out the most unusual segments in data

[2] Atzmueller, Martin. “Subgroup discovery.” Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 5.1 (2015): 35–49.

[3] My code for PRIM and the experiment

[4] Friedman, Jerome H., and Nicholas I. Fisher. “Bump hunting in high-dimensional data.” Statistics and computing 9.2 (1999): 123–143.

[5] Arzamasov, Vadim, and Klemens Böhm. “REDS: rule extraction for discovering scenarios.” Proceedings of the 2021 International Conference on Management of Data. 2021.

[6] Categorical Encoding: Key Insights

[7] Churn for Bank Customers dataset

[8] Patient Rule Induction Method for Python

[9] Patient Rule Induction Method for R

Find Unusual Segments in Your Data with Subgroup Discovery was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Find Unusual Segments in Your Data with Subgroup Discovery

Go Here to Read this Fast! Find Unusual Segments in Your Data with Subgroup Discovery

Learn how your Text-to-SQL LLM app may be vulnerable to Prompt Injections, and mitigation measures you could adopt to protect your data

Originally appeared here:

Text-to-SQL LLM Applications: Prompt Injections

Go Here to Read this Fast! Text-to-SQL LLM Applications: Prompt Injections

And 5 ways to use it in data science and machine learning

Originally appeared here:

Python’s Most Powerful Decorator

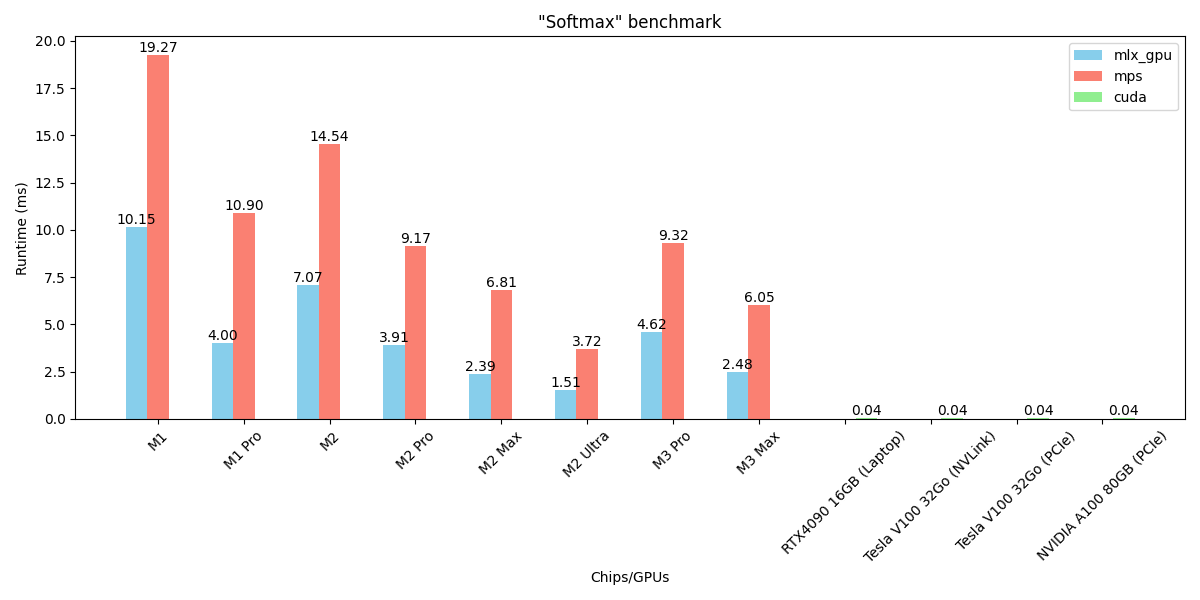

A benchmark of the main operations and layers on MLX, PyTorch MPS and CUDA GPUs.

Originally appeared here:

How Fast Is MLX? A Comprehensive Benchmark on 8 Apple Silicon Chips and 4 CUDA GPUs

Bridging the gap: Brain-inspired solutions to address catastrophic forgetting in artificial neural networks

Originally appeared here:

How the Brain and AI Overcome Forgetting

Go Here to Read this Fast! How the Brain and AI Overcome Forgetting

A prototype tool powered by Large Language Models to make querying your databases as easy as saying the word.

Originally appeared here:

QueryGPT — Harnessing Generative AI To Query Your Data With Natural Language.