Go here to Read this Fast! Runes volume rises with Bitcoin gaining meme coin utility

Originally appeared here:

Runes volume rises with Bitcoin gaining meme coin utility

Go here to Read this Fast! Renzo team changes airdrop conditions amid community criticism

Originally appeared here:

Renzo team changes airdrop conditions amid community criticism

Go here to Read this Fast! Trader claims Bitcoin algo netted $71k profits, community disagrees

Originally appeared here:

Trader claims Bitcoin algo netted $71k profits, community disagrees

Shiba Inu reclaimed a support zone that had been in place since mid-March.

The increased buying pressure could see the token begin its recovery.

Shiba Inu [SHIB] was trending downward in the

The post Shiba Inu: Reason to expect an 18% price hike is… appeared first on AMBCrypto.

Go here to Read this Fast! Shiba Inu: Reason to expect an 18% price hike is…

Originally appeared here:

Shiba Inu: Reason to expect an 18% price hike is…

Amidst the crypto market’s volatility, BlockDAG’s staggering 30,000X ROI potential and the buzz surrounding its moon-shot keynote have caught the attention of Ethereum Classic investors. This comes as

The post BlockDAG’s 30,000X ROI potential & Moon-shot keynote draw Ethereum Classic investors appeared first on AMBCrypto.

Amidst fluctuating markets, the Shiba Inu (SHIB) price showcases potential growth. Concurrently, AVAX seeks stability, with its price prediction wavering after recent dips. In contrast, BlockDAG captures attention with its remarkable presale success exceeding $20.6 million, fueled by the efficient and beginner-friendly X10 crypto mining rigs. This advancement, coupled with the excitement from its recent […]

My team and I (Sandi Besen, Tula Masterman, Mason Sawtell, and Alex Chao) recently published a survey research paper that offers a comprehensive look at the current state of AI agent architectures. As co-authors of this work, we set out to uncover the key design elements that enable these autonomous systems to effectively execute complex goals.

This paper serves as a resource for researchers, developers, and anyone interested in staying updated on the cutting-edge progress in the field of AI agent technologies.

Read the full meta-analysis on arXiv

Since the launch of ChatGPT, the initial wave of generative AI applications has largely revolved around chatbots that utilize the Retrieval Augmented Generation (RAG) pattern to respond to user prompts. While there is ongoing work to enhance the robustness of these RAG-based systems, the research community is now exploring the next generation of AI applications — a common theme being the development of autonomous AI agents.

Agentic systems incorporate advanced capabilities like planning, iteration, and reflection, which leverage the model's inherent reasoning abilities to accomplish tasks end-to-end. When paired with the ability to use tools, plugins, and function calls, agents can tackle a wider range of general-purpose work.

Reasoning is a foundational building block of the human mind. Without reasoning, we would not be able to make decisions, solve problems, or refine plans when we learn new information; in essence, we would misunderstand the world around us. Similarly, if agents don't have strong reasoning skills, they might misunderstand their task, generate nonsensical answers, or fail to consider multi-step implications.

We find that most agent implementations contain a planning phase which invokes one of the following techniques to create a plan: task decomposition, multi-plan selection, external module-aided planning, reflection and refinement, and memory-augmented planning [1].

Another benefit of utilizing an agent implementation over just a base language model is the agent's ability to solve complex problems by calling tools. Tools can enable an agent to execute actions such as interacting with APIs, writing to third-party applications, and more. Reasoning and tool calling are closely intertwined, and effective tool calling depends on adequate reasoning. Put simply, you can't expect an agent with poor reasoning abilities to understand when it is appropriate to call its tools.
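To make the tool-calling step concrete, here is a minimal sketch assuming the model emits its tool choice as a JSON object with `name` and `arguments` keys. That format, the tools, and the `dispatch_tool_call` helper are hypothetical illustrations, not something prescribed by the paper.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tools; in a real agent these would wrap APIs or third-party apps.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def add(a: float, b: float) -> float:
    return a + b


TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather, "add": add}


def dispatch_tool_call(raw_call: str) -> Any:
    """Parse a JSON tool call emitted by the model and run the matching tool."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        # An agent that reasons poorly may request a tool that does not exist.
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


print(dispatch_tool_call('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # 5
```

Deciding *which* JSON to emit is the part that depends on reasoning; the dispatch itself is mechanical, which is why tool-calling quality tracks reasoning quality.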

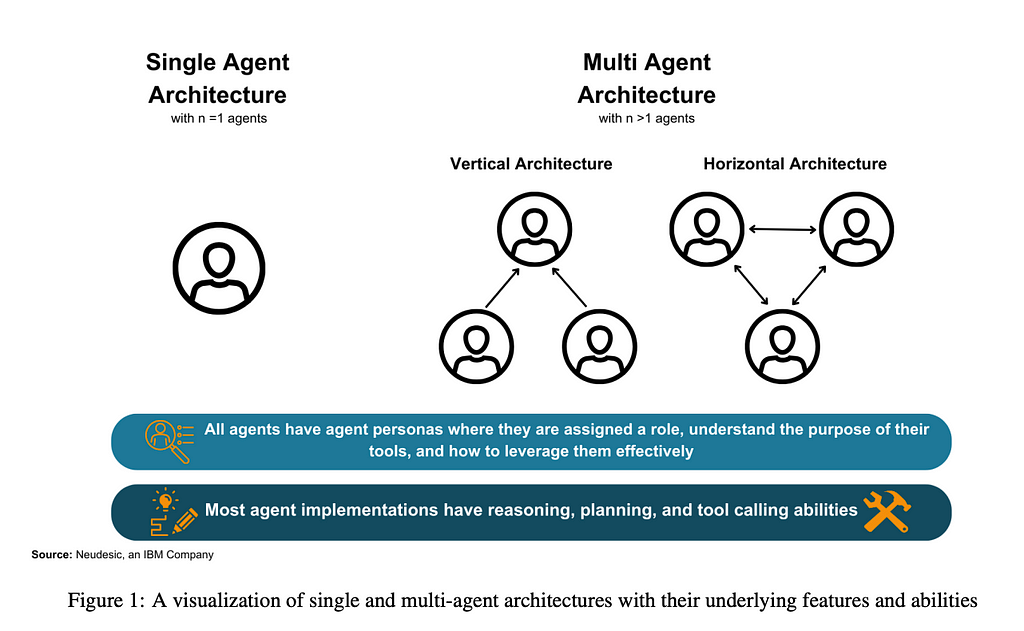

Our findings emphasize that both single-agent and multi-agent architectures can be used to solve challenging tasks by employing reasoning and tool calling steps.

For single agent implementations, we find that successful goal execution is contingent upon proper planning and self-correction [1, 2, 3, 4]. Without the ability to self-evaluate and create effective plans, single agents may get stuck in an endless execution loop and never accomplish a given task or return a result that does not meet user expectations [2]. We find that single agent architectures are especially useful when the task requires straightforward function calling and does not need feedback from another agent.
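The endless-loop failure mode is commonly guarded against with an explicit step budget. Below is a minimal, framework-free sketch of an act, self-evaluate, and refine loop in the spirit of the reflection-based methods cited above. `act` and `evaluate` stand in for LLM calls, and, like `run_single_agent`, they are hypothetical names rather than any cited system's API.

```python
from typing import Callable, List, Tuple


def run_single_agent(
    task: str,
    act: Callable[[str, List[str]], str],
    evaluate: Callable[[str, str], Tuple[bool, str]],
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    """Act, self-evaluate, and refine until the critique passes or the budget runs out."""
    critiques: List[str] = []
    answer = ""
    for _ in range(max_steps):
        answer = act(task, critiques)          # produce or revise an answer
        ok, critique = evaluate(task, answer)  # self-evaluation step
        if ok:
            return answer, critiques
        critiques.append(critique)             # feed the critique into the next attempt
    # Budget exhausted: stop instead of looping forever; the caller can flag this result.
    return answer, critiques


# Toy stand-ins: the "model" improves once per critique, and the evaluator accepts v2.
def toy_act(task: str, critiques: List[str]) -> str:
    return f"draft v{len(critiques)}"


def toy_eval(task: str, answer: str) -> Tuple[bool, str]:
    return answer.endswith("v2"), f"{answer} is not good enough"


answer, notes = run_single_agent("reply to email", toy_act, toy_eval)
print(answer, len(notes))  # draft v2 2
```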

However, we note that single agent patterns often struggle to complete a long sequence of subtasks or tool calls [5, 6]. Multi-agent patterns can address issues of parallelism and robustness, since multiple agents within the architecture can work on individual subproblems. Many multi-agent patterns start by taking a complex problem and breaking it down into several smaller tasks. Then, each agent works independently on its task using its own set of tools.
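The decompose-then-distribute pattern can be sketched in a few lines. This is an illustration under simple assumptions: decomposition just splits on `;` (an LLM planner would do this in practice), subtasks are assigned round-robin, and each `Agent` "solves" by calling its own tool. All names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    name: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def solve(self, subtask: str) -> str:
        # A real agent would reason with an LLM; here we just call its first tool.
        tool_name, tool = next(iter(self.tools.items()))
        return f"{self.name} used {tool_name}: {tool(subtask)}"


def decompose(problem: str) -> List[str]:
    # Hypothetical decomposition: split on ";" and drop empty pieces.
    return [part.strip() for part in problem.split(";") if part.strip()]


def solve_in_parallel(problem: str, agents: List[Agent]) -> List[str]:
    subtasks = decompose(problem)
    # Each agent works independently, with its own tools, on its assigned subtask.
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = [
            pool.submit(agents[i % len(agents)].solve, sub)
            for i, sub in enumerate(subtasks)
        ]
        return [f.result() for f in futures]


researcher = Agent("researcher", {"search": lambda q: f"results for '{q}'"})
writer = Agent("writer", {"draft": lambda q: q.upper()})
for line in solve_in_parallel("find prices; summarize findings", [researcher, writer]):
    print(line)
# researcher used search: results for 'find prices'
# writer used draft: SUMMARIZE FINDINGS
```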

Architectures involving multiple agents present an opportunity for intelligent labor division based on capabilities as well as valuable feedback from diverse agent personas. Numerous multi-agent architectures operate in stages where teams of agents are dynamically formed and reorganized for each planning, execution, and evaluation phase [7, 8, 9]. This reorganization yields superior outcomes because specialized agents are utilized for specific tasks and removed when no longer required. By matching agent roles and skills to the task at hand, agent teams can achieve greater accuracy and reduce the time needed to accomplish the goal. Crucial features of effective multi-agent architectures include clear leadership within agent teams, dynamic team construction, and efficient information sharing among team members to prevent important information from getting lost amidst superfluous communication.
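As a rough illustration of per-phase team formation with a designated leader, the sketch below recruits agents by matching skills to each phase's requirements. The roster, skill tags, and leader rule (first recruit leads) are hypothetical choices for this example, not the mechanism of DyLAN, AgentVerse, or any other cited system.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Union


@dataclass(frozen=True)
class AgentProfile:
    name: str
    skills: FrozenSet[str]


# Hypothetical roster and phase requirements, for illustration only.
ROSTER: List[AgentProfile] = [
    AgentProfile("planner", frozenset({"planning"})),
    AgentProfile("coder", frozenset({"execution", "python"})),
    AgentProfile("tester", frozenset({"evaluation", "python"})),
    AgentProfile("critic", frozenset({"evaluation"})),
]
PHASE_REQUIREMENTS: Dict[str, Set[str]] = {
    "planning": {"planning"},
    "execution": {"execution"},
    "evaluation": {"evaluation"},
}


def form_team(phase: str, roster: List[AgentProfile]) -> Dict[str, Union[str, List[str]]]:
    """Recruit only agents whose skills match this phase; the rest sit it out."""
    needed = PHASE_REQUIREMENTS[phase]
    members = [agent.name for agent in roster if agent.skills & needed]
    if not members:
        raise ValueError(f"No agent can handle phase {phase!r}")
    # Name the first recruit as leader so task assignment has a single owner.
    return {"leader": members[0], "members": members}


for phase in ["planning", "execution", "evaluation"]:
    team = form_team(phase, ROSTER)
    print(phase, team["leader"], team["members"])
# planning planner ['planner']
# execution coder ['coder']
# evaluation tester ['tester', 'critic']
```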

Our research highlights notable single agent methods such as ReAct, RAISE, Reflexion, AutoGPT + P, and LATS, and multi-agent implementations such as DyLAN, AgentVerse, and MetaGPT, which are explained in more depth in the full text.

Single Agent Patterns:

Single agent patterns are generally best suited for tasks with a narrowly defined list of tools and where processes are well-defined. They don’t face poor feedback from other agents or distracting and unrelated chatter from other team members. However, single agents may get stuck in an execution loop and fail to make progress towards their goal if their reasoning and refinement capabilities aren’t robust.

Multi Agent Patterns:

Multi agent patterns are well-suited for tasks where feedback from multiple personas is beneficial in accomplishing the task. They are useful when parallelization across distinct tasks or workflows is required, allowing individual agents to proceed with their next steps without being hindered by the state of tasks handled by others.

Feedback and Human in the Loop

Language models tend to commit to an answer earlier in their response, which can cause a ‘snowball effect’ of increasing diversion from their goal state [10]. By implementing feedback, agents are much more likely to correct their course and reach their goal. Human oversight improves the immediate outcome by aligning the agent’s responses more closely with human expectations, yielding more reliable and trustworthy results [11, 8]. Agents can be susceptible to feedback from other agents, even if the feedback is not sound. This can lead the agent team to generate a faulty plan which diverts them from their objective [12].

Information Sharing and Communication

Multi-agent patterns have a greater tendency to get caught up in niceties and ask one another things like “how are you”, while single agent patterns tend to stay focused on the task at hand since there is no team dynamic to manage. This can be mitigated by robust prompting. In vertical architectures, agents can fail to send critical information to their supporting agents not realizing the other agents aren’t privy to necessary information to complete their task. This failure can lead to confusion in the team or hallucination in the results. One approach to address this issue is to explicitly include information about access rights in the system prompt so that the agents have contextually appropriate interactions.
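To illustrate the access-rights suggestion, here is a minimal sketch of a system-prompt builder that states the agent's role, its allowed tools, and what each teammate can already see. The template wording and parameter names are assumptions made for this example, not prompts taken from the paper.

```python
from typing import Dict, List


def build_system_prompt(
    role: str,
    task: str,
    tools: List[str],
    teammate_access: Dict[str, List[str]],
) -> str:
    """Compose a system prompt stating the agent's role, its tools, and what teammates can see."""
    lines = [
        f"You are the {role}. Your task: {task}",
        f"Only use these tools: {', '.join(tools)}.",
        "Your teammates cannot see your context. They have access to:",
    ]
    for teammate, items in teammate_access.items():
        visible = ", ".join(items) if items else "only the messages you send them"
        lines.append(f"- {teammate}: {visible}")
    lines.append("When delegating, include any information a teammate lacks.")
    return "\n".join(lines)


prompt = build_system_prompt(
    role="team lead",
    task="answer the customer's refund request",
    tools=["lookup_order", "send_message"],
    teammate_access={"researcher": ["order database"], "writer": []},
)
print(prompt)
```

Spelling out visibility this way also doubles as role definition: the agent is told what it is, what it may use, and what it must pass along.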

Impact of Role Definition and Dynamic Teams

Clear role definition is critical for both single and multi-agent architectures. Role definition ensures that agents understand their assigned role, stay focused on the provided task, execute the proper tools, and minimize hallucination of other capabilities. Establishing a clear group leader improves the overall performance of multi-agent teams by streamlining task assignment. Dynamic teams, where agents are brought in and out of the system based on need, have also been shown to be effective. This ensures that all agents participating in the tasks are strong contributors.

Summary of Key Insights

The key insights discussed suggest that the best agent architecture varies based on use case. Regardless of the architecture selected, the best performing agent systems tend to incorporate at least one of the following approaches: well-defined system prompts, clear leadership and task division, dedicated reasoning/planning, execution, and evaluation phases, dynamic team structures, human or agentic feedback, and intelligent message filtering. Architectures that leverage these techniques are more effective across a variety of benchmarks and problem types.

Our meta-analysis aims to provide a holistic understanding of the current AI agent landscape and offer insight for those building with existing agent architectures or developing custom agent architectures. There are notable limitations and areas for future improvement in the design and development of autonomous AI agents such as a lack of comprehensive agent benchmarks, real world applicability, and the mitigation of harmful language model biases. These areas will need to be addressed in the near-term to enable reliable agents.

Note: The opinions expressed both in this article and paper are solely those of the authors and do not necessarily reflect the views or policies of their respective employers.

If you still have questions or think something needs further clarification, drop me a DM on LinkedIn! I'm always eager to engage in food for thought and iterate on my work.

References

[1] Timo Birr et al. AutoGPT+P: Affordance-based Task Planning with Large Language Models. arXiv:2402.10778 [cs] version: 1. Feb. 2024. URL: http://arxiv.org/abs/2402.10778.

[2] Shunyu Yao et al. ReAct: Synergizing Reasoning and Acting in Language Models. arXiv:2210.03629 [cs]. Mar. 2023. URL: http://arxiv.org/abs/2210.03629.

[3] Na Liu et al. From LLM to Conversational Agent: A Memory Enhanced Architecture with Fine-Tuning of Large Language Models. arXiv:2401.02777 [cs]. Jan. 2024. URL: http://arxiv.org/abs/2401.02777.

[4] Noah Shinn et al. Reflexion: Language Agents with Verbal Reinforcement Learning. arXiv:2303.11366 [cs]. Oct. 2023. URL: http://arxiv.org/abs/2303.11366.

[5] Zhengliang Shi et al. Learning to Use Tools via Cooperative and Interactive Agents. arXiv:2403.03031 [cs]. Mar. 2024. URL: http://arxiv.org/abs/2403.03031.

[6] Silin Gao et al. Efficient Tool Use with Chain-of-Abstraction Reasoning. arXiv:2401.17464 [cs]. Feb. 2024. URL: http://arxiv.org/abs/2401.17464.

[7] Weize Chen et al. AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors. arXiv:2308.10848 [cs]. Oct. 2023. URL: http://arxiv.org/abs/2308.10848.

[8] Xudong Guo et al. Embodied LLM Agents Learn to Cooperate in Organized Teams. arXiv:2403.12482 [cs.AI]. 2024. URL: http://arxiv.org/abs/2403.12482.

[9] Zijun Liu et al. Dynamic LLM-Agent Network: An LLM-agent Collaboration Framework with Agent Team Optimization. arXiv:2310.02170 [cs.CL]. 2023. URL: http://arxiv.org/abs/2310.02170.

[10] Muru Zhang et al. How Language Model Hallucinations Can Snowball. arXiv:2305.13534 [cs]. May 2023. URL: http://arxiv.org/abs/2305.13534.

[11] Xueyang Feng et al. Large Language Model-based Human-Agent Collaboration for Complex Task Solving. arXiv:2402.12914 [cs.CL]. 2024. URL: http://arxiv.org/abs/2402.12914.

[12] Weize Chen et al. AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors. arXiv:2308.10848 [cs]. Oct. 2023. URL: http://arxiv.org/abs/2308.10848.

The Landscape of Emerging AI Agent Architectures for Reasoning, Planning, and Tool Calling: A… was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

The Landscape of Emerging AI Agent Architectures for Reasoning, Planning, and Tool Calling: A…

In this tutorial, I will demonstrate how to use Burr, an open source framework (disclosure: I helped create it), together with simple OpenAI client calls to GPT-4 and FastAPI, to create a custom email assistant agent. We'll describe the challenges one faces and then how you can solve them. For the application frontend we provide a reference implementation but won't dive into its details.

LLMs rarely achieve complex goals on their own, and almost never on the first try. While it is in vogue to claim that ChatGPT given an internet connection can solve the world's problems, the majority of high-value tools we've encountered use a blend of AI ingenuity and human guidance. This is part of the general move towards building agents: an approach where the AI makes decisions based on information it receives, whether that is information it queries, information a user provides, or information another LLM gives it.

A simple example of this is a tool to help you draft a response to an email. You provide the email and your response goals, and it writes the response for you. At a minimum, you'll want to provide feedback so it can adjust the response. Furthermore, you will want to give it a chance to ask clarifying questions (an overly confident yet incorrect chatbot helps no one).

In designing this interaction, your system will, inevitably, become a back-and-forth between user/LLM control. In addition to the standard challenges around AI applications (unreliable APIs, stochastic implementations, etc…), you will face a suite of new problems, including:

And so on… In this post we'll walk through how to approach these problems. We'll use the Burr library along with FastAPI to build a web service that addresses them in an extensible, modular manner, so you can use it as a blueprint for your own agent assistant needs.

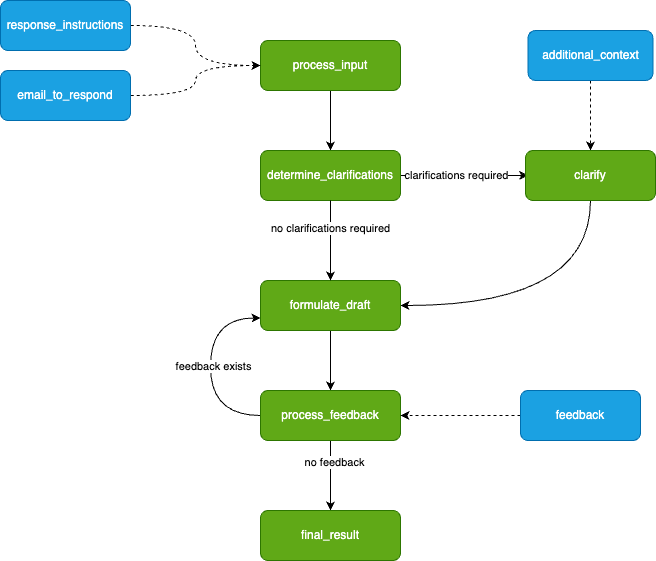

Burr is a lightweight Python library you use to build applications as state machines. You construct your application out of a series of actions (either decorated functions or objects), which declare the inputs they read from state as well as inputs from the user. Actions specify custom logic (delegating to any framework) along with instructions on how to update state. State is immutable, which allows you to inspect it at any given point. Burr handles orchestration, monitoring, and persistence.

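```python
from typing import Tuple

from burr.core import State, action

@action(reads=["counter"], writes=["counter"])
def count(state: State) -> Tuple[dict, State]:
    current = state["counter"] + 1  # read the counter from state and increment it
    result = {"counter": current}
    # Return the result plus a new (immutable) state with the updated counter
    return result, state.update(counter=current)
```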

Note that the action above has two returns — the results (the counter), and the new, modified state (with the counter field incremented).
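To see why returning a *new* state matters, here is a stdlib-only sketch of the idea. `ImmutableState` is a hypothetical toy, not Burr's implementation: each `update` produces a fresh snapshot and leaves the old one untouched, which is what makes inspecting state at any step possible.

```python
from types import MappingProxyType
from typing import Any, Mapping, Optional


class ImmutableState:
    """Toy immutable state: update() returns a new object, the old one is untouched."""

    def __init__(self, data: Optional[Mapping[str, Any]] = None) -> None:
        # Read-only view over a private copy; item assignment raises TypeError.
        # (Shallow: a mutable value such as a list stored inside could still be mutated.)
        self._data = MappingProxyType(dict(data or {}))

    def __getitem__(self, key: str) -> Any:
        return self._data[key]

    def update(self, **changes: Any) -> "ImmutableState":
        return ImmutableState({**self._data, **changes})


s0 = ImmutableState({"counter": 0})
s1 = s0.update(counter=s0["counter"] + 1)
print(s0["counter"], s1["counter"])  # 0 1
```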

You run your Burr actions as part of an application — this allows you to string them together with a series of (optionally) conditional transitions from action to action.

```python
from burr.core import ApplicationBuilder, default, expr

app = (
    ApplicationBuilder()
    .with_state(counter=0)  # initialize the count to zero
    .with_actions(
        count=count,
        done=done,  # implementation left out above
    )
    .with_transitions(
        ("count", "count", expr("counter < 10")),  # keep counting if the counter is less than 10
        ("count", "done", default),  # otherwise, we're done
    )
    .with_entrypoint("count")  # we have to start somewhere
    .build()
)
```
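Our reading of the builder above is that transitions out of an action are checked in the order they are listed, and the first one whose condition holds is taken (so `default` acts as the fallback). The dependency-free sketch below mimics that rule for the counter app under this assumption; it is an illustration, not Burr's code, and the names are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

StateDict = Dict[str, Any]
Transition = Tuple[str, str, Callable[[StateDict], bool]]


def count(state: StateDict) -> StateDict:
    return {**state, "counter": state["counter"] + 1}


ACTIONS: Dict[str, Callable[[StateDict], StateDict]] = {"count": count}

# Mirrors the counter app: loop while counter < 10, otherwise go to "done".
TRANSITIONS: List[Transition] = [
    ("count", "count", lambda s: s["counter"] < 10),
    ("count", "done", lambda s: True),  # plays the role of `default`
]


def run(entrypoint: str, state: StateDict, halt_at: str = "done") -> StateDict:
    current = entrypoint
    while current != halt_at:
        state = ACTIONS[current](state)
        # The first transition out of `current` whose condition holds wins.
        current = next(dst for src, dst, cond in TRANSITIONS if src == current and cond(state))
    return state


print(run("count", {"counter": 0}))  # {'counter': 10}
```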

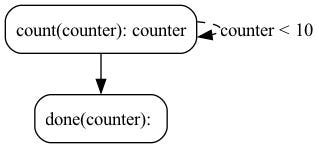

Burr comes with a user-interface that enables monitoring/telemetry, as well as hooks to persist state/execute arbitrary code during execution.

You can visualize this as a flow chart, i.e. graph / state machine:

And monitor it using the local telemetry debugger:

While we showed the (very simple) counter example above, Burr is more commonly used for building chatbots/agents (we’ll be going over an example in this post).

FastAPI is a framework that lets you expose Python functions in a REST API. It has a simple interface: you write your functions, decorate them, and run your script, turning it into a server with self-documenting endpoints through OpenAPI.

```python
from typing import Union

from fastapi import FastAPI

app = FastAPI()

@app.get("/")
def read_root():
    return {"Hello": "World"}

@app.get("/items/{item_id}")
def read_item(item_id: int, q: Union[str, None] = None):
    """A very simple example of an endpoint that takes in arguments."""
    return {"item_id": item_id, "q": q}
```

FastAPI is easy to deploy on any cloud provider. It is infrastructure-agnostic and can generally scale horizontally, as long as state management is handled with care. See this page for more information.

You can use any frontend framework you want. React-based tooling, however, has a natural advantage, as it models everything as a function of state, which maps 1:1 onto the concept in Burr. In the demo app we use React, react-query, and Tailwind, but we'll largely skip over this (it is not central to the purpose of the post).

Let’s dig a bit more into the conceptual model. At a high-level, our email assistant will do the following:

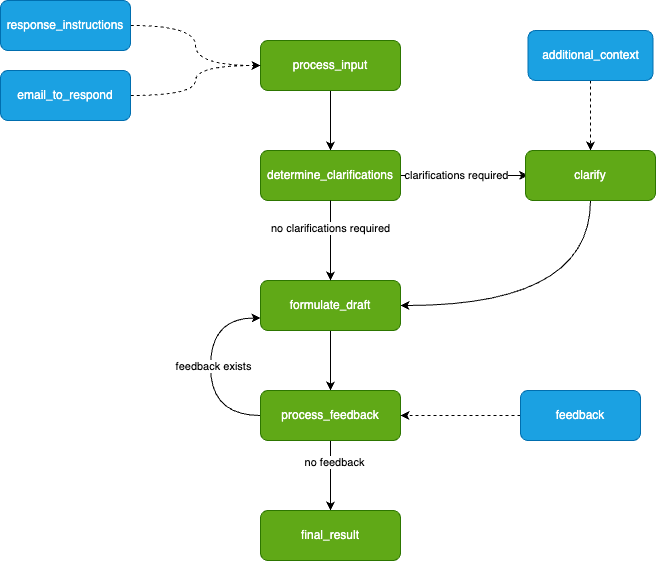

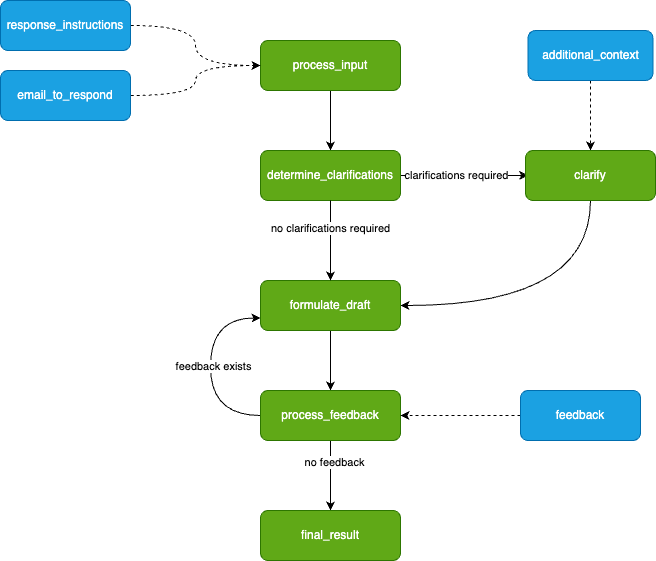

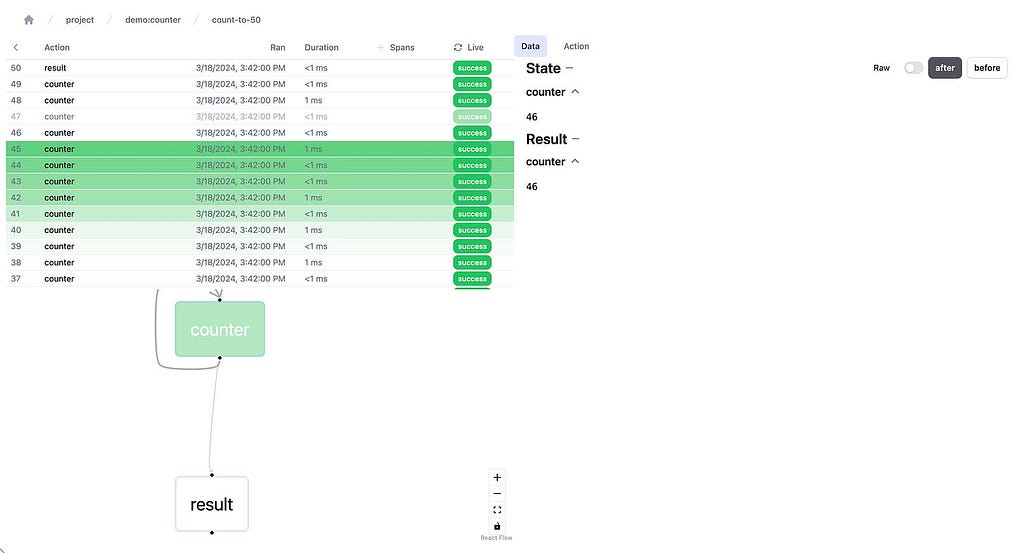

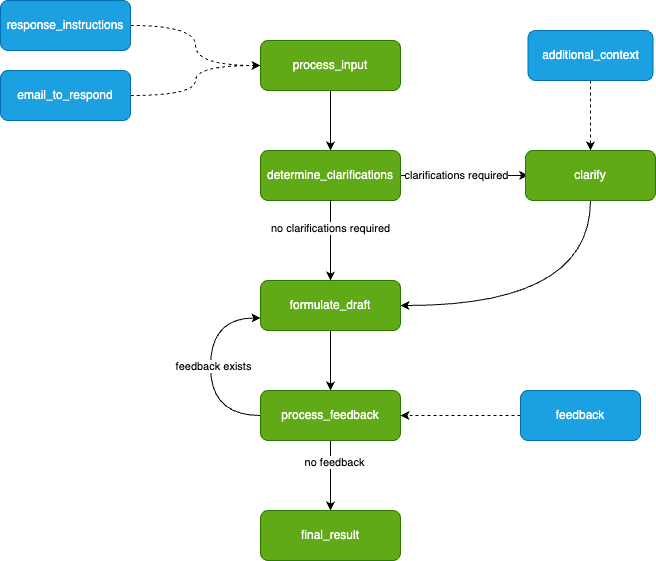

As Burr requires you to build a control flow from actions and transitions, we can initially model this as a simple flowchart.

We drafted this before actually writing any code — you will see it transforms to code naturally.

The green nodes represent actions (these take state in and modify it), and the blue nodes represent inputs (these are points at which the app has to pause and ask the user for information). Note that there is a loop (formulate_draft ⇔ process_feedback): we iterate on feedback until we're happy with the results.

This diagram is simply a stylized version of what Burr shows you — the modeling is meant to be close to the actual code. We have not displayed state information (the data the steps take in/return), but we’ll need to track the following (that may or may not be populated at any given point) so we can make decisions about what to do next:

Looking at the requirements above, we can build a straightforward Burr application, since our code can closely match the diagram above. Let's take a look at the determine_clarifications step, for example:

```python
from typing import Tuple

from burr.core import State, action

@action(
    reads=["response_instructions", "incoming_email"],
    writes=["clarification_questions"],
)
def determine_clarifications(state: State) -> Tuple[dict, State]:
    """Determines if the response instructions require clarification."""
    incoming_email = state["incoming_email"]
    response_instructions = state["response_instructions"]
    client = _get_openai_client()  # helper from the full example, not shown here
    result = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {
                "role": "system",
                "content": (
                    "You are a chatbot that has the task of "
                    "generating responses to an email on behalf "
                    "of a user. "
                ),
            },
            {
                "role": "user",
                "content": (
                    f"The email you are to respond to is: {incoming_email}."
                    # ... left out, see link above
                    "The questions, joined by newlines, must be the only "
                    "text you return. If you do not need clarification, "
                    "return an empty string."
                ),
            },
        ],
    )
    content = result.choices[0].message.content
    # One question per line; an empty response means no clarification is needed
    all_questions = content.split("\n") if content else []
    return {"clarification_questions": all_questions}, state.update(
        clarification_questions=all_questions
    )
```
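One hedged refinement: splitting the raw response on newlines keeps empty strings for blank or trailing lines, so a response of just `"\n"` yields `['', '']` and would trip the `len(clarification_questions) > 0` transition even though there are no real questions. A small helper (the name `parse_questions` is hypothetical) that drops blank lines avoids that:

```python
from typing import List, Optional


def parse_questions(content: Optional[str]) -> List[str]:
    """Split model output into questions, dropping blank lines and stray whitespace."""
    if not content:
        return []
    return [line.strip() for line in content.splitlines() if line.strip()]


print(parse_questions("What tone?\n\nWho should be copied?\n"))  # ['What tone?', 'Who should be copied?']
print(parse_questions("\n"))  # []
```

Inside the action, `all_questions = parse_questions(content)` would replace the split.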

Note that this uses simple OpenAI calls. You can replace them with LangChain, LlamaIndex, Hamilton (or something else) if you prefer more abstraction, and delegate to whatever LLM you like. You should probably also use something a little more robust (e.g., Instructor) to guarantee the output shape.

To tie these together, we put them into the application builder. This allows us to set conditional transitions (e.g., `len(clarification_questions) > 0`) and therefore connect actions, recreating the diagram above.

```python
application = (
    ApplicationBuilder()
    # define our actions
    .with_actions(
        process_input,
        determine_clarifications,
        clarify_instructions,
        formulate_draft,
        process_feedback,
        final_result,
    )
    # define how our actions connect
    .with_transitions(
        ("process_input", "determine_clarifications"),
        (
            "determine_clarifications",
            "clarify_instructions",
            expr("len(clarification_questions) > 0"),
        ),
        ("determine_clarifications", "formulate_draft"),
        ("clarify_instructions", "formulate_draft"),
        ("formulate_draft", "process_feedback"),
        ("process_feedback", "formulate_draft", expr("len(feedback) > 0")),
        ("process_feedback", "final_result"),
    )
    .with_state(draft_history=[])
    .with_entrypoint("process_input")
    .build()
)
```

To iterate on this, we used a Jupyter notebook. Running our application is simple: all you do is call the .run() method on the Application with the right halting conditions. We want it to halt before any action that requires user input (clarify_instructions and process_feedback), and after final_result. We can then run it in a while loop, asking for user input and feeding it back to the state machine:

```python
def request_answers(questions):
    """Requests answers from the user for the questions the LLM has"""
    answers = []
    print("The email assistant wants more information:\n")
    for question in questions:
        answers.append(input(question))
    return answers


def request_feedback(draft):
    """Requests feedback from the user for a draft"""
    print(
        f"here's a draft!: \n {draft} \n \n What feedback do you have?",
    )
    return input("Write feedback or leave blank to continue (if you're happy)")


# EMAIL and INSTRUCTIONS are assumed to be defined in an earlier cell
inputs = {
    "email_to_respond": EMAIL,
    "response_instructions": INSTRUCTIONS,
}

# in our notebook cell:
while True:
    action, result, state = application.run(
        halt_before=["clarify_instructions", "process_feedback"],
        halt_after=["final_result"],
        inputs=inputs,
    )
    if action.name == "clarify_instructions":
        questions = state["clarification_questions"]
        answers = request_answers(questions)
        inputs = {"clarification_inputs": answers}
    elif action.name == "process_feedback":
        feedback = request_feedback(state["current_draft"])
        inputs = {"feedback": feedback}
    elif action.name == "final_result":
        print("final result is:", state["current_draft"])
        break
```
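The halting behavior can be hard to picture from the notebook loop alone. Below is a dependency-free simulation of our understanding of it: `run` stops before any action in `halt_before` (except the one it is resuming on) and after any action in `halt_after`. It is not Burr's implementation; the clarification branch is dropped for brevity, the actions are stubs, and all names are hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, List, Tuple

StateDict = Dict[str, Any]

# Hypothetical stand-ins for the real actions (no LLM calls).
ACTIONS: Dict[str, Callable[[StateDict, StateDict], StateDict]] = {
    "process_input": lambda s, i: {**s, **i, "drafts": []},
    "formulate_draft": lambda s, i: {**s, "drafts": s["drafts"] + [f"draft {len(s['drafts']) + 1}"]},
    "process_feedback": lambda s, i: {**s, "feedback": i.get("feedback", "")},
    "final_result": lambda s, i: s,
}

# Same shape as the email assistant's transitions, minus the clarification branch.
TRANSITIONS: List[Tuple[str, str, Callable[[StateDict], bool]]] = [
    ("process_input", "formulate_draft", lambda s: True),
    ("formulate_draft", "process_feedback", lambda s: True),
    ("process_feedback", "formulate_draft", lambda s: len(s["feedback"]) > 0),
    ("process_feedback", "final_result", lambda s: True),
]


def run(position: str, state: StateDict, inputs: StateDict,
        halt_before: Iterable[str], halt_after: Iterable[str]) -> Tuple[str, StateDict]:
    """Run until about to execute a halt_before action (other than the one we
    resume on) or right after executing a halt_after action."""
    halt_before, halt_after = set(halt_before), set(halt_after)
    resuming = True
    while True:
        if position in halt_before and not resuming:
            return position, state  # pause: the caller must collect user input
        state = ACTIONS[position](state, inputs)
        inputs = {}  # simplification: only the first action of a run sees the inputs
        if position in halt_after:
            return position, state
        position = next(dst for src, dst, cond in TRANSITIONS if src == position and cond(state))
        resuming = False


# Simulated user: asks for one revision, then leaves feedback blank.
feedback_queue = ["make it shorter", ""]
position, state, inputs = "process_input", {}, {"email": "Hi!"}
while True:
    position, state = run(position, state, inputs, ["process_feedback"], ["final_result"])
    if position == "final_result":
        break
    inputs = {"feedback": feedback_queue.pop(0)}

print(state["drafts"])  # ['draft 1', 'draft 2']
```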

You can then use the Burr UI to monitor your application as it runs!

We're going to persist our results to a SQLite database (although, as you'll see later on, this is customizable). To do this, we need to add a few lines to the ApplicationBuilder.

```python
from burr.core.persistence import SQLLitePersister

state_persister = SQLLitePersister(
    db_path="sqllite.db",
    table_name="email_assistant_table",
)

app = (
    ApplicationBuilder()
    # ... the actions, transitions, etc. we defined above
    .initialize(
        initializer=state_persister,
        resume_at_next_action=True,
        default_state={"chat_history": []},
        default_entrypoint="process_input",
    )
    .with_identifiers(app_id=app_id)
    .build()
)
```

This ensures that every email draft we create will be saved and can be loaded at every step. When you want to resume a prior draft of an email, all you have to do is rerun the code and it will start where it left off.
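Burr's persister handles this for you. To show roughly what "saved at every step and resumed later" means, here is a standalone `sqlite3` sketch that stores one JSON snapshot per step and loads the latest one for an `app_id`. The table layout and helper names are assumptions for illustration, not the schema Burr uses; resuming would then continue from the action after the stored `position`, which is the behavior `resume_at_next_action=True` asks for.

```python
import json
import sqlite3
from typing import Any, Dict, Optional

TABLE = "email_assistant_table"  # same name as above; the layout is our own assumption


def init_db(conn: sqlite3.Connection) -> None:
    conn.execute(
        f"CREATE TABLE IF NOT EXISTS {TABLE} ("
        "app_id TEXT NOT NULL, sequence_id INTEGER NOT NULL, "
        "position TEXT NOT NULL, state TEXT NOT NULL, "
        "PRIMARY KEY (app_id, sequence_id))"
    )


def save(conn: sqlite3.Connection, app_id: str, sequence_id: int,
         position: str, state: Dict[str, Any]) -> None:
    """Store one snapshot per step; state is serialized as JSON."""
    conn.execute(
        f"INSERT INTO {TABLE} VALUES (?, ?, ?, ?)",
        (app_id, sequence_id, position, json.dumps(state)),
    )
    conn.commit()


def load_latest(conn: sqlite3.Connection, app_id: str) -> Optional[Dict[str, Any]]:
    """Return the most recent snapshot for app_id, or None for a brand-new draft."""
    row = conn.execute(
        f"SELECT position, state FROM {TABLE} WHERE app_id = ? "
        "ORDER BY sequence_id DESC LIMIT 1",
        (app_id,),
    ).fetchone()
    if row is None:
        return None
    return {"position": row[0], "state": json.loads(row[1])}


conn = sqlite3.connect(":memory:")
init_db(conn)
save(conn, "draft-123", 0, "process_input", {"drafts": []})
save(conn, "draft-123", 1, "formulate_draft", {"drafts": ["draft 1"]})
print(load_latest(conn, "draft-123"))
# {'position': 'formulate_draft', 'state': {'drafts': ['draft 1']}}
print(load_latest(conn, "draft-999"))  # None
```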

To expose this in a web server, we'll use FastAPI to create endpoints and Pydantic to represent types. Before we get into the details, note that Burr naturally provides an application_id (either generated or specified) for every instance of an application. In this case, the application_id corresponds to a particular email draft. This allows us to uniquely access it, query it from the db, etc. Burr also allows for a partition key (e.g., user_id) so you can add additional indexing in your database. We center the API around inputs/outputs.

We will construct the following endpoints:

The GET endpoint allows us to get the current state of the application, which enables the user to reload if they quit the browser or get distracted. Each of these endpoints will return the full state of the application, which can be rendered on the frontend. Furthermore, it will indicate the next API endpoint to call, which allows the UI to render the appropriate form and submit to the right endpoint.

Using FastAPI + Pydantic, this becomes very simple to implement. First, let’s add a utility to get the application object. This will use a cached version or instantiate it:

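```python
import functools

from burr.core import Application

# `email_assistant_application` refers to the example's application module (import not shown)

@functools.lru_cache(maxsize=128)
def get_application(app_id: str) -> Application:
    # Build (or load from persistence) the application for this app_id;
    # later calls with the same app_id reuse the cached instance
    app = email_assistant_application.application(app_id=app_id)
    return app
```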

All this does is call the application function in our email assistant module, which recreates the application. We have not included that function here, but it builds the application with the same API shown above.

Let’s then define a Pydantic model to represent the state, and the app object in FastAPI:

```python
from typing import List, Literal, Optional

import pydantic
from burr.core import Application


class EmailAssistantState(pydantic.BaseModel):
    app_id: str
    email_to_respond: Optional[str]
    response_instructions: Optional[str]
    questions: Optional[List[str]]
    answers: Optional[List[str]]
    drafts: List[str]
    feedback_history: List[str]
    final_draft: Optional[str]
    # This stores the next step, which tells the frontend which endpoint to call
    next_step: Literal["process_input", "clarify_instructions", "process_feedback", None]

    @staticmethod
    def from_app(app: Application) -> "EmailAssistantState":
        # Implementation left out: read app.state and translate it into this
        # pydantic model; `app.get_next_action()` gives the next step to
        # return to the user.
        ...
```

Note that every endpoint will return this same pydantic model!

Given that each endpoint returns the same thing (a representation of the current state as well as the next step to execute), they all look the same. We can first implement a generic run_through function, which will progress our state machine forward, and return the state.

```python
from typing import Any, Dict, Optional


def run_through(
    project_id: str,
    app_id: Optional[str],
    inputs: Dict[str, Any],
) -> EmailAssistantState:
    email_assistant_app = get_application(app_id)  # matches get_application's signature above
    # Advance the state machine until it needs user input or is done
    email_assistant_app.run(
        halt_before=["clarify_instructions", "process_feedback"],
        halt_after=["final_result"],
        inputs=inputs,
    )
    return EmailAssistantState.from_app(email_assistant_app)
```

This represents a simple but powerful architecture. We can keep calling these endpoints until we reach a “terminal” state, at which point we can still ask for the state. If we decide to add more input steps, we only need to modify the state machine. We are not required to hold state in the app (it is all delegated to Burr's persistence), so we can easily load up from any given point, allowing the user to wait for seconds, minutes, hours, or even days before continuing.

As the frontend simply renders based on the current state and the next step, it will always be correct, and the user can always pick up where they left off. With Burr’s telemetry capabilities you can debug any state-related issues, ensuring a smooth user experience.

Now that we have a set of endpoints, the UI is simple. In fact, it mirrors the API almost exactly. We won’t dig into this too much, but the high-level is that you’ll want the following capabilities:

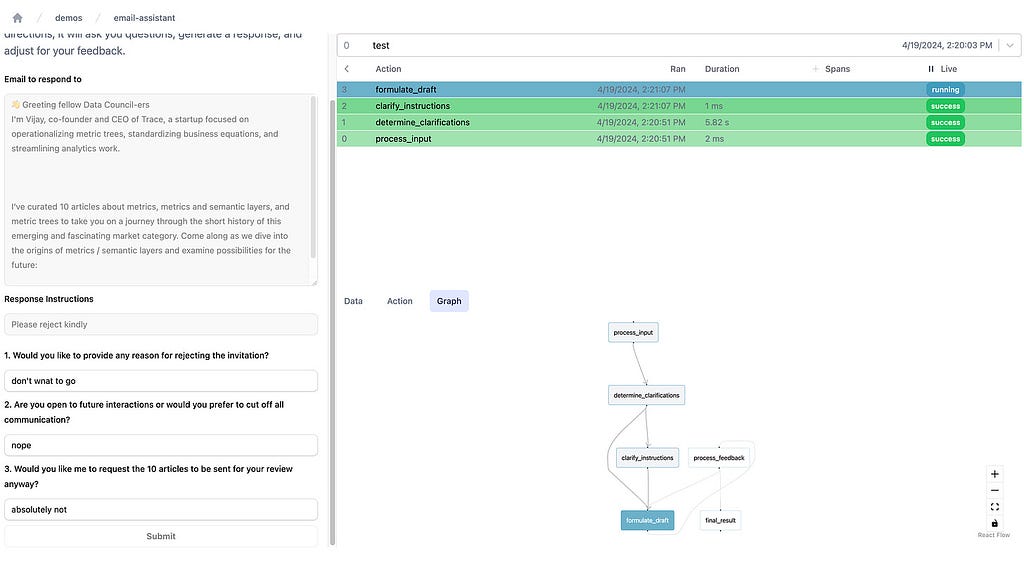

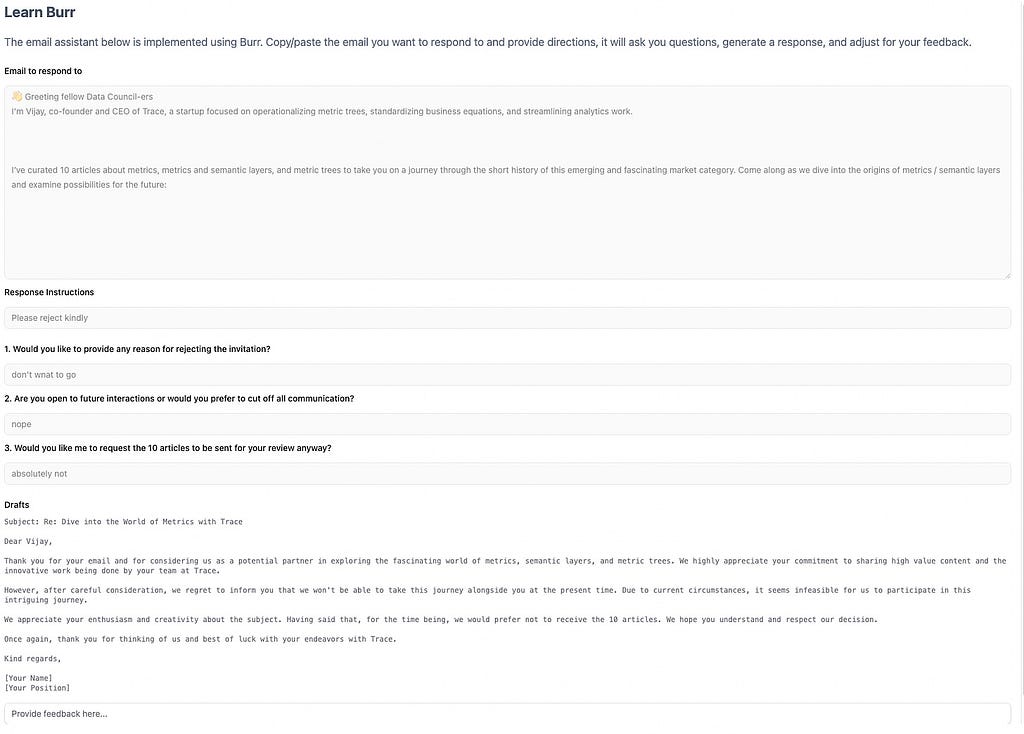

You can see the UI here. Here’s an example of it in action:

You can play around with it if you download Burr (`pip install "burr[start]" && burr`) and navigate to http://localhost:7241/demos/email-assistant.

Note that there are many tools that make this easier/simpler to prototype, including chainlit, streamlit, etc… The backend API we built is amenable to interacting with them as well.

While we used the simple SQLite persister (SQLLitePersister), you can use any of the others that come with Burr or implement your own to match your schema/db infrastructure. To do this, you implement the BaseStatePersister class and add it to the ApplicationBuilder in place of the SQLite persister we used above.

Using the Burr UI for monitoring is not the only option. You can integrate your own by leveraging lifecycle hooks, enabling you to log data in a custom format to, say, Datadog, LangSmith, or Langfuse.

Furthermore, you can leverage additional monitoring capabilities to track spans/traces, either logging them directly to the Burr UI or to any of the above providers. See the list of available hooks here.

While we kept the APIs we exposed synchronous for simplicity, Burr supports asynchronous execution as well. Burr also supports streaming responses for those who want to provide a more interactive UI/reduce time to first token.

As with any LLM application, the entire prompt matters. If you provide the right guidance, the results will be better than if you don't; much as when instructing a human, more guidance generally helps. That said, if you find yourself always correcting some aspect, changing the base prompt is likely the best course of action. For example, a one-shot or few-shot approach might help show the LLM what you'd like to see in your specific context.
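A few-shot prompt can be assembled with the same role/content message structure used in `determine_clarifications`. This is a minimal sketch: the helper name, the example exchange, and the user-message wording are hypothetical, and you would pass the resulting list as `messages` to your chat completion call.

```python
from typing import Dict, List, Tuple


def build_few_shot_messages(
    system_prompt: str,
    examples: List[Tuple[str, str]],
    email: str,
    instructions: str,
) -> List[Dict[str, str]]:
    """Build chat messages with worked examples placed before the real request."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_request, example_reply in examples:
        # Each example is a user turn followed by the reply we would like to see
        messages.append({"role": "user", "content": example_request})
        messages.append({"role": "assistant", "content": example_reply})
    messages.append({
        "role": "user",
        "content": f"The email you are to respond to is: {email}. Instructions: {instructions}",
    })
    return messages


messages = build_few_shot_messages(
    "You are a chatbot that drafts email replies on behalf of a user.",
    [("Email: Can we meet Friday? Instructions: decline politely.",
      "Thanks for reaching out! Unfortunately Friday doesn't work for me.")],
    email="Are you joining the offsite?",
    instructions="say yes, keep it short",
)
print([m["role"] for m in messages])  # ['system', 'user', 'assistant', 'user']
```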

In this post we discussed how to address some of the challenges around building human-in-the-loop agentic workflows. We ran through an example of making an email assistant using Burr to build and run it as a state machine, and FastAPI to run Burr in a web service. We finally showed how you can extend the tooling we used here for a variety of common production needs — e.g. monitoring & storage.

Building an Email Assistant Application with Burr was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Building an Email Assistant Application with Burr

Go Here to Read this Fast! Building an Email Assistant Application with Burr

Originally appeared here:

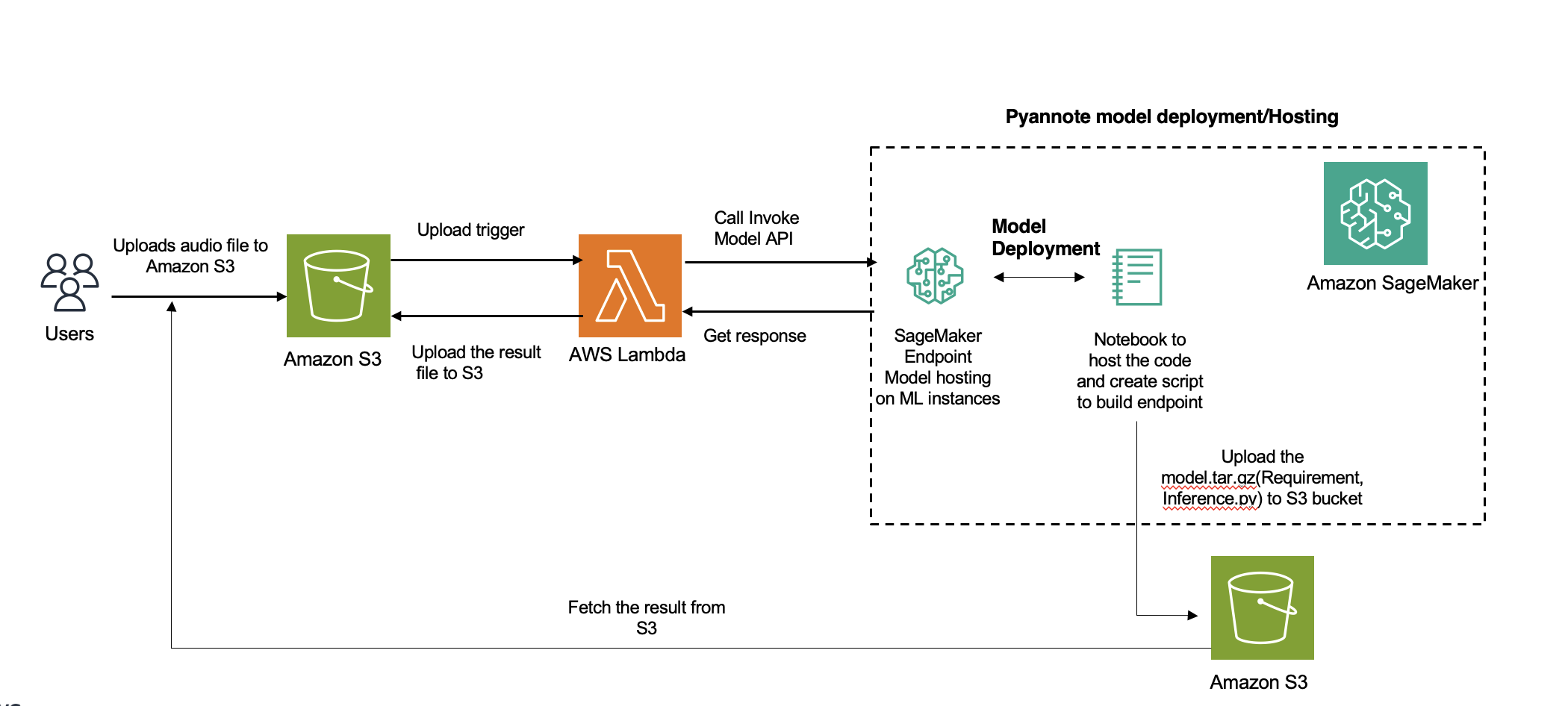

Deploy a Hugging Face (PyAnnote) speaker diarization model on Amazon SageMaker as an asynchronous endpoint

Originally appeared here:

Evaluate the text summarization capabilities of LLMs for enhanced decision-making on AWS